In this entry, we will look at what a deployment looks like from the infrastructure as code (IaC) perspective with Terraform, and from the configuration management side with PowerShell DSC (Desired State Configuration). Both play important roles in automating ArcGIS Enterprise deployments, so let's jump in.

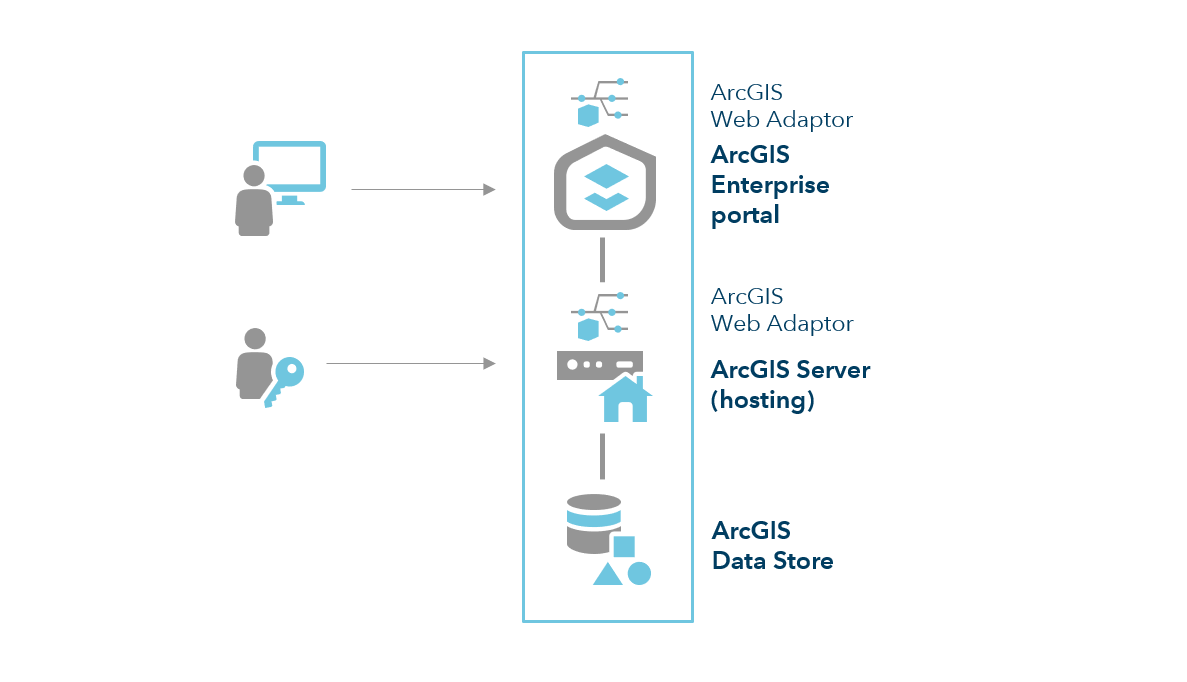

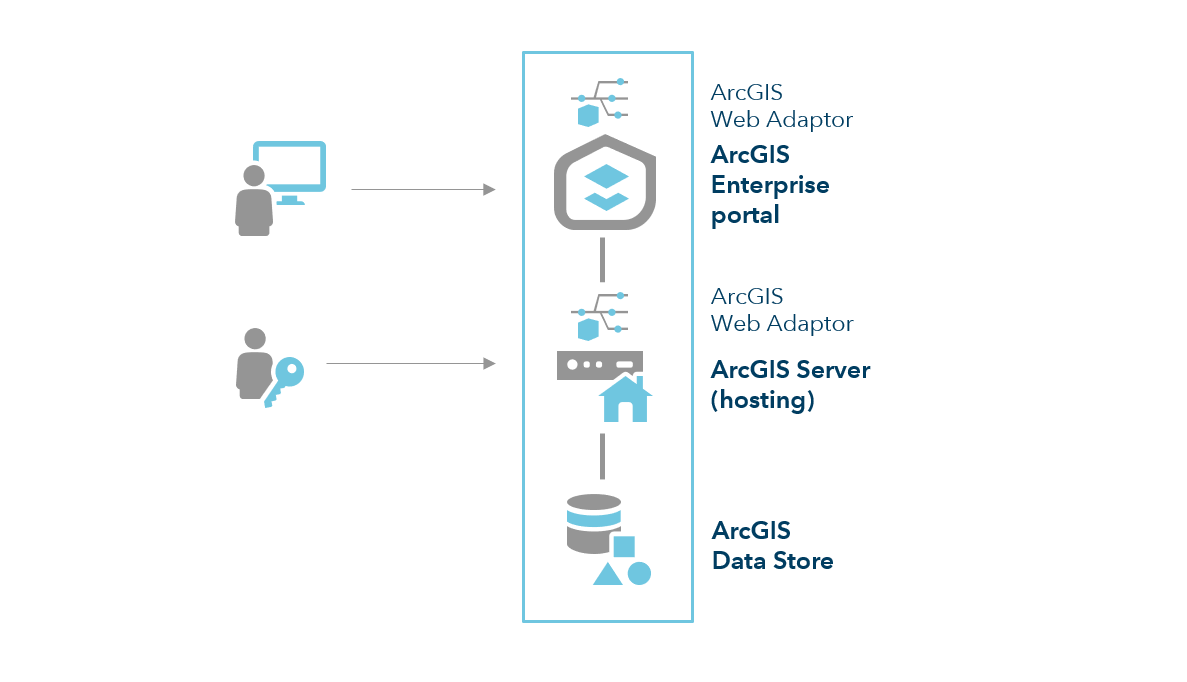

This deployment follows the single machine model described in the ArcGIS Enterprise documentation. It consists of the following:

- Portal for ArcGIS 10.7.1
- ArcGIS Server 10.7.1 (set as the hosting server)
- ArcGIS Data Store 10.7.1 (relational data store)
- Two (2) Web Adaptors within IIS

Additional configurations:

- WebGISDR is scheduled through Windows Task Scheduler to run weekly full backups, which are stored in Azure Blob Storage.
- The virtual machine is configured for nightly backups to an Azure Recovery Services vault.
- RDP (port 3389) access is restricted by a Network Security Group to the public IP address of the machine Terraform is run from.
- Internet access (ports 80 and 443) to ArcGIS Enterprise is allowed through the Network Security Group.
- Azure Anti-malware is configured for the virtual machine.

The complete code and configuration files are attached below. However, you will need to provide your own ArcGIS Enterprise licenses.

Note: This is the second post in a series on engineering with ArcGIS.

Infrastructure Deployment

If you are not already familiar with Terraform and how it can be used to efficiently manage the lifecycle of your infrastructure, I recommend reading through the first entry in this series, which can be found here. The Terraform code from that first entry is the basis for the work done in this post.

As discussed in the first entry, Terraform is a tool designed to help manage the lifecycle of your infrastructure. This time, however, instead of rehashing the benefits of Terraform, we will jump straight into the code and review what it does. The template from the first entry is reused here, with additional code that performs the specific actions needed to configure ArcGIS Enterprise. Let's take a look at those additions.

The first set of additions creates two private blob containers: one for deployment resources ("artifacts") and an empty one ("webgisdr") that will be used when configuring WebGISDR backups. They also upload the license files, the PowerShell DSC archive, and the Web Adaptor installer to the artifacts container.

```hcl
# Private container for deployment artifacts (licenses, DSC archive, Web Adaptor installer)
resource "azurerm_storage_container" "artifacts" {
  name                  = "${var.deployInfo["projectName"]}${var.deployInfo["environment"]}-deployment"
  resource_group_name   = "${azurerm_resource_group.rg.name}"
  storage_account_name  = "${azurerm_storage_account.storage.name}"
  container_access_type = "private"
}

# Empty private container used later for WebGISDR backups
resource "azurerm_storage_container" "webgisdr" {
  name                  = "webgisdr"
  resource_group_name   = "${azurerm_resource_group.rg.name}"
  storage_account_name  = "${azurerm_storage_account.storage.name}"
  container_access_type = "private"
}

# ArcGIS Server license, uploaded from the template directory
resource "azurerm_storage_blob" "serverLicense" {
  name                   = "${var.deployInfo["serverLicenseFileName"]}"
  resource_group_name    = "${azurerm_resource_group.rg.name}"
  storage_account_name   = "${azurerm_storage_account.storage.name}"
  storage_container_name = "${azurerm_storage_container.artifacts.name}"
  type                   = "block"
  source                 = "./${var.deployInfo["serverLicenseFileName"]}"
}

# Portal for ArcGIS license, uploaded from the template directory
resource "azurerm_storage_blob" "portalLicense" {
  name                   = "${var.deployInfo["portalLicenseFileName"]}"
  resource_group_name    = "${azurerm_resource_group.rg.name}"
  storage_account_name   = "${azurerm_storage_account.storage.name}"
  storage_container_name = "${azurerm_storage_container.artifacts.name}"
  type                   = "block"
  source                 = "./${var.deployInfo["portalLicenseFileName"]}"
}

# PowerShell DSC archive (configuration script plus the ArcGIS module)
resource "azurerm_storage_blob" "dscResources" {
  name                   = "dsc.zip"
  resource_group_name    = "${azurerm_resource_group.rg.name}"
  storage_account_name   = "${azurerm_storage_account.storage.name}"
  storage_container_name = "${azurerm_storage_container.artifacts.name}"
  type                   = "block"
  source                 = "./dsc.zip"
}

# Web Adaptor (IIS) installer, named after the marketplace image version
resource "azurerm_storage_blob" "webAdaptorInstaller" {
  name                   = "${var.deployInfo["marketplaceImageVersion"]}-iiswebadaptor.exe"
  resource_group_name    = "${azurerm_resource_group.rg.name}"
  storage_account_name   = "${azurerm_storage_account.storage.name}"
  storage_container_name = "${azurerm_storage_container.artifacts.name}"
  type                   = "block"
  source                 = "./${var.deployInfo["marketplaceImageVersion"]}-iiswebadaptor.exe"
}
```
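The blocks above read several values from the `deployInfo` map. As a rough sketch of the keys this post relies on, the map's values (for example in a `terraform.tfvars` file) might include entries like the following, alongside the keys already required by the first entry's template. Every value shown is a hypothetical placeholder: the license file names must match the files you place next to the template, and real passwords should not be committed to source control.

```hcl
# terraform.tfvars (hypothetical example; all values are placeholders)
deployInfo = {
  # ...keys required by the first entry's template also belong here...

  # Combined into the artifacts container name, e.g. "gisdev-deployment"
  # (container names must be lowercase)
  projectName = "gis"
  environment = "dev"

  # Must match the license files sitting next to the template
  serverLicenseFileName = "server-license.prvc"
  portalLicenseFileName = "portal-license.json"

  # Also names the installer: "<version>-iiswebadaptor.exe"
  marketplaceImageVersion = "10.7.1"

  # Passed to DSC through protected_settings
  serviceAccountUsername = "arcgis"
  serviceAccountPassword = "<service-account-password>"
  arcgisAdminUsername    = "siteadmin"
  arcgisAdminPassword    = "<arcgis-admin-password>"
}
```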

The next addition generates a short-lived (five-hour) SAS token for the storage account. The token is then used during the configuration management step to download the needed files from storage securely. We could simplify the deployment by marking the containers as public and not requiring a token, but that is not recommended.

```hcl
data "azurerm_storage_account_sas" "token" {
  connection_string = "${azurerm_storage_account.storage.primary_connection_string}"
  https_only        = true

  # Valid from now until five hours from now
  start  = "${timestamp()}"
  expiry = "${timeadd(timestamp(), "5h")}"

  # Object-level (blob) access only
  resource_types {
    service   = false
    container = false
    object    = true
  }

  # Blob service only
  services {
    blob  = true
    queue = false
    table = false
    file  = false
  }

  permissions {
    read    = true
    write   = true
    delete  = true
    list    = true
    add     = true
    create  = true
    update  = true
    process = true
  }
}
```
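The token above grants every permission, but in this template it is only appended to blob URLs that the DSC extension downloads; the WebGISDR backups are handed the storage account key instead. Assuming nothing else uses the token, a narrower sketch like the one below could replace the `token` data source, keeping the same name so the existing references still resolve. The azurerm provider expects each permission to be set, so the unused ones are `false` rather than omitted.

```hcl
# Hypothetical least-privilege variant of the token: read-only, blob objects only
data "azurerm_storage_account_sas" "token" {
  connection_string = "${azurerm_storage_account.storage.primary_connection_string}"
  https_only        = true
  start             = "${timestamp()}"
  expiry            = "${timeadd(timestamp(), "5h")}"

  resource_types {
    service   = false
    container = false
    object    = true
  }

  services {
    blob  = true
    queue = false
    table = false
    file  = false
  }

  # Downloading a blob only requires read
  permissions {
    read    = true
    write   = false
    delete  = false
    list    = false
    add     = false
    create  = false
    update  = false
    process = false
  }
}
```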

The final change adds an extension to the virtual machine that handles the configuration management task using PowerShell DSC. Instead of reviewing it in depth here, just know that the values in the settings and protected_settings JSON are passed to the `enterprise` configuration in enterprise.ps1 as parameters, for use as needed by the configuration. Values in protected_settings, here the service account and ArcGIS administrator credentials, are encrypted by Azure rather than stored as plain settings.

```hcl
resource "azurerm_virtual_machine_extension" "arcgisEnterprise-dsc" {
  # One DSC extension per ArcGIS Enterprise virtual machine
  count                      = "${var.arcgisEnterpriseSpecs["count"]}"
  name                       = "dsc"
  location                   = "${azurerm_resource_group.rg.location}"
  resource_group_name        = "${azurerm_resource_group.rg.name}"
  virtual_machine_name       = "${element(azurerm_virtual_machine.arcgisEnterprise.*.name, count.index)}"
  publisher                  = "Microsoft.Powershell"
  type                       = "DSC"
  type_handler_version       = "2.9"
  auto_upgrade_minor_version = true

  # Where to download the DSC archive, which script and configuration to run,
  # and the arguments passed to that configuration
  settings = <<SETTINGS
{
  "configuration": {
    "url": "${azurerm_storage_blob.dscResources.url}${data.azurerm_storage_account_sas.token.sas}",
    "function": "enterprise",
    "script": "enterprise.ps1"
  },
  "configurationArguments": {
    "webAdaptorUrl": "${azurerm_storage_blob.webAdaptorInstaller.url}${data.azurerm_storage_account_sas.token.sas}",
    "serverLicenseUrl": "${azurerm_storage_blob.serverLicense.url}${data.azurerm_storage_account_sas.token.sas}",
    "portalLicenseUrl": "${azurerm_storage_blob.portalLicense.url}${data.azurerm_storage_account_sas.token.sas}",
    "externalDNS": "${azurerm_public_ip.arcgisEnterprise.fqdn}",
    "arcgisVersion": "${var.deployInfo["marketplaceImageVersion"]}",
    "BlobStorageAccountName": "${azurerm_storage_account.storage.name}",
    "BlobContainerName": "${azurerm_storage_container.webgisdr.name}",
    "BlobStorageKey": "${azurerm_storage_account.storage.primary_access_key}"
  }
}
SETTINGS

  # Credentials are passed as protected (encrypted) configuration arguments
  protected_settings = <<PROTECTED_SETTINGS
{
  "configurationArguments": {
    "serviceAccountCredential": {
      "username": "${var.deployInfo["serviceAccountUsername"]}",
      "password": "${var.deployInfo["serviceAccountPassword"]}"
    },
    "arcgisAdminCredential": {
      "username": "${var.deployInfo["arcgisAdminUsername"]}",
      "password": "${var.deployInfo["arcgisAdminPassword"]}"
    }
  }
}
PROTECTED_SETTINGS
}
```

Configuration Management

As touched on above, we are using PowerShell DSC (Desired State Configuration) to configure ArcGIS Enterprise and to handle a few other tasks on the instance. To simplify things, I have included v2.1 of the ArcGIS module in the archive, but the public repo can be found here. The ArcGIS module provides a controlled way to interact with ArcGIS Enterprise through various "resources", each of which performs a specific task. One of the major benefits of PowerShell DSC is that it is idempotent: we can run our configuration repeatedly, and nothing will be modified if the system already matches our code. This lets administrators push changes and updates without disturbing resources that are already in the desired state, and detect configuration drift over time.

To highlight one of these resources, let's take a quick look at ArcGIS_Portal, which configures a new portal site without having to step through the typical browser-based workflow. In this deployment, the ArcGIS_Portal resource looks exactly like the code below. The resource defines the parameters that must be provided to configure the portal site, and it will error out if any required parameter is missing.

```powershell
ArcGIS_Portal arcgisPortal {
    PortalEndPoint             = (Get-FQDN $env:COMPUTERNAME)
    PortalContext              = 'portal'
    ExternalDNSName            = $externalDNS
    Ensure                     = 'Present'
    PortalAdministrator        = $arcgisAdminCredential
    AdminEMail                 = 'example@esri.com'
    AdminSecurityQuestionIndex = '12'
    AdminSecurityAnswer        = 'none'
    ContentDirectoryLocation   = $portalContentLocation
    # Portal license file in the current working directory
    LicenseFilePath            = (Join-Path $(Get-Location).Path (Get-FileNameFromUrl $portalLicenseUrl))
    DependsOn                  = $Depends
}

# Resources declared after this point wait for the portal site to be configured
$Depends += '[ArcGIS_Portal]arcgisPortal'
```

Because of the scope of the configuration script, we will not do a deep dive here; that will come in a later article.

Putting It Together

With the Terraform template changes covered, along with a very high-level overview of PowerShell DSC and its purpose, we can deploy the environment using the same commands as in the first entry in the series. In the terminal of your choice, navigate into the extracted archive that contains your licenses, template, and DSC archive, and start by initializing Terraform with the following command:

```shell
terraform init
```

Next, run the following command to start the deployment. Keep in mind that we are not only deploying the infrastructure but also configuring ArcGIS Enterprise, so the time to completion will vary. When it completes, it will output the public-facing URL for your ArcGIS Enterprise portal.

```shell
terraform apply
```
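The output definition itself is not shown in the snippets in this post. As a minimal sketch, assuming the portal is reached through a Web Adaptor named `portal` on the public IP's DNS name (the same FQDN passed to DSC as `externalDNS`), an output could be declared like this; if the first entry's template already defines outputs, it would sit alongside them.

```hcl
# Hypothetical output; assumes the Portal Web Adaptor is named "portal"
output "portalUrl" {
  value = "https://${azurerm_public_ip.arcgisEnterprise.fqdn}/portal/home"
}
```

After the apply finishes, `terraform output portalUrl` prints the value again without redeploying anything.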

Summary

As you can see, removing the manual aspects of both software configuration and infrastructure deployment by moving toward IaC and configuration management mitigates many of the problems that come with manual deployments. There are many solutions for handling both aspects, and these are just two options. Explore what works for you and start moving toward a more DevOps-centric approach.

I hope you find this helpful. Do not hesitate to post your questions here: Engineering ArcGIS Series: Tools of an Engineer

Note: The contents presented above are examples and should be reviewed and modified as needed for each specific environment.