Python Blog - Page 8

Showing articles with label Py...Blog.

(198 Posts)

- MVP Emeritus | 12-12-2018 08:37 PM | 4 | 3 | 2,169
- MVP Emeritus | 12-12-2018 08:31 PM | 9 | 9 | 10.1K
- MVP Emeritus | 12-12-2018 08:16 PM | 2 | 0 | 1,627
- MVP Emeritus | 10-22-2018 09:12 AM | 0 | 3 | 1,205
- MVP Emeritus | 10-08-2018 12:08 PM | 0 | 2 | 2,010
- MVP Emeritus | 10-08-2018 03:14 AM | 0 | 0 | 2,090
- MVP Emeritus | 10-03-2018 10:48 PM | 2 | 3 | 1,925
- MVP Emeritus | 08-18-2018 05:47 PM | 0 | 0 | 1,948
- MVP Emeritus | 07-11-2018 06:08 AM | 0 | 0 | 1,816
- MVP Emeritus | 07-01-2018 04:10 PM | 13 | 1 | 15.4K

229 Subscribers

Labels

- Py...Blog (165)

Popular Articles

Turbo Charging Data Manipulation with Python Cursors and Dictionaries

RichardFairhurst

MVP Alum

47 Kudos

91 Comments

The ...py... links

DanPatterson_Retired

MVP Emeritus

18 Kudos

6 Comments

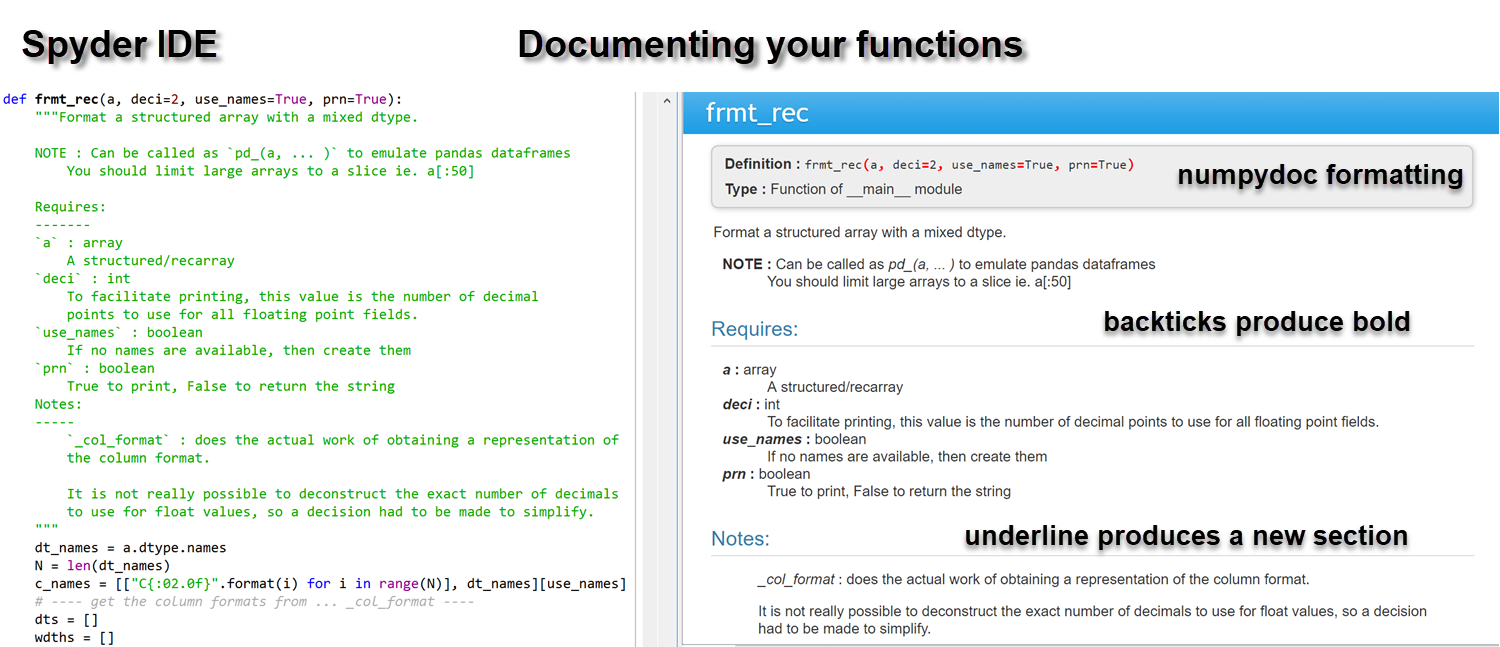

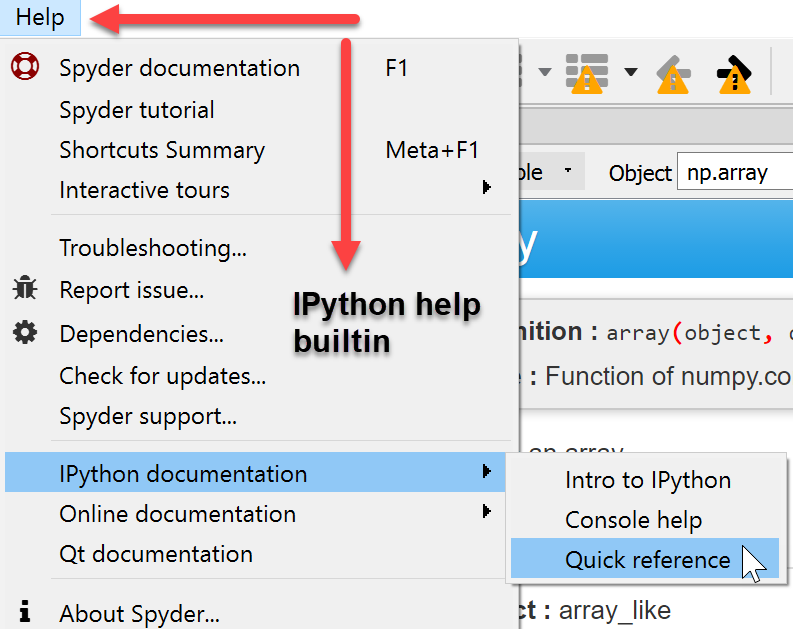

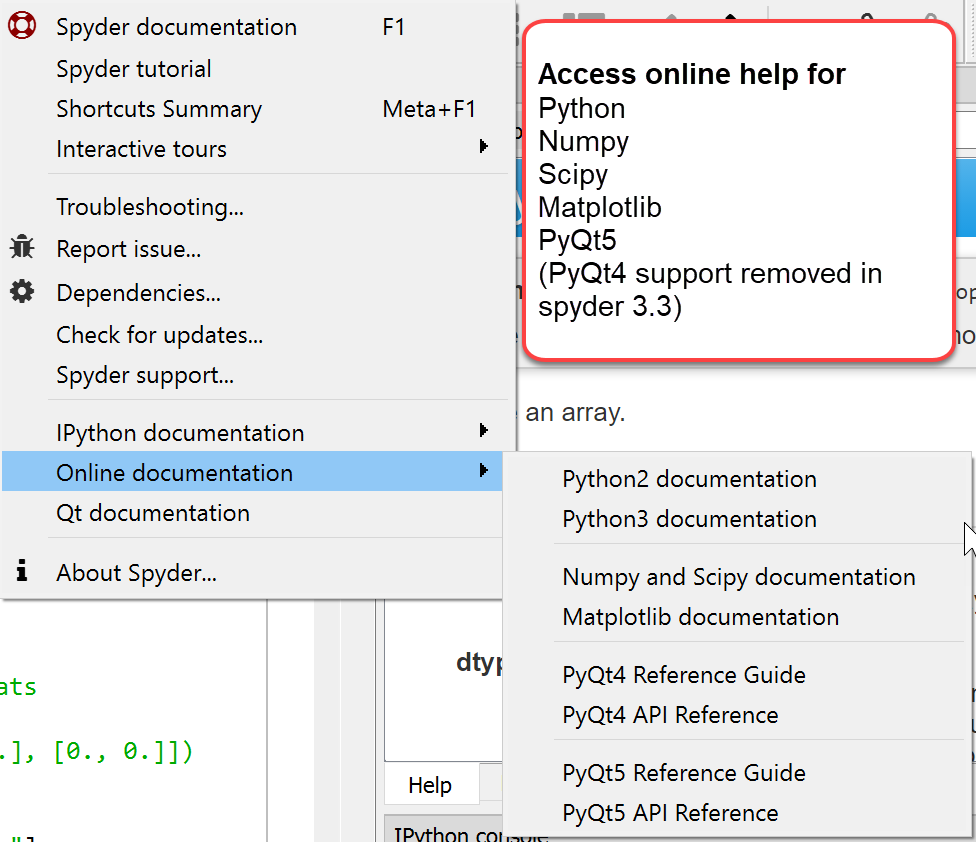

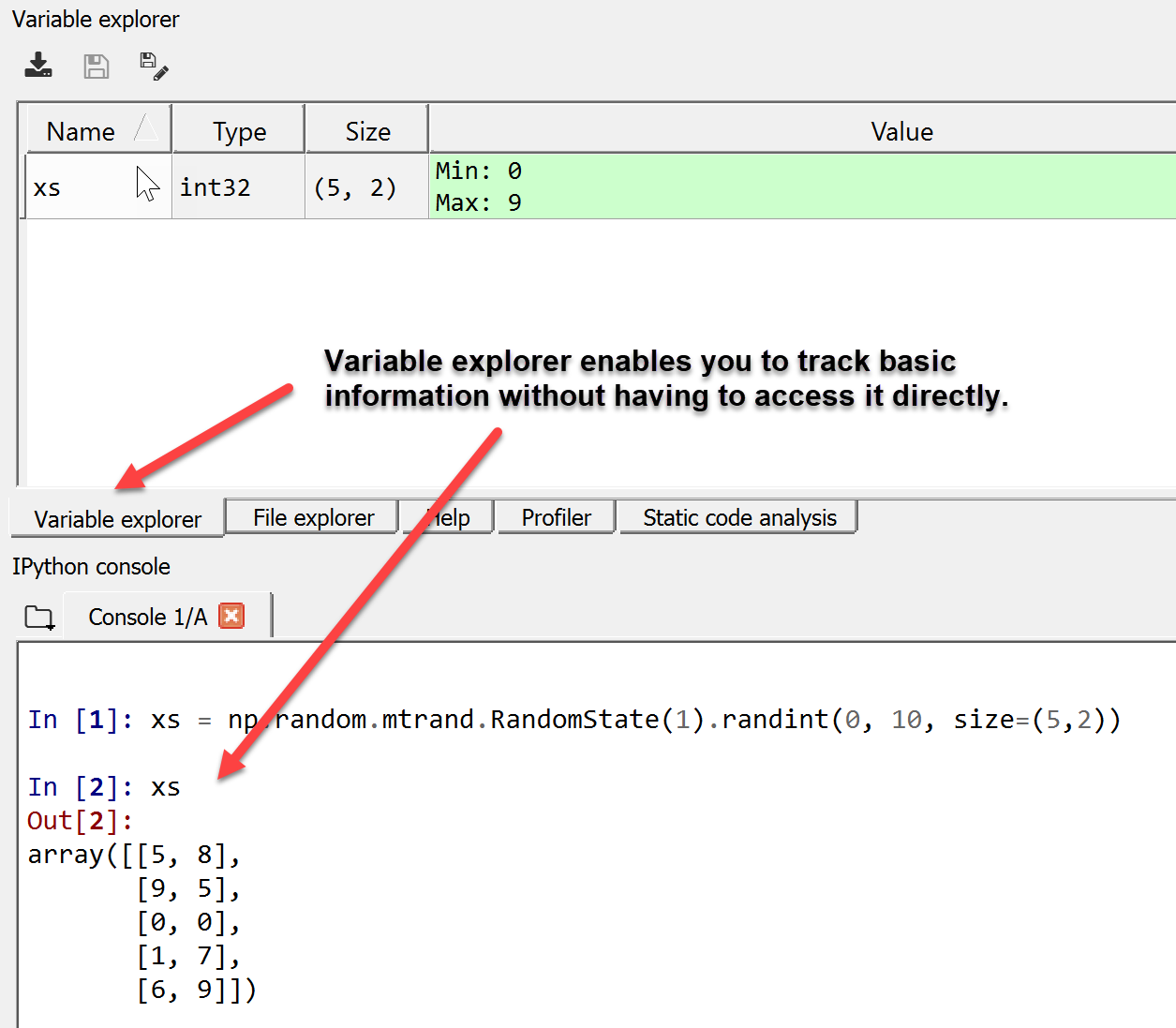

Code Formatting... the basics++

DanPatterson_Retired

MVP Emeritus

16 Kudos

0 Comments