Note: This blog post is the second in a series of three planned posts about egdbhealth. The first in the series described what the tool is, how to install it, and how to execute it. The third in the series will address using egdbhealth in a system design context.

Introduction

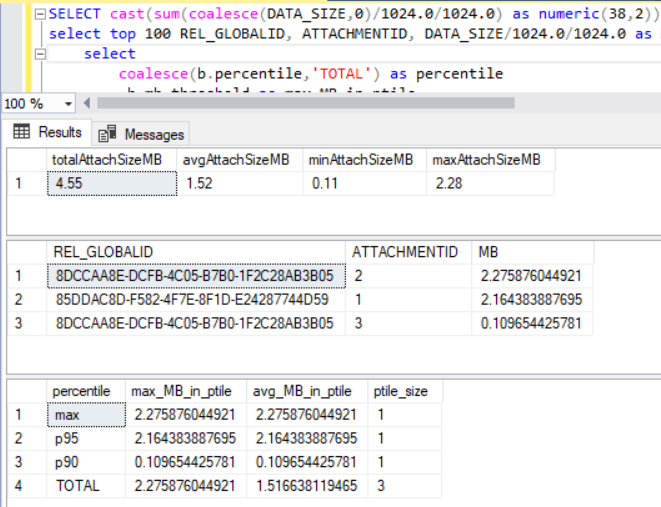

Egdbhealth is a tool for reporting on various characteristics of Enterprise Geodatabases (eGDBes). This article discusses how to use the outputs of egdbhealth to evaluate the health of an eGDB. All of the examples use a SQL Server-backed eGDB. However, similar principles apply to using the tool with Oracle- and PostgreSQL-backed eGDBes.

For information about installing and running the tool (i.e. creating the outputs), please refer to the first blog post in this series, "What is Egdbhealth?"

Viewing and Understanding Findings

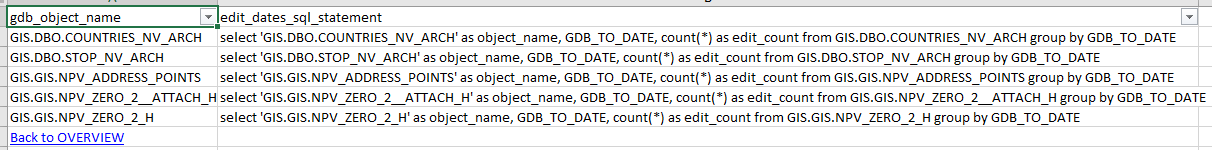

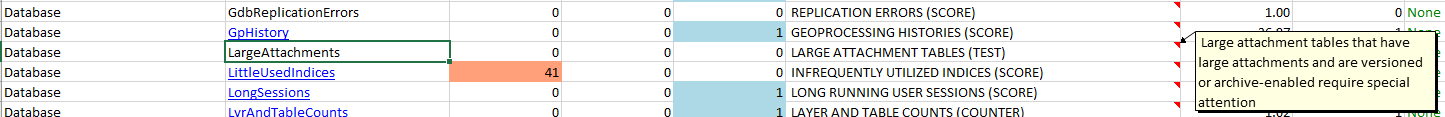

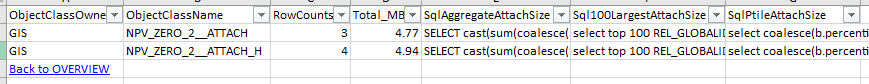

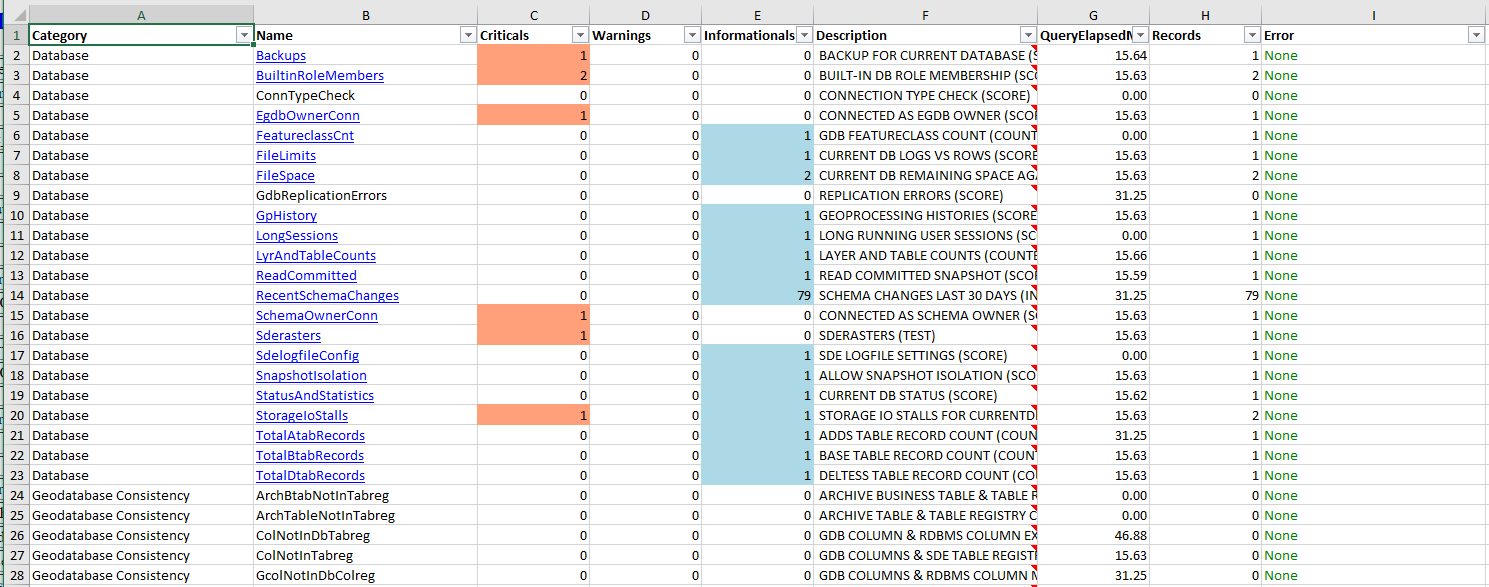

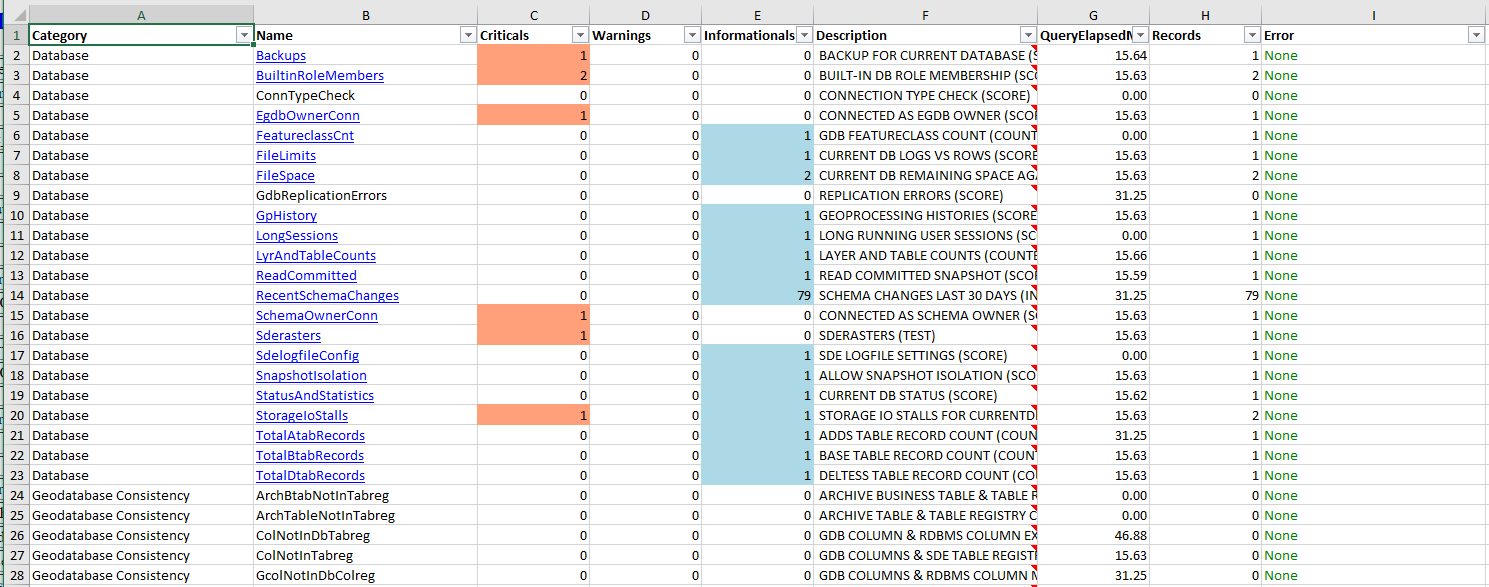

The Expert Excel file contains an “OVERVIEW_EXPERT” sheet that allows you to see the evaluations at a high-level and prioritize your review of the findings.

This article will not describe all of the evaluations and their various meanings. There are too many for that to be practical. Instead, the article describes the process and provides specific examples to illustrate the kinds of benefits that can be gained.

Criticals

The red-filled cells “Criticals” column should be viewed first. These findings are highlighted as top concerns in their respective Categories.

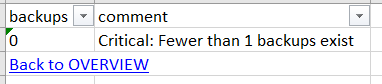

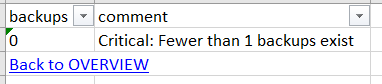

For example, in the screen capture above, “Backups” is flagged as a critical concern. Click on the hyperlinked cell to view the sheet with the detailed information.

In this case, the worksheet has a single record that says that the database has never been backed-up. This is a critical concern because if the system fails, data can be lost. There is also a hyperlink back to the “OVERVIEW_EXPERT” worksheet. This “Back to OVERVIEW” link appears in every detail worksheet to ease navigation.

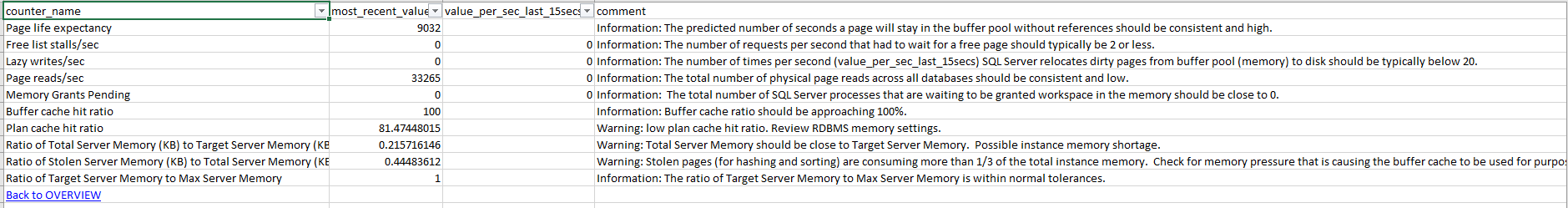

In the example below, “Memory Pressure”, the detail worksheet displays memory pressure warnings reported by SQL Server. When the RDBMS reports that there is memory pressure, it is an indication that there is, or soon will be, performance and/or scalability problems.

The Comments column (always found on the far right) describes the issue and the recommended course of action at a high level. Note that the amount of information reported is much greater than the “BackUp” example (more columns) and that the information is of a highly technical nature, requiring specialized knowledge to understand.

The Comments column is egdbhealth’s best effort to make the detail digestible and actionable with incomplete knowledge of the domain. In some cases, the Comments column will provide links to Internet resources that offer more information to support a deeper understanding.

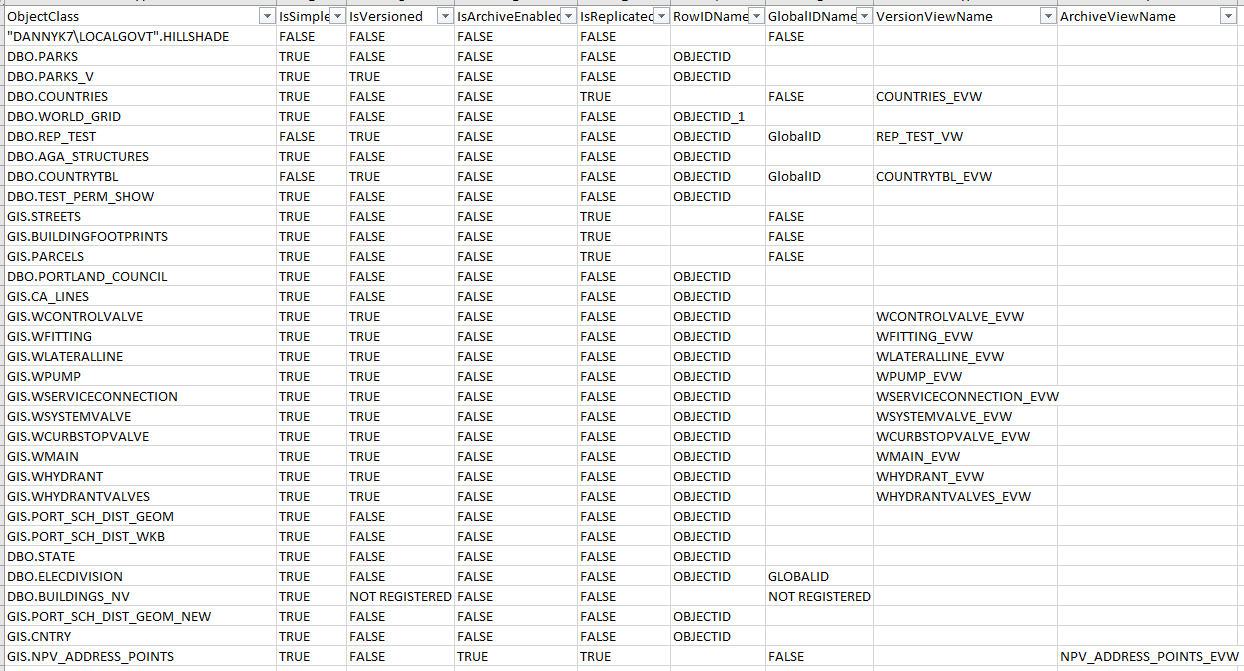

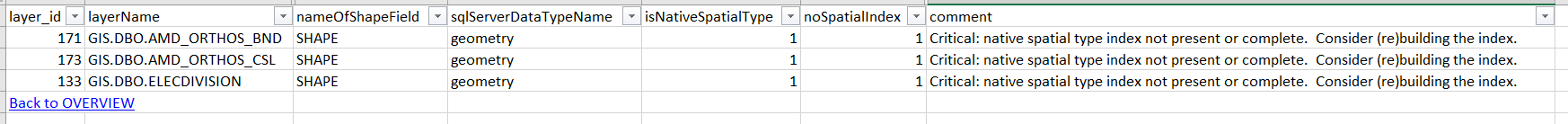

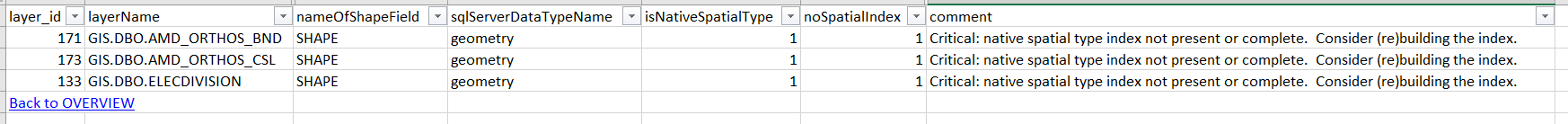

Here is another example that identifies tables that have geometry columns that do not have spatial indices:

The absence of spatial indices on geometry columns will degrade the performance of spatial queries. In this case, the “Comment” column recommends that spatial indices be created (or rebuilt) to heal the problem.

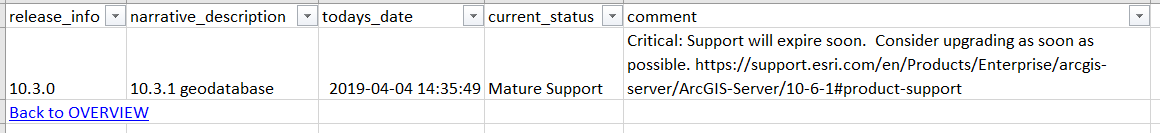

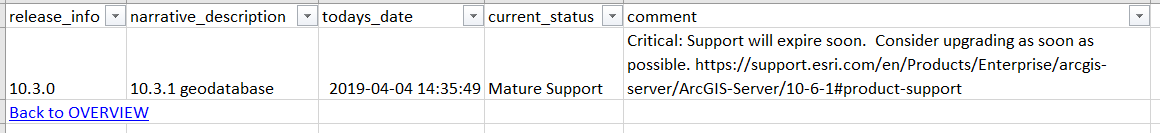

In this next example, the problem is that the release of the eGDB is quite old, indicating that it should be upgraded. Note that the “Comments” column provides a link to more information (online):

Warnings

“Warnings” follow the same pattern as “Criticals”. However, as the name implies, they are a lower priority for review. Note that a given evaluation may have both critical and warning findings.

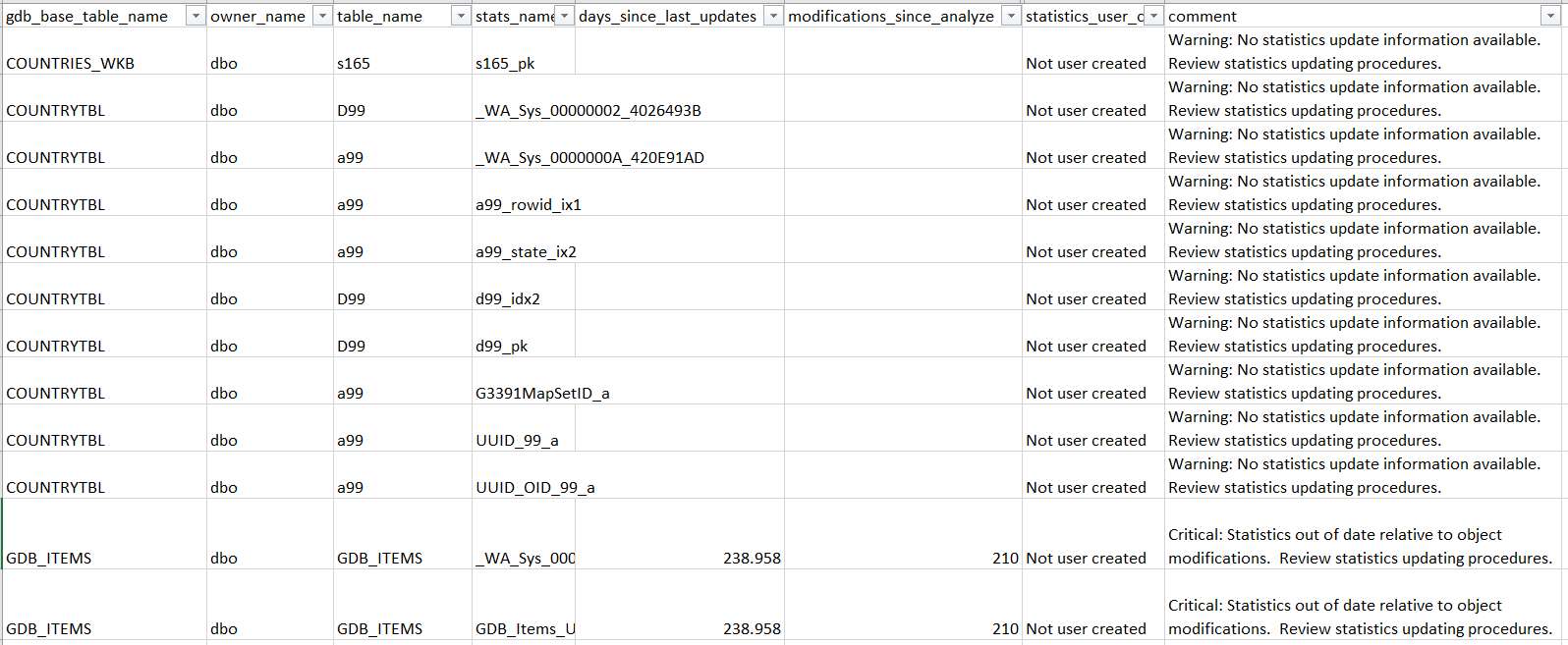

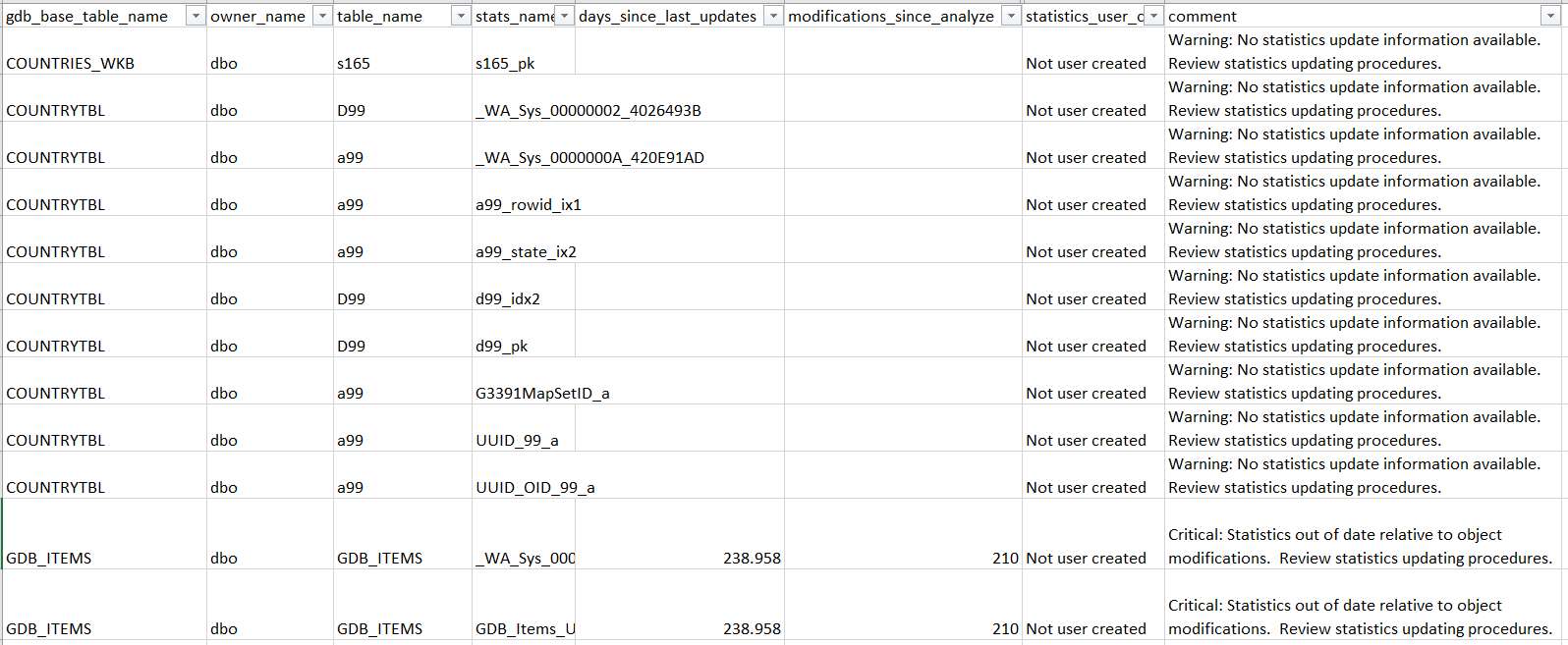

In the example below, egdbhealth is reporting that there are stale or missing statistics on a variety of objects in the eGDB:

Depending on the details of the specific statistics, the finding is flagged as “Warning” or “Critical” in the Comment column (always at the far right).

Here, in cases where no statistics information is available, the record is treated as a “Warning” because of the uncertainty. Statistics that have information indicating that they have not been updated recently, or there have been a lot of changes since the last update, are flagged as “Critical”.

The RDBMS’ cost-based optimizer uses these statistics to determine the best query execution plans. Thus, if the statistics are not current with respect to the state of the data, the optimizer may not make good choices and the performance of the system will be sub-optimal.

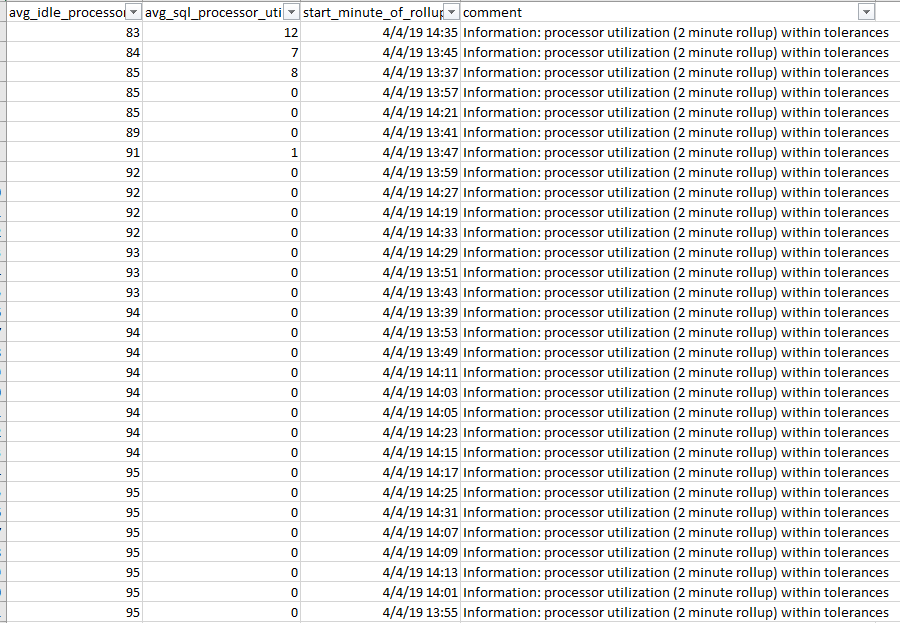

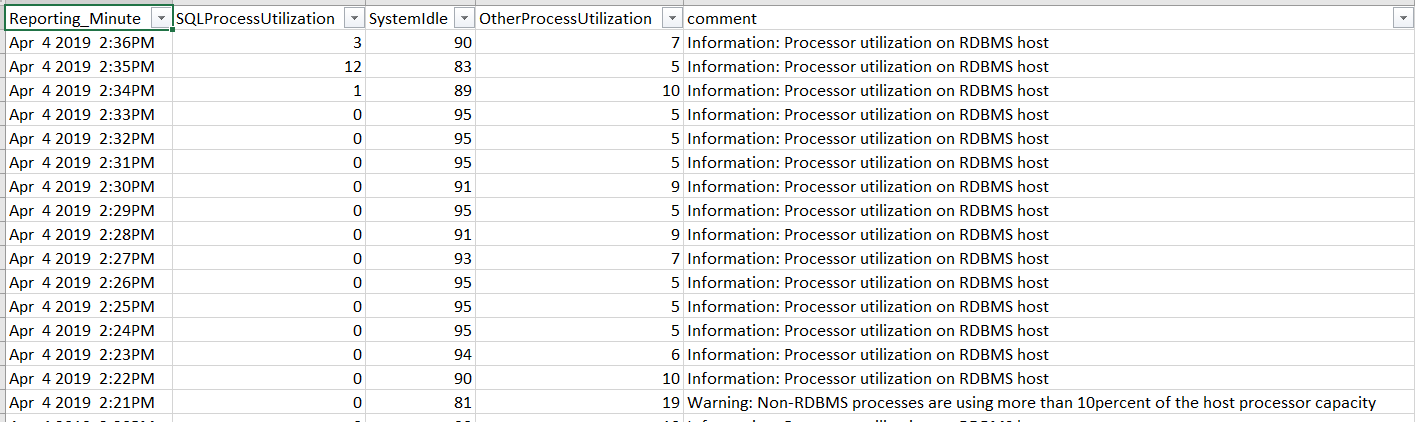

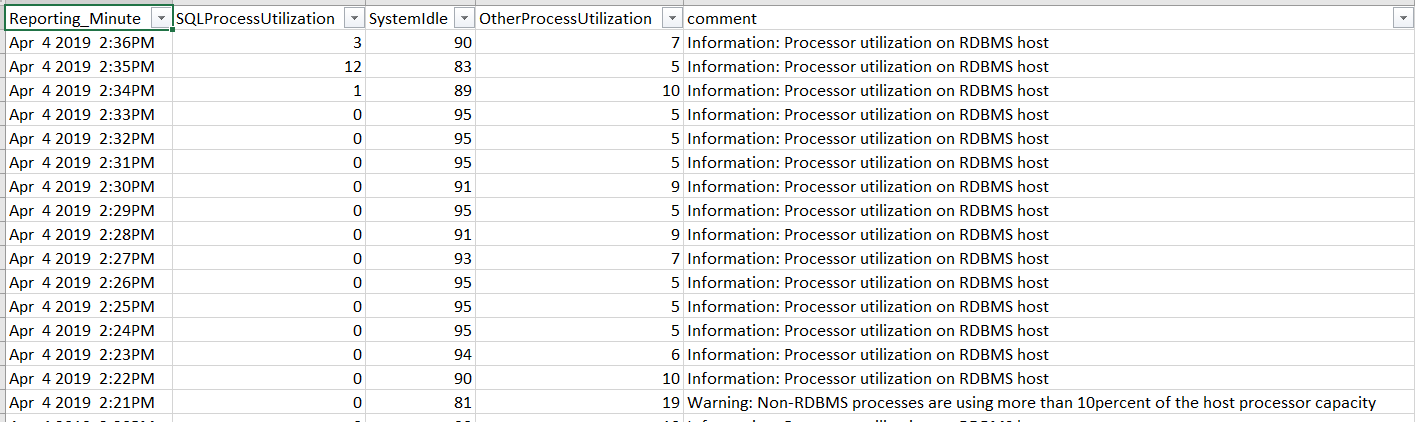

In the example below, most of the records are “Informationals”, simply reporting facts about the system. But, there are a few rows that have “Warnings”.

The Warnings are noting that, for a short period of time, the machine running SQL Server had more than 10% of its processor capacity used by a process other than SQL Server itself. This is not a condition that causes a performance or scalability problem. However, as most RDBMS systems are intended to run on dedicated machines, this may be an indication that there are other processes that do not belong or need special attention in the administration of the system.

Informationals

“Informationals” follow the same pattern as the other types of findings. However, the information is not evaluative in nature. As it is essentially descriptive, it could be placed in the Context Excel file. There are a few reasons why it is in the Expert file instead:

- The findings may not always be Informational … depending on the conditions encountered.

- The information is relatively volatile (i.e. changes over time). The Context Excel file is designed to provide information that is relatively static in nature.

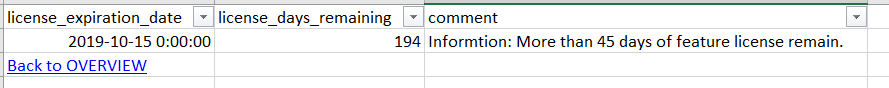

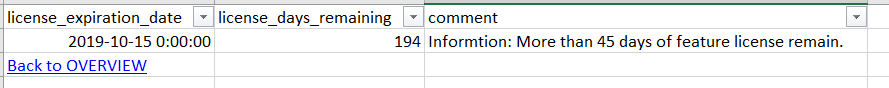

The example below illustrates this first case:

The licensing of this eGDB will not be a concern for many months. But, in about six months, if the license has not been updated, this message will no longer be informational.

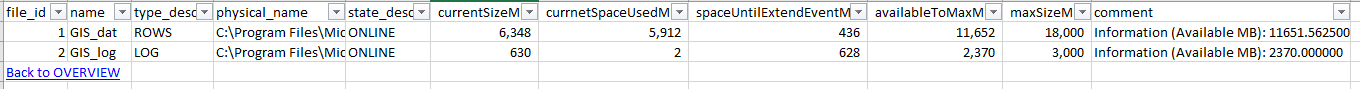

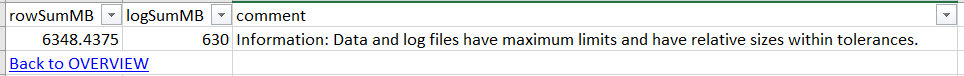

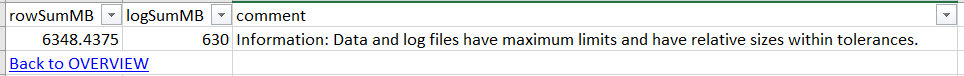

Similarly, the finding below about the underlying database file sizes could change at any time:

Thus, these descriptive pieces of information are reported in the Expert Excel, even though they are not currently reporting an evaluative finding that is negative.

Taking Action to Improve Health

Just as it is impractical to describe all of the individual evaluations in this document, it is impractical to provide action instructions for each one. Instead, this article discusses the process of understanding and acting on the evaluative information, along with specific examples.

The process involves the following steps:

- Understand the evaluation

- Validate the evaluation

- Try to resolve the evaluation

- Validate the resolution

Understand the Evaluation

Some evaluations are easier to understand than others. In those fortunate cases where the “comments” column adequately communicates the concern, this step happens automatically. In othere cases, some research may be appropriate.

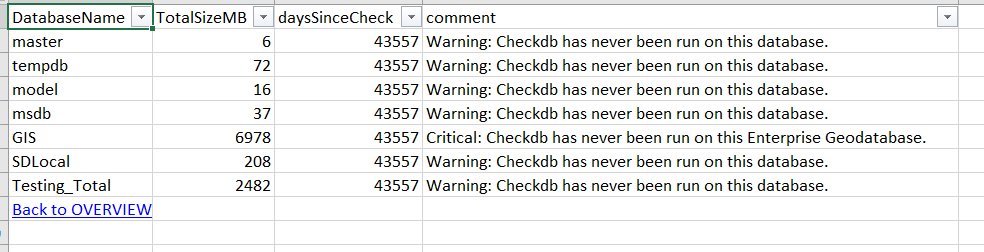

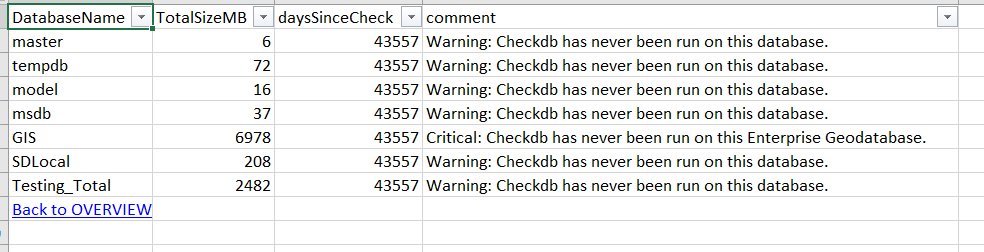

For example, the findings below report that Checkdb has never been run on the databases in this SQL Server instance (it flags the eGDB as critical, whereas the other databases are warnings):

If you are not already familiar with Checkdb, an Internet search for “SQL Server Checkdb” will return results to help you understand. In many cases, a modest research effort such as this will be all that is necessary to understand an evaluation which is in a topic that is unfamiliar to you.

In this case, an Internet search would likely surface the following links, offering more information and suggested actions: https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-checkdb-transact-sql?view=..., https://www.mssqltips.com/sqlservertip/4381/sql-server-dbcc-checkdb-overview/, and https://www.brentozar.com/blitz/dbcc-checkdb-not-run-recently/. In short, Checkdb runs a variety of internal checks on the database to identify possible corruption and other issues. So, it is good to run it once in a while to avoid such problems.

Validate the Evaluations

It is useful to validate evaluations before taking action because, for a variety of reasons, the information returned may have imperfections or require some judgement.

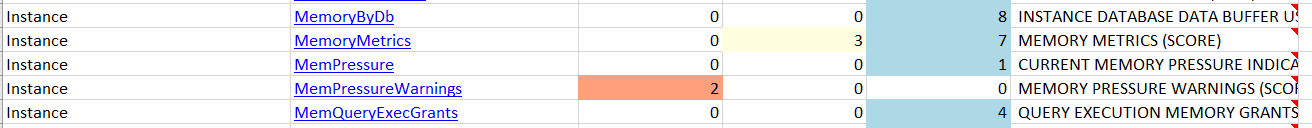

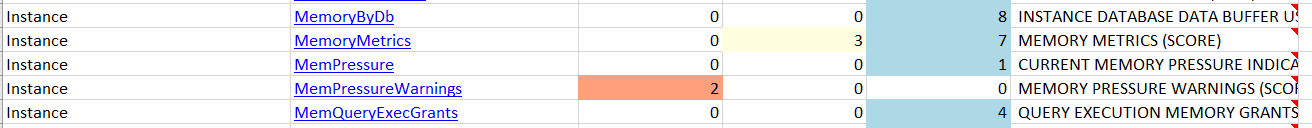

For example, in the “Instance” category below, there are 2 “Critical” Memory Pressure Warnings evaluations, but the Memory Pressure evaluation is only reporting “Informationals”, not “Warnings” or “Criticals”.

In this case, the situation is explained by the fact that there many different indicators of memory pressure. At any given time, and over time, they do not necessarily all point to the same conclusion. Thus, you must weigh the related information before concluding that action is warranted (and what action is warranted).

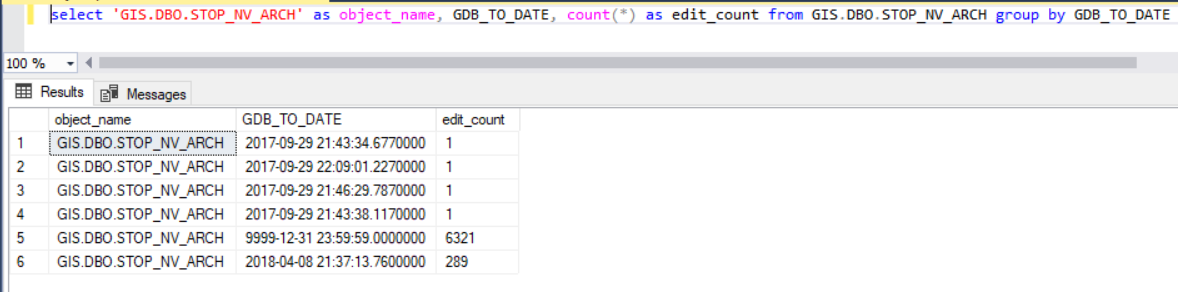

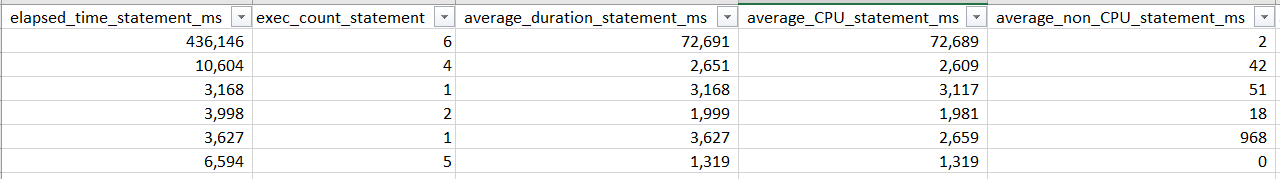

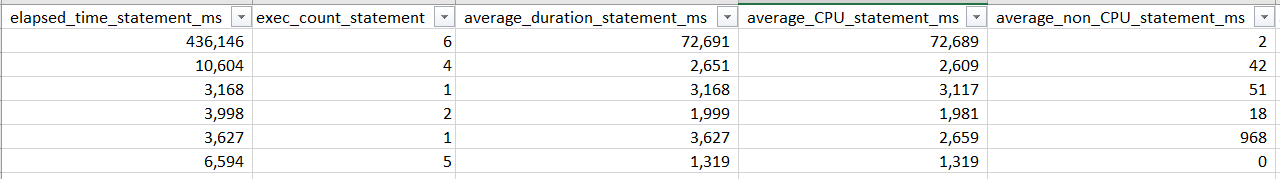

In other cases, the evaluations may benefit from your judgement about the detailed information provided in the findings sheet. For example, this detail about “Long Elapsed Time Queries” has surfaced that there are some queries that spend very long time in SQL Server.

In the first row, there is a query which has an average duration of 72 seconds (third column). However, it has only be executed 6 times in the period for which these statistics support.

Egdbhealth does not know the period of the statistics (perhaps they were just flushed a few moments ago). And, egdbhealth does not know if 6 executions is a lot or a little. Here, it is more than other queries, but it is not many in absolute terms. Finally, egdbhealth does not really know what “slow” is for this particular query. Perhaps this supports a “batch” process that is expected to take a long time. To make this determination, you would scroll over to the right (not in this screen capture) to view the SQL statement to see what the query is doing. Then, you can make an informed judgement, based on how your system is used, and the reasonable expectations that users have for its performance, about whether or not these queries with “long elapsed times” are ones that should be actionable for you.

Try to Resolve the Evaluation

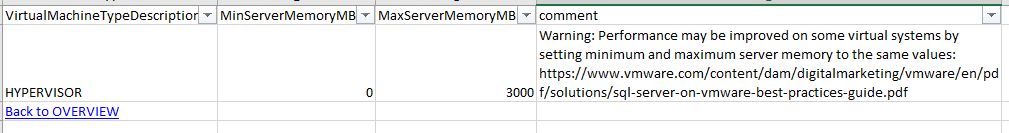

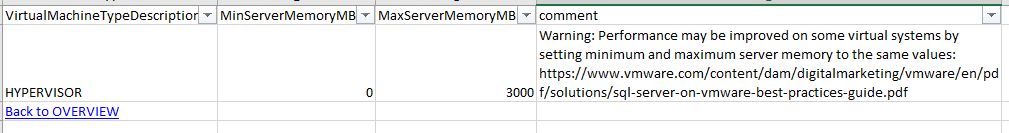

Your understanding of the evaluation will guide your efforts to address the problem. In some cases, such as the one below, egdbhealth will point to Internet-based resources that will help you plan and carry-out the actions.

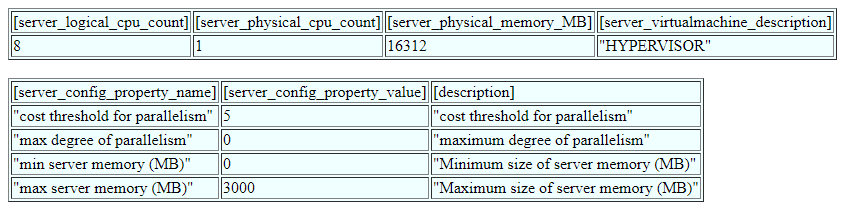

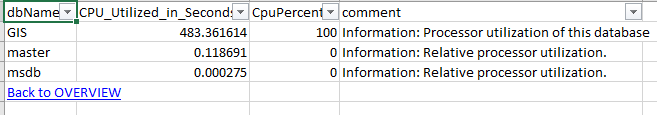

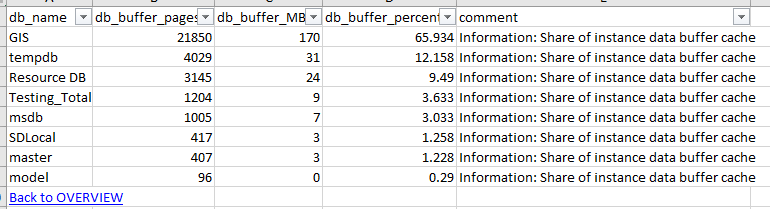

In this case, egdbhealth recognized that the SQL Server instance is running on virtual hardware. In the case of VMWare (and perhaps other platforms), best practice advice suggests that the minimum server memory and maximum should be set to the same value. Once you understand it, this change is relatively straight-forward to make and may require only a brief consultation with the virtual machine platform team to confirm that it corresponds with best practices in their minds also.

In other cases, egdbhealth’s guidance will be more oblique and you will need to rely upon specialists within your organization, Esri Technical Support, or your own Internet research to come up with an action plan.

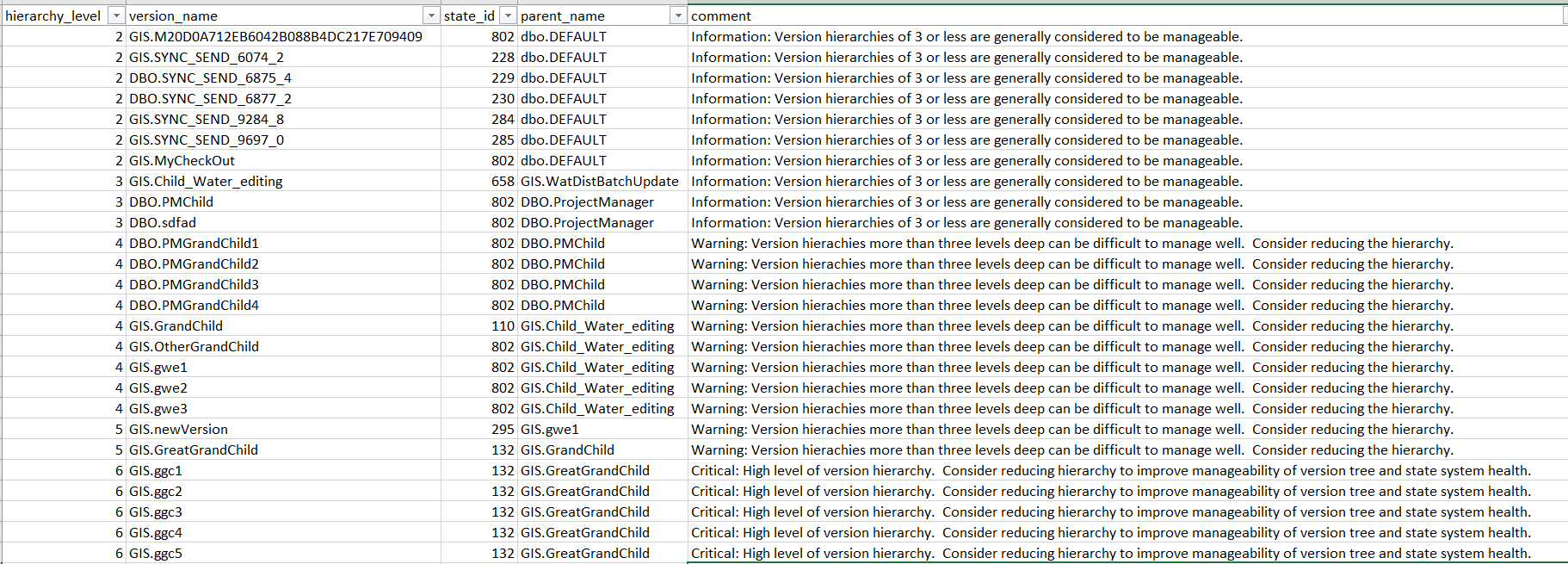

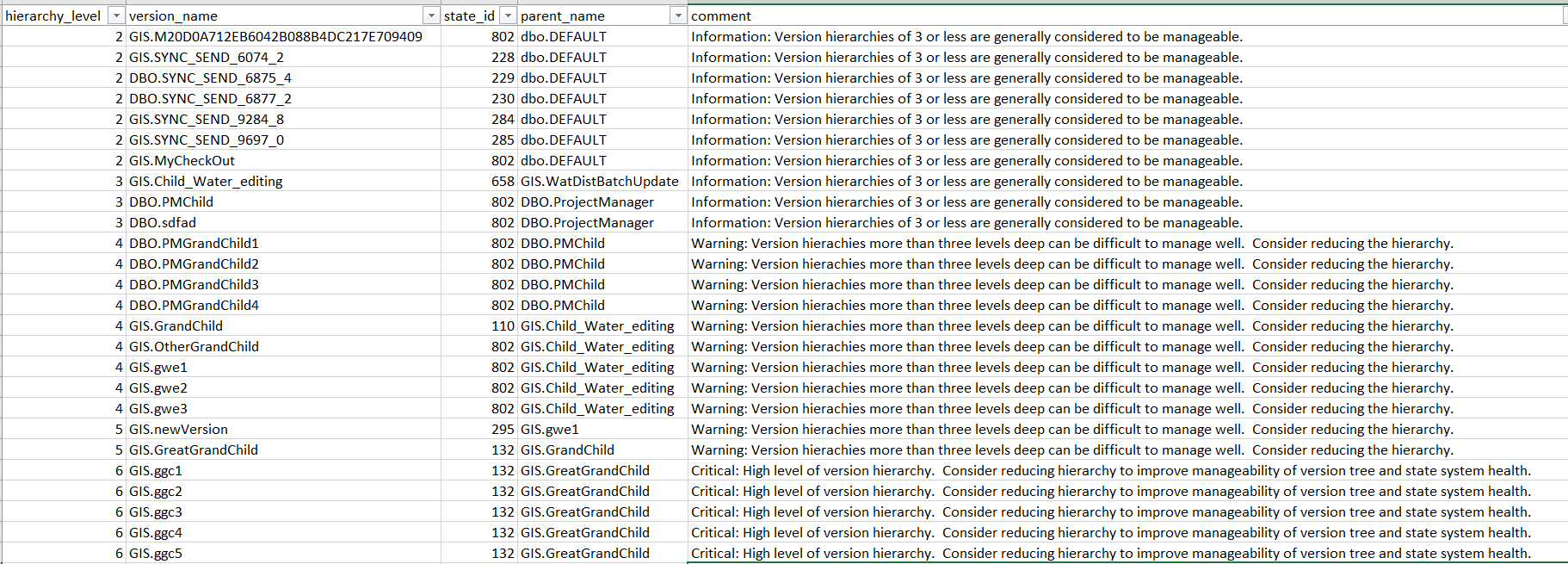

Sometimes actions will involve changes that will take a considerable amount of organizational and/or system change. In the example below, egdbhealth is suggesting that the performance of the versioning system could be improved by having less hierarchy in the version tree. Changing the way versioning is used by an organization is a major undertaking that requires planning and time. In this case, you can expect to spend time planning changes, socializing them within your organization, and then carrying it out.

Validate the Resolution

Running egdbhealth again, after your initial efforts to resolve the evaluation(s) will effectively validate whether or not your efforts succeeded. Note that, when you run egdbhealth again on the same eGDB, the prior Expert Excel file is placed in the “archive” subdirectory for your reference. (The Content Excel file is not re-created, because its information is less volatile.)

Naturally, you hope to find all of the “Criticals” or “Warnings” that you addressed have disappeared in the new Expert Excel output. And, this can be expected where you have correctly understood the problem and taken effective action.

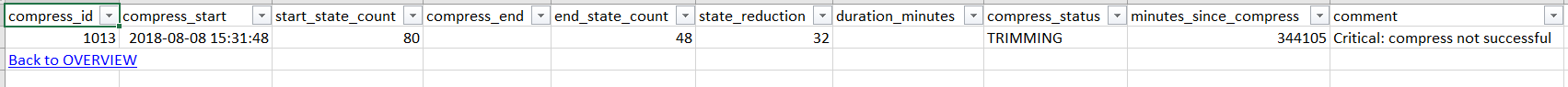

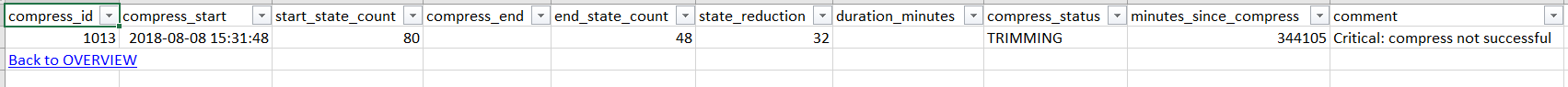

For example, a finding such as the one below (that the most recent compress failed) will be resolved in the “OVERVIEW_EXPERT” sheet as soon as you address the problem. In this case, as soon as you successfully compress and re-run egdbhealth, this evaluation will be resolved.

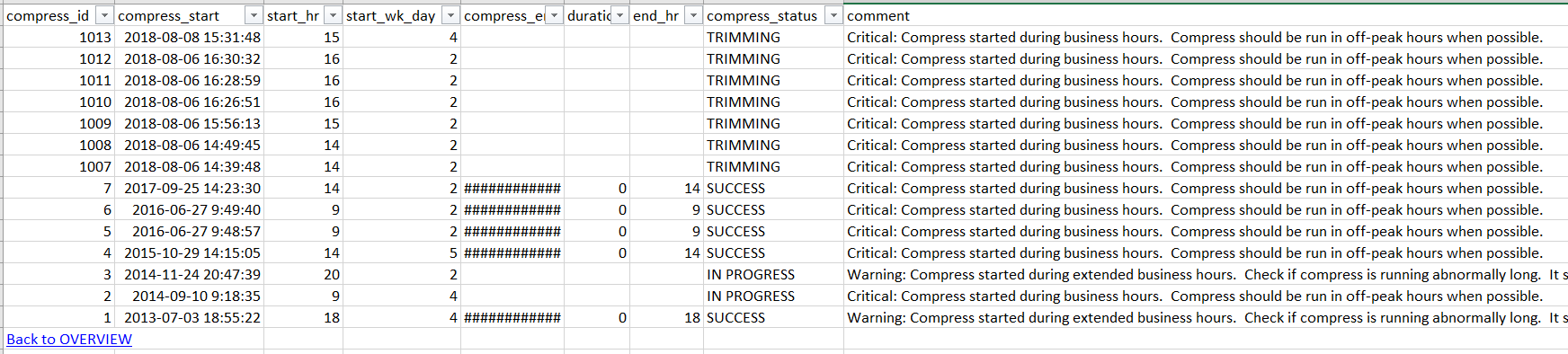

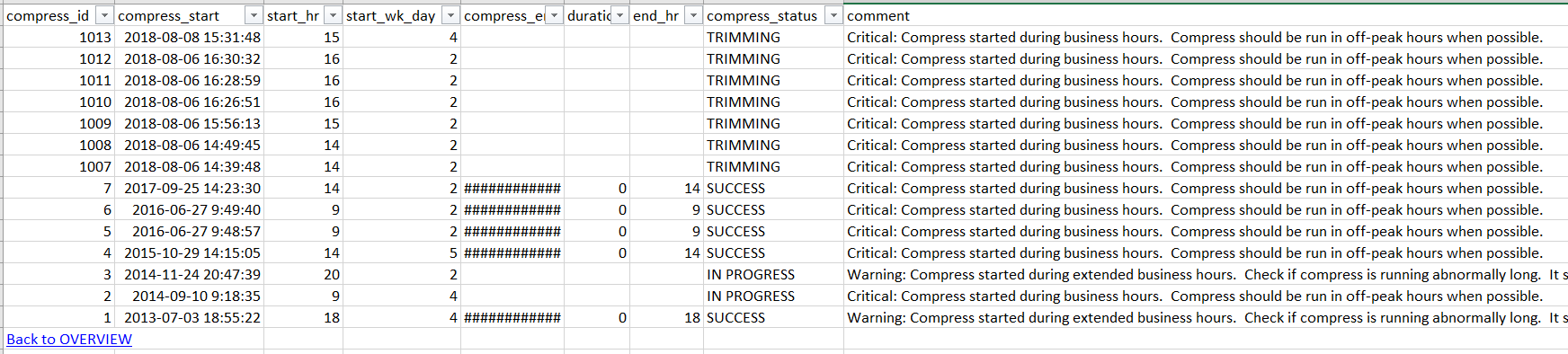

In a few cases, however, the “Critical” or “Warning” classifications will not fully resolve themselves even though the current condition is no longer the same. For example, the “Compress During Business Hours” evaluation reports on the recent history of compresses, not just the most recent compress. You can expect the evaluations to remain unchanged in the “OVERVIEW_EXPERT” sheet for some time.

The detail sheet and other sheets in the Versioning category will illustrate that your recent compress did not occur during business hours (if that is the case). Thus, you have resolved the evaluation. And, over time, egdbhealth will allow itself to agree.

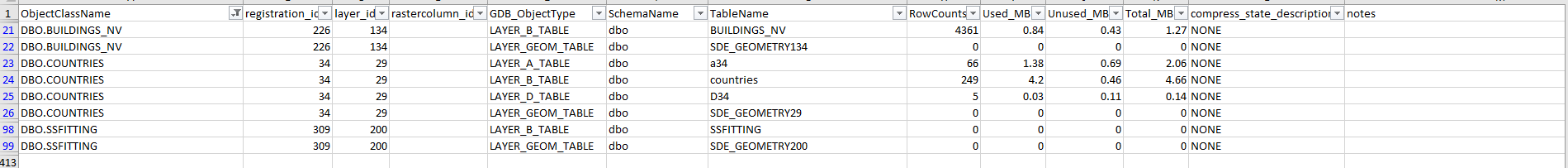

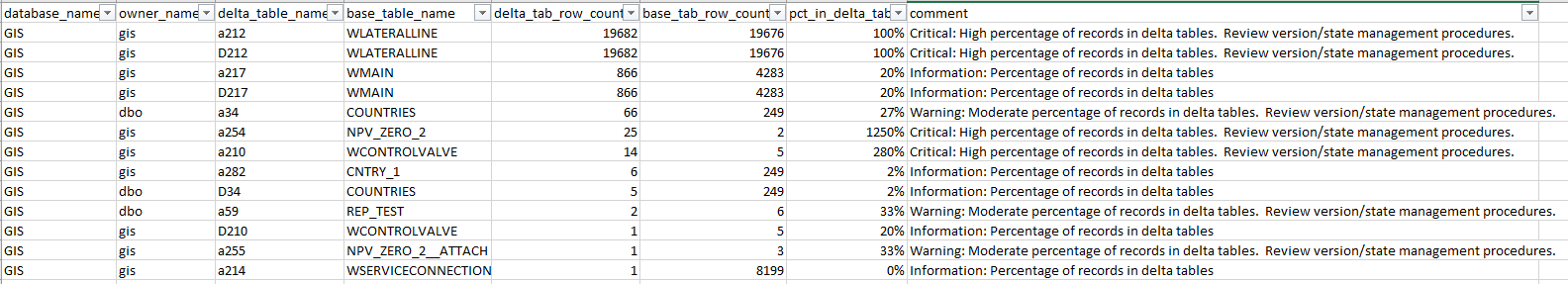

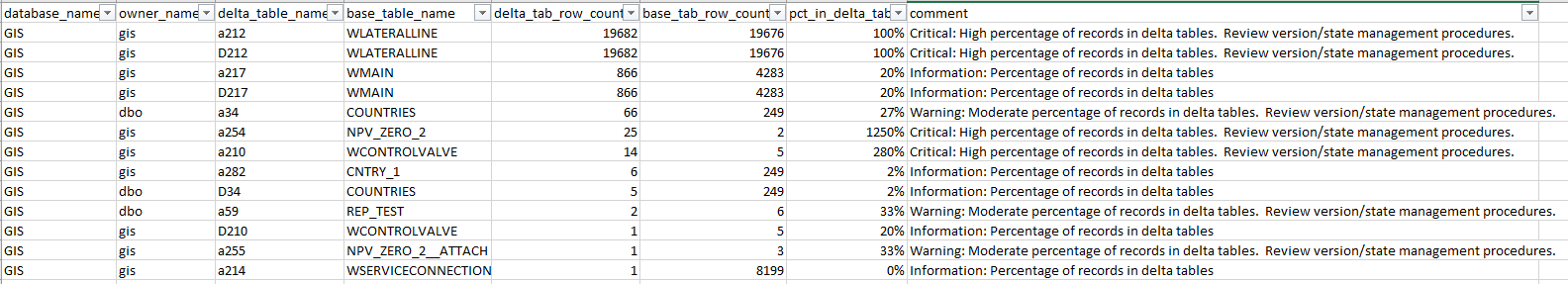

Finally, you will find that some evaluations are volatile. In repeated runs of egdbhealth, they will seem to be present or absent without relationship to your specific actions. For example, the evaluation below reports on the percentage of base table records that are in the delta tables (“A” and “D” tables). Where those percentages are high, it offers a negative evaluation.

The action you may have taken in response is to compress the eGDB. The effectiveness of that action, however, would depend upon the reconciling and posting that is occurring on the system. So, if there had been no new reconcile and post activity, the compress would not have changed the evaluation. On the other hand, if there had been reconcile and post activity, or if a very stale version had been deleted, the compress may have resolved many of the findings. It is also true, however, that even with the ideal reconciles, posts, and compresses, editors might be generating more updates which are populating the delta tables at the same time as you are de-populating them.

The “Memory Metrics” example discussed earlier in this article are another case where you can expect volatility in evaluations. This is because memory pressure indicators will be triggered by different conditions in the database. Your informed judgment will be required to determine whether the recurring evaluations indicate a problem that needs further action.

The point is that the goal of taking action is not necessarily to achieve a “clean report card” with no negative evaluations. The goal should be to have only the evaluations that are appropriate to your system. In the process, you will have deepened your understanding of your eGDB system and offered many tangible improvements to the users of that eGDB.

Summary

The primary purpose of egdbhealth is to help administrators understand and improve the health characteristics of eGDBes. Focusing on the Expert Excel file output, and prioritizing your analysis based on the Critical/Warning/Informational classification scheme, you can address the aspects of an eGDB which are most in need of investigation. Some of the evaluations offered by egdbhealth may require various kinds of research to understand and determine a course of action. Colleagues, Esri Technical Support, and Internet resources can be used to build your knowledge. When you do take action to improve the health of your eGDB, be sure to run egdbhealth again to validate and document your progress.

I hope you find this helpful, do not hesitate to post your questions here: https://community.esri.com/thread/231451-arcgis-architecture-series-tools-of-an-architect

Note: The contents presented above are recommendations that will typically improve performance for many scenarios. However, in some cases, these recommendations may not produce better performance results, in which case, additional performance testing and system configuration modifications may be needed.