Using Public Domain Data to Benchmark an ArcGIS Enterprise Map Service (Intermediate)

Choosing a Capability of ArcGIS Enterprise to Benchmark

As the foundational software system for GIS, ArcGIS Enterprise performs many duties, such as mapping, visualization and analytics. Given this wide range of capabilities and functions, no single test can represent everything it does.

However, if one function were to be used as a benchmark for testing an ArcGIS Enterprise deployment, a strong case can be made for the map service export function. Export map can be called easily and programmatically in an Apache JMeter Test Plan by varying the spatial extents of the requests, read from CSV data files, across several map scales. This translates to just one request for each map scale transaction, which helps keep the test from becoming complicated and difficult to maintain. The export function has also been available since version 9.3, which makes it a proven and reliable operation to benchmark.
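As a rough illustration of how such CSV data files could be produced, the sketch below generates random extents for one map scale. It is not the method used to build the files shipped with the Test Plan: the image size, the DPI, the degrees-to-meters conversion and the four-column CSV layout are all assumptions chosen for the example.

```python
import csv
import io
import random

METERS_PER_DEGREE = 111_320.0  # assumption: rough length of one degree at the equator
METERS_PER_INCH = 0.0254


def extent_size_degrees(scale, width_px, height_px, dpi=96):
    """Approximate width and height, in degrees, of an image drawn at 1:scale."""
    width_m = width_px / dpi * METERS_PER_INCH * scale
    height_m = height_px / dpi * METERS_PER_INCH * scale
    return width_m / METERS_PER_DEGREE, height_m / METERS_PER_DEGREE


def random_bbox(scale, width_px=1920, height_px=1080, rng=random):
    """Pick a random (xmin, ymin, xmax, ymax) in WGS84 degrees for the given scale."""
    width, height = extent_size_degrees(scale, width_px, height_px)
    width, height = min(width, 360.0), min(height, 180.0)  # never exceed the world
    xmin = rng.uniform(-180.0, 180.0 - width)
    ymin = rng.uniform(-90.0, 90.0 - height)
    return xmin, ymin, xmin + width, ymin + height


def write_extents_csv(stream, scale, count, seed=0):
    """Write `count` extents, one per row, as xmin,ymin,xmax,ymax."""
    rng = random.Random(seed)  # fixed seed so the same file can be regenerated
    writer = csv.writer(stream)
    for _ in range(count):
        writer.writerow([f"{value:.6f}" for value in random_bbox(scale, rng=rng)])


if __name__ == "__main__":
    buffer = io.StringIO()
    write_extents_csv(buffer, scale=10_000_000, count=3)
    print(buffer.getvalue())
```

Seeding the generator keeps the extents constant between runs, which matters for a benchmark that is meant to be repeatable.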

What is a Benchmark of a Map Service?

GIS testers and administrators are often tasked with understanding the differences in throughput between two systems, or in the same system after some form of environment modification. In such scenarios, a benchmark is a load test that serves as a standard against which multiple systems or configurations can be compared.

With respect to GIS, this load test would be an Apache JMeter Test Plan executing a step load test against an ArcGIS Enterprise map service to understand the highest rate of throughput (transactions/sec or requests/sec) that can be achieved from the deployment given a particular state or configuration. This rate is also known as the peak throughput. It is also critical to measure the performance (transaction or request response time) at peak throughput.

Benchmark Dataset

Any dataset can be used for a benchmark as long as it is kept constant, meaning that changes such as feature class additions, updates, deletes and versions are not being made. This consistency helps create a dependable "standard" since it is a non-moving target. The test data can be private (e.g. proprietary) or public domain.

What is Public Domain Data?

Generally speaking, public domain data is any raster or vector dataset that is free to download and use. There are many public domain datasets available (and potentially different licenses that define them). The data used in this Article is "Made with Natural Earth" and is provided under the Creative Commons (CC0) license.

Why Use Public Domain Data?

One of the characteristics of a good benchmark is that the test is constructed so that others are able to repeat it. Public domain data is a good choice in this regard as it promotes a testing standard and a dependable measuring stick for performance and scalability.

SampleWorldCities vs Natural Earth

While the inclusion of SampleWorldCities in the ArcGIS Server installation makes the dataset ubiquitous and good for test examples and walkthroughs, its extremely small size makes it less than ideal for benchmarking a map service.

The Natural Earth datasets, on the other hand, provide decent map detail (at smaller scales) covering the whole world. Additionally, they do so with a small disk footprint, which makes them practical to share, download and use.

The Benchmark Natural Earth Dataset

- Download the benchmark dataset here

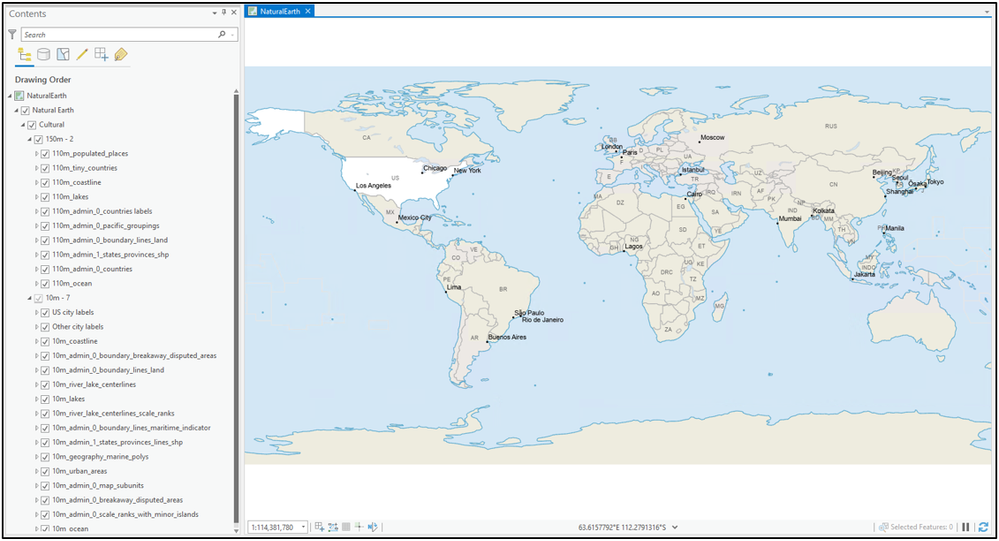

- The data is a subset of the Natural_Earth_quick_start.zip and includes a modified MXD for ArcMap 10.8.1 and a project for ArcGIS Pro 2.8.

- Either can be used to publish a map service to ArcGIS Enterprise.

- The Natural Earth subset of data should look similar to the following when opened in ArcGIS Pro (or ArcMap)

Deployment Architecture

Architecture is an important detail of a benchmark. The following components of the benchmark architecture all have an impact on the test:

- Does a Web Adaptor exist?

- Was authentication involved or was the service made available to everyone?

- Portal for ArcGIS authentication

- ArcGIS Server token authentication

- Available to everyone

- How many machines took part in the ArcGIS Site?

- Processor details

- Processor model and architecture

- Number of CPU cores for each server (including the testing client workstation)

- Physical, virtual or cloud

- Physical Memory details

- Total amount of system memory

- Network speed

- ArcGIS Enterprise version

- Operating System Version

Note: It is recommended to take note of the deployment architecture details. Saving this information with the test results can help give proper context and meaning to the analysis or conclusions.
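One way to follow this recommendation is to write the details to a small JSON file that is stored next to the test results. The sketch below is only an illustration: the field names are made up for the example, and items that cannot be detected from the machine (Web Adaptor, authentication, ArcGIS Enterprise version) are passed in by hand.

```python
import json
import os
import platform
from datetime import datetime, timezone


def describe_environment(extra=None):
    """Collect basic machine details plus any manually supplied deployment notes."""
    record = {
        "recorded_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "hostname": platform.node(),
        "os": f"{platform.system()} {platform.release()}",
        "processor": platform.processor(),
        # os.cpu_count() reports logical CPUs, which can differ from physical cores
        "logical_cpus": os.cpu_count(),
    }
    record.update(extra or {})
    return record


if __name__ == "__main__":
    # Hypothetical manual entries; replace with the values for your deployment.
    notes = {
        "arcgis_enterprise_version": "10.9",
        "web_adaptor": True,
        "authentication": "available to everyone",
        "network": "10G",
    }
    print(json.dumps(describe_environment(notes), indent=2))
```

Running the script on each machine in the Site (and on the test client) gives one record per tier that can be archived with the report.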

The results listed for this benchmark test were obtained from the following environment architecture:

- ArcGIS Server (10.9 Final)

- Dell PowerEdge R640

- SPECint_rate_base2006

- HyperThreading disabled

- 128GB RAM

- Windows Server 2019

- 10G network

- ArcGIS Web Adaptor (10.9 Final)

- Dell PowerEdge R440

- SPECint_base2006

- HyperThreading disabled

- 64GB RAM

- Windows Server 2019

- 10G network

- Test Client

- Apache JMeter 5.4.1

- Dell PowerEdge R640

- SPECint_rate_base2006

- 6 virtual CPUs

- 16GB RAM

- Windows Server 2019

- 10G network

Data Source Type and Location

Either a file geodatabase or an enterprise geodatabase can be used to store the data for the benchmark test. Regardless of which is used, the data source type is an important property of the environment and should be noted.

Note: It is recommended to take note of the data source type. Saving this information with the test results can help give proper context and meaning to the analysis or conclusions.

As for location, using a remote file geodatabase instead of a local file geodatabase might be necessary if the deployment has multiple servers that make up the ArcGIS Enterprise Site. In either case, remote or local, the data source location is also an important detail of the test environment that should be noted.

Note: It is recommended to take note of the data source location. Saving this information with the test results can help give proper context and meaning to the analysis or conclusions.

Service Type and Number of Instances

For the most widely used ArcGIS map services in a Site, it is recommended to publish the resource as a Dedicated instance instead of Shared. Although both types can scale to fully utilize the available hardware, a Dedicated service instance has resources behind the scenes that are devoted to it, which makes it an ideal choice for a benchmark test.

For predictable performance, it is recommended to set the Minimum and Maximum number of instances for the Dedicated instance type equal to the number of CPU cores of the ArcGIS Server machine.

Note: It is recommended to take note of the service type and number of instances. Saving this information with the test results can help give proper context and meaning to the analysis or conclusions.

Do the Request Options in a Benchmark Test Matter?

Absolutely! Using a common dataset and the export map function is not enough to establish a dependable benchmark. The export operation is extremely versatile, and because of this flexibility an image can be generated with a variety of different input options.

A load test that sends its requests to the map service consistently is important for establishing a reliable benchmark. Can the test request a BMP image format instead of a PNG, or ask for data to be in a spatial reference other than the default of 4326? Yes, but changing such options may impact the performance and scalability of the test, so it is recommended to leave these Test Plan settings as is.
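To make the idea of fixed request options concrete, the sketch below builds an export URL in which only the bounding box varies. The parameter names (`bbox`, `bboxSR`, `imageSR`, `size`, `dpi`, `format`, `transparent`, `f`) are those of the ArcGIS REST API export map operation; the values for size, DPI and transparency, and the host name, are assumptions for the example and may not match the provided Test Plan.

```python
from urllib.parse import urlencode

# Options held constant for every request in the benchmark.
# PNG and spatial reference 4326 follow the text above; the rest are assumed values.
FIXED_OPTIONS = {
    "bboxSR": "4326",
    "imageSR": "4326",
    "size": "1920,1080",
    "dpi": "96",
    "format": "png",
    "transparent": "false",
    "f": "image",
}


def export_url(map_server_url, bbox):
    """Return an export map URL where only the extent changes between requests."""
    params = {"bbox": ",".join(f"{value:.6f}" for value in bbox)}
    params.update(FIXED_OPTIONS)
    return f"{map_server_url}/export?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical host; the service path mirrors the one shown in the report.
    base = "https://gis.example.com/pvtserver/rest/services/NaturalEarth/MapServer"
    print(export_url(base, (-10.0, -5.0, 10.0, 5.0)))
```

Keeping the constant options in one place makes it harder for a single request in the plan to drift to a different format or spatial reference.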

The Map Service Benchmark Test Plan

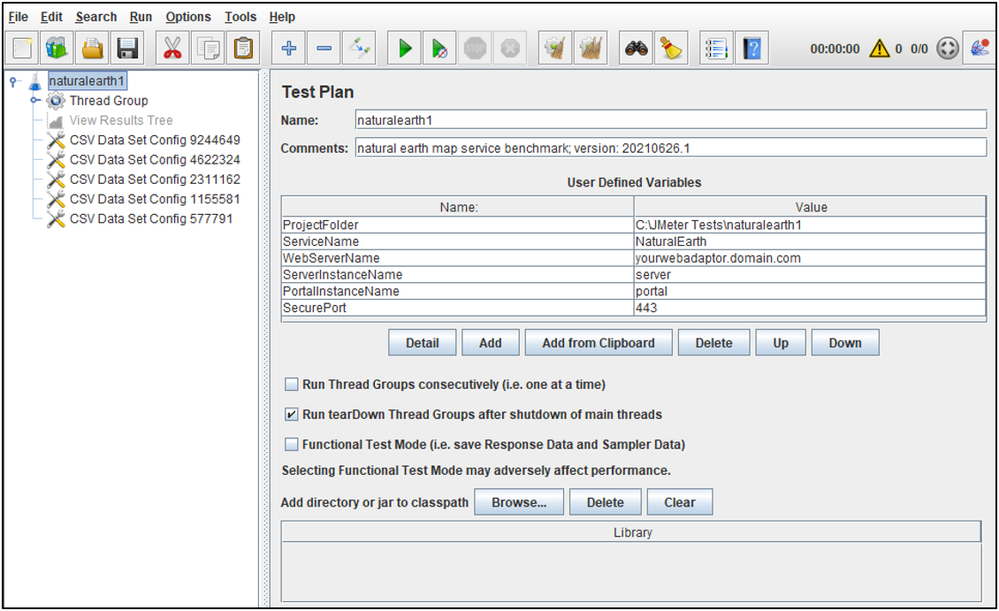

- To download the Apache JMeter Test Plan used in this Article see: naturalearth1.zip

- This Test Plan is largely based on the SampleWorldCities test project from a previous Article

- Once downloaded and opened in Apache JMeter, the Test Plan should look similar to the following:

- Adjust the User Defined Variables to fit your environment

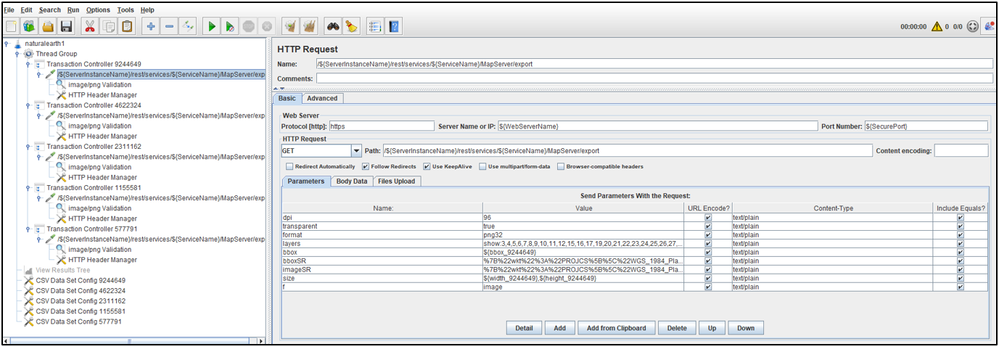

- The request composition (one for each of the 5 tested map scales) should look similar to the following:

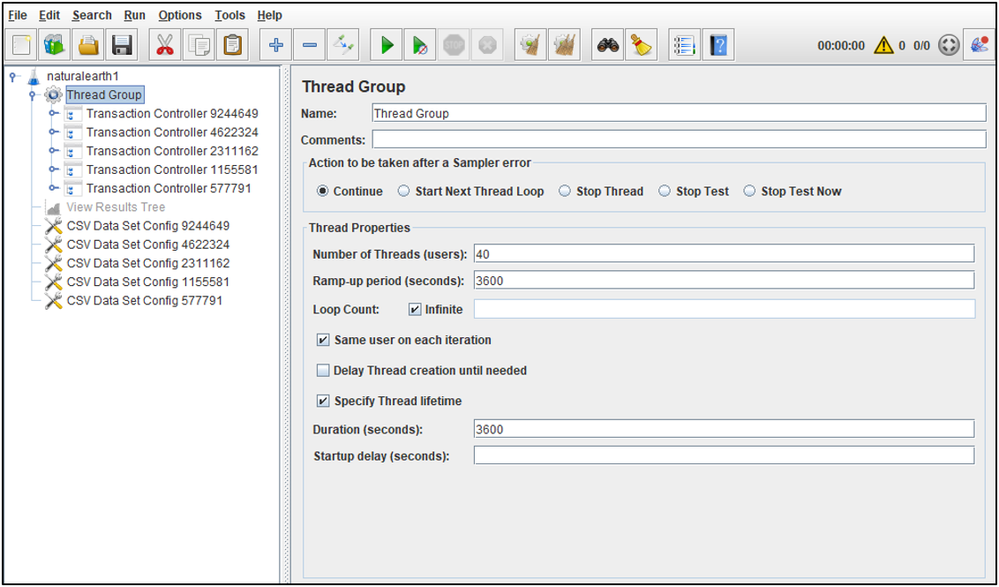

The Thread Group Configuration

The Thread Group defines the step load characteristics of the test and plays an important role. For an export map test, the maximum Number of Threads has a close relationship with the maximum number of ArcGIS Server CPU cores (and similarly, the maximum number of service instances). Configuring the test threads to exceed the number of cores helps ensure enough pressure is applied to fully utilize the server CPU resources. From there, peak throughput should be observed, which is a primary goal of a benchmark test.

Note: Not all tested datasets may show the respective service fully utilizing the CPU of the ArcGIS Server tier. In such cases, additional troubleshooting is needed to understand where the bottleneck exists that is limiting the scalability of the given workflow.

- As a general rule of thumb, configure the maximum step load to be 25% to 60% higher than the number of server CPU cores

- As seen below, the Test Plan is configured to run for 1 hour and reach a maximum step load of 40 concurrent test threads

- This would start the benchmark at 1 test thread and add an additional thread every 90 seconds

- This benchmark was designed to test an ArcGIS Server deployment running on 24 physical CPU cores

- Adjust accordingly: not every ArcGIS Server will run on 24 physical cores, and the maximum step values may be too high for your deployment

Note: It is recommended to take note of the step load configuration details. Saving this information with the test results can help give proper context and meaning to the analysis or conclusions.
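The arithmetic behind these settings can be checked with a short sketch. The function names are made up for the example; the numbers (24 cores, 40 threads, one new thread every 90 seconds) come from the configuration described above.

```python
import math


def max_threads_range(cpu_cores, low=0.25, high=0.60):
    """Rule-of-thumb range for the maximum step load, as whole threads."""
    return math.ceil(cpu_cores * (1 + low)), math.floor(cpu_cores * (1 + high))


def threads_at(elapsed_s, step_s=90, max_threads=40):
    """Number of active test threads after `elapsed_s` seconds of a step load."""
    return min(max_threads, 1 + int(elapsed_s // step_s))


def total_duration_s(step_s=90, max_threads=40):
    """Test length when every step, including the last, is held for `step_s`."""
    return step_s * max_threads


if __name__ == "__main__":
    print("rule-of-thumb range for 24 cores:", max_threads_range(24))
    print("threads at start:", threads_at(0))
    print("threads after 30 minutes:", threads_at(1800))
    print("total duration (s):", total_duration_s())
```

For 24 cores the rule of thumb gives 30 to 38 threads, so the 40 threads used here (about 67% above the core count) sit slightly past the upper end. Forty steps of 90 seconds add up to 3,600 seconds, which matches the 1 hour run time.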

Benchmark Test Execution

The benchmark should be run in the same manner as a typical JMeter Test Plan.

See the runMe.bat script included with the naturalearth1.zip project for an example of how to run a test in the manner recommended by the Apache JMeter team.
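The contents of runMe.bat are not reproduced here. As a general illustration, the sketch below assembles a JMeter command line using its documented non-GUI options: `-n` (non-GUI mode), `-t` (test plan), `-l` (results file), `-e` (generate the report when the test ends) and `-o` (report output folder). The file and folder names are hypothetical.

```python
def build_jmeter_command(test_plan, results_file, report_dir, jmeter_bin="jmeter"):
    """Return the argument list for a non-GUI JMeter run that also builds a report."""
    return [
        jmeter_bin,
        "-n",                # run without the GUI
        "-t", test_plan,     # Test Plan (.jmx) to execute
        "-l", results_file,  # file that receives the sample results
        "-e",                # generate the HTML report after the run
        "-o", report_dir,    # output folder for the report (must be empty or absent)
    ]


if __name__ == "__main__":
    # Hypothetical names; on Windows the launcher is typically jmeter.bat.
    command = build_jmeter_command(
        "naturalearth1.jmx", "results/run1.jtl", "reports/run1"
    )
    print(" ".join(command))
```

The returned list can be handed to `subprocess.run(command, check=True)` when the script should launch the test itself.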

Note: It is always recommended to coordinate the load test start time and duration with the appropriate personnel. This ensures minimal impact to users and other colleagues that may also need to use the ArcGIS Enterprise Site. Additionally, this helps prevent system noise from other activity and use which may "pollute" the test results.

Results and Analysis

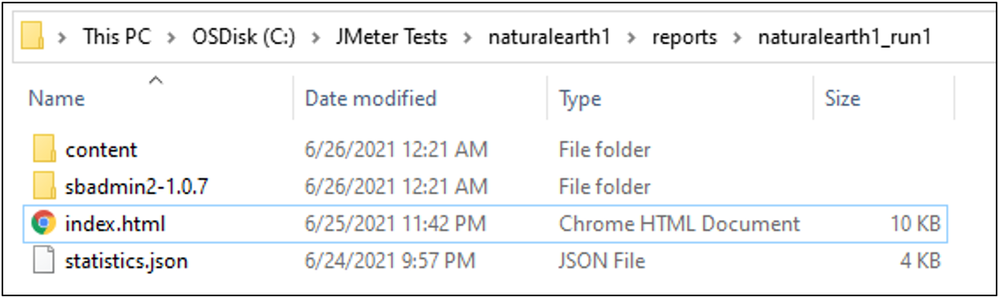

Once the load test has completed, runMe.bat instructs Apache JMeter to automatically generate a report to assist with the analysis of the results.

There can be entire Articles and internet resources devoted exclusively to analyzing the components of the results from a load test. So, in the interest of keeping things simple, our focus will be looking at request throughput (requests/sec) and request performance (seconds) metrics from the report.

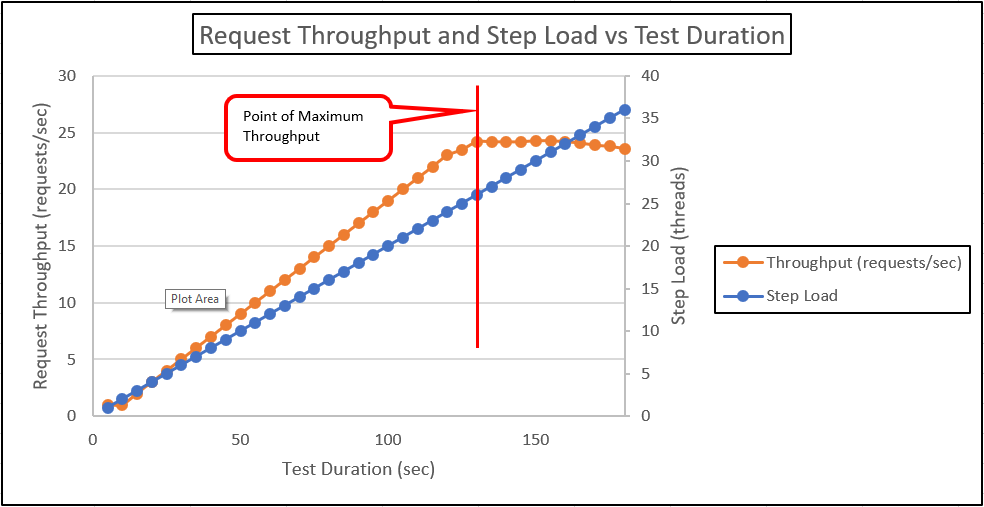

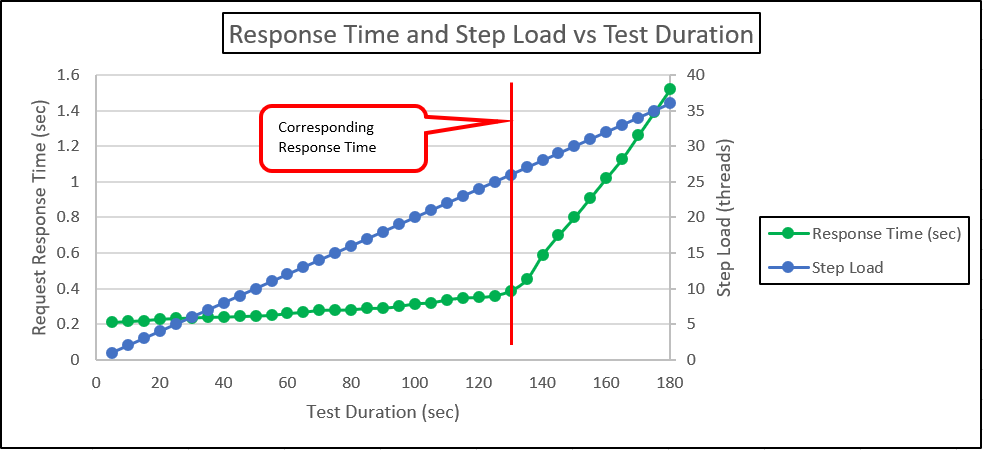

The diagrams illustrate the ideal trends of these two items over the course of the test.

The Ideal Throughput Curve

Ideally, the throughput curve will have the form of the orange line above. The point where the curve peaks and begins to flatten is an indication that the system has reached its highest level of throughput (due to a hardware or software bottleneck). This area of the graph where the curve bends is referred to as the knee and the value for maximum throughput is at this point.

The blue line represents the increasing step load of the test.

The Ideal Performance Curve

Ideally, the response time curve will have the form of the green line above. The response time of interest is taken at the same point in the test as maximum throughput.

The blue line represents the increasing step load of the test.

JMeter Report

Included with the naturalearth1.zip project is an Apache JMeter report called naturalearth1_run1 within the reports folder.

- Opening the index.html will reveal multiple charts and tables to assist with the analysis

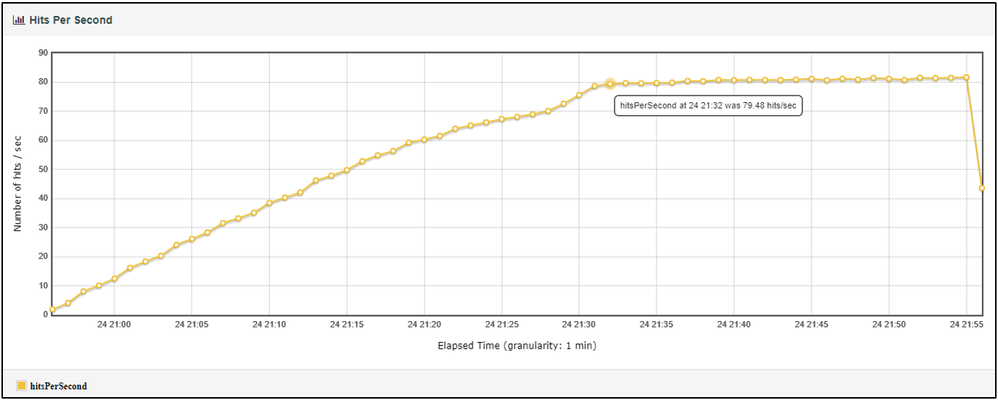

Actual Throughput Curve

- From the report:

- Under Charts-->Throughput, the Hits Per Second chart can be found where the request throughput from the test is plotted

- Since the test was constructed with each transaction containing only one request, "hits per second" is equivalent to both transactions/sec and requests/sec

- The system achieved a maximum throughput of about 80 transactions/sec (or 80 requests/sec)

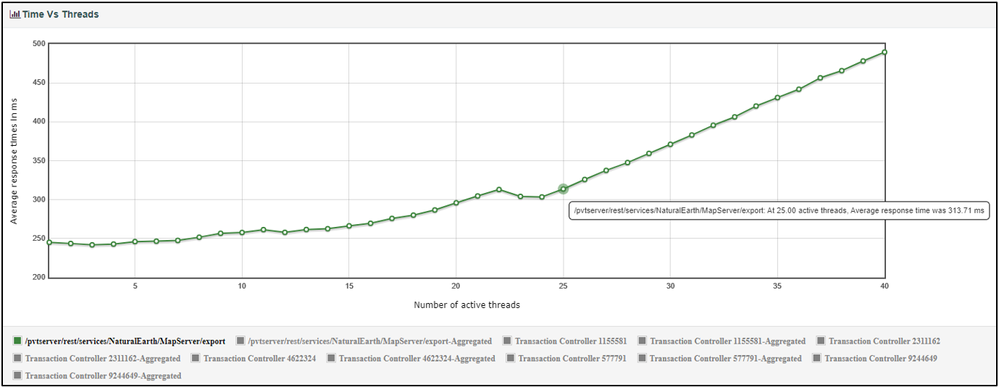

Actual Performance Curve

- From the report:

- Under Charts-->Response Times, the Time Vs Threads chart can be found where the request performance from the test is plotted

- All items except "/pvtserver/rest/services/NaturalEarth/MapServer/export" are filtered out (by clicking on them within the legend)

- Since the test was constructed with each transaction containing only one request, the "export request" is also representing the average transaction performance

- At the point of maximum throughput, the system delivered a transaction performance of about 314 ms (roughly 0.3 seconds)

Note: A different approach to the analysis will need to be taken for load tests containing transactions with more than one request
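The same two numbers can also be pulled from the raw results file rather than read off the charts. The sketch below assumes the results were saved in JMeter's CSV format with a header row containing the `timeStamp` (epoch milliseconds), `elapsed` (milliseconds) and `label` columns; the window length and the label filter are choices made for the example, and the results are only approximate because the final window of a run may be partial.

```python
import csv
from collections import defaultdict


def read_jtl(path):
    """Load a CSV-format JMeter results file as a list of dictionaries."""
    with open(path, newline="") as handle:
        return list(csv.DictReader(handle))


def per_window_stats(rows, window_s=60, label_contains="export"):
    """Group matching samples into fixed time windows and summarize each one."""
    buckets = defaultdict(list)
    for row in rows:
        if label_contains not in row["label"]:
            continue  # skip samples that are not the export request
        window = int(row["timeStamp"]) // (window_s * 1000)
        buckets[window].append(int(row["elapsed"]))
    return [
        {
            "window_start_ms": window * window_s * 1000,
            "throughput_per_s": len(times) / window_s,
            "mean_elapsed_ms": sum(times) / len(times),
        }
        for window, times in sorted(buckets.items())
    ]


def peak_window(stats):
    """Return the window with the highest throughput."""
    return max(stats, key=lambda item: item["throughput_per_s"])
```

Calling `peak_window(per_window_stats(read_jtl("results/run1.jtl")))` on a hypothetical results file returns the busiest window along with the mean response time observed in it.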

Comparing the Results

After you have completed the test of your system with the provided data and Test Plan, you can compare the results with those listed in this Article. This can provide an approximate measuring stick for comparing two systems.
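A comparison of this kind can be reduced to a couple of simple calculations, sketched below. The baseline values (about 80 requests/sec at about 314 ms) are the ones reported in this Article; the candidate values are hypothetical. The concurrency estimate uses Little's law (throughput multiplied by response time), which is added here as a sanity check and is not part of the original analysis.

```python
def percent_difference(candidate, baseline):
    """Relative change of the candidate value against the baseline, in percent."""
    return (candidate - baseline) / baseline * 100.0


def implied_concurrency(throughput_per_s, response_time_s):
    """Little's law: average number of requests in flight at the given rate."""
    return throughput_per_s * response_time_s


if __name__ == "__main__":
    baseline_tps, baseline_rt_s = 80.0, 0.314  # values reported in this Article
    candidate_tps, candidate_rt_s = 100.0, 0.300  # hypothetical second system

    print(f"throughput change: {percent_difference(candidate_tps, baseline_tps):+.1f}%")
    print(f"response time change: {percent_difference(candidate_rt_s, baseline_rt_s):+.1f}%")
    print(f"baseline requests in flight: {implied_concurrency(baseline_tps, baseline_rt_s):.1f}")
```

For the baseline, the estimate works out to roughly 25 requests in flight, which is close to the 24 physical cores of the tested ArcGIS Server.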

- To download the Apache JMeter Test Plan used in this Article see: naturalearth1.zip

- To download the Natural Earth subset of data used in this Article see: Natural_Earth_Test_Data

Apache JMeter is released under the Apache License 2.0. Apache, Apache JMeter, JMeter, the Apache feather, and the Apache JMeter logo are trademarks of the Apache Software Foundation.