ArcGIS Server Performance Strategies

Performance: Challenges and Strategies

What is Performance?

- DNS lookup of the server's hostname

- TCP/IP connection between client and server

- SSL handshake between client and server

- Sending the request to server

- Server processing the request

- Receiving response from server

- For large responses, Time-to-first-byte (or TTFB) can be used instead to measure response time

Typically, the bulk of the time is spent in the "Server processing the request" step. This is where the server is working on the response.

This Community Article will explore areas that can impact this portion of the response time.
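As a rough illustration of how the phases listed above add up, the sketch below models one request as a set of per-phase durations and reports each phase's share of the total. The class name, field names and sample numbers are all hypothetical; they are not measurements from a real deployment. Time to first byte is approximated here as everything before the response download, which is one common definition but not the only one.

```python
from dataclasses import dataclass, fields


@dataclass
class RequestTimings:
    """Durations, in milliseconds, for each phase of a single request."""
    dns_lookup: float
    tcp_connect: float
    ssl_handshake: float
    send_request: float
    server_processing: float
    receive_response: float

    def total(self) -> float:
        # Response time is the sum of every phase.
        return sum(getattr(self, f.name) for f in fields(self))

    def share(self) -> dict[str, float]:
        # Percentage of the total response time spent in each phase.
        total = self.total()
        return {f.name: round(100 * getattr(self, f.name) / total, 1)
                for f in fields(self)}

    def time_to_first_byte(self) -> float:
        # Assumption: TTFB covers every phase except downloading the response.
        return self.total() - self.receive_response


# Hypothetical numbers, chosen only to show server processing dominating.
sample = RequestTimings(dns_lookup=5, tcp_connect=20, ssl_handshake=35,
                        send_request=2, server_processing=410,
                        receive_response=28)
print(sample.total(), sample.share(), sample.time_to_first_byte())
```

With numbers like these, tuning anything other than server processing would barely move the total, which is why the rest of the Article concentrates on that phase.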

Why is Performance Important?

Simply put: the faster the performance, the lower the response time.

What is Acceptable Performance?

It depends.

The criteria or requirement for classifying an item as having fast (or slow) performance can vary greatly by organization, the published services and expected operations users will be calling.

It is not uncommon to have different response time goals for ArcGIS Server functions or user application workflows (several requests grouped together to represent one operation).

Any response time target is valid as a requirement, but keep in mind that aggressive goals may take more hardware as well as more extensive tuning and the strategies described in this Article.

How is Performance Measured?

Response time is the key metric for determining whether performance is meeting a target requirement.

Common strategies for measuring are:

- Single user interaction

- Through the web browser or ArcGIS Pro

- This is the easiest place to start

- If there is no understanding yet on performance, start here

- Statistically analyzing large volumes of response times

- Through log analysis tools, the ArcGIS Server Manager Statistics page, or other observability utilities

- These approaches have the benefit of analyzing real-world requests that users have already executed against the deployment

- This is discussed in more depth later in the Article

- Load Testing

- Can provide an understanding on performance and scalability

- More time consuming to set up

- Online resources exist for getting started
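For the statistical approach, a minimal sketch of summarizing a batch of response times against a target might look like the following. The function name is hypothetical, and using the 95th percentile as the pass/fail criterion is an assumption made for illustration; an organization may prefer the average, the median or a different percentile.

```python
import statistics


def summarize_response_times(times_s, target_s):
    """Summarize response times (seconds) against a target (seconds)."""
    if len(times_s) < 2:
        raise ValueError("need at least two measurements")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(times_s, n=100, method="inclusive")[94]
    within = sum(1 for t in times_s if t <= target_s)
    return {
        "count": len(times_s),
        "median_s": statistics.median(times_s),
        "p95_s": p95,
        "pct_within_target": round(100 * within / len(times_s), 1),
        # Assumption: the goal is met when 95% of requests are within target.
        "meets_target": p95 <= target_s,
    }


# Hypothetical measurements: 100 requests from 0.01 s to 1.00 s.
measurements = [i / 100 for i in range(1, 101)]
print(summarize_response_times(measurements, target_s=0.5))
```

Percentiles tend to be more informative than a plain average because a few very slow requests can hide behind an acceptable mean.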

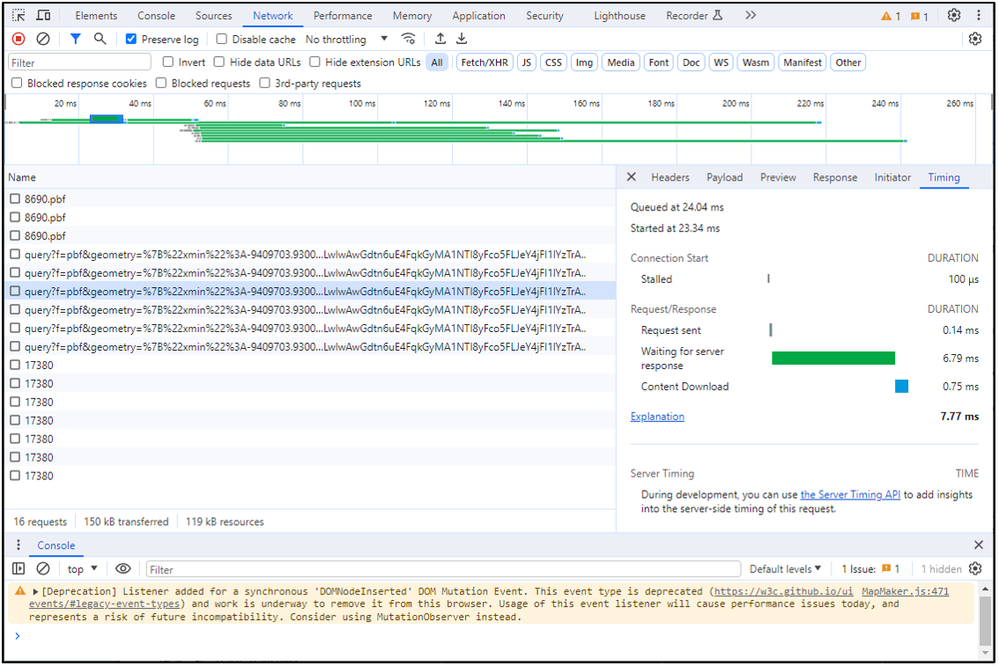

Capturing Response Times -- Web Browser

How response times are captured through single user interaction is a fun topic of discussion.

The easiest approach to capture response times of REST requests from a web application is with the browser's "developer tools" functionality. All major browsers offer some view of the requests, responses and times being sent and received. This duration can give an idea of how fast a request or operation (potentially multiple requests) performed. Decisions can then be made if this is acceptable or needs to be improved.
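Most browsers' developer tools can also export the captured network log as a HAR (HTTP Archive) file, which is plain JSON. The sketch below, offered as one possible way to review such an export, lists the slowest requests along with the time spent waiting on the server. The function names and the example URLs are hypothetical; the `log.entries`, `time`, `request.url` and `timings.wait` fields come from the HAR format itself.

```python
import json
from pathlib import Path


def load_har(path):
    """Read a HAR file exported from the browser's developer tools."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def slowest_requests(har, top=5):
    """Return the slowest requests in a HAR log, slowest first."""
    rows = []
    for entry in har["log"]["entries"]:
        timings = entry.get("timings", {})
        rows.append({
            "url": entry["request"]["url"],
            "total_ms": entry["time"],
            # HAR uses -1 for timings that do not apply; clamp those to 0.
            "wait_ms": max(timings.get("wait", 0), 0),
        })
    rows.sort(key=lambda row: row["total_ms"], reverse=True)
    return rows[:top]


# Hypothetical in-memory HAR content, standing in for load_har("capture.har").
example_har = {"log": {"entries": [
    {"time": 120, "request": {"url": "https://gis.example.com/a/query"},
     "timings": {"wait": 90}},
    {"time": 950, "request": {"url": "https://gis.example.com/b/export"},
     "timings": {"wait": 900}},
    {"time": 40, "request": {"url": "https://gis.example.com/c/info"},
     "timings": {"wait": -1}},
]}}
for row in slowest_requests(example_har, top=2):
    print(row)
```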

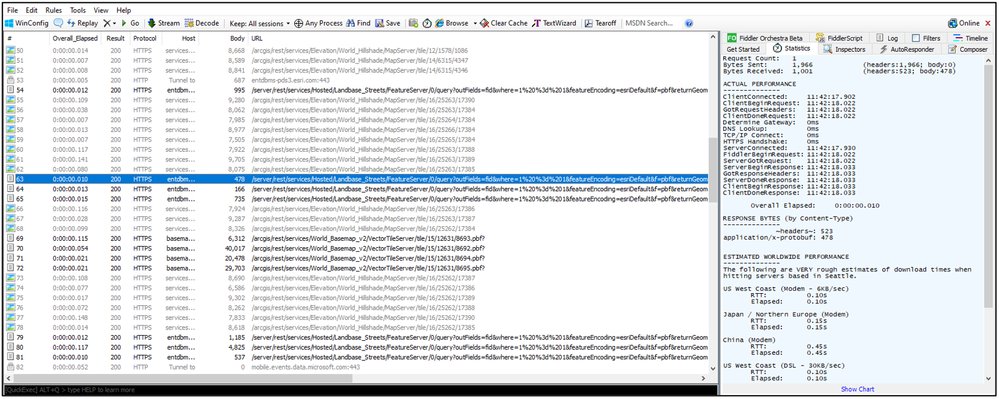

Capturing Response Times -- ArcGIS Pro

While ArcGIS Pro also communicates with ArcGIS Enterprise via REST, it does not have a built-in equivalent of the developer tools. For capturing response times, you'll need a separate HTTP debugger. There are many available; a popular choice is Fiddler.

With Fiddler installed to the same machine as ArcGIS Pro, it can be configured to intercept the traffic. Request parameters, response content and times can be similarly captured and examined.

Are Goals Required for Improving Performance?

Absolutely not. GIS Administrators can always analyze, tune and apply best practices to the system even if no official performance requirements are in place.

However, it is highly recommended to understand what performance your system is initially delivering before adjustments are made (these measurements are typically referred to as baseline response times). This way you can determine if the applied changes are having a positive effect.
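A baseline comparison can be as simple as the sketch below, which compares the median response time before and after a change. The function name and the sample values are hypothetical, and the median is used here only as one reasonable choice of summary statistic.

```python
from statistics import median


def compare_to_baseline(baseline_s, current_s):
    """Compare median response times (seconds) before and after a change."""
    base = median(baseline_s)
    current = median(current_s)
    change_pct = (current - base) / base * 100
    return {
        "baseline_median_s": base,
        "current_median_s": current,
        "change_pct": round(change_pct, 1),  # negative means faster
        "improved": current < base,
    }


# Hypothetical measurements taken before and after a tuning change.
before = [2.0, 2.2, 1.8, 2.0, 2.1]
after = [1.5, 1.4, 1.6, 1.5, 1.7]
print(compare_to_baseline(before, after))
```

Taking the same measurements under similar conditions (same requests, similar time of day) keeps the comparison meaningful.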

Common Performance Challenges and Potential Strategies

Service Pool Types and Instances

One of the most frequent performance challenges ArcGIS administrators encounter is setting the appropriate number of instances for dedicated services. But first, let's review the different types. There are 3 service types, each with its own strengths.

- Dedicated

- Hosted

- Shared

As a GIS Administrator it is important to be able to identify the instance type for a service.

This can be easily viewed within ArcGIS Server Manager, under Manage Services.

Selecting the Appropriate Type

Choosing a dedicated service type is ideal when achieving the most performance and scalability control is desired. With this type, the administrator can:

- Set the maximum number of instances to the number of CPU cores to take full advantage of the available processing capability of the ArcGIS Server machine (via the maximum)

- Conserve memory when idle (via the minimum)

- Adjust for predictable performance by setting the min and max to the same value

Dedicated services are ideal for heavily requested services or services where performance is paramount.

Hosted services do not utilize ArcSOC instances and auto-scale as needed. However, the ArcGIS capabilities available to hosted services are limited, as they are used primarily for feature queries.

Shared services are great for accessing items that are requested less frequently. They typically have more ArcGIS capabilities available to them, but not all ArcGIS functionality is available (e.g., branch versioning). A shared instance pool is the default type when publishing a service with ArcGIS Pro.

Note: It is important to reiterate that if the selected service type is set to dedicated, the minimum and maximum number of instances should be evaluated to ensure they are optimal. The default when publishing in ArcGIS Pro is a maximum of only 2 instances. This might be too low for services where performance and scalability are important.
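To make that review repeatable, the instance settings of dedicated services can be checked with a small script. The sketch below works on plain dictionaries shaped like service definitions; the `serviceName`, `minInstancesPerNode` and `maxInstancesPerNode` keys are assumed to match what your ArcGIS Server administration tooling reports and should be verified against your release. The function name, the remarks and the sample services are hypothetical.

```python
PUBLISHING_DEFAULT_MAX = 2  # default maximum mentioned in the note above


def review_instances(services, cpu_cores):
    """Return remarks about min/max instance settings, keyed by service name."""
    notes = {}
    for svc in services:
        low = svc["minInstancesPerNode"]
        high = svc["maxInstancesPerNode"]
        remarks = []
        if high <= PUBLISHING_DEFAULT_MAX:
            remarks.append("max at or below publishing default")
        if high > cpu_cores:
            remarks.append("max exceeds CPU cores")
        if low == high:
            remarks.append("min equals max (predictable performance)")
        notes[svc["serviceName"]] = remarks
    return notes


# Hypothetical dedicated services on a machine with 8 CPU cores.
services = [
    {"serviceName": "Parcels", "minInstancesPerNode": 1, "maxInstancesPerNode": 2},
    {"serviceName": "Roads", "minInstancesPerNode": 8, "maxInstancesPerNode": 8},
    {"serviceName": "Imagery", "minInstancesPerNode": 2, "maxInstancesPerNode": 12},
]
for name, remarks in review_instances(services, cpu_cores=8).items():
    print(name, remarks)
```

The remarks are prompts for a closer look, not verdicts; a lightly used service may be perfectly fine with a maximum of 2.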

Focus the Map

Optimizing the map is not a new strategy but a relatively easy one to follow for getting better performance from your deployment.

When the map is focused on its primary purpose and presentation, the system does not have to do unnecessary work. Remember, the web is a multiuser platform. Making the display of the dynamic data as streamlined as possible is key to good performance (and scalability). With potentially many requests occurring at the same time, the content being shared needs to be as efficient as possible.

Map Strategies

- Choose an Optimal Default Extent

- If the map is providing data on Los Angeles, the default extent should not be showing all of California

- If many different map scales are required, use scale dependencies and generalization to limit the detail to where you need it most (e.g., the largest scales)

- Remove unneeded layers

- Consider removing nice-to-have data layers

- At the very least, turn them off by default and have users opt in to enable them

- Purposely limit what users can do with a service

- Avoid projecting on the fly

- Use the same coordinate system for data frame and data

- Definition queries

- Ensure indexes are in place if comparison logic is applied to attribute columns

Software Releases

A particular version of ArcGIS Enterprise (and its related solutions) can have a handful of patches after its initial base release. These patches can offer performance improvements as well as functionality and security fixes.

It is highly recommended to periodically check the Esri Patches and Updates site or run the "Check for ArcGIS Enterprise Updates" tool. Then, apply the updates at the appropriate time.

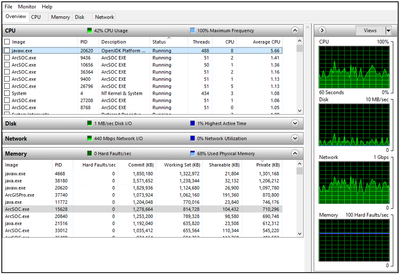

Resource Contention and Expansion

There are times when best practices and strategies for performance are applied but lower response times and higher scalability are still required. Perhaps the current hardware running ArcGIS Server is simply exhausted, and processing power or memory has become the bottleneck for improving the user experience.

For such situations, you need to consider expansion and/or upgrading the hardware.

Scalability

- Scaling up

- Adding more resources to the existing machine (e.g., additional processing cores)

- Additional memory can also assist with scaling by allowing the deployment to have more ArcSOC instances running concurrently

- Ideal for deployments such as: cloud, virtualization, Kubernetes

- Scaling out

- Adding more machines of equal resource capacity

- Ideal for deployments such as: on-premise, cloud, virtualization, Kubernetes

Performance

To improve performance with hardware, there is typically just one option:

- Obtaining faster processing cores

- Ideal for deployments such as: cloud, Kubernetes

The system's paging configuration can also impact scalability even when ample memory resources have been added to a system. Although this is an operating system setting and not hardware, it can play a crucial part in running many concurrent ArcSOC instances. Be sure to size it to handle a workload with many instances or instances with a large memory footprint.
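A back-of-the-envelope estimate can help decide whether memory (and the paging configuration behind it) is sized for the planned number of instances. The sketch below multiplies each service's maximum instance count by an observed per-instance footprint. Every name and number is hypothetical, per-instance footprints must be measured on your own system, and the sketch does not model how the operating system actually pages memory.

```python
def peak_arcsoc_memory_mb(services):
    """Estimate memory (MB) if every service runs its maximum instances.

    services: iterable of (name, max_instances, mb_per_instance) tuples.
    """
    return sum(max_instances * mb_per_instance
               for _name, max_instances, mb_per_instance in services)


def fits_in_memory(peak_mb, physical_mb, reserve_mb):
    """Check the estimate against physical memory, less a reserve for the OS."""
    return peak_mb + reserve_mb <= physical_mb


# Hypothetical services: (name, maximum instances, observed MB per instance).
planned = [("Parcels", 8, 250), ("Roads", 4, 150)]
peak = peak_arcsoc_memory_mb(planned)
print(peak, fits_in_memory(peak, physical_mb=8192, reserve_mb=4096))
```

If the estimate does not fit, the options from this section apply: add memory, lower the maximums, or move some services to another machine.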

There can be situations where performance is limited and appears to be CPU bound (e.g., a bottleneck due to limited processing power). Further inspection may reveal the "culprit" to be one or more slow queries that were suboptimal to begin with, or an expensive operation called too frequently (for example, through a periodic administrative task). In such a case, it may be more effective to address the "bad" queries to improve performance and scalability.

Note: These expansion strategies are for overcoming general processing and memory limitations. However, disk and network (bandwidth, latency) resources can also be bottlenecks. In some environments, these can be more complicated to expand and may require additional steps to upgrade.

A General Approach to Scaling

How much should you scale: two servers, five servers? Without details on average response times and the anticipated number of users to support, it’s difficult to provide a precise answer.

However, a simplistic, general approach would be to just try doubling the current amount (memory and/or physical processing cores) and observing the impact.

For many cases, this probably works well up to 16 CPUs. At that point, adding another machine may be more advantageous.

Observability

"Quantifying ArcGIS" is a great strategy...a personal favorite. It defines what resources were being requested and how fast were the responses to fulfill these requests. It is important for obtaining an understanding of general system performance. If system resource utilization can also be captured, the analysis can be further elevated.

Once analysis has been conducted for a deployment, reports can be typically generated that highlight which map services might be of interest due to:

- The observed response times being slower than expected

- The number of requests issued for the resource

- Both response time and number of requests

For an ArcGIS Site with many services, knowing which ones are statistically slow or are consuming the most resources helps focus tuning efforts. With such reports, the GIS administrator has turned data into valuable information and is now better informed when making decisions for improving the user experience. That said, examining logs and statistics is just one (important) slice of the analysis pie.
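As a minimal sketch of such a report, the code below groups response times by service and ranks the services by total time spent, which reflects both how slow a service is and how often it is requested. The function name, the record layout and the sample data are hypothetical; in practice the records would come from a log analysis tool or a statistics export.

```python
from collections import defaultdict


def rank_services(records):
    """Rank services by total time spent serving requests, highest first.

    records: iterable of (service_name, response_time_s) tuples.
    """
    stats = defaultdict(lambda: {"requests": 0, "total_s": 0.0})
    for name, elapsed_s in records:
        stats[name]["requests"] += 1
        stats[name]["total_s"] += elapsed_s
    report = [
        {
            "service": name,
            "requests": s["requests"],
            "avg_s": s["total_s"] / s["requests"],
            "total_s": s["total_s"],
        }
        for name, s in stats.items()
    ]
    # Total time combines response time and request count in one number.
    report.sort(key=lambda row: row["total_s"], reverse=True)
    return report


# Hypothetical log records: (service name, response time in seconds).
log_records = ([("Parcels", 2.0)] * 3 + [("Roads", 0.5)] * 10
               + [("Imagery", 4.0)])
for row in rank_services(log_records):
    print(row)
```

In this sample, Imagery has the slowest individual response but ranks last, because it was requested only once; sorting by `avg_s` or `requests` instead gives the other two views from the list above.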

A Challenge with Common Observability Tools

Many tools for system observability and monitoring focus the analysis on requests and responses for services. This is a good approach and definitely assists administrators with quantifying ArcGIS, but it can have a limitation. The limitation can appear with an assumption that a slow map service can be "fixed" by simply adding processing cores. More cores might improve some aspects of the situation, but it is recommended that the service be examined (or even reexamined) in more depth before more resources are obtained.

This goes back to the "Focus the Map" section, for example:

- Ensure the data for the service is not being shown at too small a scale

- Avoid suboptimal queries

Detailed query analysis can help show the occurrence of these behaviors that might get masked with general service reporting. However, while the breakdown of service request parameters and the underlying queries can improve the analysis, it can add complexity to the reporting itself (e.g., more time to execute, more views to look at, and more effort to understand those views). Additionally, not all observability tools perform this type of inspection.

Some recent efforts that are gaining traction are attempting to tackle this issue. They are based on a bottom-up approach to analysis where the starting point is the underlying database queries themselves, through a mechanism known as "query datastore". Query datastore analysis is powerful and does not impact database performance the way a trace can, but it does require some knowledge of the queries themselves and their purpose. Look to this type of analysis capability in the future to help get the most from your observability tools.

Conclusion

There is no single item to easily adjust for boosting performance and scalability of an ArcGIS Enterprise Site. However, this Article lists some common strategies that can be applied together for improving it. It is also important to understand these are items that should be periodically revisited and acted upon. User habits change over time as does the popularity of a web application or service. Resources that were assigned to a particular service can be reevaluated or reduced to make room for the next featured item in your Site.

ArcGIS performance analysis can be fun, but it is also a continuous effort for maintaining the best user experience.

Attribution

Resource: File:Grayson_running_the_4x100.jpg

Description: English:Grayson running the first leg of the 4x100 at the 2010 Tigered invite

Author: Graysonbay

Created: 02:02, 29 November 2010

License: This file is licensed under the Creative Commons Attribution 3.0 Unported license

Resource: File:Kurvimeter_1_fcm.jpg

Author: Frank C. Müller, Baden-Baden

License: This file is licensed under the Creative Commons Attribution-Share Alike 4.0 International license.

Resource: File:My_Opera_Server.jpg

Description: A server used for the My Home

Author: William Viker, william.viker@gmail.com (c) 2006

License: The copyright holder of this file allows anyone to use it for any purpose, provided that the copyright holder is properly attributed. Redistribution, derivative work, commercial use, and all other use is permitted.

Resource: File:Samsung-1GB-DDR2-Laptop-RAM.jpg

Description: A 1 gigabyte stick of DDR2 667 MHz (PC2-5300) laptop RAM, made by Samsung and pulled from a 2007 MacBook laptop.

Author: Evan-Amos

Created: 1 August 2018

License: Public Domain
