Intro

The Applications Prototype Lab was asked to create an app that would collect and record cellular signal strength at various locations around the new Jack and Laura Dangermond Preserve. Two of us did just that: my colleague Al Pascual wrote the iOS version, and I wrote the Android version.

Though we used very different approaches, some of them dictated by the differences between the two mobile platforms, the app performs the same basic function on each operating system. It gathers the device's location and the strength of its cellular connection at specified time and distance intervals, and saves those observations to a feature service layer hosted on ArcGIS Online.

Once the app was done, we realized that it could be adapted to collect more than just cell signal strength; it can save just about anything a mobile device is capable of detecting. So we’re making the source available to those interested in modifying it.

Note: it’s built to save results to a feature layer hosted on ArcGIS.com. Unless you want to modify it to save to a different back-end storage mechanism, you’ll need to create and publish your own hosted feature layer in your ArcGIS Online organization.

Preparation: Create a hosted feature layer to hold the results

You'll need a hosted feature layer to hold the collected data. We’ve provided an empty template database to hold location, cell signal strength, and a few device details.

- Download the template file geodatabase here: https://www.arcgis.com/home/item.html?id=a6ea4b56e9914f82a2616685aef94ec0

- Follow the instructions to publish it here: https://doc.arcgis.com/en/arcgis-online/share-maps/publish-features.htm#ESRI_SECTION1_F878B830119B44...

iOS: how to use it

1. Requirements

- Fork and then clone the repo. Don't know how? Get started here.

- Build and run the project to create a single app containing all of the samples.

2. Settings

Go to the device's Settings and find the CellSignal app in the list. There you can enter the address of the feature service layer you've created and hosted. The User ID and password settings are for using secured services.

3. Features

The app needs to be running in the foreground to work. It measures the cell coverage and sends that information to your feature service or, when offline, stores it on the device until the connection to the feature service is restored. The user does not need to interact with the app, but does need to make sure it stays in the foreground.

The chart will show a historical view of the measurements. The scale is from 0 to 4, depending on the cell bars received. A custom map can show the intended extent as well as a simple rendering of the data.

To change how we capture the cell service information, please refer to this function.

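    // Requires UIKit. isiPhoneX() and getSignalStrengthiPhoneX() are defined elsewhere in the project.
    // Reads the bar count from undocumented status bar views, so it is tied to the iOS version named in the function.
    private func getSignalStrengthiOS11() -> Int {
        let application = UIApplication.shared
        if let statusBarView = application.value(forKey: "statusBar") as? UIView {
            for subview in statusBarView.subviews {
                if isiPhoneX() {
                    // The iPhone X status bar is handled by its own function.
                    return getSignalStrengthiPhoneX()
                } else {
                    if subview.classForKeyedArchiver.debugDescription ==
                        "Optional(UIStatusBarForegroundView)" {
                        for subview2 in subview.subviews {
                            // Conditional cast instead of a forced cast, so an unexpected value cannot crash the app.
                            if subview2.classForKeyedArchiver.debugDescription ==
                                "Optional(UIStatusBarSignalStrengthItemView)",
                               let bars = subview2.value(forKey: "signalStrengthBars") as? Int {
                                return bars
                            }
                        }
                    }
                }
            }
        }
        return 0 // NO SERVICE
    }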

4. Source code

For more information and for the source code, see the GitHub repository here:

https://github.com/Esri/CellSignal

Android: how to use it

1. Requirements

- Android Studio 3+

- An Android device with GPS hardware, running Android 4.3 (Jelly Bean, API level 18) or above

2. Installing and sideloading

This app will run on devices that are running Android 4.3 (the last version of "Jelly Bean") or above. It will only run on Google versions of Android, not on proprietary versions such as the one on Amazon Kindle Fire devices. If your device meets the version requirement and has the Google Play Store app, you should be able to run this. (Oh, you'll need a functional cell plan as well.)

One way to run the app is to build and run the source code in Android Studio and deploy it to a device connected to the development computer.

If you don’t want to build it, you can download the precompiled .apk available in the GitHub releases section; you'll need to install this app through an alternative process called "sideloading".

3. Settings

Tap the Feature Service URL item and enter the address of the feature service layer you've created and hosted. There are two settings affecting the logging frequency. You can set a distance between readings in meters and you can set a time between readings in seconds. Readings will be taken no more often than the combination of these settings. For example, a setting of ten meters and ten seconds means that the next reading won't be taken until the user has moved at least ten meters and at least ten seconds have passed. If you want to only limit readings by distance, you can set the seconds to zero. Please don't set both time and distance to zero.
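The interplay of the two frequency settings can be sketched in a few lines of Java. This is only an illustration of the rule described above, not the app's actual code: the class name `ReadingGate`, its method names, and the use of a haversine distance are all assumptions made for the example.

```java
/** Hypothetical helper: decides whether a new reading is due. Not the app's actual code. */
final class ReadingGate {
    private static final double EARTH_RADIUS_M = 6_371_000.0;

    private final double minDistanceMeters;
    private final long minSeconds;
    private boolean hasLast = false;
    private double lastLat, lastLon;
    private long lastTimeSec;

    ReadingGate(double minDistanceMeters, long minSeconds) {
        this.minDistanceMeters = minDistanceMeters;
        this.minSeconds = minSeconds;
    }

    /** Returns true (and remembers the fix) only when BOTH thresholds have been met. */
    boolean shouldLog(double lat, double lon, long timeSec) {
        if (hasLast) {
            boolean farEnough = distanceMeters(lastLat, lastLon, lat, lon) >= minDistanceMeters;
            boolean lateEnough = (timeSec - lastTimeSec) >= minSeconds;
            if (!(farEnough && lateEnough)) return false;
        }
        // The first fix is always logged; later fixes only when both tests pass.
        hasLast = true;
        lastLat = lat;
        lastLon = lon;
        lastTimeSec = timeSec;
        return true;
    }

    /** Great-circle (haversine) distance between two WGS84 points, in meters. */
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                 + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                 * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }
}
```

In this sketch, a gate built with `new ReadingGate(10, 0)` limits readings by distance alone, and one built with both values at zero would accept every location fix it is given, which hints at why setting both to zero is discouraged.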

The User ID and password settings are for using secured services. If you are using your own ArcGIS Enterprise Portal (not ArcGIS.com), and you want to log to a secured service, you'll need to enter your own portal's token generator URL into the Token Generator Service URL setting.

Start logging by tapping the switch control at the top of the settings page. You should see a fan-shaped icon (a little like the wifi icon) in the notification bar. That tells you that the app is logging readings in the background.

It will continue logging until you tap the switch control again to turn logging off. The notification item also displays the number of unsynchronized local records. As the app gathers new readings, it will update a chart on the main activity showing the last fifteen signal strength readings.

You can turn the screen off or use other apps during logging, since it runs as a background service. An easy way to get back to the settings screen is to pull down the notification bar and tap the logger notification item. Features are logged to a local database, and then sent to the feature service when the internet is available.
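To give a rough idea of what a logged reading looks like when it reaches the feature service: the ArcGIS REST API's addFeatures operation on a feature layer accepts a JSON array of features, each with a geometry and a set of attributes. The Java sketch below builds one such feature. The class name `ObservationJson` and the attribute name `signal_bars` are hypothetical (the template's real field names may differ), and the app itself may go through the ArcGIS Runtime SDK rather than raw REST calls.

```java
import java.util.Locale;

/** Hypothetical helper; the attribute name "signal_bars" is an assumption, not the template's schema. */
final class ObservationJson {
    /** Builds one feature (a WGS84 point plus attributes) as an Esri JSON string. */
    static String toFeature(double lat, double lon, int bars) {
        // Locale.ROOT keeps the decimal separator a period regardless of the device locale.
        return String.format(Locale.ROOT,
            "{\"geometry\":{\"x\":%.6f,\"y\":%.6f,\"spatialReference\":{\"wkid\":4326}},"
            + "\"attributes\":{\"signal_bars\":%d}}",
            lon, lat, bars);
    }
}
```

One or more of these strings, wrapped in square brackets, would form the `features` parameter of a POST to the layer's addFeatures endpoint, alongside `f=json`.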

4. Synchronization

There are three events that cause a synchronization:

- When the synchronization interval has elapsed (there is a setting for this interval, in minutes);

- When internet connectivity has been lost and then restored;

- When the logging switch is turned off.
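The three triggers listed above can be modeled as a small decision class. The Java below is a sketch under stated assumptions, not the app's implementation: `SyncPolicy` and its method names are invented for illustration, and it assumes that any sync restarts the interval timer, which the description above does not confirm.

```java
/** Hypothetical sketch of the three sync triggers; names are illustrative, not the app's code. */
final class SyncPolicy {
    private final long intervalMillis;
    private long lastSyncMillis;
    private boolean connected;

    SyncPolicy(int intervalMinutes, long nowMillis, boolean connected) {
        this.intervalMillis = intervalMinutes * 60_000L;
        this.lastSyncMillis = nowMillis;
        this.connected = connected;
    }

    /** Trigger 1: the configured number of minutes has passed since the last sync. */
    boolean onTimerTick(long nowMillis) {
        if (nowMillis - lastSyncMillis < intervalMillis) return false;
        lastSyncMillis = nowMillis;
        return true;
    }

    /** Trigger 2: connectivity went from lost to restored. */
    boolean onConnectivityChanged(boolean nowConnected, long nowMillis) {
        boolean restored = !connected && nowConnected;
        connected = nowConnected;
        if (restored) lastSyncMillis = nowMillis; // assumption: a sync restarts the timer
        return restored;
    }

    /** Trigger 3: the logging switch was turned off. */
    boolean onLoggingSwitched(boolean loggingOn, long nowMillis) {
        if (loggingOn) return false;
        lastSyncMillis = nowMillis; // assumption: a sync restarts the timer
        return true;
    }
}
```

Each method returns `true` when the caller should start a synchronization, so the same policy object could be driven from a timer, a connectivity callback, and the switch handler.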

5. Source code

For more information and for the source code, see the GitHub repository here:

https://github.com/markdeaton/SignalStrengthLogger-android/

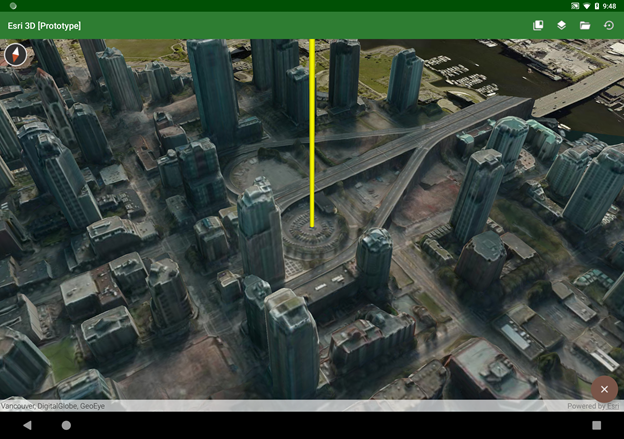

Once the camera moves down into the scene, the floating action button becomes a return button, which will take the camera back to its original point in space.

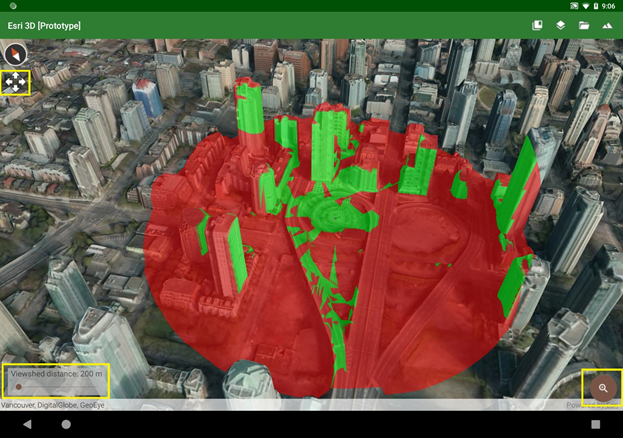

Once the camera moves down into the scene, the floating action button becomes a return button, which will take the camera back to its original point in space. There’s also a slider in the lower left of the screen which lets you interactively change the viewshed distance. You can use it to explore different visibility scenarios in different scenes; you might want to use a smaller value for dense urban areas or a much larger value for unimpeded rural landscapes.

There’s also a slider in the lower left of the screen which lets you interactively change the viewshed distance. You can use it to explore different visibility scenarios in different scenes; you might want to use a smaller value for dense urban areas or a much larger value for unimpeded rural landscapes.

You can stop the rotation by tapping anywhere on the display; then you can tap or drag a new point to start again. This tool uses the SDK’s

You can stop the rotation by tapping anywhere on the display; then you can tap or drag a new point to start again. This tool uses the SDK’s