Why isn't my model iterating for all features (2 iterators using sub models)

I have built a Model (with 2 sub-models) that is meant to apply the following logic when preparing data for use in Network Analysis route solving (Stops):

- 2 input point feature classes (Origin points and Destination points)

- For each Origin (e.g. A/B/C/D) and Destination (e.g. W/X/Y/Z) point combination, generate a Route Name (e.g. A_W, A_X, A_Y, A_Z). Each Origin point goes to many Destinations, so it needs to be copied once per Destination, with a unique Route Name applied to each copy that matches the Route Name applied to the corresponding Destination point.

- Append all Origin and Destination points to an output feature class (Stops)

The final table will have what was originally 4 Origins copied in 4 times each with the unique route name to each Destination, plus each destination point copied in 4 times to pair with each origin location (A_W, A_X, A_Y, A_Z, B_W, B_X, ..., D_Y, D_Z).
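To make the target output concrete, here is a minimal Python sketch of the pairing logic on plain lists. It is only an illustration of the expected result, not the ModelBuilder workflow itself; the function name `build_stops` and the `(stop, role, route_name)` row layout are assumptions made for this example.

```python
from itertools import product


def build_stops(origins, destinations):
    """Return (stop, role, route_name) rows: two rows per origin/destination pair."""
    stops = []
    for origin, destination in product(origins, destinations):
        route_name = f"{origin}_{destination}"
        # Each route gets one copy of its origin and one copy of its destination.
        stops.append((origin, "origin", route_name))
        stops.append((destination, "destination", route_name))
    return stops


origins = ["A", "B", "C", "D"]
destinations = ["W", "X", "Y", "Z"]

stops = build_stops(origins, destinations)
route_names = sorted({row[2] for row in stops})
```

With 4 Origins and 4 Destinations this gives 16 route names and 32 stop rows, which is what the output feature class should contain once every Origin has been processed.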

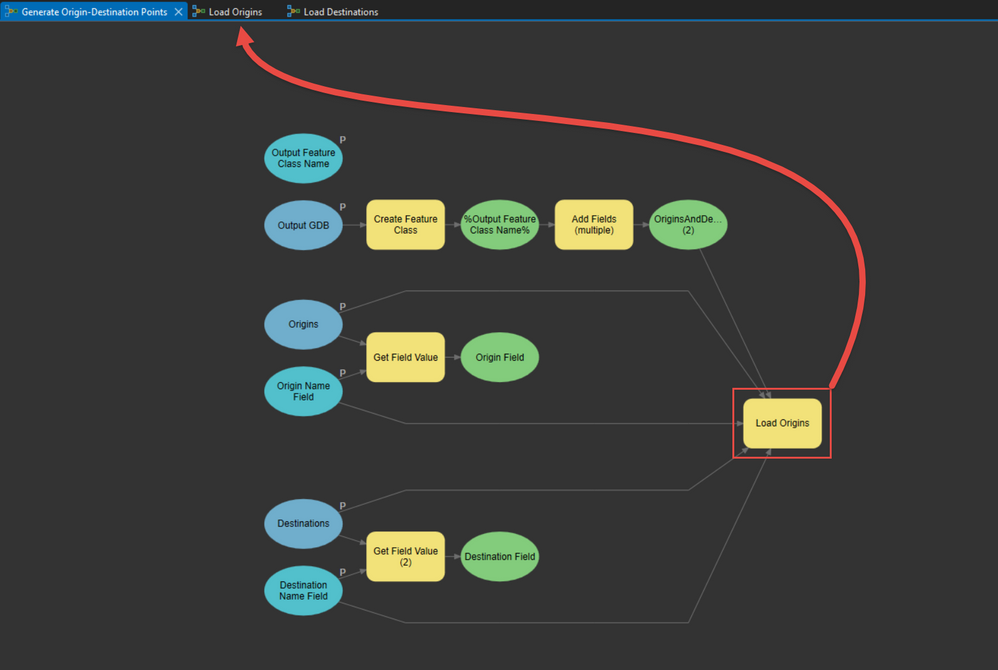

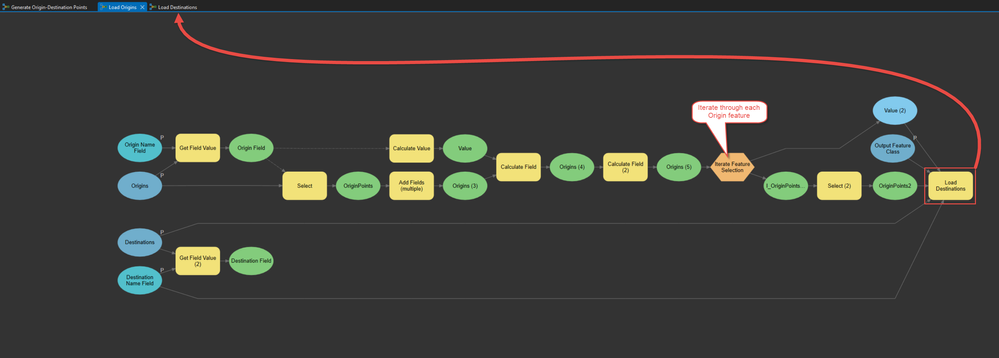

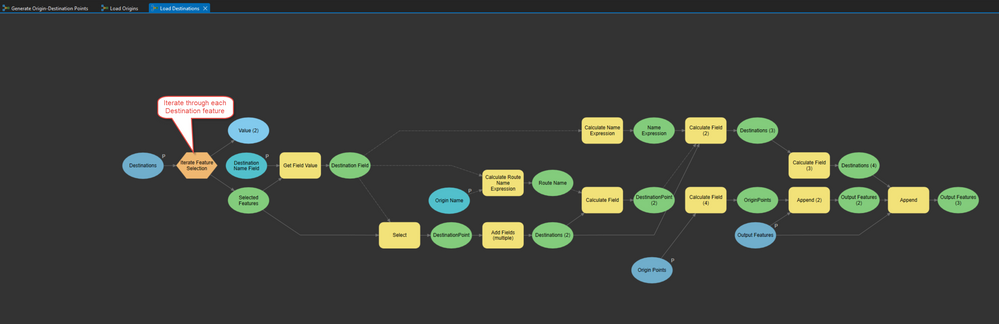

Graphics below show the main model which has input parameters for selecting the Origin and Destination feature classes, then a sub-model which iterates through each Origin location, and that has within it another sub-model which iterates through each Destination location to achieve the required value creation and data copying.

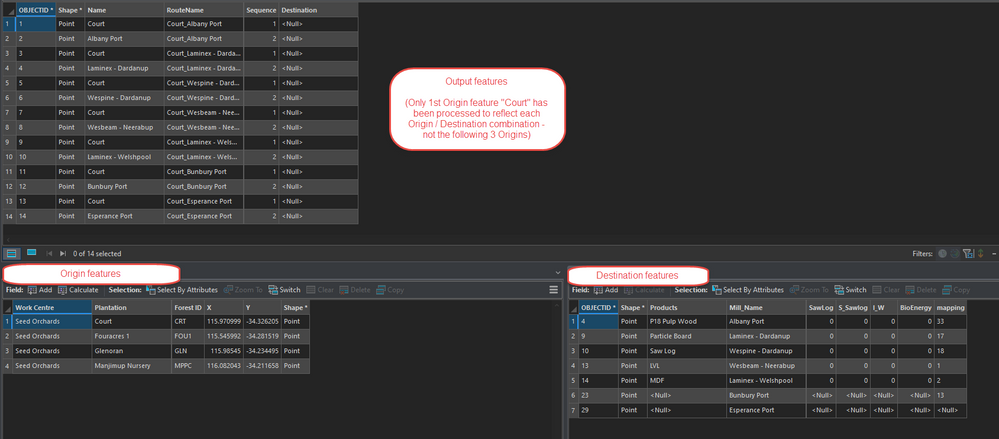

The issue I've found is that whilst the model works as expected for the first Origin point, generating unique Origin/Destination combinations for all destinations, it doesn't then continue on to do the same for the next Origin location (the point of the first sub-model/iterator), resulting in the output FC only having a portion of the data required.

Sorry if this is very wordy, but couldn't figure out how to write it any more clearly. Ultimately, I'm trying to create the required data for generating multiple routes in one hit using Network Analyst.

GIS Officer

Forest Products Commission WA

No ideas? Suggestions?

I tried moving the steps that precede the iteration in the 1st sub-model into the main model to reduce unnecessary processing. No discernible difference on my sample dataset (same results).

I also tried using Collect Values on the iterator outputs to pass back to the main model, but I just ended up with 14 copies of the last point processed.

GIS Officer

Forest Products Commission WA

So in lieu of finding a solution to this direct issue (of nested submodels and iterators), I came up with an alternate plan (that I believe works better anyway). I have now separated my model into the following 3 sections:

- Main Model (selects input data and parameters, fills out basic field calculations and gets the initial count of records from my 2 input datasets)

| OID | Dataset 1 | OID | Dataset 2 |
| --- | --- | --- | --- |
| 1 | Bunbury | 1 | Perth |
| 2 | Collie | 2 | Busselton |
| 3 | Manjimup | 3 | Kojonup |
| 4 | Albany | 4 | Geraldton |
| | | 5 | Kalgoorlie |
| | Count = 4 | | Count = 5 |

- Sub-Model 1 (inside Main Model), which replicates the first dataset to A × B records (e.g. A = 4 and B = 5 gives 20) by repeatedly appending a copy of the input dataset to itself until it has 20 total records (5 times in this example).

| OID | Dataset 1 |
| --- | --- |
| 1 | Bunbury |
| 2 | Collie |
| 3 | Manjimup |
| 4 | Albany |
| 5 | Bunbury |
| 6 | Collie |
| 7 | Manjimup |
| 8 | Albany |
| 9 | Bunbury |
| 10 | Collie |
| 11 | Manjimup |
| 12 | Albany |
| 13 | Bunbury |
| 14 | Collie |
| 15 | Manjimup |
| 16 | Albany |
| 17 | Bunbury |
| 18 | Collie |
| 19 | Manjimup |
| 20 | Albany |
| | Count = 20 |

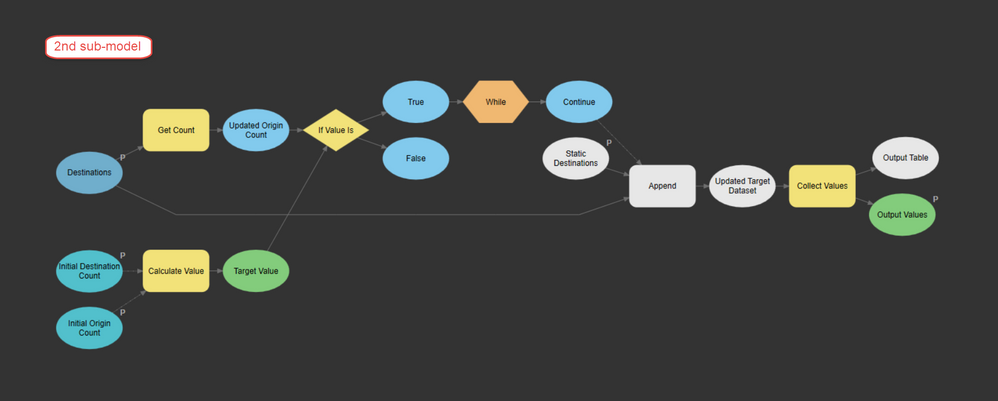

- Sub-Model 2 (also inside Main Model, NOT inside Sub-Model 1 as in my first attempt). This does the same as Sub-Model 1 but on the second dataset, appending a copy of the 2nd dataset to the input dataset until it reaches 20 records in total (4 times in this example).

| OID | Dataset 2 |
| --- | --- |
| 1 | Perth |
| 2 | Busselton |
| 3 | Kojonup |
| 4 | Geraldton |
| 5 | Kalgoorlie |
| 6 | Perth |
| 7 | Busselton |
| 8 | Kojonup |
| 9 | Geraldton |
| 10 | Kalgoorlie |
| 11 | Perth |
| 12 | Busselton |
| 13 | Kojonup |
| 14 | Geraldton |
| 15 | Kalgoorlie |
| 16 | Perth |
| 17 | Busselton |
| 18 | Kojonup |
| 19 | Geraldton |
| 20 | Kalgoorlie |
| | Count = 20 |
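The replication done by the two sub-models can be sketched in plain Python as below. This is an illustration on lists rather than feature classes, and the helper name `replicate` is hypothetical; in the model the same effect comes from repeatedly appending the input dataset until the record count reaches the target.

```python
def replicate(records, target_count):
    """Append whole copies of records until the result holds target_count rows."""
    if not records or target_count % len(records) != 0:
        raise ValueError("target_count must be a positive multiple of len(records)")
    replicated = []
    while len(replicated) < target_count:
        # One pass of the loop corresponds to one append of the input dataset.
        replicated.extend(records)
    return replicated


dataset_1 = ["Bunbury", "Collie", "Manjimup", "Albany"]
dataset_2 = ["Perth", "Busselton", "Kojonup", "Geraldton", "Kalgoorlie"]

# Target is the product of the two record counts (4 * 5 = 20).
target = len(dataset_1) * len(dataset_2)

replicated_1 = replicate(dataset_1, target)  # 5 copies of dataset 1
replicated_2 = replicate(dataset_2, target)  # 4 copies of dataset 2
```

Both outputs end up with the same number of rows, which is what makes the later ObjectID join line up one-to-one.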

The main model then sorts the replicated dataset output by Sub-Model 1 on a name field, which groups all spatially colocated features together in the table and assigns new ObjectIDs.

| OID | Unsorted | >>>>> | OID | Sorted |
| --- | --- | --- | --- | --- |
| 1 | Bunbury | | 1 | Albany |
| 2 | Collie | | 2 | Albany |
| 3 | Manjimup | | 3 | Albany |
| 4 | Albany | | 4 | Albany |
| 5 | Bunbury | | 5 | Albany |
| 6 | Collie | | 6 | Bunbury |
| 7 | Manjimup | | 7 | Bunbury |
| 8 | Albany | | 8 | Bunbury |
| 9 | Bunbury | | 9 | Bunbury |
| 10 | Collie | | 10 | Bunbury |
| 11 | Manjimup | | 11 | Collie |
| 12 | Albany | | 12 | Collie |
| 13 | Bunbury | | 13 | Collie |
| 14 | Collie | | 14 | Collie |
| 15 | Manjimup | | 15 | Collie |
| 16 | Albany | | 16 | Manjimup |
| 17 | Bunbury | | 17 | Manjimup |
| 18 | Collie | | 18 | Manjimup |
| 19 | Manjimup | | 19 | Manjimup |
| 20 | Albany | | 20 | Manjimup |

It then joins the sorted dataset to the replicated 2nd dataset by ObjectID. Because of this join, each copy of a record within a group in dataset 1 gets a unique matching record from dataset 2. Field calculations are then run to preserve the attributes from dataset 2, the join is removed, and the final data is exported to a new feature class.

| OID | Dataset 1 | Dataset 2 (joined) |
| --- | --- | --- |
| 1 | Albany | Perth |
| 2 | Albany | Busselton |
| 3 | Albany | Kojonup |
| 4 | Albany | Geraldton |
| 5 | Albany | Kalgoorlie |
| 6 | Bunbury | Perth |
| 7 | Bunbury | Busselton |
| 8 | Bunbury | Kojonup |
| 9 | Bunbury | Geraldton |
| 10 | Bunbury | Kalgoorlie |
| 11 | Collie | Perth |
| 12 | Collie | Busselton |
| 13 | Collie | Kojonup |
| 14 | Collie | Geraldton |
| 15 | Collie | Kalgoorlie |
| 16 | Manjimup | Perth |
| 17 | Manjimup | Busselton |
| 18 | Manjimup | Kojonup |
| 19 | Manjimup | Geraldton |
| 20 | Manjimup | Kalgoorlie |
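As a sanity check on the sort-then-join approach, the Python sketch below reproduces it on plain lists and compares the result with a direct cross product. It assumes the names in dataset 1 are unique and stands in for the ObjectID join with a positional `zip`; it is not the ModelBuilder tool chain itself.

```python
from itertools import product

dataset_1 = ["Bunbury", "Collie", "Manjimup", "Albany"]
dataset_2 = ["Perth", "Busselton", "Kojonup", "Geraldton", "Kalgoorlie"]

# Replicate each dataset to len(dataset_1) * len(dataset_2) rows.
replicated_1 = dataset_1 * len(dataset_2)
replicated_2 = dataset_2 * len(dataset_1)

# Sorting by name groups the copies of each dataset 1 record together.
sorted_1 = sorted(replicated_1)

# Pairing rows by position plays the role of the join on the new ObjectIDs.
joined = list(zip(sorted_1, replicated_2))

# Every origin/destination combination should appear exactly once.
expected = set(product(dataset_1, dataset_2))
is_full_cross_product = set(joined) == expected and len(joined) == len(expected)
```

The pairing works because each sorted group holds exactly `len(dataset_2)` rows while dataset 2 repeats with the same period, so every group sees each dataset 2 record once.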

Another small tip - use "memory" as the output location for working data instead of a .gdb (including %scratchgdb%) - it works so much faster!

GIS Officer

Forest Products Commission WA