
Overwrite ArcGIS Online Feature Service using Truncate and Append


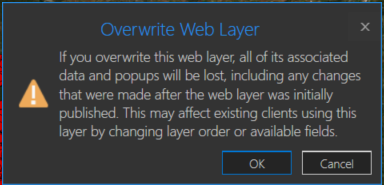

You may need to overwrite an ArcGIS Online hosted feature service because of feature and/or attribute updates. However, overwriting can discard settings stored on the hosted feature service, such as pop-ups and symbology changes. For example, ArcGIS Pro displays a warning about this when you try to overwrite a feature service.

One way around this is to use the ArcGIS API for Python. If you are the data owner or an Administrator, you can truncate the feature service and then append data, which is essentially an overwrite of the feature service. The script below does this: you specify a local feature class and the item id of the feature service you wish to update, and the script then executes the following steps:

- export the feature class to a temporary File Geodatabase

- zip the File Geodatabase

- upload the zipped File Geodatabase to AGOL

- truncate the feature service

- append the zipped File Geodatabase to the feature service

- delete the uploaded zipped File Geodatabase in AGOL

- delete the local zipped File Geodatabase

- delete the temporary File Geodatabase

Here is an explanation of the script variables:

- username = ArcGIS Online username

- password = ArcGIS Online password

- fc = path to feature class used to update feature service

- fsItemId = the item id of the ArcGIS Online feature service

- featureService = True if updating a Feature Service, False if updating a Hosted Table

- hostedTable = True if updating a Hosted Table, False if updating a Feature Service

- layerIndex = feature service layer index

- disableSync = True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

- updateSchema = True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

- upsert = True will not truncate the feature service, requires a field with unique values

- uniqueField = Field that contains unique values

Note: For this script to work, the field names in the feature class must match the field names in the hosted feature service. The hosted feature service can have additional fields, though.
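The field-name requirement in the note above can be checked before running the update. The following is a minimal sketch, not part of the original script: `missing_service_fields` and the `skip` default are hypothetical names, and it assumes an exact, case-sensitive name match. In practice the two lists would come from `arcpy.ListFields(fc)` and `fLyr.manager.properties.fields`, as the script does.

```python
def missing_service_fields(fc_fields, service_fields,
                           skip=("OBJECTID", "Shape", "Shape_Length", "Shape_Area")):
    """Return feature class field names that are absent from the feature service.

    fc_fields / service_fields are plain lists of field names (assumption:
    names are compared exactly, including case). System fields listed in
    `skip` are ignored.
    """
    service_names = set(service_fields)
    return sorted(
        name for name in fc_fields
        if name not in service_names and name not in skip
    )


# Illustrative field lists only; real values would be read from the data.
fc_fields = ["OBJECTID", "Shape", "PIN", "Owner", "Acres"]
service_fields = ["OBJECTID", "PIN", "Owner", "Notes"]
print(missing_service_fields(fc_fields, service_fields))
```

An empty result means every non-system field in the feature class has a counterpart in the service; extra fields that exist only in the service (such as `Notes` here) are allowed.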


Script

import arcpy, os, time, uuid, arcgis

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Variables

username = "jskinner_rats" # AGOL Username

password = "********" # AGOL Password

fc = r"c:\DB Connections\GIS@PLANNING.sde\GIS.Parcels" # Path to Feature Class

fsItemId = "a0ad52a76ded483b82c3943321f76f5a" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True if updating a Hosted Table, False if updating a Feature Service

layerIndex = 0 # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = True # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

upsert = True # True will not truncate the feature service, requires a field with unique values

uniqueField = 'PIN' # Field that contains unique values

# Environment Variables

arcpy.env.overwriteOutput = True

arcpy.env.preserveGlobalIds = True

def zipDir(dirPath, zipPath):
    '''
    Zips File Geodatabase

    Args:
        dirPath: (string) path to File Geodatabase
        zipPath: (string) path to where File Geodatabase zip file will be created

    Returns:
    '''
    zipf = ZipFile(zipPath, mode='w')
    gdb = os.path.basename(dirPath)
    for root, _, files in os.walk(dirPath):
        for file in files:
            # Skip geodatabase lock files
            if 'lock' not in file:
                filePath = os.path.join(root, file)
                zipf.write(filePath, os.path.join(gdb, file))
    zipf.close()

def updateFeatureServiceSchema():
    '''
    Updates the hosted feature service schema

    Returns:
    '''
    # Get required fields to skip
    requiredFields = [field.name for field in arcpy.ListFields(fc) if field.required]

    # Get feature service fields
    print("Get feature service fields")
    featureServiceFields = {}
    for field in fLyr.manager.properties.fields:
        if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:
            featureServiceFields[field.name] = field.type

    # Get feature class/table fields (the same logic applies to feature classes and hosted tables)
    print("Get feature class/table fields")
    featureClassFields = {}
    arcpy.env.workspace = gdb
    for field in arcpy.ListFields(fc):
        if field.name not in requiredFields:
            featureClassFields[field.name] = field.type

    minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)
    addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

    # Delete removed fields
    if len(minusSchemaDiff) > 0:
        print("Deleting removed fields")
        for key in minusSchemaDiff:
            print(f"\tDeleting field {key}")
            remove_field = {
                "name": key,
                "type": featureServiceFields[key]
            }
            update_dict = {"fields": [remove_field]}
            fLyr.manager.delete_from_definition(update_dict)

    # Create additional fields
    fieldTypeDict = {}
    fieldTypeDict['Date'] = 'esriFieldTypeDate'
    fieldTypeDict['Double'] = 'esriFieldTypeDouble'
    fieldTypeDict['Integer'] = 'esriFieldTypeInteger'
    fieldTypeDict['String'] = 'esriFieldTypeString'
    if len(addSchemaDiff) > 0:
        print("Adding additional fields")
        for key in addSchemaDiff:
            print(f"\tAdding field {key}")
            if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]],
                    "length": [field.length for field in arcpy.ListFields(fc, key)][0]
                }
            else:
                new_field = {
                    "name": key,
                    "type": fieldTypeDict[featureClassFields[key]]
                }
            update_dict = {"fields": [new_field]}
            fLyr.manager.add_to_definition(update_dict)

def divide_chunks(l, n):
    '''
    Args:
        l: (list) list of unique IDs for features that have been deleted
        n: (integer) number to iterate by

    Returns:
    '''
    # Yield successive n-sized slices until the end of l
    for i in range(0, len(l), n):
        yield l[i:i + n]

if __name__ == "__main__":
    # Start Timer
    startTime = time.time()

    # Create GIS object
    print("Connecting to AGOL")
    gis = GIS("https://www.arcgis.com", username, password)

    # Create UUID variable for GDB
    gdbId = str(uuid.uuid1())

    print("Creating temporary File Geodatabase")
    gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

    # Export featureService classes to temporary File Geodatabase
    fcName = os.path.basename(fc)
    fcName = fcName.split('.')[-1]
    print(f"Exporting {fcName} to temp FGD")
    if featureService == True:
        arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)
    elif hostedTable == True:
        arcpy.conversion.TableToTable(fc, gdb, fcName)

    # Zip temp FGD
    print("Zipping temp FGD")
    zipDir(gdb, gdb + ".zip")

    # Upload zipped File Geodatabase
    print("Uploading File Geodatabase")
    fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}
    # Compare the version numerically; a plain string comparison would mis-order e.g. '2.10.0' and '2.4.0'
    apiVersion = tuple(int(part) for part in arcgis.__version__.split('.')[:2])
    if apiVersion < (2, 4):
        fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")
    else:
        root_folder = gis.content.folders.get()
        fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

    # Get featureService/hostedTable layer
    serviceLayer = gis.content.get(fsItemId)
    if featureService == True:
        fLyr = serviceLayer.layers[layerIndex]
    elif hostedTable == True:
        fLyr = serviceLayer.tables[layerIndex]

    # Append features from featureService class/hostedTable
    if upsert == True:
        # Check if unique field has index
        indexedFields = []
        for index in fLyr.manager.properties['indexes']:
            indexedFields.append(index['fields'])
        if uniqueField not in indexedFields:
            print(f"{uniqueField} does not have unique index; creating")
            fLyr.manager.add_to_definition({
                "indexes": [
                    {
                        "fields": f"{uniqueField}",
                        "isUnique": True,
                        "description": "Unique field for upsert"
                    }
                ]
            })

        # Schema Sync
        if updateSchema == True:
            updateFeatureServiceSchema()

        # Append features
        print("Appending features")
        fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=True, upsert_matching_field=uniqueField, field_mappings=[])

        # Delete features that have been removed from source
        # Get list of unique field for feature class and feature service
        entGDBList = [row[0] for row in arcpy.da.SearchCursor(fc, [uniqueField])]
        fsList = [row[0] for row in arcpy.da.SearchCursor(fLyr.url, [uniqueField])]
        s = set(entGDBList)
        differences = [x for x in fsList if x not in s]

        # Delete features in AGOL service that no longer exist
        if len(differences) > 0:
            print('Deleting differences')
            if len(differences) == 1:
                if type(differences[0]) == str:
                    features = fLyr.query(where=f"{uniqueField} = '{differences[0]}'")
                else:
                    features = fLyr.query(where=f"{uniqueField} = {differences[0]}")
                fLyr.edit_features(deletes=features)
            else:
                # Loop variable renamed from 'list' so the built-in is not shadowed
                for chunk in divide_chunks(differences, 1000):
                    # Build the IN list explicitly; formatting a one-item tuple would leave a trailing comma
                    values = ", ".join("'{}'".format(v) if isinstance(v, str) else str(v) for v in chunk)
                    features = fLyr.query(where=f"{uniqueField} IN ({values})")
                    fLyr.edit_features(deletes=features)

    else:
        # Schema Sync
        if updateSchema == True:
            updateFeatureServiceSchema()

        # Truncate Feature Service
        # If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset
        flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)
        hasViews = False
        try:
            if flc.properties.hasViews == True:
                print("Feature Service has view(s)")
                hasViews = True
        except Exception:
            # hasViews is not present on every service; treat a missing property as "no views"
            hasViews = False

        if hasViews == True or disableSync == False:
            # Get Min OBJECTID
            minOID = fLyr.query(out_statistics=[
                {"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])
            minOBJECTID = minOID.features[0].attributes['MINOID']

            # Get Max OBJECTID
            maxOID = fLyr.query(out_statistics=[
                {"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])
            maxOBJECTID = maxOID.features[0].attributes['MAXOID']

            # If the OBJECTID range spans more than 2,000, delete in 2,000 increments
            print("Deleting features")
            if maxOBJECTID is not None and minOBJECTID is not None:
                if (maxOBJECTID - minOBJECTID) > 2000:
                    x = minOBJECTID
                    y = x + 1999
                    # <= so that the feature holding the maximum OBJECTID is also deleted
                    while x <= maxOBJECTID:
                        query = f"OBJECTID >= {x} AND OBJECTID <= {y}"
                        fLyr.delete_features(where=query)
                        x += 2000
                        y += 2000
                # Else if the range spans 2,000 or fewer, delete all
                else:
                    print("Deleting features")
                    fLyr.delete_features(where="1=1")

        # If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset
        elif hasViews == False and disableSync == True:
            if flc.properties.syncEnabled == True:
                print("Disabling Sync")
                properties = flc.properties.capabilities
                updateDict = {"capabilities": "Query", "syncEnabled": False}
                flc.manager.update_definition(updateDict)
                print("Truncating Feature Service")
                fLyr.manager.truncate()
                print("Enabling Sync")
                updateDict = {"capabilities": properties, "syncEnabled": True}
                flc.manager.update_definition(updateDict)
            else:
                print("Truncating Feature Service")
                fLyr.manager.truncate()

        print("Appending features")
        fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

    # Delete Uploaded File Geodatabase
    print("Deleting uploaded File Geodatabase")
    fgd_item.delete()

    # Delete temporary File Geodatabase and zip file
    print("Deleting temporary FGD and zip file")
    arcpy.Delete_management(gdb)
    os.remove(gdb + ".zip")

    endTime = time.time()
    elapsedTime = round((endTime - startTime) / 60, 2)
    print("Script finished in {0} minutes".format(elapsedTime))

Updates

3/3/2023: Added the ability to add/remove fields from feature service keeping schemas in-sync. For example, if a field(s) is added/removed from the feature class, it will also add/remove the field(s) from the feature service

10/17/2024: Added upsert functionality. Deleted features/rows from the source feature class/table will also be deleted from feature service. This is helpful if you do not want your feature service truncated at all. In the event of a failed append, the feature service will still contain data. A prerequisite for this is for the data to have a unique id field.
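When the upsert option deletes features that no longer exist in the source, the ids are sent to the service in chunks inside a SQL `IN` clause. The sketch below shows one way such a clause could be built. It is an illustration rather than the script's own code, and `build_in_clause` and `chunked` are hypothetical helper names. It avoids formatting a Python tuple directly, because a one-element tuple renders as `('C3',)` with a trailing comma.

```python
def build_in_clause(field, values):
    """Build "field IN (...)"; strings are quoted and escaped, numbers are not."""
    if not values:
        raise ValueError("values must not be empty")
    parts = []
    for value in values:
        if isinstance(value, str):
            # Double any single quote so the literal stays valid SQL
            escaped = value.replace("'", "''")
            parts.append(f"'{escaped}'")
        else:
            parts.append(str(value))
    return f"{field} IN ({', '.join(parts)})"


def chunked(values, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(values), size):
        yield values[start:start + size]


# Illustrative ids only; the last chunk deliberately holds a single value.
removed = ["A1", "B2", "C3"]
for chunk in chunked(removed, 2):
    print(build_in_clause("PIN", chunk))
```

Each resulting string could then be passed as the `where` argument of a query, in the same way the script passes its own where clauses.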

@JakeSkinner No, I used this kind of script

which should work since it's from ESRI 'documentation'

You can post your script, or I would recommend trying the one in this document to see if you get the same error.

@neomapper can you share the AGOL service to a Group and invite my AGOL account (jskinner_rats)? I can take a look.

This is an excellent script, though it seems a little beefy for a simple truncate and append if your schema is the same. Doing it with two methods seems simpler. However, I was trying to understand whether this is supported for truncating/appending large feature classes in AGOL.

import numpy as np
import pandas as pd
from arcgis.features import FeatureLayer

def truncate_portal_data(fl_url: FeatureLayer) -> object:
    """Truncates a feature layer or table through the REST endpoint even if sync is enabled

    Args:
        fl_url (FeatureLayer): feature layer (or table) object; despite the name, a plain URL string has no query() method

    Returns:
        object: results object
    """
    ids = fl_url.query(return_ids_only=True)['objectIds']
    results = fl_url.edit_features(deletes=ids) if len(ids) > 0 else {"results": "No features to delete"}
    return results

def update_portal_data(df: pd.DataFrame, fl_url: FeatureLayer, truncate: bool = True, chunk_size: int = 500) -> object:
    """Adds features from a dataframe to a Portal / AGOL feature service.

    Args:
        df (pd.DataFrame): DataFrame from which to update features
        fl_url (FeatureLayer): feature layer (or table) object to update
        truncate (bool, optional): Truncate table before updating. Defaults to True.
        chunk_size (int, optional): Approximate number of rows per edit request. Defaults to 500.

    Returns:
        object: results of update operation
    """
    if truncate:
        truncate_portal_data(fl_url)
    numchunks = int(len(df)/chunk_size) or 1
    chunks = np.array_split(df, numchunks)
    return list(map(lambda x: fl_url.edit_features(adds=x.spatial.to_featureset()), chunks))

@Jay_Gregory the best way to find out would be to publish a sample service and test your script. I incorporated the upsert option so the feature service is not truncated. This is helpful because, if the Append operation fails, you are not left with an empty feature service.

Is there an option to maintain the GlobalIDs? I have a feature service with 9 related features, so it uses the globalid and parentglobalid fields to link them together.

thanks

Stu

@StuartMoore yes, there is an Environment Variable set to True in this script to preserve GlobalIDs (arcpy.env.preserveGlobalIds = True).

This script will be a huge lifesaver for me I think. Mine though keeps getting hung up on the Appending features step. I saw some other commenters had the same "Unknown Error (Error Code: 500)" as me a little while ago and it was a bug with AGO but it looked like that was fixed? Has the bug returned to haunt us? I am appending ~20k records so could it be an issue with the size of the data?

Hi @OliviaE, no, the size of the data should not be an issue. Can you share your service to an AGOL group and invite my account (jskinner_rats)? I can take a look to see if anything jumps out at me.

Hi @JakeSkinner I appreciate your help! I sent the invite. The service is blank (the truncate part works lol) so I also shared the file gdb that the script creates and uploads to AGOL as well, in case you need to look at the data.

@OliviaE the File Geodatabase is empty when I download and extract the Zip. Can you upload another copy?

@JakeSkinner Hm interesting. I will try but that would definitely mess up the Append if I'm trying to append nothing at all lol.

@JakeSkinner new zipped gdb uploaded. That one does have the right layer in it.

@OliviaE thanks for the new data. I downloaded the FGD and published the feature class as a new service. I was then able to successfully run the truncate/append script. Can you try publishing the data as a new service, and test updating the new service using the script?

@JakeSkinner it worked! My existing service must have been the problem. Thank you so so much! This script will be so useful to us.

Great script! Looking to implement it as well. Running into an issue while trying to Truncate and Append a Hosted Feature Table on an ArcGIS Enterprise 10.9.1 Portal.

I receive a

AttributeError: 'NoneType' object has no attribute 'tables' on line 168 for fLyr = serviceLayer.tables[layerIndex]

Has anyone encountered this error for tables and know if there's something that needs to be modified? As far as I know I have all the portal and layer parameters filled out correctly.

@Henry the layerIndex parameter is a bit confusing. This number corresponds to the position of the layer/table within the service, not to its service layer id. For example, if I publish a service with a single layer and manually set its layer id to 6, the layerIndex in this script will still be 0.
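To illustrate the point about position versus layer id, here is a minimal sketch of a guarded lookup. `pick_layer` is a hypothetical helper, and the `SimpleNamespace` object only stands in for the item returned by `gis.content.get(fsItemId)`. The `AttributeError` quoted above suggests that call returned `None`, which is what it does when the item cannot be found, so the sketch checks for that first.

```python
from types import SimpleNamespace


def pick_layer(item, layer_index, is_table=False):
    """Return the layer/table at position `layer_index`, with clearer errors."""
    if item is None:
        raise ValueError(
            "Item not found: check the item id and that the signed-in user can access it"
        )
    collection = item.tables if is_table else item.layers
    if not 0 <= layer_index < len(collection):
        kind = "table" if is_table else "layer"
        raise IndexError(
            f"layerIndex {layer_index} is out of range; the item has {len(collection)} {kind}(s)"
        )
    return collection[layer_index]


# Stand-in item: one layer whose service layer id happens to be 6.
fake_item = SimpleNamespace(layers=["Parcels (service layer id 6)"], tables=[])
print(pick_layer(fake_item, 0))   # position 0, regardless of the layer id
```

With a real item, the same helper could replace the direct `serviceLayer.layers[layerIndex]` and `serviceLayer.tables[layerIndex]` lookups.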

Hey @JakeSkinner ,

I first discovered this post back in the summer and it's been working great. I ran into a warning, for the first time, this morning. It's a DeprecatedWarning related to add.

Warning (from warnings module):

File "C:\Users\BIHARIDA\AppData\Local\ESRI\conda\envs\arcgispro-py3-clone\Lib\idlelib\run.py", line 579

exec(code, self.locals)

DeprecatedWarning: add is deprecated as of 2.3.0 and has been removed in 3.0.0. Use `Folder.add()` instead.

It suggests using Folder.add(), but I am unable to find any documentation on Folder.add() anywhere. I assume the "add" that it is referring to is part of this line of code:

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

I should add that I just upgraded my ArcGIS Pro to 3.4 this week. Not sure if that caused the warning.

Any help or direction that you could provide would be greatly appreciated.

Thanks Jake

@DJB I think this is a false warning. The Folder.add() method the message references should be used for adding a new Folder, not an item. This can be safely ignored.

@JakeSkinner ,

Amazing!

I just want to say how appreciative I am. Looking at this post and other posts of yours, you always respond to everyone's questions and do it in a very quick turnaround. You have made my job and life so much easier.

I just want to say how grateful I am for all your help.

Cheers!

I am using one of your previous scripts to update a single Hosted Feature Layer. Part of the script disables the sync feature on the FL in AGOL.

How do I go about re-enabling the sync at the end of the script? Without the FL having Sync Enabled, it is giving our Workforce Project(s) real problems with the layers.

Appreciate your help as always

The script should disable and re-enable Sync in this portion of the script:

Sounds like this is not happening on your end?

Here is one of the original scripts I have been using, for some reason when the script finishes, the sync enabled is unchecked and I have to recheck it via AGOL each time.

# Variables

prjPath = r"C:\Users\cwafstet\Documents\MODERN GIS WORKING FILES\ELECTRIC\ArcGIS PRO PROJECTS\AGOL UPDATE - DATASET - ELECTRIC.aprx" # Path to Pro Project

map = 'MODERN ELECTRIC AGOL UPDATES' # Name of map in Pro Project

serviceDefID = 'a57db3b4e0db4cddafc7dacaf33cd317' # Item ID of Service Definition

featureServiceID = '0694901da5ce44bbba3d0cec88e1bb6c' # Item ID of Feature Service

portal = "https://www.arcgis.com" # AGOL

user = "xyzx" # AGOL username

password = "xyzx" # AGOL password

preserveEditorTracking = True # True/False to preserve editor tracking from feature class

unregisterReplicas = False # True/False to unregister existing replicas

# Set Environment Variables

arcpy.env.overwriteOutput = 1

# Disable warnings

requests.packages.urllib3.disable_warnings()

# Start Timer

startTime = time.time()

print(f"Connecting to AGOL")

gis = GIS(portal, user, password)

arcpy.SignInToPortal(portal, user, password)

# Local paths to create temporary content

sddraft = os.path.join(arcpy.env.scratchFolder, "WebUpdate.sddraft")

sd = os.path.join(arcpy.env.scratchFolder, "WebUpdate.sd")

sdItem = gis.content.get(serviceDefID)

# Create a new SDDraft and stage to SD

print("Creating SD file")

arcpy.env.overwriteOutput = True

prj = arcpy.mp.ArcGISProject(prjPath)

mp = prj.listMaps(map)[0]

serviceDefName = sdItem.title

arcpy.mp.CreateWebLayerSDDraft(mp, sddraft, serviceDefName, 'MY_HOSTED_SERVICES',

'FEATURE_ACCESS', '', True, True)

arcpy.StageService_server(sddraft, sd)

# Reference existing feature service to get properties

fsItem = gis.content.get(featureServiceID)

flyrCollection = FeatureLayerCollection.fromitem(fsItem)

properties = fsItem.get_data()

# Get thumbnail and metadata

thumbnail_file = fsItem.download_thumbnail(arcpy.env.scratchFolder)

metadata_file = fsItem.download_metadata(arcpy.env.scratchFolder)

# Unregister existing replicas
if unregisterReplicas:
    if flyrCollection.properties.syncEnabled:
        print("Unregister existing replicas")
        for replica in flyrCollection.replicas.get_list():
            replicaID = replica['replicaID']
            flyrCollection.replicas.unregister(replicaID)

# Overwrite feature service
sdItem.update(data=sd)
print("Overwriting existing feature service")
if preserveEditorTracking:
    pub_params = {"editorTrackingInfo" : {"preserveEditUsersAndTimestamps":'true'}}
    fs = sdItem.publish(overwrite=True, publish_parameters=pub_params)
else:
    fs = sdItem.publish(overwrite=True)

# Update service with previous properties

print("Updating service properties")

params = {'f': 'pjson', 'id': featureServiceID, 'text': json.dumps(properties), 'token': gis._con.token}

agolOrg = str(gis).split('@ ')[1].split(' ')[0]

postURL = f'{agolOrg}/sharing/rest/content/users/{fsItem.owner}/items/{featureServiceID}/update'

r = requests.post(postURL, data=params, verify=False)

response = json.loads(r.content)

# Update thumbnail and metadata

print("Updating thumbnail and metadata")

fs.update(thumbnail=thumbnail_file, metadata=metadata_file)

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print(f"Script completed in {elapsedTime} minutes")

The script that you updated will not run; it says it cannot run with a sync-enabled hosted feature layer. I am getting this message when running the updated script with a Hosted Feature Layer that has sync enabled:

fc = r"C:\Users\cwafstet\Documents\MODERN GIS WORKING FILES\ELECTRIC\GEODATABASES\MEWCo ELECTRIC SYSTEM.gdb\AGOL_ELECTRIC_DATASET\UG_PRIMARY_CONDUCTOR_FEEDER" # Path to Feature Class

fsItemId = "dbf687af06b649d3afafbc8469e8d448" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True if updating a Hosted Table, False if updating a Feature Service

layerIndex = 0 # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = False # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

upsert = True # True will not truncate the feature service, requires a field with unique values

uniqueField = 'GlobalID' # Field that contains unique values

# Environment Variables

arcpy.env.overwriteOutput = True

arcpy.env.preserveGlobalIds = True

Appreciate any feedback on either of the (2) scripts.

@ModernElectric try the latest script here. I updated the code to re-enable sync on the feature service if it was previously enabled:

Good to go again. Appreciate this.

Verified that the Hosted Feature Layer had Sync re-enabled after the script was run. The modified date was also updated in AGOL.

Exactly what was needed.
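For readers following the sync discussion above, here is a minimal sketch of one way to make sure sync is restored even when the truncate step fails. It is not the code from the updated script. `truncate_with_sync_paused` and `CallLog` are hypothetical names, and the fake objects only record calls. With real objects, `flc` would be the `FeatureLayerCollection` and `layer` the feature layer, using the same `update_definition` and `truncate` calls the script uses.

```python
from types import SimpleNamespace


def truncate_with_sync_paused(flc, layer):
    """Disable sync if it is enabled, truncate, and always restore the original capabilities."""
    sync_was_enabled = bool(flc.properties.syncEnabled)
    original_capabilities = flc.properties.capabilities
    if sync_was_enabled:
        flc.manager.update_definition({"capabilities": "Query", "syncEnabled": False})
    try:
        layer.manager.truncate()
    finally:
        # Runs whether or not truncate() raised, so sync is not left switched off
        if sync_was_enabled:
            flc.manager.update_definition(
                {"capabilities": original_capabilities, "syncEnabled": True}
            )


class CallLog:
    """Hypothetical stand-in that records calls instead of contacting ArcGIS Online."""

    def __init__(self):
        self.calls = []

    def update_definition(self, update_dict):
        self.calls.append(("update_definition", update_dict["syncEnabled"]))

    def truncate(self):
        self.calls.append(("truncate", None))


log = CallLog()
fake_flc = SimpleNamespace(
    properties=SimpleNamespace(syncEnabled=True, capabilities="Create,Query,Sync"),
    manager=log,
)
fake_layer = SimpleNamespace(manager=log)
truncate_with_sync_paused(fake_flc, fake_layer)
print(log.calls)
```

The `try`/`finally` is the design choice being shown: the capabilities captured before the change are written back on every exit path.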

@DJB, I received the same Deprecated Warning. Similarly, this is the first time I've run this script since updating to Pro 3.4.0. The Hosted Feature Layer in AGO updated as expected and as @JakeSkinner mentioned, Folder.add() is not a replacement. I'm going to treat the warning as erroneous and ignore it.

Edit: After looking at Folder Object | ArcGIS API for Python, I'm not sure about what I wrote previously and struck through. Create will create a new folder. Add seems to be used to add content to a folder. Accessing and creating content | ArcGIS API for Python says: "To create new items on your GIS, use the add() method on a Folder instance. You can get an individual folder using the Folders.get() method.".

@BrianShepard, That's what I did and haven't had any issues to report. My services are still being updated as expected. When writing scripts, I always hate it when I see any kind of red pop up. But other than my anxiety rising, it's still working great for me.

@JakeSkinner, can you confirm that add hasn't been deprecated in favor of Folder.add()? If I understand correctly, the example given in the Developer Docs (Accessing and creating content | ArcGIS API for Python) adds a .csv to a folder. It doesn't appear to create a folder.

@BrianShepard @DJB that help document is helpful and looks like the new way forward is to use .add from the Folders method, however, I could not get this to work:

I even tried the sample code here and received the same error.

@JakeSkinner @DJB, I'm out of my depth here, but I was able to get it to work - replacing "data" with "file" and adding a line to define the root folder.

Here's my updated snippet:

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

root_folder = gis.content.folders.get()

fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

The expected fgdb was added to AGO.

Add documentation:

@BrianShepard good find. I think there are still some issues. The help states this should return arcgis.gis.Item, but during my testing it's returning concurrent.futures._base.Future. Which will cause the append operation to fail:

@JakeSkinner I modified one of my production scripts with:

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

#fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

root_folder = gis.content.folders.get()

fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

Both add methods upload a zipped fgdb to my Home folder in AGO. The rest of the script ran as expected and my target Hosted Feature Layer has the expected number of features after append...it didn't appear to fail.

It seems to be working aside from getting a warning about my local .zip file being unclosed:

C:\Program Files\ArcGIS\bin\Python\envs\arcgispro-py3\Lib\concurrent\futures\thread.py:58: ResourceWarning: unclosed file <_io.BufferedReader name='T:\\General Eng\\GIS\\ArcGIS Pro Projects\\Tax Lots\\scratch\\664ba992-b1bc-11ef-a21f-b07b2506e44a.gdb.zip'> result = self.fn(*self.args, **self.kwargs) ResourceWarning: Enable tracemalloc to get the object allocation traceback

@BrianShepard thank you for posting your code snippet. I was missing the .result() for the add method. I've updated the script and zip file on this page.

@JakeSkinner glad that worked.

Do you also get a warning about the .zip file being unclosed? I received that consistently when I was testing yesterday and see that it looks like the .zip file is closed at the end of the Function to Zip FGD (zipf.close()), but the warning seems to indicate that it isn't closed. There wouldn't be a need to close it again, at a later point, would there?

@BrianShepard I've gotten that error before; the script fails trying to remove the zip file from disk. It seems to resolve itself after some time, and I haven't been able to figure out what's causing it. As a workaround, you can add a command at the beginning of the script to delete any zip files in the scratch directory. There will still be a leftover zip file after the script executes, but there won't be several as the script runs over time.
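The workaround described above could look something like the following sketch. `remove_stale_zips` is a hypothetical helper, and the `*.gdb.zip` pattern is an assumption based on the script naming its zip file `gdb + ".zip"`. In the script, the folder would be `arcpy.env.scratchFolder`; a temporary directory is used here so the example runs without ArcPy.

```python
import glob
import os
import tempfile


def remove_stale_zips(folder, pattern="*.gdb.zip"):
    """Delete leftover zipped geodatabases in `folder`; return the paths removed."""
    removed = []
    for path in glob.glob(os.path.join(folder, pattern)):
        try:
            os.remove(path)
            removed.append(path)
        except OSError as err:
            # A file that is still locked is reported and skipped, not fatal
            print(f"Could not remove {path}: {err}")
    return removed


# Demonstration against a throwaway folder with one leftover zip file.
with tempfile.TemporaryDirectory() as scratch:
    open(os.path.join(scratch, "old-run.gdb.zip"), "w").close()
    print(len(remove_stale_zips(scratch)))
```

Calling this once at the start of a run keeps leftovers from accumulating, even if the zip from the current run cannot be deleted at the end.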

@JakeSkinner I appreciate your being active on this thread. This script has been a great help. I typically run this monthly as I update tax lots. I'll look into that again in January.

I've only received the "unclosed" warning when using the folder.add() method. It did prompt me to check my scratch folder and I realized that since I've been running this script it has never deleted my temp fgdb or the .zip file. I'm guessing it's a similar issue to others that have commented about delete issues. I don't believe it's a permissions issue as I can delete the files manually with my user account, and I have write permission on the folder. The truncate/append scripts are a portion of my overall tax lot update notebook, so I may just add another cell at the end of my notebook to clean everything up.

I'm receiving another error (possibly an ArcPy version issue). I've tested running this script in Python versions 3.7.11 and 3.9.16 against an Enterprise Portal 10.9.1.

I've received the following error -

AttributeError: 'ContentManager' object has no attribute 'folders'

I've taken a quick look at the ContentManager documentation and from my first glance, it looks like this should be supported. Any thoughts?

@Henry delete line 158:

root_folder = gis.content.folders.get()

Then change line 159 from:

fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

to:

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")
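Instead of editing the script for each environment, the two upload paths discussed in this thread could be selected at run time. The sketch below is one possible approach, not the script's own logic. `upload_item` is a hypothetical helper that checks whether the content manager exposes `folders`, and the fake objects merely imitate the two API shapes (`ContentManager.add(..., data=...)` and `Folder.add(..., file=...).result()`) quoted above.

```python
from types import SimpleNamespace


def upload_item(content_manager, item_properties, zip_path):
    """Upload via Folder.add() when available, else fall back to ContentManager.add()."""
    if hasattr(content_manager, "folders"):
        root_folder = content_manager.folders.get()
        # Folder.add() returns a future-like object; result() yields the item
        return root_folder.add(item_properties=item_properties, file=zip_path).result()
    return content_manager.add(item_properties=item_properties, data=zip_path)


# Hypothetical stand-ins for the newer and older content managers.
class FakeFuture:
    def __init__(self, value):
        self._value = value

    def result(self):
        return self._value


class FakeFolder:
    def add(self, item_properties, file):
        return FakeFuture(("Folder.add", file))


new_style = SimpleNamespace(folders=SimpleNamespace(get=lambda: FakeFolder()))
old_style = SimpleNamespace(add=lambda item_properties, data: ("ContentManager.add", data))

props = {"title": "demo", "type": "File Geodatabase"}
print(upload_item(new_style, props, "demo.gdb.zip"))
print(upload_item(old_style, props, "demo.gdb.zip"))
```

With a real connection, `gis.content` would be passed as `content_manager`. Checking for the attribute sidesteps the `'ContentManager' object has no attribute 'folders'` error reported for older installations.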

This script was really handy. It was easy to configure; the only issue was getting the layer index right. After some searching in the discussion in this thread, I found the answer: just count from the top, starting with zero.

I tried a different approach first. I built a ModelBuilder model that deleted all features in a hosted feature service and then appended from a local fgdb. Then I exported that to Python. That also works, but it took much more fiddling to adapt that script than your script.

I attach the code of my rough script, if you are interested. The "Print" text is in Norwegian, sorry for that. This script deletes all features in two datasets in a hosted feature service and appends new features to the same datasets.

# -*- coding: utf-8 -*-

"""

Generated by ArcGIS ModelBuilder on : 2025-02-25 15:37:17

"""

import arcpy

def OppdaterPlandataSlettLeggTil(): # Delete features and then append new

# To allow overwriting outputs change overwriteOutput option to True.

arcpy.env.overwriteOutput = True

RpJuridiskLinje_2_ = "C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje"

L71RpJuridiskLinje_2_ = "https://services-eu1.arcgis.com/bUDP8dxTEFYEUa8w/arcgis/rest/services/ReguleringsplanForslagVN2_Bodo/FeatureServer/71"

RpArealformalOmrade = "C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade"

L74Arealformål_Pbl_12_5_2_ = "https://services-eu1.arcgis.com/bUDP8dxTEFYEUa8w/arcgis/rest/services/ReguleringsplanForslagVN2_Bodo/FeatureServer/74"

print ('Sletter alle features i Regplanforslag VN2 og legg til nye lasta ned frå NAP') # English: Deleting all features in Regplanforslag VN2 and adding new ones downloaded from NAP

# Process: Delete Features (Delete Features) (management)

L71RpJuridiskLinje = arcpy.management.DeleteFeatures(in_features=L71RpJuridiskLinje_2_)[0]

# Process: Append (Append) (management)

L71RpJuridiskLinje_3_, Appended_Row_Count, Updated_Row_Count = arcpy.management.Append(inputs=[RpJuridiskLinje_2_], target=L71RpJuridiskLinje, schema_type="NO_TEST", field_mapping="objtype \"objtype\" true true false 32 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,objtype,0,31;kommunenummer \"arealplanId.NasjonalArealplanId.kommunenummer\" true true false 4 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,kommunenummer,0,3;planidentifikasjon \"arealplanId.NasjonalArealplanId.planidentifikasjon\" true true false 16 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,planidentifikasjon,0,15;juridisklinje \"juridisklinje\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,juridisklinje,-1,-1;vertikalniva \"vertikalnivå\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,vertikalniva,-1,-1;forstedigitaliseringsdato \"førsteDigitaliseringsdato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,forstedigitaliseringsdato,-1,-1;oppdateringsdato \"oppdateringsdato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,oppdateringsdato,-1,-1;malemetode \"kvalitet.Posisjonskvalitet.målemetode\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,malemetode,-1,-1;noyaktighet \"kvalitet.Posisjonskvalitet.nøyaktighet\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,noyaktighet,-1,-1;omradeid \"kopidata.Kopidata.områdeId\" true true false 
0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,omradeid,-1,-1;originaldatavert \"kopidata.Kopidata.originalDatavert\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,originaldatavert,0,99;kopidato \"kopidata.Kopidata.kopidato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,kopidato,-1,-1;lokalid \"identifikasjon.Identifikasjon.lokalId\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,lokalid,0,99;navnerom \"identifikasjon.Identifikasjon.navnerom\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,navnerom,0,99;versjonid \"identifikasjon.Identifikasjon.versjonId\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpJuridiskLinje,versjonid,0,99")

print('Sletta og lagt til Juridiske linjer')  # "Deleted and appended Juridiske linjer (legal lines)"

# Process: Delete Features (2) (Delete Features) (management)

L74Arealformål_Pbl_12_5 = arcpy.management.DeleteFeatures(in_features=L74Arealformål_Pbl_12_5_2_)[0]

# Process: Append (2) (Append) (management)

L74Arealformål_Pbl_12_5_3_, Appended_Row_Count_2_, Updated_Row_Count_2_ = arcpy.management.Append(inputs=[RpArealformalOmrade], target=L74Arealformål_Pbl_12_5, schema_type="NO_TEST", field_mapping="objtype \"objtype\" true true false 32 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,objtype,0,31;kommunenummer \"arealplanId.NasjonalArealplanId.kommunenummer\" true true false 4 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,kommunenummer,0,3;planidentifikasjon \"arealplanId.NasjonalArealplanId.planidentifikasjon\" true true false 16 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,planidentifikasjon,0,15;arealformal \"arealformål\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,arealformal,-1,-1;vertikalniva \"vertikalnivå\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,vertikalniva,-1,-1;eierform \"eierform\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,eierform,-1,-1;utnyttingstype \"utnyttingstype\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,utnyttingstype,-1,-1;utnyttingstall \"utnyttingstall\" true true false 0 Double 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,utnyttingstall,-1,-1;utnyttingstall_minimum \"utnytting.RpUtnytting.utnyttingstall_minimum\" true true false 0 Double 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,utnyttingstall_minimum,-1,-1;beskrivelse \"beskrivelse\" true true false 120 
Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,beskrivelse,0,119;feltbetegnelse \"feltbetegnelse\" true true false 20 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,feltbetegnelse,0,19;uteoppholdsareal \"uteoppholdsareal\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,uteoppholdsareal,-1,-1;byggverkbestemmelse \"byggverkbestemmelse\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,byggverkbestemmelse,-1,-1;avkjorselsbestemmelse \"avkjørselsbestemmelse\" true true false 0 Short 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,avkjorselsbestemmelse,-1,-1;forstedigitaliseringsdato \"førsteDigitaliseringsdato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,forstedigitaliseringsdato,-1,-1;oppdateringsdato \"oppdateringsdato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,oppdateringsdato,-1,-1;omradeid \"kopidata.Kopidata.områdeId\" true true false 0 Long 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,omradeid,-1,-1;originaldatavert \"kopidata.Kopidata.originalDatavert\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,originaldatavert,0,99;kopidato \"kopidata.Kopidata.kopidato\" true true false 8 Date 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,kopidato,-1,-1;lokalid \"identifikasjon.Identifikasjon.lokalId\" true true false 100 Text 0 
0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,lokalid,0,99;navnerom \"identifikasjon.Identifikasjon.navnerom\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,navnerom,0,99;versjonid \"identifikasjon.Identifikasjon.versjonId\" true true false 100 Text 0 0,First,#,C:\\Kart\\Plan\\geonorge\\Plandata_Ferdig\\RpReguleringsplanForslag_VN2.gdb\\RpArealformalOmrade,versjonid,0,99")

if __name__ == '__main__':

    # Global Environment settings

    with arcpy.EnvManager(scratchWorkspace="C:\\Kart\\Plan\\geonorge\\PlandataAGO.gdb", workspace="C:\\Kart\\Plan\\geonorge\\PlandataAGO.gdb"):

        OppdaterPlandataSlettLeggTil()

I want to applaud this script once again. Being able to update hosted feature services that have views makes data administration so much easier.

One example:

I had to fix some errors in a feature service (Repair Geometry in ArcGIS Pro). To do this, I had to download the hosted feature service. This hosted feature service is part of a system with several views.

After fixing the data in ArcGIS Pro, I could simply run the script, and everything worked.

Hi @JakeSkinner, this script has been working for me, but it fails on one large dataset. It works on my smaller datasets but fails on our Parcel Layer, which has over 200,000 features. The only error I get is "Error code 500: Unknown Error", after the feature deletion phase and during the appending phase. I believe it happens on the . I have tried using the upsert method, but I get this error:

Unable to add feature service layer definition.

Invalid definition for System.Collections.Generic.List`1[ESRI.ArcGIS.SDS.FieldIndex]

Invalid definition for System.Collections.Generic.List`1[ESRI.ArcGIS.SDS.FieldIndex]

(Error Code: 400)

Can you provide any assistance? I can invite you to an AGOL group if you have time to assist.

Hi @cartolizard, you can invite my AGOL account (jskinner_rats) to a group and I will check it out.

@cartolizard, it looks like the feature service is empty. Could you append data to the service (you can try using ArcGIS Pro's Append), or overwrite the service?

@JakeSkinner You can disregard my issue. It turns out the problem was a schema change in the source table: 10-digit zip codes were added to a field, but the character limit for that field in AGOL was 9.
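A check along these lines could catch this kind of length mismatch before the truncate step. This is only a minimal sketch in plain Python and is not part of the original script: `find_oversized_values` is a hypothetical helper, and it assumes the source rows and the target field lengths have already been read into ordinary Python structures (for example with a cursor and from the layer's field definitions).

```python
def find_oversized_values(rows, field_lengths):
    """Return (row_index, field, value) for every text value that is
    longer than the target field allows.

    rows: iterable of dicts mapping field name -> value (assumed format).
    field_lengths: dict mapping text field name -> max length in the target.
    """
    problems = []
    for index, row in enumerate(rows):
        for field, max_length in field_lengths.items():
            value = row.get(field)
            # Only text values can exceed a character limit
            if isinstance(value, str) and len(value) > max_length:
                problems.append((index, field, value))
    return problems


# Illustrative data: a 9-character field receiving a 10-character value
target_lengths = {"zip": 9}
source_rows = [{"zip": "12345"}, {"zip": "12345-6789"}]
oversized = find_oversized_values(source_rows, target_lengths)
print(oversized)
```

If the returned list is not empty, the script could stop before truncating so the feature service is not left empty.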

Are there any changes to Python after upgrading to ArcGIS Pro 3.5? My update script fails on the last step, deleting the local copy of the file geodatabase.

The script output is below:

Connecting to AGOL

Creating temporary File Geodatabase

Exporting RpJuridiskLinje to temp FGD

Zipping temp FGD

Uploading File Geodatabase

Get feature service fields

Get feature class/table fields

Truncating Feature Service

Appending features

Deleting uploaded File Geodatabase

Deleting temporary FGD and zip file

Traceback (most recent call last):

File "C:\Kart\Plan\geonorge\Skript_Nedlasting\Python_Skript\OppdaterPlandataAGO\BB1_OppdaterBebyggelsesplan_JurLinje.py", line 289, in <module>

os.remove(gdb + ".zip")

PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\\Users\\35450\\AppData\\Local\\Temp\\scratch\\3ccaa57f-317d-11f0-beb9-2079182894fb.gdb.zip'

I tried restarting the computer, with no success. It seems that the services are updated; it is the local cleanup process that crashes.

Regards!

Sveinung

@SveinungBertnesRåheim I've seen several users run into this issue, including myself, and I have been unable to find a solution. On my current server it seems to resolve itself automatically. To work around the issue, I add the portion below to the beginning of the script and remove it from the end of the script:

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

os.remove(gdb + ".zip")

After the script is executed, a File Geodatabase and zip file will be left over, but adding this to the beginning will stop these files from accumulating.
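Another option for the cleanup step is to keep a locked zip file from ending the run with a traceback. The sketch below is an assumption-laden illustration, not part of the original script: `try_remove` is a hypothetical helper that retries `os.remove` a few times and reports failure instead of raising. It does not fix the underlying file lock, and a retry may not help if the lock is held for the whole lifetime of the Python process.

```python
import os
import time


def try_remove(path, attempts=3, delay_seconds=1.0):
    """Delete a file, retrying on PermissionError.

    Returns True once the file is gone, False if it is still locked
    after the last attempt.
    """
    for attempt in range(attempts):
        try:
            os.remove(path)
            return True
        except FileNotFoundError:
            return True  # nothing left to delete
        except PermissionError:
            # File is still in use by another process (WinError 32 on Windows)
            if attempt < attempts - 1:
                time.sleep(delay_seconds)
    return False
```

In the script, the final `os.remove(gdb + ".zip")` could then become `if not try_remove(gdb + ".zip"): print("Zip file is still locked; leaving it in place")`, so the run ends cleanly and the leftover file can be removed later.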