- Home

- :

- All Communities

- :

- Developers

- :

- ArcGIS API for Python

- :

- ArcGIS API for Python Questions

- :

- Feature Layer Corrupts When Overriding on Portal O...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Feature Layer Corrupts When Overriding on Portal Only

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I have a script that runs daily and updates a csv with new records and then overwrites two identical feature layers, but one is on my company's AGOL site and the other is on the ESRI Portal site. This process has run for a while and has almost always run fine with the occasional time where the feature layer would corrupt on the portal and need to be replaced.

Ever since my company upgraded to Enterprise/Portal 10.9.1 the Portal feature layer seems to corrupt basically every time the feature layer is overwritten the csv. I will show a reference to the code I run below that performs this overwrite:

# Sign in to ArcGIS with the credentials given and the portal url

gisUser = "USER"

gisPass = "PASS"

target = GIS("PORTAL URL", gisUser, gisPass)

# making data frame from csv file

data = pd.read_csv(CSV PATH)

# change the date columns in the DataFrame which are currently in String format to datetime64

data["DateColumn"] = pd.to_datetime(data["DateColumn"])

data["DateColumn2"] = pd.to_datetime(data["DateColumn2"])

# pull the feature layer to append to

lyr = target.content.get("ITEM ID").layers[0]

# GeoAccessor class adds a spatial namespace that performs spatial operations on the given Pandas DataFrame

# "Longitude" and "Latitude" are the exact names of my spatial columns in my csv

sdf = GeoAccessor.from_xy(data, "Longitude", "Latitude")

# convert column names from csv to match lower case format on the ESRI portal

cols = {

"Column1": "column1",

"Column2": "column2",

"Column3": "column3",

}

# rename the column in the DataFrame, this will not change the base csv

sdf.rename(columns=cols, inplace=True)

sdf.columns.to_list()

# truncate all records from the feature layer

lyr.manager.truncate()

# apply new records to layer in 200-feature chunks

i = 0

while i < len(sdf):

fs = sdf.loc[i : i + 199].spatial.to_featureset()

updt = lyr.edit_features(adds=fs)

msg = updt["addResults"][0]

# print(f"Rows {i:4} - {i+199:4} : {msg['success']}")

if "error" in msg:

print(f"Rows {i:4} - {i+199:4} : {msg['success']}")

print(msg["error"]["description"])

i += 200

Solved! Go to Solution.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Oh, good thinking! You just have to be careful with automating that sort of thing, on the off chance that items 0 and 1 in your search list don't end up being the items you think they will.

To give you a jump start on the truncate/append approach, here's a snippet from a similar process we use:

# read file to dataframe, convert to spatial dataframe

df = pandas.read_csv('path-to-csv')

sdf = arcgis.features.GeoAccessor.from_xy(df, 'lon', 'lat')

# get layer and drop its records

item = gis.content.get('itemid of layer')

lyr = item.layers[0] # or whatever layer index you're updating

lyr.manager.truncate()

# apply new records to layer in 200-feature chunks

i = 0

while i < len(sdf):

fs = sdf.loc[i:i+200].spatial.to_featureset()

updt = lyr.edit_features(updates=fs)

msg = updt['updateResults'][0]

print(f"Rows {i:4} - {i+200:4} : {msg['success']}")

if 'error' in msg:

print(msg['error']['description'])

n += 200

Note that this relies on using pandas, and makes certain assumptions about your data. You'll have to tweak this a bit for your situation.

I apply my edits in chunks, as the API docs recommend not applying large numbers of edits at once. There's also the append function, but I've yet to get that to work as intended.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

We haven't done this since upgrading to 10.9.1, but a while back we actually moved a lot of our similar scripts from an overwriting model to either truncate/append or selectively identify adds/updates/deletes.

I know it doesn't answer your question directly, but you might consider truncating the existing layer and appending new records to it. As long as the schema isn't changing it should work just fine.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Esri has a Python script called OverwriteFS that is supposed to make it easy to overwrite existing services, but I have not used it myself and it is designed for ArcGIS Online. It might let you continue using a CSV without it becoming corrupted. Here is a link to an ArcGIS blog post talking about it: https://www.esri.com/arcgis-blog/products/arcgis-living-atlas/data-management/overwrite-feature-serv...

Personally I switched from overwriting a service published from a CSV file to one published from a feature class and my corrupt service issues went away. At least until Esri changed the commands to overwrite a service published from a feature class 🙂 . I updated my script to the new commands and the service is overwriting again. I am starting to look at using the OverwriteFS tool, but I have not had time to test it yet. I am publishing to ArcGIS Enterprise 10.9.1.

I hope that helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I agree with @jcarlson . If you are looking to overwrite a feature service, I recommend performing a truncate/append. Here is a tool that can help:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

After reading through the responses, it seems evident that this append/truncate method must be the way to go. I plan on looking into this more next week and will update with my findings.

I should mention for anyone else running into this thread with a similar problem that I did find a quick fix solution for my purposes that might help others as well so I will explain what I did. Instead of overwriting the portal feature layer and having it corrupt each time, I instead search for the layer and the delete both the layer and csv from the portal and create a new layer with the same name but with new data in the csv.

This allows the ESRI dashboard that the feature layer connects with to still work as usual since the name of the feature layer is the same and that seems to be what connects the Dashboard and Feature Layer. It is inelegant for sure but definitely functional in my case. I will post the code here:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Oh, good thinking! You just have to be careful with automating that sort of thing, on the off chance that items 0 and 1 in your search list don't end up being the items you think they will.

To give you a jump start on the truncate/append approach, here's a snippet from a similar process we use:

# read file to dataframe, convert to spatial dataframe

df = pandas.read_csv('path-to-csv')

sdf = arcgis.features.GeoAccessor.from_xy(df, 'lon', 'lat')

# get layer and drop its records

item = gis.content.get('itemid of layer')

lyr = item.layers[0] # or whatever layer index you're updating

lyr.manager.truncate()

# apply new records to layer in 200-feature chunks

i = 0

while i < len(sdf):

fs = sdf.loc[i:i+200].spatial.to_featureset()

updt = lyr.edit_features(updates=fs)

msg = updt['updateResults'][0]

print(f"Rows {i:4} - {i+200:4} : {msg['success']}")

if 'error' in msg:

print(msg['error']['description'])

n += 200

Note that this relies on using pandas, and makes certain assumptions about your data. You'll have to tweak this a bit for your situation.

I apply my edits in chunks, as the API docs recommend not applying large numbers of edits at once. There's also the append function, but I've yet to get that to work as intended.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi Josh,

I tried implementing this code into my script and wasn't able to get it to work so I was wondering if you have ever seen this error before or know where I can read more on the subject.

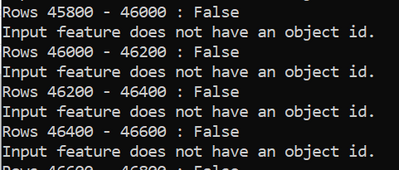

The script that ran was looking to add 200~ records to a 50,000 record csv of data and this is the message I got for every group of 200 records. The issue must come from the .truncate() or appending feature but I am not sure why I get the object id message. When I got to the feature layer after, it is completely blank but not corrupt.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Major facepalm moment. It should be

lyr.edit_features(adds=fs)

msg = updt['addResults'][0]

It was trying to update existing features, using an object ID to do so, but your FeatureSet wouldn't have any object ID, hence the error. Try it with the modifications above.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi Josh,

This change did help the script run successfully, but now when I check the feature layer's data, the correct number of rows are present but every cell of data is blank. Any idea why that would be?

I tried debugging it by printing 'fs' and 'updt' in the while loop and each one looks perfectly fine during the while loop's run, but somehow when something about edit_features just seems to now include the actual data when adding. Here is an example of a 'updt' result:

{'success': True, 'objectId': 16281}], 'updateResults': [], 'deleteResults': []}

When I look at the actual feature layer map, everything is plotted where it should be too, so the actual spatial data is being saved but just not the other data.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Okay, so we're getting closer. If you're getting some of the attributes (the shape), but not the others, odds are the dataframe's column names aren't matching up with the destination layer.

Some of the spatial dataframe functions automatically "sanitize" column names, giving you things like "some_column" instead of "Some Column". If you're scripting in an environment where you can debug it, pause the script and just see what sdf.columns.to_list() returns, then compare it against the schema of the destination layer.

If that is the issue, then just insert something like this into the script:

cols = {

'old_column_1': 'NewColumn1',

'old_column_2': 'NewColumn2',

...

}

sdf.rename(columns=cols, inplace=True)

Kendall County GIS