Feature Layer Corrupts When Overriding on Portal Only

I have a script that runs daily, updates a CSV with new records, and then overwrites two identical feature layers: one on my company's AGOL site and the other on our Esri Portal site. The process has been in place for a while and has almost always run fine, apart from the occasional run where the Portal feature layer would corrupt and need to be replaced.

Ever since my company upgraded to Enterprise/Portal 10.9.1, the Portal feature layer seems to corrupt basically every time it is overwritten from the CSV. The code that performs the overwrite is below:

import pandas as pd
from arcgis.gis import GIS
from arcgis.features import GeoAccessor

# Sign in to ArcGIS with the credentials given and the portal url
gisUser = "USER"
gisPass = "PASS"
target = GIS("PORTAL URL", gisUser, gisPass)

# making data frame from csv file
data = pd.read_csv("CSV PATH")

# change the date columns in the DataFrame, which are currently strings, to datetime64
data["DateColumn"] = pd.to_datetime(data["DateColumn"])
data["DateColumn2"] = pd.to_datetime(data["DateColumn2"])

# pull the feature layer to append to
lyr = target.content.get("ITEM ID").layers[0]

# GeoAccessor class adds a spatial namespace that performs spatial operations on the given Pandas DataFrame
# "Longitude" and "Latitude" are the exact names of my spatial columns in my csv
sdf = GeoAccessor.from_xy(data, "Longitude", "Latitude")

# convert column names from csv to match lower case format on the Esri portal
cols = {
    "Column1": "column1",
    "Column2": "column2",
    "Column3": "column3",
}

# rename the columns in the DataFrame, this will not change the base csv
sdf.rename(columns=cols, inplace=True)
print(sdf.columns.to_list())

# truncate all records from the feature layer
lyr.manager.truncate()

# apply new records to layer in 200-feature chunks
i = 0
while i < len(sdf):
    fs = sdf.loc[i : i + 199].spatial.to_featureset()
    updt = lyr.edit_features(adds=fs)
    msg = updt["addResults"][0]
    # print(f"Rows {i:4} - {i+199:4} : {msg['success']}")
    if "error" in msg:
        print(f"Rows {i:4} - {i+199:4} : {msg['success']}")
        print(msg["error"]["description"])
    i += 200

Hmm, I would have thought that was the issue too, but some time ago I changed all the column names to camel case because of a similar issue. I printed sdf.columns.to_list() and the DataFrame's dtypes, and both listed exactly the same columns. Is there somewhere else I should check the column names to see whether they differ, or could something else be the problem?

I could also still try renaming the columns as you mentioned if you think that might work, but as far as I can tell the columns should all match up.

The dtypes and the sdf columns should match each other, I think. What I'm concerned about is whether they match the schema of the destination feature service. There's still a chance it's something else, but nothing obvious comes to mind.
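One way to check a DataFrame against the destination schema is to compare each layer field's type with the dtype of the matching column. The sketch below works on plain dictionaries so it can run without a portal connection. The field names, the helper name `find_type_mismatches`, and the type pairing table are all assumptions made for illustration, and the pairing is deliberately incomplete.

```python
# Assumed pairing of Esri field types with the pandas dtype prefix one might
# expect for them. Illustrative only, not an official or exhaustive mapping.
EXPECTED_DTYPE_PREFIX = {
    "esriFieldTypeDate": "datetime64",
    "esriFieldTypeDouble": "float",
    "esriFieldTypeInteger": "int",
    "esriFieldTypeString": "object",
}


def find_type_mismatches(layer_types, frame_dtypes):
    """Return {field: (esri_type, pandas_dtype)} for fields whose types disagree."""
    mismatches = {}
    for field, esri_type in layer_types.items():
        expected = EXPECTED_DTYPE_PREFIX.get(esri_type)
        actual = frame_dtypes.get(field)
        # Skip fields with no known pairing or no matching DataFrame column
        if expected is None or actual is None:
            continue
        if not actual.startswith(expected):
            mismatches[field] = (esri_type, actual)
    return mismatches


# Hypothetical inputs. With a real layer and DataFrame these could be built as
#   {f["name"]: f["type"] for f in lyr.properties.fields}
#   {col: str(dtype) for col, dtype in sdf.dtypes.items()}
layer_types = {
    "datecolumn": "esriFieldTypeDate",
    "column1": "esriFieldTypeString",
    "column2": "esriFieldTypeDouble",
}
frame_dtypes = {"datecolumn": "object", "column1": "object", "column2": "float64"}

mismatches = find_type_mismatches(layer_types, frame_dtypes)
print(mismatches)
```

A date field whose column is still `object` (strings read from a CSV) is the kind of mismatch this would flag. Integer columns that contain missing values are read by pandas as floats, so a flagged integer field may need a closer look rather than an automatic fix.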

Kendall County GIS

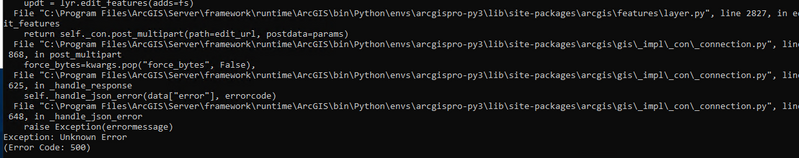

So I noticed that the field names in the ESRI portal would change from camel case to all lower case after running this append script, and I'm assuming that is why all the data comes in as blank. I tested out changing the data source csv to have all lowercase field names and then creating a new feature layer with this csv and it created fine, but when trying to run the same append script I now get this:

The error doesn't give much info but it is different than before which seems somewhat like progress haha. Do you think this change of capitalization was the problem between the field names to begin with? Would trying to rename the columns help? Or is there a way to stop it from going to all lower case to begin with?

Hello,

I thought I'd reach out one more time to see if you have any thoughts on the snag I've run into. To summarize: when I ran the script with edit_features(adds=fs) and my CSV had camel case field names, the data kept its spatial information but every other cell showed up blank. When I manually change the CSV to have all-lowercase field names (to match what the portal shows under Fields in Data), the script fails almost immediately on the edit_features(adds=fs) line with a generic 500 error and no further information. The only other thing to mention is that if I go to the feature layer page itself, manually hit update, choose to append new data, and select the CSV, it works fine after matching the fields. So I imagine there is still some field-matching problem going on, but I can't tell where or how to debug it further.

Finally, if I run the section of code you gave for renaming the sdf columns on the CSV with camel case field names, the chunked append again breaks immediately with a 500 error. I see that the portal always converts field names to lower case; is there something else I can do to get around these columns not matching? Also, is there an append() method I could use instead of edit_features() that might circumvent this issue?

Hard to say for sure what's going on. What you might do is query the hosted layer prior to applying your edits to get the schema, then compare and adjust your sdf schema to match it.

schema = arcgis.features.GeoAccessor.from_layer(lyr).dtypes
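The "compare and adjust" step could look something like the sketch below, which matches DataFrame column names to layer field names without regard to case and reports anything left over. It takes plain lists so it runs without a portal connection. The helper name `build_rename_map` and the sample names are hypothetical, and reading field names from `lyr.properties.fields` is an assumption about how the list would be obtained on a real layer.

```python
def build_rename_map(df_columns, layer_field_names):
    """Map DataFrame column names onto layer field names, ignoring case.

    Returns (rename, unmatched): a dict usable with DataFrame.rename(columns=...)
    and a list of DataFrame columns with no counterpart in the layer.
    """
    by_lower = {name.lower(): name for name in layer_field_names}
    rename, unmatched = {}, []
    for col in df_columns:
        target = by_lower.get(col.lower())
        if target is None:
            unmatched.append(col)
        elif target != col:
            rename[col] = target
    return rename, unmatched


# Hypothetical names for illustration. On a real layer the field names might
# be read with: [f["name"] for f in lyr.properties.fields]
layer_fields = ["objectid", "column1", "column2", "datecolumn"]
csv_columns = ["Column1", "Column2", "DateColumn", "Longitude", "Latitude"]

rename, unmatched = build_rename_map(csv_columns, layer_fields)
print(rename)     # columns that only differ by case
print(unmatched)  # columns the layer does not have at all
```

The resulting `rename` dict could then be passed to `sdf.rename(columns=rename, inplace=True)`, and `unmatched` gives a quick list of columns worth inspecting by hand, such as coordinate columns that only feed the geometry.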

There is an append function if you're working with AGOL or a more up-to-date Enterprise version, but I have not gotten it to work as intended. By all means give it a whirl, though!

Kendall County GIS

EDIT: There was one small error in the script below that caused every 200th record to be duplicated. pandas .loc slices include both endpoints, so loc[i : i + 200] returns 201 rows and overlaps the next chunk. Changing it to loc[i : i + 199], as I have done below, fixes it.
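The off-by-one is easy to check by listing the chunk boundaries. The sketch below is a minimal illustration that assumes the DataFrame has the default 0 to n-1 integer index; `inclusive_chunks` is a hypothetical helper name, not part of pandas or the ArcGIS API.

```python
def inclusive_chunks(n_rows, size=200):
    """Yield (first, last) label pairs suitable for an inclusive slice
    such as df.loc[first:last], covering rows 0..n_rows-1 exactly once."""
    for first in range(0, n_rows, size):
        yield first, min(first + size - 1, n_rows - 1)


chunks = list(inclusive_chunks(450))
print(chunks)  # [(0, 199), (200, 399), (400, 449)]

# Every row is covered exactly once: the chunk sizes add up to the row count
total = sum(last - first + 1 for first, last in chunks)
print(total)  # 450
```

An alternative is positional slicing with `.iloc[i : i + 200]`, which excludes the stop position and so does not need the `- 1` adjustment.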

I got it working with this help, so here is my solution for anyone who stumbles upon this thread.

In my case, the CSV has camel case field names, which is not how they are stored in the Esri portal database, so I did need to rename the columns in the DataFrame using the snippet from @jcarlson.

I also found that my date columns, which I pull from a separate data source's API, were stored as strings in the CSV but were datetime64 types on the portal, so I had to convert those columns in the DataFrame after pandas read the CSV. Here is what my code looked like:

import pandas as pd
from arcgis.gis import GIS
from arcgis.features import GeoAccessor

# Sign in to ArcGIS with the credentials given and the portal url
gisUser = "USER"
gisPass = "PASS"
target = GIS("PORTAL URL", gisUser, gisPass)

# making data frame from csv file
data = pd.read_csv("CSV PATH")

# change the date columns in the DataFrame, which are currently strings, to datetime64
data["DateColumn"] = pd.to_datetime(data["DateColumn"])
data["DateColumn2"] = pd.to_datetime(data["DateColumn2"])

# pull the feature layer to append to
lyr = target.content.get("ITEM ID").layers[0]

# GeoAccessor class adds a spatial namespace that performs spatial operations on the given Pandas DataFrame
# "Longitude" and "Latitude" are the exact names of my spatial columns in my csv
sdf = GeoAccessor.from_xy(data, "Longitude", "Latitude")

# convert column names from csv to match lower case format on the Esri portal
cols = {
    "Column1": "column1",
    "Column2": "column2",
    "Column3": "column3",
}

# rename the columns in the DataFrame, this will not change the base csv
sdf.rename(columns=cols, inplace=True)
print(sdf.columns.to_list())

# truncate all records from the feature layer
lyr.manager.truncate()

# apply new records to layer in 200-feature chunks
i = 0
while i < len(sdf):
    # .loc includes both endpoints, so i to i + 199 is exactly 200 rows
    fs = sdf.loc[i : i + 199].spatial.to_featureset()
    updt = lyr.edit_features(adds=fs)
    msg = updt["addResults"][0]
    # print(f"Rows {i:4} - {i+199:4} : {msg['success']}")
    if "error" in msg:
        print(f"Rows {i:4} - {i+199:4} : {msg['success']}")
        print(msg["error"]["description"])
    i += 200
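The loop above inspects only the first entry of `addResults`, so a failure further into a chunk would go unreported. A possible refinement is to scan every entry, as in the sketch below. It assumes the response is a dictionary with an `addResults` list whose entries carry a `success` flag and, on failure, an `error` dictionary with `code` and `description`, which is the shape the script above already relies on. The helper name `failed_adds` and the sample response are hypothetical.

```python
def failed_adds(response):
    """Collect (position, code, description) for every failed add in a response."""
    failures = []
    for position, result in enumerate(response.get("addResults", [])):
        if not result.get("success", False):
            error = result.get("error", {})
            failures.append((position, error.get("code"), error.get("description")))
    return failures


# Hypothetical response for illustration, shaped like the dictionary the
# script above reads from lyr.edit_features(adds=fs)
sample = {
    "addResults": [
        {"objectId": 1, "success": True},
        {
            "objectId": -1,
            "success": False,
            "error": {"code": 1000, "description": "Hypothetical failure message"},
        },
    ]
}

print(failed_adds(sample))
```

Inside the loop, `failed_adds(updt)` could replace the check on `msg`, with the position added to `i` to identify the offending row in the DataFrame.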
