Dissolve/merge features without creating new feature class python

I have a feature class that can contain many features. I would like to merge all the features in this feature class into just one, based on the "BUFF_DIST" field, the way you would in ArcMap: start editing, select all the features, go to Editor > Merge, and you get the popup "Choose the feature with which other features will be merged:". I am trying to avoid creating a new feature class. Has anyone done this, or does anyone have some example Python code?

Correct, this would not be the result of buffering. I don't want to create a new feature class, and I want to merge the attributes much as you do in ArcMap when you select features, start editing, and click Merge on the Editor toolbar. This would all take place in ArcMap's Python, not in a stand-alone script. I came close to this with the code I posted, but I can't get it right.

Thanks for the reply.

The data access Editor (arcpy.da.Editor) provides some limited capabilities, but it isn't clear whether geometry changes are fully supported.

Since you want to overwrite the features that were included in the dissolve/union, you will always have the potential for data loss should something go wrong. If it were me, I'd read the features with a SearchCursor as JSON (there's a SHAPE@JSON token available), run the dissolve on that to create a new output, remove the original features, then use the JSON of the dissolved features to update the original feature class.

You'd have to look into the "Reading geometries" and "Writing geometries" topics in the ArcPy documentation for ArcGIS Desktop to get the full context. You'd also need to come up with the union process. I just use the GeometryServer/union task on one of our ArcGIS Servers to perform that process on input features, like so:

    import json
    import urllib
    import urllib2  # Python 2 (ArcMap); not available under Python 3

    def runUnion(geomEntity):
        # Union task of the Geometry service on your own ArcGIS Server site
        queryURL = "https://YourArcGISServerSite/rest/services/Utilities/Geometry/GeometryServer/union"
        params = urllib.urlencode({'f': 'json',
                                   'geometries': geomEntity,
                                   'geometryType': 'esriGeometryPolygon',
                                   'sr': 3857
                                   })
        # Passing params as the data argument makes this a POST request
        req = urllib2.Request(queryURL, params)
        response = urllib2.urlopen(req)
        jsonResult = json.load(response)
        return jsonResult

I think in the end someone mentioned it will take a bit of coding and questioned whether it's worth the effort, which is always the case, I guess. For me, I've simply made the switch from localized data to working 100% with REST services, which took a lot of effort to migrate all of our Python implementations off of SDE/FGDB/local data. However, in the end it's worth it for us because it opens up the amount of data available to a global scale.
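For what it's worth, here is a rough sketch of how the `geomEntity` argument passed to `runUnion` might be put together. It assumes the union task accepts a JSON document with a `geometryType` and a `geometries` array, and that each input is the Esri JSON string a cursor returns for the SHAPE@JSON token. The helper name `build_union_geometries` and the two sample squares are made up for illustration.

```python
import json


def build_union_geometries(shape_json_strings, geometry_type="esriGeometryPolygon"):
    """Wrap Esri JSON geometry strings in one JSON document for a union request.

    shape_json_strings: iterable of strings such as those read with the
    SHAPE@JSON cursor token. The function name and the sample data below are
    illustrative assumptions, not part of the original post.
    """
    geometries = [json.loads(text) for text in shape_json_strings]
    return json.dumps({"geometryType": geometry_type, "geometries": geometries})


# Two made-up, overlapping squares in Web Mercator (wkid 3857).
square_a = '{"rings": [[[0, 0], [0, 10], [10, 10], [10, 0], [0, 0]]], "spatialReference": {"wkid": 3857}}'
square_b = '{"rings": [[[5, 5], [5, 15], [15, 15], [15, 5], [5, 5]]], "spatialReference": {"wkid": 3857}}'

geom_entity = build_union_geometries([square_a, square_b])
print(geom_entity)
```

The resulting string would then be handed to the request as the `geometries` parameter. Check the exact parameter format against your own server's Geometry service before relying on it.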

At this point I would like to just merge the features without the attributes.

Hi 2 Quiker,

See the example below:

    import arcpy  # imported at module level so DissolveGeoms can use it too

    def main():
        fc = r'C:\GeoNet\DissolvePy\data.gdb\pols_test'
        fld_dissolve = 'Class'

        # get unique values from field
        unique_vals = list(set(r[0] for r in arcpy.da.SearchCursor(fc, [fld_dissolve])))

        for unique_val in unique_vals:
            geoms = [r[0] for r in arcpy.da.SearchCursor(fc, ['SHAPE@', fld_dissolve]) if r[1] == unique_val]
            if len(geoms) > 1:
                diss_geom = DissolveGeoms(geoms)

                # update the first feature with new geometry and delete the others
                where = "{} = '{}'".format(fld_dissolve, unique_val)
                cnt = 0
                with arcpy.da.UpdateCursor(fc, ['SHAPE@'], where) as curs:
                    for row in curs:
                        cnt += 1
                        if cnt == 1:
                            row[0] = diss_geom
                            curs.updateRow(row)
                        else:
                            curs.deleteRow()

    def DissolveGeoms(geoms):
        # collect the parts of all geometries into a single multipart polygon
        sr = geoms[0].spatialReference
        arr = arcpy.Array()
        for geom in geoms:
            for part in geom:
                arr.add(part)
        diss_geom = arcpy.Polygon(arr, sr)
        return diss_geom

    if __name__ == '__main__':
        main()

A couple of things to keep in mind:

- Try this on a copy of your data!

- It only works on polygons in this case

- It assumes that the field to dissolve on is a text field

- Check the where clause so that it matches the syntax of your workspace

- It puts the dissolved polygon in the first feature of each group of features to dissolve, so any additional fields keep that first feature's values. No intelligence is applied to mimic merge rules for the fields
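On the text-field assumption: if the dissolve field is numeric (the BUFF_DIST field in the question is one), the value should not be wrapped in quotes. A minimal sketch of a where-clause builder that handles both cases is below. `build_where` is a hypothetical helper, not an arcpy function, and it does not add workspace-specific field delimiters (arcpy has `AddFieldDelimiters` for that).

```python
import numbers


def build_where(field_name, value):
    """Return a simple equality where clause for a text or numeric value.

    Hypothetical helper: numbers are written as-is, anything else is treated
    as text and wrapped in single quotes (embedded quotes are doubled).
    Field delimiters vary by workspace and are not handled here.
    """
    if isinstance(value, numbers.Number):
        return "{} = {}".format(field_name, value)
    return "{} = '{}'".format(field_name, str(value).replace("'", "''"))


print(build_where("Class", "Forest"))  # Class = 'Forest'
print(build_where("BUFF_DIST", 100))   # BUFF_DIST = 100
```

Keep in mind that equality tests on floating-point fields can be fragile, so grouping on a double may need some care.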

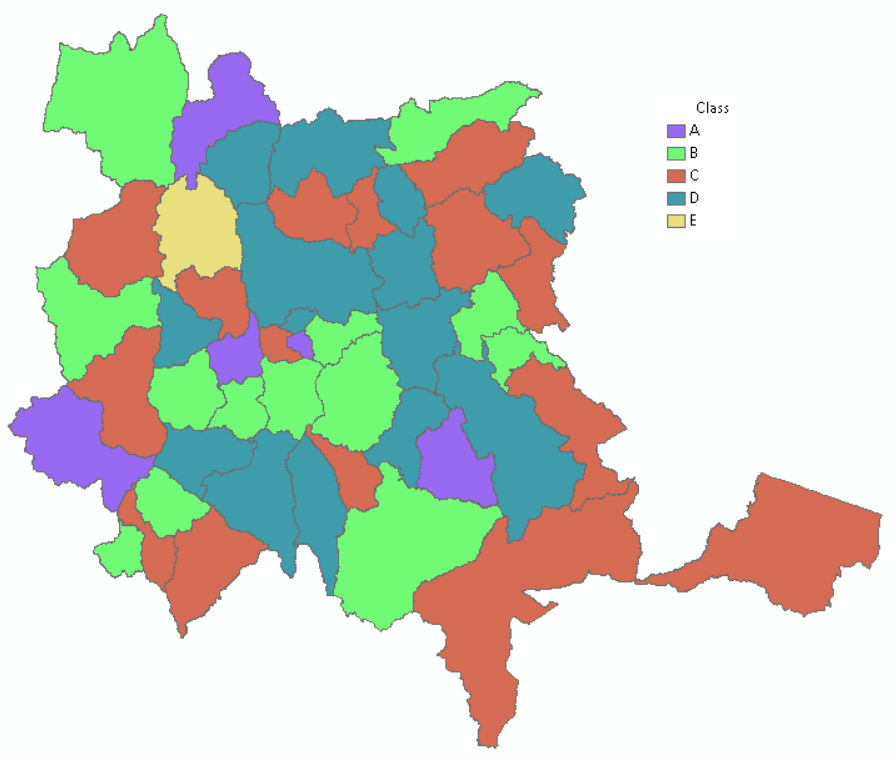

Input polygons for testing:

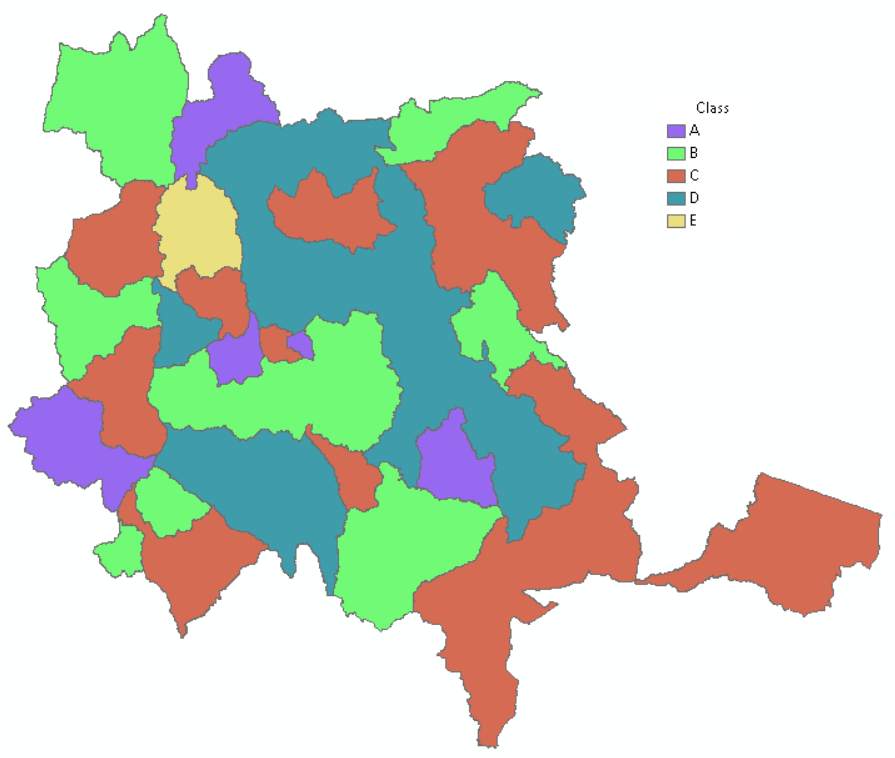

resulting polygons:

Xander,

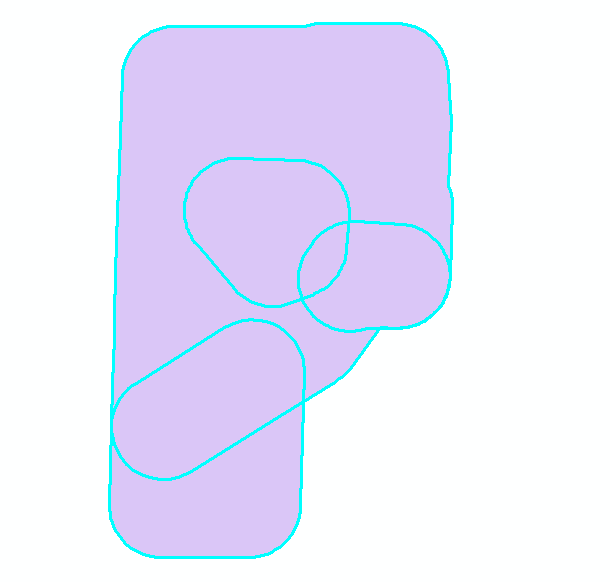

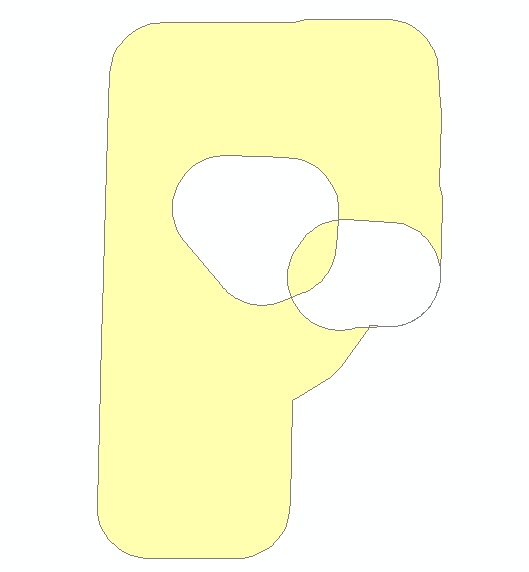

Thanks for the reply and code. After running your example I noticed that the result is the same as with my script. I thought I had posted pictures of my code's results, but I guess I didn't. It seems that both your code and mine cut out the areas where polygons overlap. I forgot to mention that there are some overlapping polygons.

Hi 2CQuiker,

Can you replace the function "DissolveGeoms" with the one below and try again (on a copy of your data)?

    def DissolveGeoms(geoms):
        # start from the first geometry and union each remaining one into it,
        # so overlapping areas are merged instead of cut out
        diss_geom = geoms[0]
        for geom in geoms[1:]:
            diss_geom = diss_geom.union(geom)
        return diss_geom

Here is some code I have used in the past, similar to Xander's but a bit different. My code makes two passes through the data set with the same cursor: the first pass gets the unioned geometries, and the second pass updates and deletes rows.

    import arcpy  # needed for arcpy.Geometry.union below
    from arcpy import da
    from itertools import groupby
    from operator import itemgetter
    from functools import reduce

    fc = None  # set to the path of your feature class or table
    case_fields = ["field1", "field2"]  # field(s) to group records for merging
    sort_field, sort_order = "OBJECTID", "ASC"  # use "FID" for a shapefile
    shape_field = "SHAPE@"

    fields = case_fields + [sort_field, shape_field]
    sql_orderby = "ORDER BY {}, {} {}".format(", ".join(case_fields), sort_field, sort_order)

    with da.UpdateCursor(fc, fields, sql_clause=(None, sql_orderby)) as cur:
        case_func = itemgetter(*range(len(case_fields)))

        # first pass: union the geometries of each group of rows
        merged_polys = {
            key: reduce(arcpy.Geometry.union, (row[-1] for row in group))
            for key, group in groupby(cur, case_func)
        }

        # second pass: write the union to the first row, delete the rest
        cur.reset()
        for key, group in groupby(cur, case_func):
            row = next(group)
            cur.updateRow(row[:-1] + [merged_polys[key]])
            for row in group:
                cur.deleteRow()
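To follow the grouping logic without arcpy, here is a small stand-in sketch. `itertools.groupby` only groups adjacent rows, which is why the cursor above is ordered by the case fields first. In the sketch, frozensets stand in for polygons and set union plays the role of `Geometry.union`. The rows are made up.

```python
from functools import reduce
from itertools import groupby
from operator import itemgetter

# Made-up rows: (case value, object id, "geometry"). Frozensets stand in for
# polygons so that set union can play the role of arcpy's Geometry.union.
rows = [
    (100.0, 1, frozenset({1, 2})),
    (200.0, 2, frozenset({7})),
    (100.0, 3, frozenset({2, 3})),
]

# groupby only merges adjacent rows, so sort by case value, then object id.
# This mirrors the ORDER BY clause passed to the cursor.
rows.sort(key=itemgetter(0, 1))

case_func = itemgetter(0)

# First pass: one merged "geometry" per case value.
merged = {
    key: reduce(frozenset.union, (row[-1] for row in group))
    for key, group in groupby(rows, case_func)
}

# Second pass: keep the first row of each group and give it the merged shape.
result = []
for key, group in groupby(rows, case_func):
    first = next(group)
    result.append(first[:-1] + (merged[key],))

print(result)
```

If the rows were not sorted, the two rows with case value 100.0 would end up in separate groups and the dictionary would silently keep only the last one.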

If the data set is extremely large, where building a dict of merged polygons might not be practical, there is another approach using an update and search cursor in parallel.

    import arcpy  # needed for arcpy.Geometry.union below
    from arcpy import da
    from itertools import groupby
    from operator import itemgetter
    from functools import reduce

    try:
        from itertools import izip as zip  # Python 2 (ArcMap): zip must be lazy here
    except ImportError:
        pass  # Python 3: the built-in zip is already lazy

    fc = None  # set to the path of your feature class or table
    case_fields = ["field1", "field2"]  # field(s) to group records for merging
    sort_field, sort_order = "OBJECTID", "ASC"  # use "FID" for a shapefile
    shape_field = "SHAPE@"

    fields = case_fields + [sort_field, shape_field]
    sql_orderby = "ORDER BY {}, {} {}".format(", ".join(case_fields), sort_field, sort_order)
    kwargs = {"in_table": fc, "field_names": fields, "sql_clause": (None, sql_orderby)}

    with da.UpdateCursor(**kwargs) as ucur, da.SearchCursor(**kwargs) as scur:
        case_func = itemgetter(*range(len(case_fields)))
        ugroupby, sgroupby = groupby(ucur, case_func), groupby(scur, case_func)
        # walk both cursors group by group, in lockstep
        for (ukey, ugroup), (skey, sgroup) in zip(ugroupby, sgroupby):
            # the search cursor supplies the geometries to union
            poly = reduce(arcpy.Geometry.union, (row[-1] for row in sgroup))
            # the update cursor writes the union to the first row and deletes the rest
            row = next(ugroup)
            ucur.updateRow(row[:-1] + [poly])
            for row in ugroup:
                ucur.deleteRow()
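The lockstep idea can be tried without arcpy as well. In this sketch two plain iterators over the same sorted rows stand in for the search and update cursors, and frozensets stand in for polygons. It assumes Python 3, where `zip` is lazy, so only one group is read at a time. The row data and the `kept`/`deleted` lists are made up for illustration.

```python
from functools import reduce
from itertools import groupby
from operator import itemgetter

# Made-up rows, already sorted by case value: (case value, object id, "geometry").
rows = [
    ("a", 1, frozenset({1})),
    ("a", 2, frozenset({2})),
    ("b", 3, frozenset({3})),
]
case_func = itemgetter(0)

# Two independent iterators over the same rows stand in for the search
# cursor (reads shapes) and the update cursor (updates and deletes rows).
reader, writer = iter(rows), iter(rows)

kept, deleted = [], []
for (rkey, rgroup), (wkey, wgroup) in zip(groupby(reader, case_func),
                                          groupby(writer, case_func)):
    # Union all "geometries" of the current group from the reader side.
    shape = reduce(frozenset.union, (row[-1] for row in rgroup))
    # The first row of the group keeps the merged shape ...
    first = next(wgroup)
    kept.append(first[:-1] + (shape,))
    # ... and the remaining rows of the group would be deleted.
    deleted.extend(row[1] for row in wgroup)

print(kept)
print(deleted)
```

Unlike the two-pass version, nothing is stored per group beyond the current merged shape, which is the point of the approach for very large data sets.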

I am getting an error. The field BUFF_DIST is a double, and the file is a shapefile.

    Runtime error
    Traceback (most recent call last):
      File "<string>", line 24, in <module>
      File "<string>", line 22, in <dictcomp>
    RuntimeError: A column was specified that does not exist

Never mind, I forgot to change OBJECTID to FID, since it's a shapefile.

Everything works, thanks for sharing.
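One way to avoid the OBJECTID/FID mix-up is to pass the object ID field name in instead of hard-coding it. The sketch below only builds the ORDER BY string, so it runs without arcpy. In a real script the name could be read from `arcpy.Describe(fc).OIDFieldName`. `build_order_by` is a hypothetical helper name.

```python
def build_order_by(case_fields, oid_field, sort_order="ASC"):
    """Build the ORDER BY postfix used in the cursor's sql_clause.

    oid_field is passed in rather than hard-coded. With arcpy it could be
    read from arcpy.Describe(fc).OIDFieldName (not called here, so that the
    sketch runs without arcpy). The function name is a hypothetical helper.
    """
    return "ORDER BY {}, {} {}".format(", ".join(case_fields), oid_field, sort_order)


print(build_order_by(["BUFF_DIST"], "FID"))              # shapefile
print(build_order_by(["field1", "field2"], "OBJECTID"))  # geodatabase
```

The same name would also need to go into the cursor's field list in place of the hard-coded `sort_field`.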