Downloading image/attachment batches by specific survey submission

Hello everyone,

I work for a small "baby-blue collar" tech startup that uses various Esri tools to survey and report on telecommunications infrastructure. We take a LOT of photos during the course of a single survey. I have been successful in automating report templates for the many times we need to produce reports on specific sites; however, we would also like to be able to download just the images from each site surveyed so that we can store the raw images from each site audit in their own directory.

I have made use of the Jupyter Notebook found here, which I know many are familiar with. While the notebook does indeed extract all the image attachments from a survey, it does so at the survey level and not the form/object ID level within the survey. Our surveys contain many nested repeats and subsections, which causes the notebook to generate about 15 different Excel tables and 15 different subfolders, each with a smorgasbord of images inside that come from every single submission to that survey. This is a brute-force method: on the post-processing end, it took me several hours the other day to sort out which photos correspond to which specific site, and I only had seven sites to sort out! This will become a massive headache in the near future, when our database reaches into the hundreds and even thousands of records.

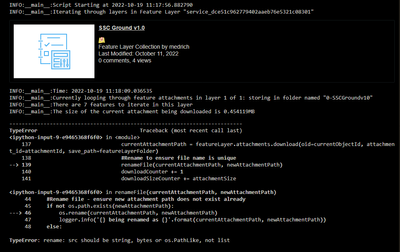

Searching for a better way to do this, I found the extremely useful script written by @MichaelKelly (here), and even found some comments he made about how to change snippets of code to make use of GlobalIDs, which I think is the sort of pathway that I need here. Unfortunately, I wasn't able to get the code provided by Michael to work for me. I have attached a screenshot of the issue I can't seem to work out. The error occurs after a single image is downloaded to the specified directory.

Is there any way I can use the method provided by Esri (via ArcGIS Pro), or by @MichaelKelly, to download attachments for a specified record in a survey database? My coding skill is somewhere a little past beginner, but that's all more geared towards data science applications. I admit that I am fairly lost in this endeavor at this point and would really really appreciate some support and guidance here. Thank you all!
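One way to frame the per-submission problem: Survey123 repeat tables typically carry a `parentglobalid` field that points at the `globalid` of the row one level up, so any repeat row (and therefore its photos) can be traced back to the top-level submission it belongs to. Below is a minimal sketch of that walk, using plain dictionaries in place of real query results. The `root_submission` helper and the sample IDs are hypothetical, and the field names should be checked against your own service.

```python
def root_submission(global_id, parent_of):
    """Follow parent links upward until a row with no recorded parent is reached."""
    seen = set()
    current = global_id
    while current in parent_of:
        if current in seen:
            raise ValueError(f"cycle in parent links at {current!r}")
        seen.add(current)
        current = parent_of[current]
    return current


# Hypothetical data: child globalid -> parentglobalid, merged from every repeat table.
parent_of = {
    "photo-1": "antenna-1",
    "photo-2": "antenna-1",
    "antenna-1": "site-A",
    "photo-3": "site-B",
}

# Bucket each photo-bearing row under the submission at the top of its chain.
by_site = {}
for row_id in ("photo-1", "photo-2", "photo-3"):
    by_site.setdefault(root_submission(row_id, parent_of), []).append(row_id)

print(by_site)
```

With a mapping like `by_site` in hand, each site's attachments could be written to their own directory no matter how deeply the repeat that holds them is nested.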

Accepted Solutions

Sure! Here's the "safe to share" bits of the script that handle attachments.

    # modules
    from arcgis import GIS
    import requests
    import shutil
    import pandas as pd
    import numpy as np
    from datetime import datetime, timedelta
    import os

    # Set portal
    gis = GIS(profile='some_profile')

    # layers
    fl = gis.content.get('itemid')
    records = fl.layers[0]
    rel_a = fl.tables[0]
    rel_b = fl.tables[1]

    # filter statement
    where = "record_number LIKE '22-01%'"

    # Columns we want in export, in order
    # (placeholders; the real list must include 'RowID' and 'record_number', which are used below)
    export_cols = [
        'columns',
        'for',
        'export'
    ]

    # Prepare output dir
    out_dir = os.path.expanduser('~/Desktop/')
    export_name = f"records_{datetime.now().strftime('%Y%m%d')}"
    file = os.path.join(out_dir, f'{export_name}.rtf')

    # Query layer
    c_df = records.query(
        where,
        out_fields=export_cols,
        return_geometry=False
    ).sdf[export_cols]

    # Fill n/a values with empty strings
    c_df.fillna('', inplace=True)

    # Check if any of the records have attachments
    all_records = ','.join(["'" + r + "'" for r in c_df['RowID'].astype('str').to_list() if r])
    all_rel_b = rel_b.query(where=f"parentrowid in ({all_records})", return_ids_only=True)['objectIds']
    atts = rel_b.attachments.search(object_ids=all_rel_b)

    # If attachments are found, create dir for them
    has_atts = len(atts) > 0

    if has_atts:
        atts_dir = os.path.join(out_dir, export_name + '_attachments')
        try:
            os.mkdir(atts_dir)
        except FileExistsError:
            print('Attachment dir already exists')

    print(f'{len(c_df)} records to export.')

    ## Iterate over records and pull their attachments
    for _, c_row in c_df.iterrows():
        c = c_row.to_dict()

        # Related rows for this record; the code below also relies on an
        # 'ObjectID' column being present in the result
        n_df = rel_b.query(
            f"parentrowid = '{c['RowID']}'",
            out_fields=['rel_b_date', 'notes', 'interaction_type', 'parentrowid'],
            order_by_fields='rel_b_date ASC'
        ).sdf

        # Check if record has any attachments, create necessary subdirectory
        c_atts = [x for x in atts if x['PARENTOBJECTID'] in n_df['ObjectID'].to_list()]

        if len(c_atts) > 0:
            print('\tPulling attachments')
            atts_subdir = os.path.join(atts_dir, c['record_number'])
            try:
                os.mkdir(atts_subdir)
            except FileExistsError:
                print('\tAttachment subdirectory already exists.')

            for _, n_row in n_df.iterrows():
                n = n_row.to_dict()

                # Get specific attachments for rel_b entry
                n_atts = [x for x in atts if x['PARENTOBJECTID'] == n['ObjectID']]

                if len(n_atts) > 0:
                    # create rel_b-specific subdir; adjust for time zone
                    # (uses the 'rel_b_date' field requested in the query above)
                    n_atts_subdir = os.path.join(
                        atts_subdir,
                        (n['rel_b_date'] - timedelta(hours=6)).strftime('%d-%b-%Y_%H%M')
                    )
                    try:
                        os.mkdir(n_atts_subdir)
                    except FileExistsError:
                        print('\trel_b subdirectory already exists.')

                    for n_att in n_atts:
                        furl = n_att['DOWNLOAD_URL']
                        fname = n_att['NAME']
                        r = requests.get(furl)
                        # Stop on a failed request instead of saving an error page as an image
                        r.raise_for_status()
                        with open(os.path.join(n_atts_subdir, fname), 'wb') as f:
                            f.write(r.content)

    # Zip up attachments, remove source folder
    if has_atts:
        print('Zipping attachments')
        shutil.make_archive(atts_dir, 'zip', atts_dir)
        shutil.rmtree(atts_dir, ignore_errors=True)

    print('Done!')

Hopefully there's something in that you can use!
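The script above rescans the full `atts` list for every related row. On larger exports it may be cheaper to index the attachments once by `PARENTOBJECTID` and look them up per row. Here is a small sketch of that idea, with made-up dictionaries standing in for what `attachments.search` returned in the script. The `group_by_parent` helper and the sample values are hypothetical.

```python
from collections import defaultdict


def group_by_parent(attachments):
    """Index attachment records by the object ID of the row they belong to."""
    grouped = defaultdict(list)
    for att in attachments:
        grouped[att["PARENTOBJECTID"]].append(att)
    return dict(grouped)


# Hypothetical sample using the same keys the script reads.
atts = [
    {"PARENTOBJECTID": 1, "NAME": "a.jpg", "DOWNLOAD_URL": "https://example.com/a"},
    {"PARENTOBJECTID": 1, "NAME": "b.jpg", "DOWNLOAD_URL": "https://example.com/b"},
    {"PARENTOBJECTID": 7, "NAME": "c.jpg", "DOWNLOAD_URL": "https://example.com/c"},
]

atts_by_parent = group_by_parent(atts)
for parent_id, items in atts_by_parent.items():
    print(parent_id, [a["NAME"] for a in items])
```

Inside the loops, `atts_by_parent.get(n['ObjectID'], [])` would then take the place of the list comprehension that filters `atts`.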

Kendall County GIS

We have a script that does something like this. I can't share it in its entirety, but it loops through a set of items, then loops through each item's corresponding related table entries, where the attachments also happen to be.

For each entry with attachments, the script creates a sub-directory named for the entry, then downloads the attachments to that directory. At the very end, the script zips all the directories into a single archive. The folder structure looks like this:

- Export ZIP
  - Feature 1
    - Related entry A
      - attachment 1
      - attachment 2
      - attachment 3
    - Related entry B
      - attachment 4
  - Feature 2
    - Related entry C
      - attachment 5
      - attachment 6
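The layout described above can be sketched with the standard library alone. This is only an illustration, not the actual script: the `layout` dictionary, the temporary working directory, and the placeholder file contents are all assumptions standing in for real features, related entries, and downloaded attachments.

```python
import shutil
import tempfile
from pathlib import Path

# Hypothetical layout: feature -> related entry -> attachment file names.
layout = {
    "Feature 1": {
        "Related entry A": ["attachment 1", "attachment 2", "attachment 3"],
        "Related entry B": ["attachment 4"],
    },
    "Feature 2": {
        "Related entry C": ["attachment 5", "attachment 6"],
    },
}

work_dir = Path(tempfile.mkdtemp())
export_dir = work_dir / "export"

for feature, entries in layout.items():
    for entry, names in entries.items():
        entry_dir = export_dir / feature / entry
        entry_dir.mkdir(parents=True, exist_ok=True)
        for name in names:
            # Placeholder bytes stand in for the downloaded attachment content.
            (entry_dir / name).write_bytes(b"placeholder")

# Zip the whole tree into export.zip, then remove the unzipped copy.
zip_path = shutil.make_archive(str(export_dir), "zip", root_dir=str(export_dir))
shutil.rmtree(export_dir)
print(zip_path)
```

Creating directories with `parents=True, exist_ok=True` means a rerun does not fail on folders that already exist.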

It's very much tailored to our needs and the particular schema, but I could try to share some of the more generalized parts if you think it might help you.

Kendall County GIS

DM'd 😎

@jcarlson may I get a copy of your script too? 😁
