- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Online

- :

- ArcGIS Online Documents

- :

- Overwrite ArcGIS Online Feature Service using Trun...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

Overwrite ArcGIS Online Feature Service using Truncate and Append

Overwrite ArcGIS Online Feature Service using Truncate and Append

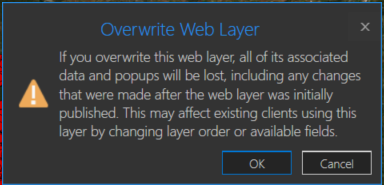

You may have a need to overwrite an ArcGIS Online hosted feature service due to feature and/or attribute updates. However, this could cause some data loss such as pop-ups, symbology changes, etc in the hosted feature service. For example, you will receive a warning about this when you try to overwrite a feature service in ArcGIS Pro:

One way around this is to use the ArcGIS API for Python. If you are the data owner, or Administrator, you can truncate the feature service, and then append data. This is essentially an overwrite of the feature service. The below script will do this by specifying a local feature class and the item id of the feature service you wish to update. The script will then execute the following steps:

- export the feature class to a temporary File Geodatabase

- zip the File Geodatabase

- upload the zipped File Geodatabase to AGOL

- truncate the feature service

- append the zipped File Geodatabase to the feature service

- delete the uploaded zipped File Geodatabase in AGOL

- delete the local zipped File Geodatabase

- delete the temporary File Geodatabase

Here is an explanation of the script variables:

- username = ArcGIS Online username

- password = ArcGIS Online username password

- fc = path to feature class used to update feature service

- fsItemId = the item id of the ArcGIS Online feature service

- featureService = True if updating a Feature Service, False if updating a Hosted Table

- hostedTable = True is updating a Hosted Table, False if updating a Feature Service

- layerIndex = feature service layer index

- disableSync = True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

- updateSchema = True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

- upsert = True will not truncate the feature service, requires a field with unique values

- uniqueField = Field that contains unique values

Note: For this script to work, the field names in the feature class must match the field names in the hosted feature service. The hosted feature service can have additional fields, though.

Video

Script

import arcpy, os, time, uuid, arcgis

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Variables

username = "jskinner_rats" # AGOL Username

password = "********" # AGOL Password

fc = r"c:\DB Connections\GIS@PLANNING.sde\GIS.Parcels" # Path to Feature Class

fsItemId = "a0ad52a76ded483b82c3943321f76f5a" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True is updating a Hosted Table, False if updating a Feature Service

layerIndex = 0 # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = True # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

upsert = True # True will not truncate the feature service, requires a field with unique values

uniqueField = 'PIN' # Field that contains unique values

# Environment Variables

arcpy.env.overwriteOutput = True

arcpy.env.preserveGlobalIds = True

def zipDir(dirPath, zipPath):

'''

Zips File Geodatabase

Args:

dirPath: (string) path to File Geodatabase

zipPath: (string) path to where File Geodatabase zip file will be created

Returns:

'''

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

if 'lock' not in file:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

def updateFeatureServiceSchema():

'''

Updates the hosted feature service schema

Returns:

'''

# Get required fields to skip

requiredFields = [field.name for field in arcpy.ListFields(fc) if field.required]

# Get feature service fields

print("Get feature service fields")

featureServiceFields = {}

for field in fLyr.manager.properties.fields:

if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:

featureServiceFields[field.name] = field.type

# Get feature class/table fields

print("Get feature class/table fields")

featureClassFields = {}

arcpy.env.workspace = gdb

if hostedTable == True:

for field in arcpy.ListFields(fc):

if field.name not in requiredFields:

featureClassFields[field.name] = field.type

else:

for field in arcpy.ListFields(fc):

if field.name not in requiredFields:

featureClassFields[field.name] = field.type

minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)

addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

# Delete removed fields

if len(minusSchemaDiff) > 0:

print("Deleting removed fields")

for key in minusSchemaDiff:

print(f"\tDeleting field {key}")

remove_field = {

"name": key,

"type": featureServiceFields[key]

}

update_dict = {"fields": [remove_field]}

fLyr.manager.delete_from_definition(update_dict)

# Create additional fields

fieldTypeDict = {}

fieldTypeDict['Date'] = 'esriFieldTypeDate'

fieldTypeDict['Double'] = 'esriFieldTypeDouble'

fieldTypeDict['Integer'] = 'esriFieldTypeInteger'

fieldTypeDict['String'] = 'esriFieldTypeString'

if len(addSchemaDiff) > 0:

print("Adding additional fields")

for key in addSchemaDiff:

print(f"\tAdding field {key}")

if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]],

"length": [field.length for field in arcpy.ListFields(fc, key)][0]

}

else:

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]]

}

update_dict = {"fields": [new_field]}

fLyr.manager.add_to_definition(update_dict)

def divide_chunks(l, n):

'''

Args:

l: (list) list of unique IDs for features that have been deleted

n: (integer) number to iterate by

Returns:

'''

# looping till length l

for i in range(0, len(l), n):

yield l[i:i + n]

if __name__ == "__main__":

# Start Timer

startTime = time.time()

# Create GIS object

print("Connecting to AGOL")

gis = GIS("https://www.arcgis.com", username, password)

# Create UUID variable for GDB

gdbId = str(uuid.uuid1())

print("Creating temporary File Geodatabase")

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

# Export featureService classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print(f"Exporting {fcName} to temp FGD")

if featureService == True:

arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)

elif hostedTable == True:

arcpy.conversion.TableToTable(fc, gdb, fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(gdb, gdb + ".zip")

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

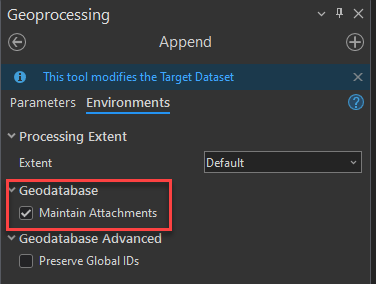

if arcgis.__version__ < '2.4.0':

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

elif arcgis.__version__ >= '2.4.0':

root_folder = gis.content.folders.get()

fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

# Get featureService/hostedTable layer

serviceLayer = gis.content.get(fsItemId)

if featureService == True:

fLyr = serviceLayer.layers[layerIndex]

elif hostedTable == True:

fLyr = serviceLayer.tables[layerIndex]

# Append features from featureService class/hostedTable

if upsert == True:

# Check if unique field has index

indexedFields = []

for index in fLyr.manager.properties['indexes']:

indexedFields.append(index['fields'])

if uniqueField not in indexedFields:

print(f"{uniqueField} does not have unique index; creating")

fLyr.manager.add_to_definition({

"indexes": [

{

"fields": f"{uniqueField}",

"isUnique": True,

"description": "Unique field for upsert"

}

]

})

# Schema Sync

if updateSchema == True:

updateFeatureServiceSchema()

# Append features

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=True, upsert_matching_field=uniqueField, field_mappings=[])

# Delete features that have been removed from source

# Get list of unique field for feature class and feature service

entGDBList = [row[0] for row in arcpy.da.SearchCursor(fc, [uniqueField])]

fsList = [row[0] for row in arcpy.da.SearchCursor(fLyr.url, [uniqueField])]

s = set(entGDBList)

differences = [x for x in fsList if x not in s]

# Delete features in AGOL service that no longer exist

if len(differences) > 0:

print('Deleting differences')

if len(differences) == 1:

if type(differences[0]) == str:

features = fLyr.query(where=f"{uniqueField} = '{differences[0]}'")

else:

features = fLyr.query(where=f"{uniqueField} = {differences[0]}")

fLyr.edit_features(deletes=features)

else:

chunkList = list(divide_chunks(differences, 1000))

for list in chunkList:

chunkTuple = tuple(list)

features = fLyr.query(where=f'{uniqueField} IN {chunkTuple}')

fLyr.edit_features(deletes=features)

else:

# Schema Sync

if updateSchema == True:

updateFeatureServiceSchema()

# Truncate Feature Service

# If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset

flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)

hasViews = False

try:

if flc.properties.hasViews == True:

print("Feature Service has view(s)")

hasViews = True

except:

hasViews = False

if hasViews == True or disableSync == False:

# Get Min OBJECTID

minOID = fLyr.query(out_statistics=[

{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])

minOBJECTID = minOID.features[0].attributes['MINOID']

# Get Max OBJECTID

maxOID = fLyr.query(out_statistics=[

{"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])

maxOBJECTID = maxOID.features[0].attributes['MAXOID']

# If more than 2,000 features, delete in 2000 increments

print("Deleting features")

if maxOBJECTID != None and minOBJECTID != None:

if (maxOBJECTID - minOBJECTID) > 2000:

x = minOBJECTID

y = x + 1999

while x < maxOBJECTID:

query = f"OBJECTID >= {x} AND OBJECTID <= {y}"

fLyr.delete_features(where=query)

x += 2000

y += 2000

# Else if less than 2,000 features, delete all

else:

print("Deleting features")

fLyr.delete_features(where="1=1")

# If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset

elif hasViews == False and disableSync == True:

if flc.properties.syncEnabled == True:

print("Disabling Sync")

properties = flc.properties.capabilities

updateDict = {"capabilities": "Query", "syncEnabled": False}

flc.manager.update_definition(updateDict)

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Enabling Sync")

updateDict = {"capabilities": properties, "syncEnabled": True}

flc.manager.update_definition(updateDict)

else:

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

os.remove(gdb + ".zip")

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))

Updates

3/3/2023: Added the ability to add/remove fields from feature service keeping schemas in-sync. For example, if a field(s) is added/removed from the feature class, it will also add/remove the field(s) from the feature service

10/17/2024: Added upsert functionality. Deleted features/rows from the source feature class/table will also be deleted from feature service. This is helpful if you do not want your feature service truncated at all. In the event of a failed append, the feature service will still contain data. A prerequisite for this is for the data to have a unique id field.

Why is the delete_features() function used if there are views associated with the FeaterLayerCollection? I have a similar workflow, only I call truncate() and then append() even though there are views associated with the FeatureLayerCollection. Is it possible that this could cause problems?

@nm_gridmetrics unless there were changes I'm not aware of, previously you could not execute truncate on a service that contained views. This may have changed, though. However, it should not matter whether you are using delete or truncate.

I use truncate and append to update layers in my organization that I own and some that I don't. They all have views. 1 issue I have is that maps using the views sometimes won't load in field maps. I simply re-add the layer to the map and save does the trick. Recently this tool stopped working from scheduler and could only be run Manually from pro for a week, but started working again yesterday

I've managed to get this to work as a standalone script which is great. I would however love to be able to package this up as a tool to use in a modelbuilder flow. Do you know if this would be possible?

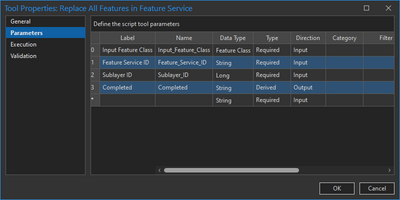

@RobertEisler I have modified the script as per below to include input and output parameters (Lines 10-12 & 144) which have replaced the variables that you edit at the beginning of the script.

(NOTE: I have also implemented the use of keyring to parse the login details for ArcGIS Online which means I don't need to store our password in the script: Lines 28-30)

import arcpy, os, time, uuid

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Overwrite Output

arcpy.env.overwriteOutput = True

# Parameters

infc = arcpy.GetParameter(0)

outfsID = arcpy.GetParameter(1)

sublayerID = arcpy.GetParameter(2)

# Variables

fc = str(infc) # Path to Feature Class

fsItemId = outfsID # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True is updating a Hosted Table, False if updating a Feature Service

layerIndex = sublayerID # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

# Start Timer

startTime = time.time()

# Create GIS object

print("Connecting to AGOL")

### Access the stored password with keyring and sign into the GIS # https://community.esri.com/t5/arcgis-online-blog/connect-to-the-gis-in-python-scripts-without/ba-p/889867

import keyring

pw = keyring.get_password("ArcGISOnline", "Username")

gis = GIS("https://fpcwa.maps.arcgis.com", "Username", pw)

print("Connected to the GIS")

# Create UUID variable for GDB

gdbId = str(uuid.uuid1())

# Function to Zip FGD

def zipDir(dirPath, zipPath):

'''Zip File Geodatabase'''

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

if 'lock' not in file:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

print("Creating temporary File Geodatabase")

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

# Export featureService classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print(f"Exporting {fcName} to temp FGD")

if featureService == True:

arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)

elif hostedTable == True:

arcpy.conversion.TableToTable(fc, gdb, fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(gdb, gdb + ".zip")

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

# Get featureService/hostedTable layer

serviceLayer = gis.content.get(fsItemId)

if featureService == True:

fLyr = serviceLayer.layers[layerIndex]

elif hostedTable == True:

fLyr = serviceLayer.tables[layerIndex]

# Truncate Feature Service

# If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset

flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)

try:

if flc.properties.hasViews == True:

print("Feature Service has view(s)")

hasViews = True

except:

hasViews = False

if hasViews == True or disableSync == False:

# Get Min OBJECTID

minOID = fLyr.query(out_statistics=[{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])

minOBJECTID = minOID.features[0].attributes['MINOID']

# Get Max OBJECTID

maxOID = fLyr.query(out_statistics=[{"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])

maxOBJECTID = maxOID.features[0].attributes['MAXOID']

# If more than 2,000 features, delete in 2000 increments

print("Deleting features")

if (maxOBJECTID - minOBJECTID) > 2000:

x = minOBJECTID

y = x + 1999

while x < maxOBJECTID:

query = f"OBJECTID >= {x} AND OBJECTID <= {y}"

fLyr.delete_features(where=query)

x += 2000

y += 2000

# Else if less than 2,000 features, delete all

else:

print("Deleting features")

fLyr.delete_features(where="1=1")

# If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset

elif hasViews == False and disableSync == True:

if flc.properties.syncEnabled == True:

print("Disabling Sync")

properties = flc.properties.capabilities

updateDict = {"capabilities": "Query", "syncEnabled": False}

flc.manager.update_definition(updateDict)

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Enabling Sync")

updateDict = {"capabilities": properties, "syncEnabled": True}

flc.manager.update_definition(updateDict)

else:

print("Truncating Feature Service")

fLyr.manager.truncate()

# Append features from featureService class/hostedTable

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

os.remove(gdb + ".zip")

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))

CompleteVariable = "Complete"

arcpy.SetParameter(3, CompleteVariable)

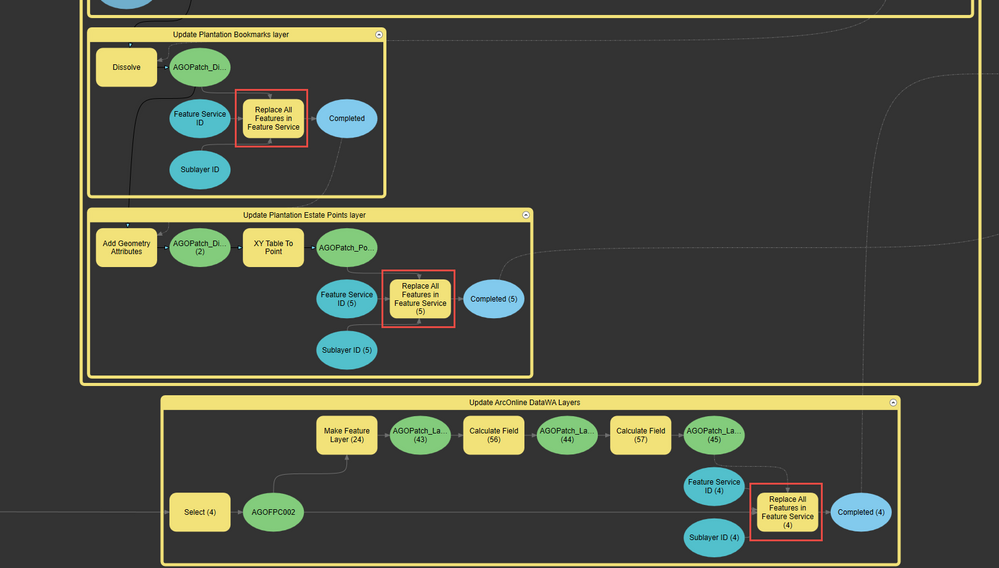

By doing this, I can then save the script as a Python Script Tool in a Toolbox which you can load into a Model and parse data in as required (feature class, feature service ID, sub layer ID) and get a "Complete" variable output (Line 144) so you know when it's done (can use as a precondition for further steps). This means you can also use the same script over and over as per my demo below.

@LindsayRaabe_FPCWA That's absolutely perfect, thank you so much! Just tested it and it works spot on. I've been looking for a modelbuilder method to overwrite online layers/tables that didn't involve the Delete Rows tool for ages and this is a great solution. I had a go at modifying the original script to use as a tool myself but I haven't quite wrapped my head around setting parameters in tools yet, this has also helped me with that so thank you again.

@RobertEisler glad it worked for you and your needs. Always good when you can find exactly what you're looking for. I find I'm usually cobbling together bits and pieces from all over the place to achieve an outcome. Slowly learning what works better as I find other examples of things I've been doing a certain easy for a while.

Thank you very much, this is exactly what we're looking for. One question. How do we get the password to she as asterisks? Thank you

@justin_peterson I just replaced the password with asterisks so it is hidden in the above example. You will need to enter the password in clear text. You could use base64 to encode the password, and enter that for the password variable. You would then need to decode the string to use it successfully within the script. This will prevent should-surfers from viewing your password when the script is open.

Why does the script fail on the Appending features step? Error code will be listed below. Thanks

Connecting to AGOL

Creating temporary File Geodatabase

Exporting P_Pipes to temp FGD

Zipping temp FGD

Uploading File Geodatabase

Disabling Sync

Truncating Feature Service

Enabling Sync

Get feature service fields

Get feature class/table fields

Deleting removed fields

Deleting field GlobalID

Appending features

Traceback (most recent call last):

File "C:\Users\jnmiller\Desktop\TruncateAppend.py", line 185, in <module>

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

File "C:\Program Files\ArcGIS\Server\framework\runtime\ArcGIS\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py", line 2384, in append

return self._check_append_status(res, return_messages)

File "C:\Program Files\ArcGIS\Server\framework\runtime\ArcGIS\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py", line 2395, in _check_append_status

sres = self._con.get(path=surl, params={"f": "json"})

File "C:\Program Files\ArcGIS\Server\framework\runtime\ArcGIS\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 763, in get

return self._handle_response(

File "C:\Program Files\ArcGIS\Server\framework\runtime\ArcGIS\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 900, in _handle_response

self._handle_json_error(data["error"], errorcode)

File "C:\Program Files\ArcGIS\Server\framework\runtime\ArcGIS\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 923, in _handle_json_error

raise Exception(errormessage)

Exception: Unknown Error

(Error Code: 500)

>>>

@neomapper it appears to be an unknown error. How many features are you trying to append?

We have 2 layers with pdf attachments. Our mainline pipe layer has approx. 20k segments with 600 attachments and our service line layer has roughly 32k segments with around 8k attachments. Is there a workaround when attachments are associated?

Thank you so much for the tool, but I have the same error a neomapper

Appending features

Traceback (most recent call last):

File "C:\Users\jmorales\AppData\Local\Temp\7zO8561FC75\TruncateAppend.py", line 210, in <module>

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", source_table_name=fc, upsert=False, field_mappings=[])

File "C:\Program Files\ArcGIS\Pro_JM\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py", line 2839, in append

return self._check_append_status(res, return_messages)

File "C:\Program Files\ArcGIS\Pro_JM\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py", line 2848, in _check_append_status

sres = self._con.get(path=surl, params={"f": "json"})

File "C:\Program Files\ArcGIS\Pro_JM\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 871, in get

return self._handle_response(

File "C:\Program Files\ArcGIS\Pro_JM\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 1008, in _handle_response

self._handle_json_error(data["error"], errorcode)

File "C:\Program Files\ArcGIS\Pro_JM\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 1031, in _handle_json_error

raise Exception(errormessage)

Exception: Unknown Error

(Error Code: 500)Is there a way to fix it?

Can both of you share the feature service you are trying to update to an AGOL Group, and then invite my user account, jskinner_CountySandbox, to this Group?

Hi All. I was using this python solution while I couldn't use the built in Truncate Table geoprocessing tool in ArcGIS Pro. I have received the below information that confirms it does work within the following parameters:

Esri Inc. have provided a response to this case. During in-house testing, Esri Inc. observed the following:

- Published a hosted feature layer to ArcGIS Online via ArcGIS Pro

- Accessed its item details page and enabled "Editing" privileges

- Accessed the admin JSON of the hosted feature layer > Update Definition > confirmed that "supportsTruncate : false"

- Now accessed the child layer > Update Definition > observed that "supportsTruncate : true" < I can't seem to access the Update Definition option for the child layer so couldn't confirm this

- In ArcGIS Pro, added it to a new map and was able to execute "Truncate Table" tool over it without any issues.

- In ArcGIS Pro the above tool (either as a model or geoprocessing) fails to execute if the "Enable sync" privileges are enabled for the hosted feature layer.

They provided a video of the testing steps which I have attached to this email (There is no sound on this video)

Check and confirm if the "Editing" privileges are enabled and "Sync" is disabled for the end user's layer in ArcGIS Online.

Additionally, Esri Inc. found the following bug details:

- BUG-000155843 - Using the REST API Update Definition to set "supportsTruncate : false" results in a successful JSON response, however the setting is unchanged

- Public status - New

To summarise, it doesn't matter what the supportsTruncate line is set to in the service definition, the tool in ArcGIS Pro will work as long as the data is not enabled for offline use.

Where I was using the early discussed python method of truncate/append and have replaced with the GP tools Truncate Table and Append, it has sped up the model as it's much faster to run. Unfortunately, where I update layers which are offline enabled, I still have to use the python solution, but at least the option is there! Now only 2 out of 5 sets of data use the python solution.

This is great and I've been using it, however the hosted feature layer size is growing every time we truncate and append the same amount of data and consuming a lot of my credits. How to avoid this and have the layer be the same size after every update? It's not even enabled for editing or offline use or any of that. Thoughts?

Also, is there a way to using the new UPSERT function of PRO 3.1, via Python to avoid replacing all rows, and only update the ones w/ changes? That would be beneficial to ESRI to as it would reduce the stress on their servers.

Pretty straightforward. I have the code updating our parcel polygon layer on AGOL. However, there is one big problem. After running this script, I can no longer use the search tools against the updated data in Experience Builder.

If I update the feature using the Overwrite Web Layer tool from ArcPro, the searches work great. If I use the script, the feature will update, but the service definition will not and the searches will not work anymore. To fix the issue, I have to run the Overwrite Web Layer tool which defeats the purpose.

Any help would be greatly appreciated.

@JoabelBarbieri with later releases of ArcGIS Pro, and updates made to arcpy, arcpy's Append tool is just as performant as uploading a File Geodatabase and appending the records from there. So, you could use this in the script instead.

You can also change the upsert=False to True in the Append function. If you do, you will also need to add a new parameter, upsert_matching_field, and set this to a unique field. That field must also have a unique index applied to it.

As for the size doubling, I could not reproduce this. I would test on another service to see if the same occurs.

@JulioGarrido I could not reproduce this. After running the script, an Experience Builder application that contained a search widget configured to that later worked successfully. I would create a test application to see if it's reproducible in the new application as well.

UPDATE:

I have resolved the issue. The problem was I had originally staged my hosted table with an Excel upload to AGOL. My script was trying to update from the uploaded zipped FGDB, and because that was not the same source type and name, it was failing. What is strange is that it still appended 1,000 records though. When I created a new hosted table directly in AGOL, the script worked as expected.

Original:

@JakeSkinner - thank you for the helpful script!

I've repurposed the core of this script to also take Excel tables, allow for dynamic field mapping, and added a rotating log file handler. All of which are working fine, but I am seeing an issue with the append process. After truncating all of the records in my target hosted table, the append begins to run, but fails. I have used the traceback module to get the exception, and it looks to be throwing an error after successfully appending 1,000 records. I have about 7500 records in my table so unsure where to look.

I've already updated the maxRecordCount values of the service definition to 20000 so there should be no issues reaching that cap. The uploaded zipped FGDB containing the output from the ExcelToTable conversion is only 1.5 MB so unsure what else the issue could be.

Great script! This helped me get around an issue of overwriting a hosted layer that had a view on it. Nice job!

This has already been asked, but I didn't see an answer or even a solution given, so I'm going to ask again. I'm trying to update my features on Portal and the append function fails. Is there an equivalent function (or methodology) I can use for features hosted in Portal, utilizing Enterprise 10.9.1?

Thank you for sharing!

Edit:

I should be a bit more specific, the append does not work in Portal if you're trying to utilize a FGDB. But if you don't have to worry about your fields getting truncated when you export to a shapefile, or if you don't mind setting up the field mapping, the posted script works great utilizing a shapefile, with some modifications. So, to be more specific, is there a way to append to a feature service utilizing a FGDB? Thanks!

@AnthonyAtkins2 yes, you can simply use ArcGIS Pro's Append tool.

Hi @JakeSkinner , thanks for your work and dedication on this post!

Question for you -- I'm trying to implement this script to update a parcel geometry layer and 6 related tables. I understand how to update the tables and can do so successfully, but when trying to update the geometry layer, I get an error `Unable to truncate layer data. There are no primary or candidate keys in the referenced table that match the referencing column list in the foreign key Could not create constraint or index. See previous errors. (Error Code: 400)`.

I then realized we will want to be eventually creating views from this layer so I made a test view and the script then ran successfully. However, as expected from the script, the ObjectID's did not reset. Is there any way to use views and allow the ObjectID's to reset? We would like to avoid having our parcels increment ObjectIDs.

@EthanSmith2 are you working with an ArcGIS Enterprise hosted feature service, or AGOL hosted feature service?

And, what version of the arcgis api for python are you using?

Jake:

Thank you for providing this script. It is very helpful. However, I am having (1) minor issue. When I set the disableSync variable to False, I get an error that says cannot disable sync on the feature layer due to Outstanding Replicas. However, If I leave it as false, the script runs properly, but does not appear to properly update the data from the ArcGIS Pro Feature Class.

Any suggestions?

@ModernElectric you can try to unregister the replicas. Ex:

import arcgis

from arcgis.gis import GIS

# Variables

username = 'jskinner_rats'

password = '********'

itemID = 'af9232b68fd247148239731c6a3fcd58'

gis = GIS('https://www.arcgis.com', username, password)

lyr = gis.content.get(itemID)

flc = arcgis.features.FeatureLayerCollection(lyr.url, gis)

replicas = flc.replicas.get_list()

print(replicas)

# Unregister replicas

for replica in replicas:

flc.replicas.unregister(replica['replicaID'])Jake: This appears to fix the problem.

Regarding the rest of the script:

It works on a Polyline feature class/feature service. However, on a point feature class/feature service with and without attachments, I get an Exception: Unknown Error (Error Code: 500) at Line 190 (Appending Features). The Field Mapping has not changed.

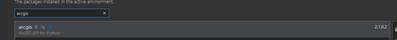

@ModernElectric it appears the ArcGIS API for Python will not work with attachments. I believe the reason is that is maintains Global IDs. However, you can use ArcGIS Pro's Truncate and Append tools to perform the same steps. In the Append tool's environment variables, Maintain Attachments is automatically checked:

Note: this tool will fail if you check the option Maintain Attachments and Preserve Global IDs. It has to be one or the other.

BINGO!! I went thru and recreated the Feature Class in ArcGIS Pro without the attachments, reshared it to AGOL and updated the script and it updated without crashing, I assume because I did not have an attachment table associated with the FC.

However, the attachment(s) are a key component to the feature(s). Is there a workaround to be able to include these in the script update process?

@ModernElectric create a model using the GP tools Truncate and Append. If needed, you can schedule the model to run on a scheduled basis, or export the model to a script, and execute the script on a scheduled basis.

Wanted to provide a follow-up and see if you can identify what I am still doing wrong.

First part of the batch file is to unregister the replicas. This works fine.

Next is to run the script you provided and update the feature layer from the GDB Feature Class (FC without attachments). DisableSyc = True. The script runs fine without crashing and it appears the Feature Layer is updated in AGOL.

However, here is the new issue I am having: These feature layers are used in ArcGIS Workforce and have editing abilities. When the project in workforce is opened, or attempted to be opened right after running the update script(s), it says the Feature Layer cannot be loaded. I have to go back in and remove and readd the layer and save the web map for it to work. Any ideas?

Update: I have been doing a bunch of trial and error with this issue and havent been able to figure out why its happening. Specifically, the Field Worker project on an android phone/tablet is where I am running into issues. After running the script, doing the Sync feature on the app, does not reload the fresh data. Backing out and doing a Reload of the project, gives the error.

Appreciate ALL of your help.

@JakeSkinner apologies for the long delay -- I am running 2.1.0.2 of the arcgis package in python. It is an AGOL hosted feature service.

Hi Jake,

Thank you for posting this and for your continued support in the comments. We have been using this script for some time now and it has been a life saver. We are now trying to apply this code to a group layer. I'm curious about your thoughts on modifying the code to iterate truncate/append to four sublayers, or if you recommend running four distinct instances of the code. All four sublayers' source feature class are in the same geodatabase.

How would you suggest tackling truncate/append on four layers rather than one?

Thank you!

Hi @ashleyf_lcpud,

You could create a dictionary of the feature class paths and the layer index number for which they should update. Then, iterate through the dictionary. Ex:

import arcpy, os, time, uuid

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Overwrite Output

arcpy.env.overwriteOutput = True

# Variables

username = "jskinner_rats" # AGOL Username

password = "********" # AGOL Password

fc1 = r"C:\Projects\GeoNET\GeoNET.gdb\GPGGA" # Path to Feature Class 1

fc2 = r"C:\projects\GeoNET\GeoNET.gdb\FACILITY_LOCATIONS" # Path to Feature Class 2

fsItemId = "12eefd574f64456a8f82edee35084a6f" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True is updating a Hosted Table, False if updating a Feature Service

layerIndex1 = 0 # Layer Index 1

layerIndex2 = 1 # Layer Index 2

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = True # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

# Start Timer

startTime = time.time()

# Create dictionary

dataDict = {}

dataDict[fc1] = layerIndex1

dataDict[fc2] = layerIndex2

# Function to Zip FGD

def zipDir(dirPath, zipPath):

'''Zip File Geodatabase'''

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

if 'lock' not in file:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

# Create GIS object

print("Connecting to AGOL")

gis = GIS("https://www.arcgis.com", username, password)

for fc, layerIndex in dataDict.items():

# Create UUID variable for GDB

gdbId = str(uuid.uuid1())

print("Creating temporary File Geodatabase")

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

# Export featureService classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print(f"Exporting {fcName} to temp FGD")

if featureService == True:

arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)

elif hostedTable == True:

arcpy.conversion.TableToTable(fc, gdb, fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(gdb, gdb + ".zip")

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

# Get featureService/hostedTable layer

serviceLayer = gis.content.get(fsItemId)

if featureService == True:

fLyr = serviceLayer.layers[layerIndex]

elif hostedTable == True:

fLyr = serviceLayer.tables[layerIndex]

# Truncate Feature Service

# If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset

flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)

try:

if flc.properties.hasViews == True:

print("Feature Service has view(s)")

hasViews = True

except:

hasViews = False

if hasViews == True or disableSync == False:

# Get Min OBJECTID

minOID = fLyr.query(out_statistics=[{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])

minOBJECTID = minOID.features[0].attributes['MINOID']

# Get Max OBJECTID

maxOID = fLyr.query(out_statistics=[{"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])

maxOBJECTID = maxOID.features[0].attributes['MAXOID']

# If more than 2,000 features, delete in 2000 increments

print("Deleting features")

if (maxOBJECTID - minOBJECTID) > 2000:

x = minOBJECTID

y = x + 1999

while x < maxOBJECTID:

query = f"OBJECTID >= {x} AND OBJECTID <= {y}"

fLyr.delete_features(where=query)

x += 2000

y += 2000

# Else if less than 2,000 features, delete all

else:

print("Deleting features")

fLyr.delete_features(where="1=1")

# If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset

elif hasViews == False and disableSync == True:

if flc.properties.syncEnabled == True:

print("Disabling Sync")

properties = flc.properties.capabilities

updateDict = {"capabilities": "Query", "syncEnabled": False}

flc.manager.update_definition(updateDict)

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Enabling Sync")

updateDict = {"capabilities": properties, "syncEnabled": True}

flc.manager.update_definition(updateDict)

else:

print("Truncating Feature Service")

fLyr.manager.truncate()

# Schema Sync

if updateSchema == True:

# Get feature service fields

print("Get feature service fields")

featureServiceFields = {}

for field in fLyr.manager.properties.fields:

if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:

featureServiceFields[field.name] = field.type

# Get feature class/table fields

print("Get feature class/table fields")

featureClassFields = {}

arcpy.env.workspace = gdb

if hostedTable == True:

for field in arcpy.ListFields(gdbTable):

if field.type != 'OID' and field.type != 'Geometry':

featureClassFields[field.name] = field.type

else:

for field in arcpy.ListFields(fc):

if field.type != 'OID' and field.type != 'Geometry' and 'Shape_' not in field.name:

featureClassFields[field.name] = field.type

minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)

addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

# Delete removed fields

if len(minusSchemaDiff) > 0:

print("Deleting removed fields")

for key in minusSchemaDiff:

print(f"\tDeleting field {key}")

remove_field = {

"name": key,

"type": featureServiceFields[key]

}

update_dict = {"fields": [remove_field]}

fLyr.manager.delete_from_definition(update_dict)

# Create additional fields

fieldTypeDict = {}

fieldTypeDict['Date'] = 'esriFieldTypeDate'

fieldTypeDict['Double'] = 'esriFieldTypeDouble'

fieldTypeDict['Integer'] = 'esriFieldTypeInteger'

fieldTypeDict['String'] = 'esriFieldTypeString'

if len(addSchemaDiff) > 0:

print("Adding additional fields")

for key in addSchemaDiff:

print(f"\tAdding field {key}")

if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]],

"length": [field.length for field in arcpy.ListFields(fc, key)][0]

}

else:

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]]

}

update_dict = {"fields": [new_field]}

fLyr.manager.add_to_definition(update_dict)

# Append features from featureService class/hostedTable

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

os.remove(gdb + ".zip")

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))@JakeSkinner , wow, thank you! This is exactly what we were looking for! This works seamlessly. Thank you a ton.

@JakeSkinner thank you so much for this wonderful script! I was wondering if I could get some help with a few snags I have run into.

The script runs well until line 84 where it retrieves the minimum and maximum object id:

Exception Traceback (most recent call last) In [1]: Line 84: minOID = fLyr.query(out_statistics=[{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}]) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py, in query: Line 2263: return self._query(url, params, raw=as_raw) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py, in _query: Line 3673: raise queryException File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\layer.py, in _query: Line 3626: result = self._con.post( File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in post: Line 1524: return self._handle_response( File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in _handle_response: Line 1000: self._handle_json_error(data["error"], errorcode) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in _handle_json_error: Line 1023: raise Exception(errormessage) Exception: Field name [OBJECTID] does not exist. Did you mean [objectid]? (Error Code: 500)

The local layer's object id field is all caps like in the script, but the hosted layer is all lowercase (objectid) with the alias still uppercase (seems like the field name was changed to lowercase when it was uploaded to the portal). Changing OBJECTID in lines 85 and 88 to lowercase allows the script to continue but throws another error at line 157 when it attempts to delete fields from the service definition. After successfully deleting two fields it errors out, stating it cannot find the field name (I'm guessing objectid?):

Exception Traceback (most recent call last) In [11]: Line 157: fLyr.manager.delete_from_definition(update_dict) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\managers.py, in delete_from_definition: Line 3203: res = self._con.post(u_url, params) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in post: Line 1524: return self._handle_response( File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in _handle_response: Line 1000: self._handle_json_error(data["error"], errorcode) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py, in _handle_json_error: Line 1023: raise Exception(errormessage) Exception: ERROR: Field name not found. (Error Code: 500)

Do you have any tips on how I can tweak this to get it working? I'm on ArcGIS Pro 3.2.0 and my organization is on Enterprise 11.1.

Thanks for the help!

@BBarbs @JakeSkinner I would recommend replacing the explicit references to OBJECTID with the feature layer property, objectIdField. That will ensure you are using the correct field for that particular feature layer, regardless of what the actual name is.

@PeterKnoop is correct, I should have used the objectIdField property for the layer. The code above has been updated to use this. Give that a try and see if you get the same error.

@JakeSkinner I have been trying to get the multi-layer script you added to work, but it only runs the first layer in the list. I have 8 total that I need to schedule to update. I have them listed as fc1 - fc8 with the path to their location. I have layer indexes created for each one, zero to 7, and I have the dataDict set equal to the layer indexes. It seems to be running the first layer, then thinks it is finished. Is there something else I need to add to make it loop to update all 8 layers?

Any advice would be appreciated.

@MelissaJohnson can you share the service to a Group and invite my AGOL account, jskinner_rats?

@JakeSkinner You should have received an invite. Thanks for the quick response!

@MelissaJohnson I downloaded the OpenGov feature serivce, and then re-published to my Org. The below code work for me to update each layer in the feature service.

import arcpy, os, time, uuid

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Overwrite Output

arcpy.env.overwriteOutput = True

# Variables

username = "jskinner_rats"

password = "*******"

fc1 = r"c:\projects\GeoNET\GeoNET.gdb\AddressPoints"

fc2 = r"C:\projects\GeoNET\GeoNET.gdb\Streets"

fc3 = r"c:\projects\GeoNET\GeoNET.gdb\Parcels"

fc4 = r"c:\projects\GeoNET\GeoNET.gdb\UrbanBoundaryParcels"

fc5 = r"c:\projects\GeoNET\GeoNET.gdb\WaterDistricts"

fc6 = r"c:\projects\GeoNET\GeoNET.gdb\CityLimits"

fc7 = r"c:\projects\GeoNET\GeoNET.gdb\FireDistricts"

fc8 = r"c:\projects\GeoNET\GeoNET.gdb\SanitarySewerBasinsJC"

fc9 = r"c:\projects\GeoNET\GeoNET.gdb\FloodZones"

fsItemId = "1fe9a4e285af4c9385bb1071660a8d0c"

featureService = True

hostedTable = False

layerIndex1 = 0

layerIndex2 = 1

layerIndex3 = 2

layerIndex4 = 3

layerIndex5 = 4

layerIndex6 = 5

layerIndex7 = 6

layerIndex8 = 7

layerIndex9 = 8

disableSync = True

updateSchema = True

# Start Timer

startTime = time.time()

# Create dictionary

dataDict = {}

dataDict[fc1] = layerIndex1

dataDict[fc2] = layerIndex2

dataDict[fc3] = layerIndex3

dataDict[fc4] = layerIndex4

dataDict[fc5] = layerIndex5

dataDict[fc6] = layerIndex6

dataDict[fc7] = layerIndex7

dataDict[fc8] = layerIndex8

dataDict[fc9] = layerIndex9

# Function to Zip FGD

def zipDir(dirPath, zipPath):

'''Zip File Geodatabase'''

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

if 'lock' not in file:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

# Create GIS object

print("Connecting to AGOL")

gis = GIS("https://www.arcgis.com", username, password)

for fc, layerIndex in dataDict.items():

# Create UUID variable for GDB

gdbId = str(uuid.uuid1())

print("\n=========================\nCreating temporary File Geodatabase")

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

# Export featureService classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print(f"Exporting {fcName} to temp FGD")

if featureService == True:

arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)

elif hostedTable == True:

arcpy.conversion.TableToTable(fc, gdb, fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(gdb, gdb + ".zip")

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

fgd_item = gis.content.add(item_properties=fgd_properties, data=gdb + ".zip")

# Get featureService/hostedTable layer

serviceLayer = gis.content.get(fsItemId)

if featureService == True:

fLyr = serviceLayer.layers[layerIndex]

elif hostedTable == True:

fLyr = serviceLayer.tables[layerIndex]

# Truncate Feature Service

# If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset

flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)

try:

if flc.properties.hasViews == True:

print("Feature Service has view(s)")

hasViews = True

except:

hasViews = False

if hasViews == True or disableSync == False:

# Get Min OBJECTID

minOID = fLyr.query(out_statistics=[{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])

minOBJECTID = minOID.features[0].attributes['MINOID']

# Get Max OBJECTID

maxOID = fLyr.query(out_statistics=[{"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])

maxOBJECTID = maxOID.features[0].attributes['MAXOID']

# If more than 2,000 features, delete in 2000 increments

print("Deleting features")

if (maxOBJECTID - minOBJECTID) > 2000:

x = minOBJECTID

y = x + 1999

while x < maxOBJECTID:

query = f"OBJECTID >= {x} AND OBJECTID <= {y}"

fLyr.delete_features(where=query)

x += 2000

y += 2000

# Else if less than 2,000 features, delete all

else:

print("Deleting features")

fLyr.delete_features(where="1=1")

# If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset

elif hasViews == False and disableSync == True:

if flc.properties.syncEnabled == True:

print("Disabling Sync")

properties = flc.properties.capabilities

updateDict = {"capabilities": "Query", "syncEnabled": False}

flc.manager.update_definition(updateDict)

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Enabling Sync")

updateDict = {"capabilities": properties, "syncEnabled": True}

flc.manager.update_definition(updateDict)

else:

print("Truncating Feature Service")

fLyr.manager.truncate()

# Schema Sync

if updateSchema == True:

# Get feature service fields

print("Get feature service fields")

featureServiceFields = {}

for field in fLyr.manager.properties.fields:

if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:

featureServiceFields[field.name] = field.type

# Get feature class/table fields

print("Get feature class/table fields")

featureClassFields = {}

arcpy.env.workspace = gdb

if hostedTable == True:

for field in arcpy.ListFields(gdbTable):

if field.type != 'OID' and field.type != 'Geometry':

featureClassFields[field.name] = field.type

else:

for field in arcpy.ListFields(fc):

if field.type != 'OID' and field.type != 'Geometry' and 'Shape_' not in field.name:

featureClassFields[field.name] = field.type

minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)

addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

# Delete removed fields

if len(minusSchemaDiff) > 0:

print("Deleting removed fields")

for key in minusSchemaDiff:

print(f"\tDeleting field {key}")

remove_field = {

"name": key,

"type": featureServiceFields[key]

}

update_dict = {"fields": [remove_field]}

fLyr.manager.delete_from_definition(update_dict)

# Create additional fields

fieldTypeDict = {}

fieldTypeDict['Date'] = 'esriFieldTypeDate'

fieldTypeDict['Double'] = 'esriFieldTypeDouble'

fieldTypeDict['Integer'] = 'esriFieldTypeInteger'

fieldTypeDict['String'] = 'esriFieldTypeString'

if len(addSchemaDiff) > 0:

print("Adding additional fields")

for key in addSchemaDiff:

print(f"\tAdding field {key}")

if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]],

"length": [field.length for field in arcpy.ListFields(fc, key)][0]

}

else:

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]]

}

update_dict = {"fields": [new_field]}

fLyr.manager.add_to_definition(update_dict)

# Append features from featureService class/hostedTable

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

try:

arcpy.Delete_management(gdb)

except Exception as e:

print(e)

pass

try:

os.remove(gdb + ".zip")

except Exception as e:

print(e)

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))@JakeSkinner that worked! Thank you so much for your help....I think I may have had some of the layers in the wrong order in my code and it was causing failure.

@JakeSkinner I have been able to get the code to work on a GDB that does not have any attachments or relationship classes. However, when I move onto a different GDB that does have relationship classes, I get the Error Code: 400 which indicates the Layer Truncate isn't supported on an origin layer in a relationship.

When I go thru ArcGIS Pro to Share/Ovewrite the Hosted Feature Layer from the GDB that has attachments and relationships, works out fine. However, the purpose of doing via the Python Script outside of ArcGIS Pro is to be able to run it from a Batch file.

At this time, is there a work around for this, that way I can keep the relationship class intact?

Appreciate your help

@JakeSkinner I have been able to get the python code to run and the scheduled task set up. After they run, AGSOL displays the date updated as the correct date and time, but for some reason all but one of the layers are empty. I am using SDE data as the source. Does the data have to be in a file geodatabase for this to work?

When I run the python script in pro, it shows that it is truncating and appending the feature, then deleting the database. I see it is adding some Shape fields and deleting the global ID field, but it completes the process. I am also seeing a [WinError 32] The process cannot access the file because it is being used by another process: relating to the scratch gdb, but that is showing up at the end of the process after the Deleting temporary FGD & zip file part and not on all of the layers.

Your assistance is greatly appreciated...

@MelissaJohnson I have been testing out Jake's script this week and also had some struggles using SDE data as the source.

In my case, everything looked successful in AGOL until I tried to open the table of the updated layer. Then I would get met with a "Data Error." Other odd behavior, but nothing consistent.

I had a feeling that my error was related to the updateSchema section (I don't think it should be adding shape fields). So, I changed line 136 and 140 to reference the fields from the temporary exported feature class instead of the original SDE fields. I'm still doing some testing, but I think that may have solved my issues. Hopefully it's a similar issue for you! Let me know if you'd like me to post my modified script.

@BrantSollis2 Glad I am not the only one that had issues with SDE layers. I ended up building a model to delete and append which had its own issues I have been working with tech support on, but I think it is working now and will do what I need it to do.

I would like to see your modified script though, as I may need to go back to it later. Also, it may be helpful to someone else here! Thank you so much for responding.

@BrantSollis2 , I would also be interested in seeing your modified script if you are willing to share! When I began using this script, it was performing just fine. I recently started running into the same issues that @MelissaJohnson mentions (also working with an SDE database).

@ashleyf_lcpud @MelissaJohnson @BrantSollis2 I'm having trouble reproducing this behavior. Can anyone share a sample dataset with me in an AGOL group? You can invite my account (jskinner_rats).