- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS for Power Automate

- :

- ArcGIS for Power Automate Blog

- :

- Using ArcGIS Connectors for Power Automate to get ...

Using ArcGIS Connectors for Power Automate to get Custom Geometry in Power BI

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Many customers have shared how they would like to bring their features into Power BI reports but have not been able to - until now.

The recent release of the ArcGIS Connector for Power Automate, users can now reference hosted feature layers and convert them to CSV files that can be added to Power BI reports. Flows can be scheduled or manually triggered to create a CSV file to support your business intelligence needs in Power BI.

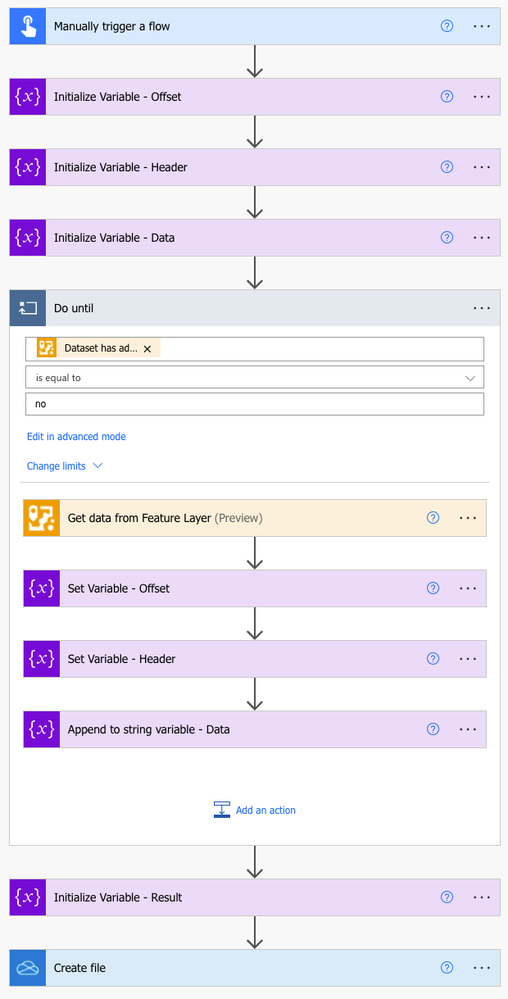

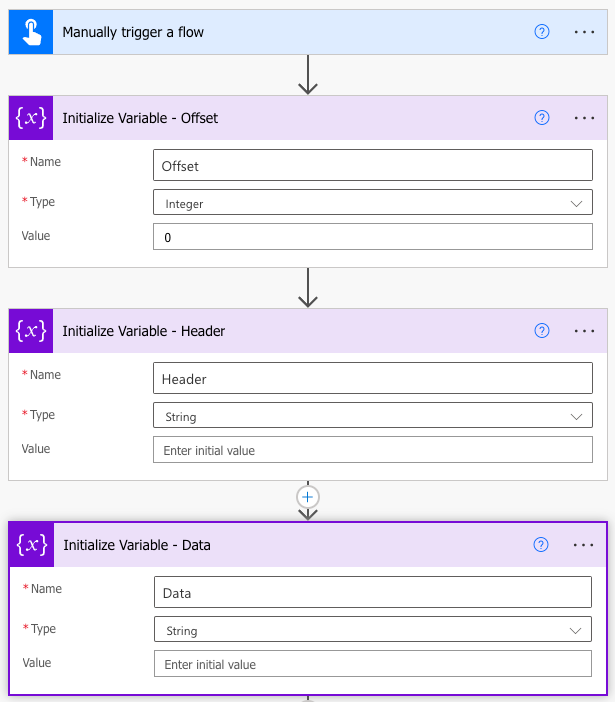

In this example, the flow is manually triggered and immediately creates a collection of variables to support the iteration through all the features in the feature layer.

Using the Initialize Variable action, the flow creates three variables supporting different aspects of the flow. The three variables are:

- Offset - an integer variable used to track which record the flow is working with. It supports the paging through records and keeps track of the count when there are more than 150 records and another call has to be made back to the service.

- Header - a string variable representing the column headers which will be used in writing the CSV file

- Data - a string variable that will hold the geometry and attribute information for the records

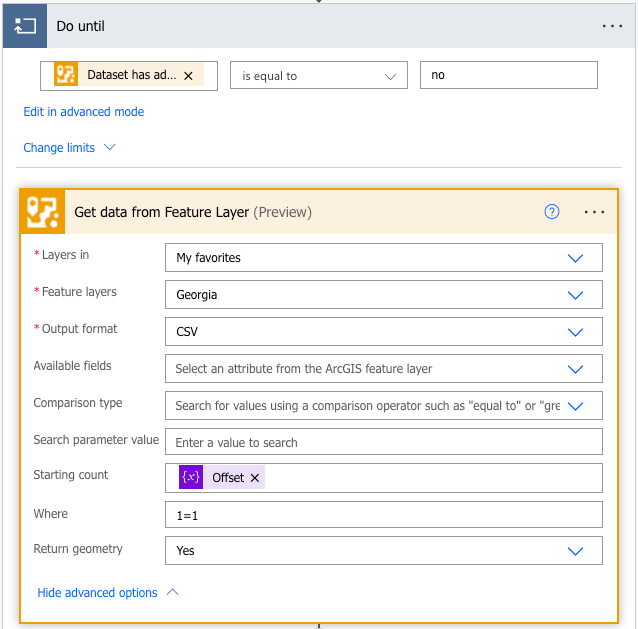

Next step in the flow is to get the data from the feature layer and iterate through all the records. To do this, use the Do until action to wrap the multiple records returned from the Get data from feature layer action.

The Get data from feature layer action needs a few user defined elements:

- where to discover layers in the users ArcGIS Online Organization for the Layers in dialog, options allow for selecting from the user's content, the broader organization, items the user favorited, or from a custom value

- which layer to select from Feature layers input

- how to format the data in the Output format input - in this scenario, CSV

- the Starting count to track where it is within the result set

- what records to return using a Where statement. To get all of the results use '1=1', for more information on forming Where queries, review the ArcGIS REST API documentation

- Set the Return geometry input to 'Yes' to get the geometry to display in the Power BI report

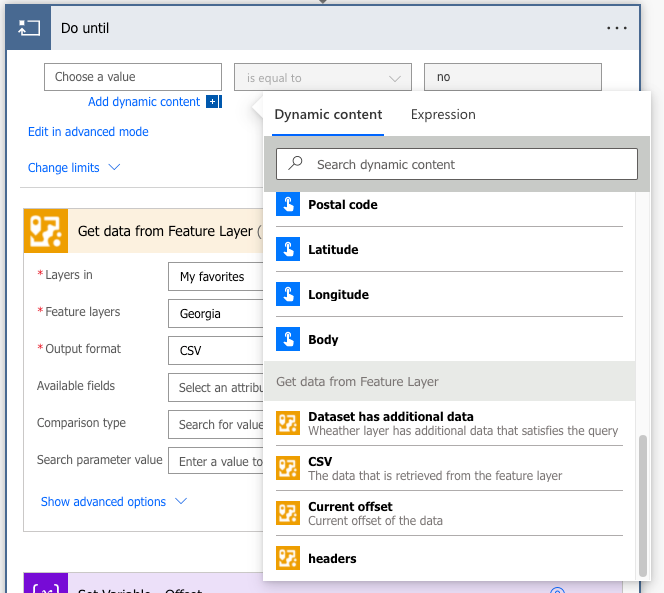

Now that the flow has data to work with, set the limit for the Do until. Click the Add dynamic content link in the Do Until action and select Dataset has additional data and set the conditional modifier to is equal to and define the modifier as no.

We are not done working in the Do until action - we need to set and append the variables defined at the beginning of the flow.

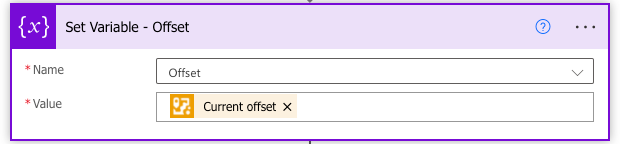

Set the Offset variable to the Current offset of the data

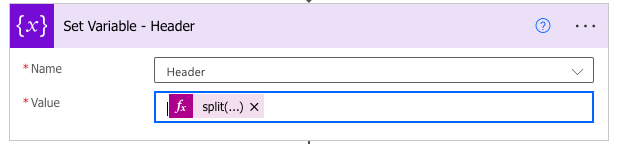

Setting the Header variable requires the flow to split the output from the data using the first row to get the column headers.

The value expression for setting the Header is:

split(outputs('Get_data_from_Feature_Layer')?['body'],decodeUriComponent('%0D%0A'))[0]

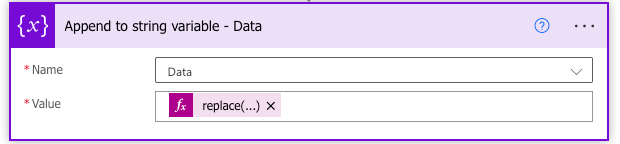

The Data variable is appended using an Append to string action replace function with the output from the dataset. This adds the geometry and attribute for each record which will be written to the resulting CSV file.

The value expression for setting the Data variable is:

replace(outputs('Get_data_from_Feature_Layer')?['body'],variables('Header'),'')

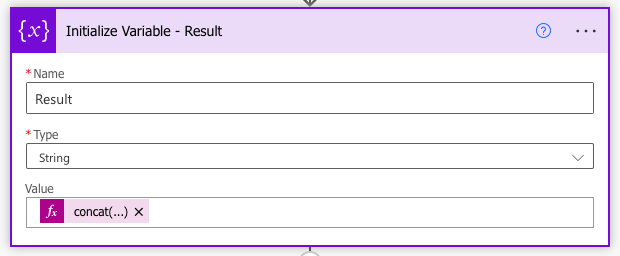

After the Do until action iterates through all the records, the flow will need to concatenate the header and data a write to to a file. To concatenate the Header and Data, the flow creates a new Result variable using the Initialize variable action and sets the value using a concatenation expression:

concat(variables('Header'),variables('Data'))

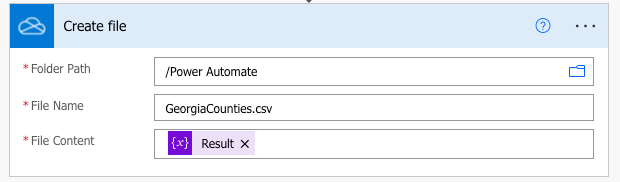

The final step is to write the Result to a CSV file using the Create file action.

The Create file action defines:

- the folder path where the file will be saved

- the name of the file to be saved

- the file content, which is the Result variable that concatenated the header and data

In the end, the flow looks like:

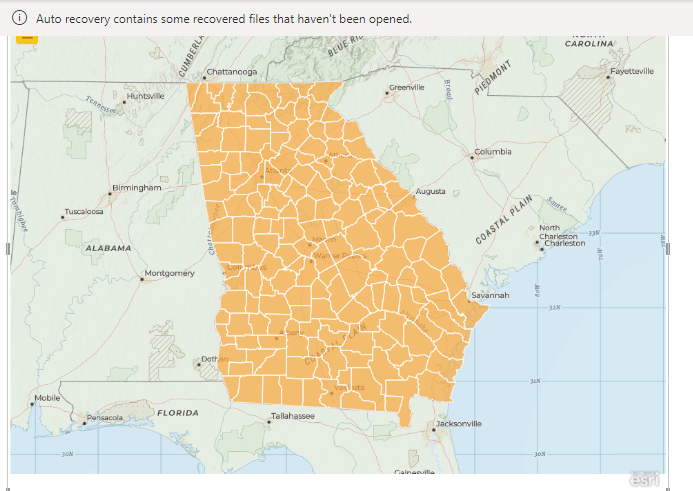

...and the visualization in Power BI looks like:

Tips & Lessons Learned

- It is always a best practice to name the actions, but depending on the action name, the reference in the downstream actions could change.

- There is a 32,767 character limit in Excel and Power BI fields. In testing, feature layers with large or complex geometry have exceeded the maximum number of characters and forced the record to overflow into a new line. If you notice missing features in the map, check the CSV file in Excel and see if it created a new row. If you do encounter this issue, you can go into ArcGIS Pro and generalize the feature class to reduce the number of vertices and republish the service

Thanks in advance and please let me know how this works for you or if you run into any issues.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.