
Delete features and error 504 "timed out"


I'm trying to delete all features from a very large dataset in AGOL (ArcGIS Online), and the request times out.

The dataset is a polygon feature class.

I can't use truncate because the feature class is sync-enabled.

Couple of questions:

1. What is the maximum number of records the ArcGIS API for Python can handle in a single delete or append call? Is it 10,000 records?

2. Any Python tips and tricks to make this work?

I'm new to Python, so please provide scripts with comments 🙂

```python
from arcgis.gis import GIS

# Sign in to ArcGIS Online (replace the placeholders with your own details)
gis = GIS("https://xx.arcgis.com", username="xx", password="xx")

# Fetch the hosted feature layer item directly by its item ID
feature_layer_item = gis.content.get("7a4b389c86f74ab8bd8da440e1a87db4")

# First (and only) layer in the feature service
flayer = feature_layer_item.layers[0]

# Delete every feature in a single request; on a very large layer this times out (HTTP 504)
flayer.delete_features(where="OBJECTID >= 0")
```
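One way to avoid a single huge request is to split the `OBJECTID >= 0` filter into consecutive OBJECTID ranges and call `delete_features` once per range. This is a minimal sketch, assuming the ObjectID field is named `OBJECTID` and that `layer` is a `FeatureLayer` such as `flayer` above; `oid_range_clauses` and `delete_by_ranges` are hypothetical helper names, and the step of 2,000 is only a starting point to tune. Gaps in the ObjectID sequence are harmless: a range that contains fewer IDs simply deletes fewer features.

```python
def oid_range_clauses(min_oid, max_oid, step=2000, field="OBJECTID"):
    """Build WHERE clauses that together cover min_oid..max_oid (inclusive)."""
    clauses = []
    start = min_oid
    while start <= max_oid:
        end = min(start + step - 1, max_oid)
        clauses.append(f"{field} >= {start} AND {field} <= {end}")
        start = end + 1
    return clauses


def delete_by_ranges(layer, step=2000):
    """Delete all features from a FeatureLayer one OBJECTID range at a time.

    Returns the number of delete requests sent.
    """
    # Ask the service for ObjectIDs only (no geometry or attributes)
    ids = layer.query(where="1=1", return_ids_only=True)["objectIds"]
    if not ids:  # None or an empty list means there is nothing to delete
        return 0
    clauses = oid_range_clauses(min(ids), max(ids), step)
    for i, clause in enumerate(clauses, start=1):
        # Each request touches at most `step` features, so it is less likely to time out
        layer.delete_features(where=clause)
        print(f"Batch {i}/{len(clauses)}: {clause}")
    return len(clauses)
```

Usage with the script above would be `delete_by_ranges(flayer)` in place of the single `delete_features` call.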


See the solved thread "ERROR - HTTP Error 504: Gateway Time-out" on Esri Community, listed among the "Related" posts beside your post.



Right, so I need to add `asynchronous=False`.

I'm a newbie. Where in the script do I add this?


Hi @NickShannon2,

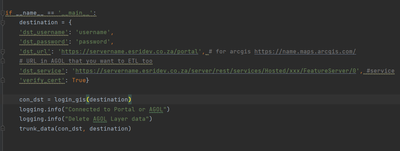

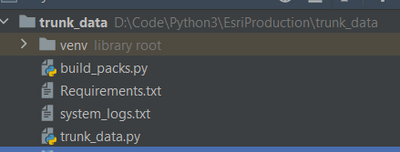

I have added a script to delete data. I usually break the deletes into packs of 2,000 records, because how long each request takes depends heavily on network performance. Just unzip the scripts into a Python project and install the ArcGIS API for Python (`pip install arcgis`). The script you need to fill in is `trunk_data.py`.

Then, on line 42, fill in your server details and point it at the service.

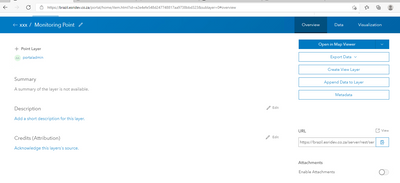
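The "packs of 2,000" idea can also be written with ObjectID lists instead of WHERE clauses: fetch every ObjectID once, then pass them to `delete_features` in comma-separated batches through its `deletes` parameter. This is a sketch under assumptions, not the attached `trunk_data.py`: `batched` and `delete_in_batches` are hypothetical names, and `layer` is assumed to be an `arcgis.features.FeatureLayer`. It also tallies the `deleteResults` the service returns, so silently failed deletes show up in the counts.

```python
def batched(items, size=2000):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def delete_in_batches(layer, size=2000):
    """Delete every feature in `layer`, `size` ObjectIDs per request.

    Returns (number_deleted, number_failed) from the service's deleteResults.
    """
    # `objectIds` can be None when the layer is empty, hence the `or []`
    ids = layer.query(where="1=1", return_ids_only=True)["objectIds"] or []
    deleted = failed = 0
    for chunk in batched(ids, size):
        # `deletes` takes a comma-separated string of ObjectIDs
        result = layer.delete_features(deletes=",".join(str(oid) for oid in chunk))
        for item in result.get("deleteResults", []):
            if item.get("success"):
                deleted += 1
            else:
                failed += 1
    return deleted, failed
```

A non-zero failure count after a run is a hint that some batches need to be retried rather than that the layer is empty.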

In AGOL, you can find the service URL on the item's Overview page, under URL. Add `/0` to the end for the first layer (the only one, if the service has a single layer), then `/1`, `/2`, and so on for the following layers.
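To connect straight to a layer by URL instead of going through the item, append the layer index to the service URL and wrap it in a `FeatureLayer`. A minimal sketch: `layer_url` and `open_layer` are hypothetical helpers, and the URLs in the usage note are made up. Layer IDs usually run 0, 1, 2, but it is worth checking them on the service's REST page.

```python
def layer_url(service_url, index=0):
    """Append a layer index (/0, /1, ...) to a FeatureServer URL."""
    return f"{service_url.rstrip('/')}/{index}"


def open_layer(gis, service_url, index=0):
    """Return an arcgis FeatureLayer for one layer of a hosted feature service."""
    # Imported here so layer_url can be used without the arcgis package installed
    from arcgis.features import FeatureLayer
    return FeatureLayer(layer_url(service_url, index), gis=gis)
```

For example, `open_layer(gis, "https://services.arcgis.com/<org>/arcgis/rest/services/<name>/FeatureServer")` would open layer 0 of that (placeholder) service.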


Thanks @HenryLindemann, this script is a big improvement over my original one.

However, although the script no longer times out with a warning message, it does not delete all records. For example, my dataset has 600k rows; the trunk_data script deletes 450k of them, leaving 150k. I have to click Run a few times to delete all records.

Any ideas how to fix this?

I'm running the script in Jupyter Notebooks.
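Since a few extra runs eventually remove everything, one workaround is to let the script do those extra runs itself: re-check the remaining count after each pass and keep deleting until the layer is empty, with a cap on the number of passes so it cannot loop forever. This sketch does not diagnose why some batches are skipped; failed or timed-out requests are one plausible cause, which is why it logs batch errors and carries on instead of stopping. `delete_until_empty` is a hypothetical helper, `layer` is assumed to be an `arcgis.features.FeatureLayer`, and the batch size, pass limit and pause are assumptions to tune.

```python
import time


def delete_until_empty(layer, size=2000, max_passes=5, pause=5):
    """Repeat batched deletes until the layer reports zero features.

    Returns the number of passes that deleted data. Raises RuntimeError if
    features remain after `max_passes` passes.
    """
    for pass_no in range(1, max_passes + 1):
        remaining = layer.query(where="1=1", return_count_only=True)
        print(f"Pass {pass_no}: {remaining} features remaining")
        if remaining == 0:
            return pass_no - 1
        # Re-fetch the ObjectIDs that are still present on every pass
        ids = layer.query(where="1=1", return_ids_only=True)["objectIds"] or []
        for start in range(0, len(ids), size):
            chunk = ids[start:start + size]
            try:
                layer.delete_features(deletes=",".join(map(str, chunk)))
            except Exception as exc:  # e.g. a 504 on one batch: log it and move on
                print(f"Batch starting at index {start} failed: {exc}")
        time.sleep(pause)  # give the service a moment before re-checking the count
    remaining = layer.query(where="1=1", return_count_only=True)
    if remaining:
        raise RuntimeError(f"{remaining} features still present after {max_passes} passes")
    return max_passes
```

In a notebook this would be a single call such as `delete_until_empty(flayer)`, replacing the repeated manual runs.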