Deep Learning error using the 2nd GPU. Bug?

Hi everyone.

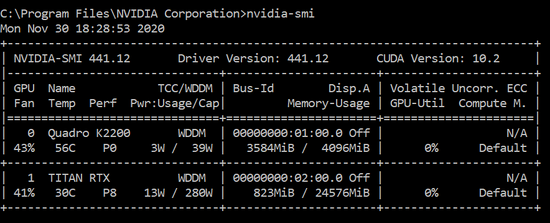

I have 2 GPUs in my system: #0, configured for display, and #1, a high-end NVIDIA Titan RTX for AI processing.

I am running ArcGIS Pro 2.6 with the Deep Learning frameworks installed using the installer provided by Esri here: https://github.com/Esri/deep-learning-frameworks/

I can train a model and detect objects using GPU #0, so it works with the Windows default GPU. However, when I ask Pro to detect objects using GPU #1, it says:

ERROR 999999: Something unexpected caused the tool to fail. Contact Esri Technical Support (http://esriurl.com/support) to Report a Bug, and refer to the error help for potential solutions or workarounds.

Parallel processing job timed out [Failed to generate table]

Failed to execute (DetectObjectsUsingDeepLearning).

I have my GPUs configured as follows:

As you can see, both are in WDDM mode with ECC disabled. I have also tried setting the #1 Titan RTX to TCC mode instead of WDDM:

With this last configuration I get the same error.

Any ideas about what I should check in the configuration of the second GPU? Is there currently any limitation in the software on processing with GPU instance #1 or above?

Can anyone confirm that there are no limitations on running processes on other GPUs? Or do I have a misconfiguration on my side?

Any help will be appreciated.

Best regards.

Accepted Solutions

OK, answering my own question:

The problem seems to appear when you specify the GPU number in Pro and you have also set the environment variable CUDA_VISIBLE_DEVICES.

Some time ago I used the Viewshed2 tool, which uses CUDA processing to speed up visibility calculations with the full geodetic solution. To harness the power of one of my GPUs, I set the environment variable CUDA_VISIBLE_DEVICES=0.

This environment variable seems to override whichever GPU you select in the Pro GUI. With CUDA_VISIBLE_DEVICES=0, presumably only one GPU is exposed to CUDA, so asking Pro for GPU #1 points at a device that does not exist for the process. If you have several GPUs in your system, always leave the GPU number in Pro at 0 and use the CUDA_VISIBLE_DEVICES variable to point to the GPU that you want to use. At least, this solved the issue for me.
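To make the remapping concrete, here is a minimal Python sketch of how CUDA_VISIBLE_DEVICES renumbers devices for a process. It is only a simplified model, not Esri's or NVIDIA's code: the function names `visible_devices` and `resolve` are hypothetical, it handles integer indices only (not GPU UUIDs), and it assumes the documented CUDA behaviour that listed devices are renumbered from 0 and that enumeration stops at the first invalid entry.

```python
def visible_devices(physical_count, env_value=None):
    """Return the physical GPU indices a CUDA process would see, in logical order.

    env_value is the content of CUDA_VISIBLE_DEVICES; None means "not set".
    Simplified model: integer indices only, no UUID support.
    """
    if env_value is None:
        # Variable not set: every physical GPU is visible, numbering unchanged.
        return list(range(physical_count))
    visible = []
    for token in env_value.split(","):
        token = token.strip()
        if not token.isdecimal() or int(token) >= physical_count:
            break  # entries after the first invalid one are ignored
        visible.append(int(token))
    return visible


def resolve(gpu_id, physical_count, env_value=None):
    """Map the GPU number requested in the tool to a physical GPU, or None."""
    devices = visible_devices(physical_count, env_value)
    if 0 <= gpu_id < len(devices):
        return devices[gpu_id]
    return None  # the requested GPU does not exist for this process


# Failing setup from this thread: variable set to 0, GPU #1 requested in Pro.
print(resolve(1, physical_count=2, env_value="0"))  # None
# Working setup: variable points at the Titan RTX, GPU number in Pro left at 0.
print(resolve(0, physical_count=2, env_value="1"))  # 1
```

In this model, once the variable is set, the only GPU numbers that make sense in Pro are the positions within the list, which is why 0 is the value to use when a single device is listed.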

Prior to this, I double-checked that my CUDA drivers were properly installed, that the bandwidth to my GPU was healthy, and so on. It is rather convoluted, but it works.
