How to Improve performance of Model Builder?

Dear Friends,

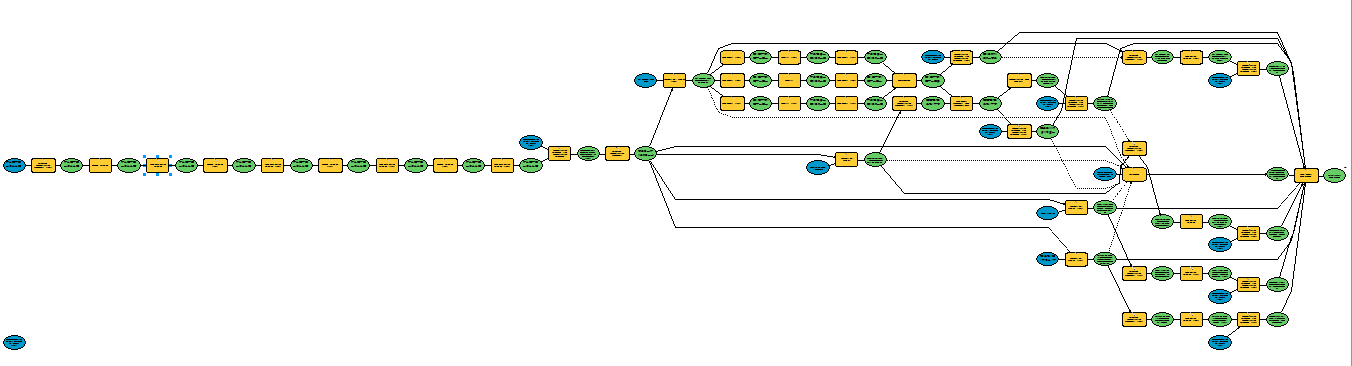

I have created models in ModelBuilder. Each one basically does the following:

- Field Calculation to prepare relevant data.

- Spatial Join to compute Statistics.

- Applies Spatial filters.

- Create Summary data.

Please find attached a screenshot of the model and a sample toolbox.

All these steps together take around 4 hours.

If I write the same model in Python and run it, is it likely to take less time?

Or is there any other way I can improve its performance?

I have 7 such models.

Your help is appreciated.

--

Thanks

Ravindra Singh

Accepted Solutions

I haven't looked in any detail (or at all really...) at your model, but there are many opportunities to improve processing rates by using Python / arcpy. A speed-up of anywhere between 10x and 100x is achievable.

Use in-memory workspaces, arcpy.da cursors, and Python dictionaries to manipulate data, run from a Python IDE, etc.

It all depends on what your purpose is. If I just want to do some quick manipulation which I might want to run a couple of times to get the workflow right, then ModelBuilder is the way. For something faster and more controllable, where I can get exactly what I want, I write Python code.
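As a rough illustration of the dictionary-plus-cursor idea, here is a minimal sketch. The table, feature class, and field names are all hypothetical placeholders, and the `arcpy` import is deferred so the pure-Python helper can be tried without an ArcGIS install. It reads a source table once into a dictionary, then writes the matched values to the target in a single pass.

```python
def build_lookup(rows):
    """Turn (key, value) rows into a dict; a later duplicate key overwrites an earlier one."""
    return {key: value for key, value in rows}


def transfer_values(source_table, target_fc, key_field, source_field, target_field):
    """Copy source_field from source_table into target_field of target_fc, matched on key_field.

    Every argument is a placeholder for your own data. arcpy is imported
    here so that build_lookup stays usable without ArcGIS.
    """
    import arcpy

    # One read pass over the source: the cursor yields (key, value) tuples.
    with arcpy.da.SearchCursor(source_table, [key_field, source_field]) as cursor:
        lookup = build_lookup(cursor)

    # One write pass over the target; rows without a match are left untouched.
    with arcpy.da.UpdateCursor(target_fc, [key_field, target_field]) as cursor:
        for key, _ in cursor:
            if key in lookup:
                cursor.updateRow([key, lookup[key]])
```

Intermediate outputs of geoprocessing tools can similarly be kept off disk by writing them to the `in_memory` workspace (or `memory` in ArcGIS Pro) instead of a geodatabase path.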

To give a small example of the suggestions by Neil Ayres: in your model, as you already indicated, you perform 4 field calculations. Each field calculation loops through the entire feature class. With arcpy.da.UpdateCursor, all four can be done in a single loop.
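A minimal sketch of that single-loop approach follows. The field names and the four formulas are invented stand-ins, since the actual calculations in the model are not shown in this thread; `arcpy` is imported inside the function so the calculation helper can be tested on its own.

```python
def calculate_row(length_m, width_m):
    """Derive four values from two inputs (hypothetical stand-ins for the four field calculations)."""
    area = length_m * width_m
    perimeter = 2 * (length_m + width_m)
    ratio = length_m / width_m if width_m else None  # avoid division by zero
    size_class = "large" if area >= 1000 else "small"
    return [area, perimeter, ratio, size_class]


def update_feature_class(fc):
    """Fill all four derived fields in one pass over fc (field names are assumptions)."""
    import arcpy

    fields = ["LENGTH_M", "WIDTH_M", "AREA", "PERIMETER", "RATIO", "SIZE_CLASS"]
    with arcpy.da.UpdateCursor(fc, fields) as cursor:
        for row in cursor:
            length_m, width_m = row[0], row[1]
            if length_m is None or width_m is None:
                continue  # leave rows with null inputs unchanged
            cursor.updateRow([length_m, width_m] + calculate_row(length_m, width_m))
```

Keeping the arithmetic in a plain function means it can be checked against a few known rows before it is run over the whole feature class.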

It might be tempting to export the model to Python, but in some (read "most") cases it is more efficient to start programming from scratch than to correct the spaghetti code that results from the export...

I've had a look at your model. One thing that would improve performance is ensuring all your datasets have spatial indexes, but as all your data are file geodatabase feature classes, you already have this.

Nothing particularly leaped out at me as a performance-blocking step, which leads me to ask about your data. You do not actually say anything about it. Is it millions of polygons, or just a few thousand that are horribly multi-part? In one part of your model you are selecting and sorting, then sub-selecting multiple times; if you are doing this on a table with 10,000,000 rows, then the time taken is not surprising.
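One way to answer those questions about the data is to count the features and see how many are multi-part. The following is only a sketch under assumptions: the feature class path is whatever you pass in, and `arcpy` is imported lazily so the summary helper runs without ArcGIS.

```python
def summarize_part_counts(part_counts):
    """Summarize an iterable of per-feature part counts."""
    counts = list(part_counts)
    return {
        "features": len(counts),
        "multipart_features": sum(1 for c in counts if c > 1),
        "max_parts": max(counts) if counts else 0,
    }


def profile_feature_class(fc):
    """Report feature and multi-part counts for fc; features with null geometry are skipped."""
    import arcpy

    with arcpy.da.SearchCursor(fc, ["SHAPE@"]) as cursor:
        # The generator is fully consumed inside the with block.
        return summarize_part_counts(
            row[0].partCount for row in cursor if row[0] is not None
        )
```

Posting the resulting numbers alongside the model makes it much easier for others to judge whether a 4-hour run is reasonable.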

Whilst Neil Ayres's suggestion is a good route for improving performance, you'll be taking your work out of the ModelBuilder environment, which is something you may not want to do.

You need to give more information about your data and its context.

Thank you, guys, for the prompt response.

Regarding my data: at the moment I am using a file geodatabase, but ultimately I will run this model against data in ArcSDE with an Oracle database.

So I will write this model in Python and then compare the performance of the two versions.

Thank you all for your expert input.

--

Regards,

Ravindra Singh