area solar radiation performance

I am working on an Intel Core i9-13900K machine with 24 cores, 128 GB RAM and one of the fastest NVMe SSDs currently available, and I am about to run some experiments with a test region of about 4000 x 4500 cells, 10 x 10 m each.

The expected result is set to "Whole year"; all other settings are left at their defaults except for "Diffuse proportion" (changed from 0.3 to 0.32) and "Transmittivity" (changed from 0.5 to 0.48).

The calculation takes quite a long time, and the most annoying thing is that Task Manager shows just one single core doing anything at all, at an overall CPU load of 7 to 9% and a clock rate of about 1.5 to 5.2 GHz.

On top of that astonishingly poor performance, an older machine with lower specs in every dimension outperforms the modern machine by about 30% (!).

Yes, the documentation warns you about that in the first paragraph under Usage:

Area Solar Radiation (Spatial Analyst)—ArcGIS Pro | Documentation

But did you see the Environment settings that might improve the performance?

Analysis environments and Spatial Analyst—ArcGIS Pro | Documentation

What do you have yours set to?

... sort of retired...

Thanks. Of course I read the whole documentation in advance, and I have been experimenting with even smaller rasters (smaller than 4000 x 4000 cells), especially with the environment parameters for parallel processing, without any significant effect on performance. The first issue is still the overall performance on Intel's flagship processor, but the second is even more concerning: the less advanced machine is on par, and both sit at about 7% workload, which means more or less just one core is working on the calculation. That concept seems pretty outdated to me ... but I am still searching for workarounds and settings to bypass these limitations.
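As a rough sanity check on the "one core" reading: the i9-13900K exposes 32 logical processors (24 cores, 8 of which run two threads each), so the overall percentage in Task Manager can be converted into an equivalent number of fully busy logical processors. A minimal Python sketch follows; the function name is made up for illustration, and Task Manager's percentage is only an approximation of actual busy time.

```python
def busy_logical_processors(overall_percent, logical_processors):
    """Convert an overall CPU percentage into the equivalent
    number of fully busy logical processors."""
    return overall_percent / 100 * logical_processors


# i9-13900K: 8 P-cores with 2 threads each + 16 E-cores with 1 thread each
LOGICAL_PROCESSORS = 32

for pct in (7, 9):
    busy = busy_logical_processors(pct, LOGICAL_PROCESSORS)
    print(f"{pct}% overall is roughly {busy:.1f} busy logical processors")
```

Two to three busy logical processors out of 32 is consistent with a single-threaded tool plus normal background load.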

I moved the thread to ArcGIS Spatial Analyst Questions to see if anyone from that team knows of any specific settings that might be causing the issue.

I assume that you have ruled out any dataset-specific issues that would affect calculation speed.

... sort of retired...

Thank you @robertkalasek for your post.

The current solar radiation analysis tools are not multithreaded and do not honor the parallel processing environment setting. So yes, they have known limitations.

The larger the raster (or the higher the input resolution), the longer the processing time. The tools are recommended for smaller landscape scales (less than one degree).

We are actively working on new versions of the Solar tools, hopefully for the next release of ArcGIS Pro. They will include support for larger processing areas, along with performance and algorithm improvements, multithreading and GPU support.

We hope to have these new tools in your hands as soon as possible. In the meantime, keep the communication open and let us know about any specific questions or issues you have.

Kind regards,

-Ryan DeBruyn

It's great you are working on it. Please keep us posted!

Same problem here. We did some calculations back in 2015 for a relatively large area. We worked by iterating an overlapping fishnet of our AOI and it took us about 3 months to process the whole thing.

Now we are doing it again using a new DSM. Our machines are way better now than the ones we used in 2015. We are making first estimates of how long it will take us, and it is not much less than 3 months. It's true that we have more resolution now (our DSM has 4 times the resolution). But still, one would expect much more improvement from way better CPUs with more threads and cores, more RAM, the switch from 32-bit to 64-bit, etc.

And yes, you take a look at Task Manager and it doesn't seem to be doing much; it probably uses 5% of resources.

This would be ridiculous in gaming. There have been a lot of improvements in the ESRI ecosystem but geoprocessing power has never been a top priority.
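For anyone wanting to try the overlapping-fishnet approach mentioned above, here is a minimal Python sketch of how the tile windows could be generated. It is not the code we used: the function name, the tile size of 1000 cells and the overlap of 100 cells are arbitrary assumptions for illustration, and the 4000 x 4500 grid is just the test raster size from the original question. The overlap is there because each cell's result depends on the surrounding terrain, so tiles cut without a margin can show edge effects.

```python
def overlapping_tiles(n_cols, n_rows, tile_size, overlap):
    """Yield (col_min, row_min, col_max, row_max) cell windows (max exclusive).

    Each window is a tile_size x tile_size core, padded by `overlap`
    cells on every side and clipped to the raster bounds.
    """
    for row0 in range(0, n_rows, tile_size):
        for col0 in range(0, n_cols, tile_size):
            yield (
                max(col0 - overlap, 0),
                max(row0 - overlap, 0),
                min(col0 + tile_size + overlap, n_cols),
                min(row0 + tile_size + overlap, n_rows),
            )


# Hypothetical values: 4000 x 4500 cells, 1000-cell tiles, 100-cell margin
tiles = list(overlapping_tiles(4000, 4500, tile_size=1000, overlap=100))
print(len(tiles), "tiles; first:", tiles[0], "last:", tiles[-1])
```

Each window would then be clipped from the DSM, processed on its own, and trimmed back to its core before mosaicking. How much overlap is enough depends on the terrain and is not something this sketch decides.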

I did 800 square miles of Salt Lake County, Utah back in 2013 on 1-meter LiDAR. Yes, it's a heavy lift. I ran it for every month of the year for both Direct Hours and kWh/m², so 24 individual runs. I was on 32-bit back then, with 100 GB RAM and 32 cores. That took a month running maxed out!

You CAN run "embarrassingly parallel", but you need a Spatial Analyst license for each core you run on. We have a site license, so I'm not limited by SA licenses. "Embarrassingly parallel" means you write a script for each run and kick them off separately. Have the results written to separate file locations and merge them afterward. Done.
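A rough Python sketch of that pattern, not my actual scripts: one job per month and output type (12 x 2 = 24 runs), each started as its own process and writing to its own folder. The worker script name `solar_worker.py`, the folder naming and the `max_parallel` limit are all hypothetical; the worker would hold the actual solar radiation call and is not shown here.

```python
import subprocess
import sys
from pathlib import Path


def build_jobs(out_root, months=range(1, 13), outputs=("direct_hours", "kwh_m2")):
    """Return one job per (month, output type), each with its own output folder."""
    jobs = []
    for month in months:
        for output in outputs:
            out_dir = Path(out_root) / f"{output}_m{month:02d}"
            jobs.append({"month": month, "output": output, "out_dir": out_dir})
    return jobs


def launch(jobs, worker_script, max_parallel=4):
    """Run every job as a separate Python process, at most max_parallel at once.

    Returns the list of jobs whose process exited with a non-zero code.
    """
    pending = list(jobs)
    running = []
    failed = []
    while pending or running:
        # Top up the pool of running processes
        while pending and len(running) < max_parallel:
            job = pending.pop(0)
            job["out_dir"].mkdir(parents=True, exist_ok=True)
            cmd = [sys.executable, str(worker_script),
                   str(job["month"]), job["output"], str(job["out_dir"])]
            running.append((job, subprocess.Popen(cmd)))
        # Wait for the oldest process before starting more
        job, proc = running.pop(0)
        if proc.wait() != 0:
            failed.append(job)
    return failed


jobs = build_jobs("solar_out")
print(len(jobs), "independent runs")
# failed = launch(jobs, "solar_worker.py", max_parallel=4)  # hypothetical worker
```

Keep `max_parallel` at or below the number of Spatial Analyst licenses you can check out, and merge the separate outputs once every job has finished.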

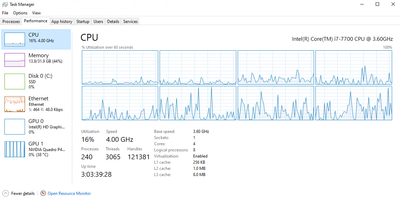

I'm having better luck with the new Pro 3.2 raster solar radiation tool that can use GPU and parallel processing - I just don't understand the tool parameters and outputs as much as the old one. The .crf output format seems like it has some nice possibilities, especially if I can publish it as an image service.

CPU:

GPU humming along nicely too.

@GBacon ... well, actually I am not really impressed by an overall 16% utilization indicator. It would be interesting to know what the processing-time benefits are. Have you tested without parallel processing?