Train Deep Learning Data 100% CPU usage 0% GPU

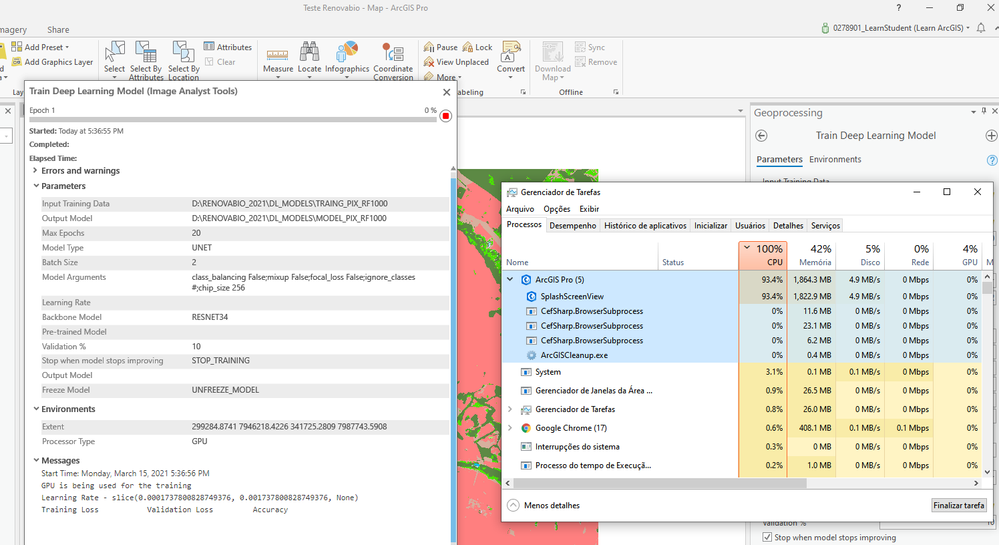

Although I configured the Processor Type as GPU, and the sample export was performed on the GPU, the GPU is not being used during training. Is this the expected behavior for this type of operation? See image.

Did you see associated threads?

Solved: Workstation specifications, for deep learning - Esri Community

and

... sort of retired...

Hi @GeoprocessamentoCerradinho,

Could you tell us which specific GPU (make and model) is in use for the training process? (Device Manager > Display Adapters OR Task Manager > Performance > GPU)

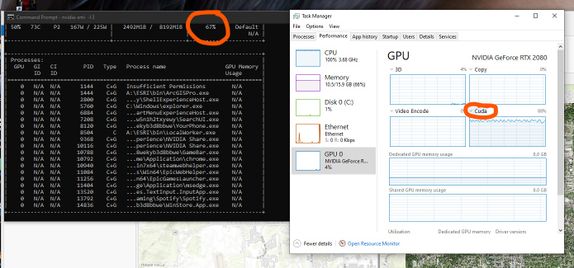

1. In Task Manager, select the GPU that you expect to be used for training. You will see several graphs, such as 3D, Copy, and Video Encode. At the top-left of one of those graphs there is a drop-down arrow; change its value to 'CUDA'.

2. In a Command Prompt, type 'nvidia-smi -l 3'. This shows detailed GPU usage during training, refreshed every 3 seconds.
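If a screenshot is awkward to capture, the nvidia-smi check in step 2 can also be scripted. The following is only a minimal sketch: it assumes `nvidia-smi` is on the PATH and that the driver reports numeric values for every queried field (some cards report `[N/A]` instead, which this parser does not handle). The function names `parse_gpu_csv` and `query_gpus` are made up for this illustration.

```python
import subprocess

# Standard nvidia-smi query properties; the output order follows this list.
QUERY = "index,name,utilization.gpu,memory.used,memory.total"


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts.

    Assumes one GPU per line, no commas in the GPU name, and numeric values.
    """
    rows = []
    for line in text.strip().splitlines():
        index, name, util, used, total = [part.strip() for part in line.split(",")]
        rows.append({
            "index": int(index),
            "name": name,
            "utilization_pct": int(util),
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return rows


def query_gpus():
    """Run nvidia-smi once and return one dict per GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` every few seconds while training runs gives roughly the same utilization and memory figures that 'nvidia-smi -l 3' prints.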

While the training process is running, could you capture both and post a screenshot here?

It should look like this:

Thanks for the reply. I believe the training result is very wrong:

GPU is being used for the training

Learning Rate - slice(0.0001737800828749376, 0.001737800828749376, None)

| Training Loss | Validation Loss | Accuracy |
|---|---|---|
| 0.0 | 2.8250971440685335e-12 | 1.0 |
| 0.0 | 6.1832314442178404e-12 | 1.0 |
| 1.0186340659856796e-10 | 4.119045035611002e-11 | 1.0 |
| 9.458744898438454e-11 | 1.7390337433975667e-11 | 1.0 |
| 2.9103830456733704e-11 | 3.03431411941002e-11 | 1.0 |
| 3.637978807091713e-11 | 6.275180375325817e-11 | 1.0 |
| 9.458744898438454e-11 | 6.304497202069825e-11 | 1.0 |

{'accuracy': '1.0000e+00'}

| | NoData | FNM | Ref | FM | Ap | GSec | Ac | A | Res |
|---|---|---|---|---|---|---|---|---|---|
| precision | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| recall | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| f1 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

Succeeded at Monday, March 15, 2021 7:59:11 PM (Elapsed Time: 2 hours 22 minutes 15 seconds)
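One possible reading of these numbers, offered as an assumption rather than something confirmed in this thread: an accuracy of 1.0 alongside zero precision and recall for every class except NoData is the pattern you would get if the validation pixels were all labeled NoData. The sketch below reproduces that pattern on made-up labels. The helper names are hypothetical, and reporting an undefined precision or recall as 0.0 is a convention chosen here, not necessarily the one the tool uses.

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where prediction equals the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true, y_pred, classes):
    """Return {class: (precision, recall)}; undefined ratios are reported as 0.0."""
    metrics = {}
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        metrics[c] = (precision, recall)
    return metrics


# Made-up data: every pixel is labeled NoData and predicted as NoData.
classes = ["NoData", "FNM", "Ref"]
y_true = ["NoData"] * 10
y_pred = ["NoData"] * 10

print(accuracy(y_true, y_pred))
print(per_class_metrics(y_true, y_pred, classes))
```

If that is what happened, the exported training samples would be the first thing to inspect, since the model never saw any of the other classes.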

Hi @GeoprocessamentoCerradinho,

Based on your response on the other thread, I am guessing you were able to get the model working.

Were you able to follow the steps above to confirm that the GPU was indeed being used while training the deep learning model?

Yes. I realized that although the CPU is being used to the maximum, the GPU is used at about 50% (CUDA value in Task Manager). I don't know if this is the expected behavior, but I think so.

@GeoprocessamentoCerradinho Yup! That is the expected behavior. As long as CUDA is in use for training and nvidia-smi also shows the GPU in use, we are good to go. If you have more resources left over, you may be able to bump up your batch size.
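A rough way to judge whether resources are "left over" is to look at how much GPU memory is still free while training runs. This is just a sketch with made-up numbers; the function name is hypothetical, and how much a larger batch actually costs depends on the model and chip size, so treat the result as a hint rather than a rule.

```python
def free_memory_fraction(used_mib, total_mib):
    """Share of GPU memory still unused, from the used/total values nvidia-smi reports."""
    if total_mib <= 0:
        raise ValueError("total_mib must be positive")
    return (total_mib - used_mib) / total_mib


# Made-up example: 2048 MiB in use on an 8192 MiB card.
print(free_memory_fraction(2048, 8192))
```

If a large share of memory stays free throughout training, trying a larger batch size in the Train Deep Learning Model tool and watching memory again is a reasonable next experiment.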