- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS GeoEvent Server

- :

- ArcGIS GeoEvent Server Questions

- :

- Re: Geoevent Erroneously Imports Date Field

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I have a comparatively simple Geoevent Service running. The 'watch a folder for new CSV files' connector is bring in data from a Tab-delimited text file to be written in a map service/feature service in a spatiotemporal data store through 'Update a Feature in a Spatiotemporal Big Data Store' output connector, no processor so far. The problem is, some of the date fields are automatically being assigned a date of "1/18/1970, 10:17" although the records are from 2020. The text file is an output from a SQL database. I am wondering if I am missing some simple steps and hence getting a default time set by the system.

Solved! Go to Solution.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hello Shital,

There is no problem including multiple Field Calculator processors in a single GeoEvent Service. You sometimes have to "chain" a series of Field Calculators together to compute intermediate values and then perform calculations on those intermediate values. An example of this can be seen in the GeoNet thread How to switch positions on coordinates

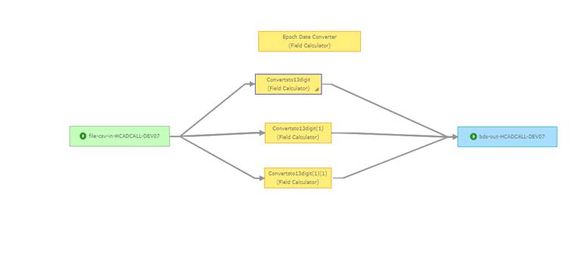

If you know that data you are receiving is in epoch seconds, you can scale the received Long integer value by multiplying by 1000 and write the computed result to a field whose type is Date. For example, illustrated below is a GeoEvent Service whose input receives a single long integer value. The GeoEvent Definition used by the input has two additional fields, another Long and a Date, who's values are adapted as null when no values are provided in the received data structure. The first Field Calculator multiplies dt_seconds (a Long) by 1000 and writes the result into a field dt_long (also a Long). The second Field Calculator uses the exact same expression but writes the result into a field dt_date which forces the Field Calculator to perform an implicit conversion from long integer to Date.

I've chosen to show the input as JSON received over REST and the output as delimited text as that makes it clear what the data values are.

Input: [{"dt_seconds": 1613600457}]

Output: JsonReceiver,1613600457,1613600457000,2021-02-17T14:20:57.000-08:00

Note that the name of the GeoEvent Definition used by all nodes in the GeoEvent Service is JsonReceiver (the TEXT outbound adapter prepends that to the comma delimited values is produces). Also, the TEXT output can be configured to format Date values as ISO 8601 (as shown). You can use https://www.epochconverter.com to convert either the dt_seconds or the computed dt_long to show that either can be used by a system to represent the date/time shown formatted as an ISO 8601 string.

I hope this helps --

RJ

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thank you. That indeed helped. Instead of connecting field calculators along different paths, I stacked them one after another and that seems to have done the trick.

Regards,

Shital

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hello Ctal -

I would ask that you please submit an incident with Esri Technical support so that someone can be assigned specifically to follow up with you. If you would please include a sample of the tab-delimited data your File/Text inbound connector is receiving, that will help reproduce the issue.

I'm going to take a guess and assume that you have configured a Watch a Folder for New CSV Files input using the input's Expected Date Format property to specify a Java SimpleDateFormat string. Data coming from your SQL database has only the "time" portion of a date/time string and thus is not one of the few well-known string formats GeoEvent Server recognizes for specifying a date/time value.

GeoEvent Server uses epoch values (in milliseconds) to specify Date values. Both date and time are inherently part of every Date value since the values are not a time-of-day string but rather a number of milliseconds since the Unix Epoch (Midnight Jan 1 1970). GeoEvent Server's inbound adapter will not be able to cast a string value such as "23:45:15" to a Date value "(today) 11:45:15 PM (time zone) 2020" unless the string representation of the date/time incorporates a date as well as a time.

Hope this information is helpful –

RJ

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks, RJ. My database team has cleaned up the date field a little bit (they removed time from the date field) and this is how it looks like right now. Please notice that CALL_ENTRY_DATE has a different format than CALL_ENTRY_TIME. What format should I be using in the 'Expected Date Format' field?

| CALL_ENTRY_DATE | CALL_ENTRY_TIME | CALL_ENTRY_INT | CALL_DISPATCH_DATE |

| 12/31/2020 | 5126 | 1609375886 | 12/31/2020 |

| 12/31/2020 | 13230 | 1609378350 | 12/31/2020 |

| 12/31/2020 | 31518 | 1609384518 | 12/31/2020 |

Thanks,

-Shital (Ctal)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

The Expected Date Format property is only applicable to a single attribute field whose data type, as specified by the event record's GeoEvent Definition, is a Date.

An inbound connector, like the one you are using (SystemFile/Text) would have to specify an existing GeoEvent Definition to use (vs. allowing the input to create one for you) in order to specify which attribute field(s) should be handled as Date values. However, you can only specify a single Expected Date Format mask, which means that both your "date" and your "time" would have to be part of a single attribute. An inbound adapter cannot handle the conversion of a "date" and a "time" when the values are in separate attribute fields.

None of the configurable processors in the GeoEvent Server releases up through the current release (10.8.1) provide a way to "cast" data from a String to a Date. This means your only opportunity to adapt a string representation of a date/time and create an actual Date value is as part of an inbound connector's adapter. Once data has passed from an inbound connector into a GeoEvent Service you won't be able to "cast" a value to a Date unless the value happens to be an epoch value such as 784041330000.

The good news, looking forward, is that the 10.9 release will have a toDate( ) function which both the Field Mapper and the Field Calculator will be able to use. The new function will allow two or more string values from separate attributes to be combined with a literal string to produce an ISO 8601 formatted date/time string. You will be able to send data to GeoEvent Server with a "time" and a "date" in separate fields ... for example: "dateString": "1994-11-05" and "timeString": "08:15:30-05:00" ... and write an expression such as toDate(dateString + 'T' + timeString) to create a single date/time string and write the result out to a field of type Date.

- RJ

reference: https://www.w3.org/TR/NOTE-datetime

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi RJ, so I was able to somewhat solve my issue by having date field come in as date and time field come in as date (but changed the format to string in Geoevent Definition). Thank you for your help.

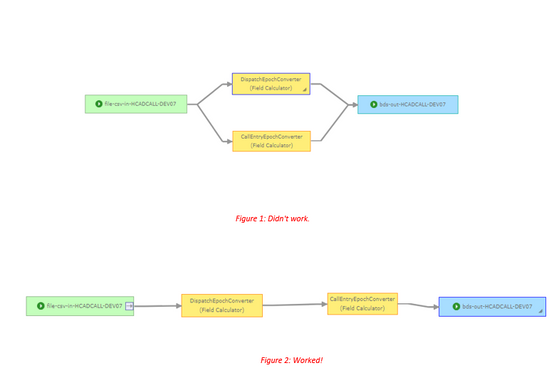

The issue I am having now is with Epoch Time. I was able to use a Field Calculator to convert my 10 digit epoch time value to 13 digits and Geoevent imports that fine in MM-DD-YYYY format. However, I have multiple such fields and when I use multiple Field Calculators, the conversion doesn't happen properly. Some fields retain epoch time while others show mixed results. So I guess my question is, can we have multiple Field Calculators in the same geoevent service?

Thanks,

Shital

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hello Shital,

There is no problem including multiple Field Calculator processors in a single GeoEvent Service. You sometimes have to "chain" a series of Field Calculators together to compute intermediate values and then perform calculations on those intermediate values. An example of this can be seen in the GeoNet thread How to switch positions on coordinates

If you know that data you are receiving is in epoch seconds, you can scale the received Long integer value by multiplying by 1000 and write the computed result to a field whose type is Date. For example, illustrated below is a GeoEvent Service whose input receives a single long integer value. The GeoEvent Definition used by the input has two additional fields, another Long and a Date, who's values are adapted as null when no values are provided in the received data structure. The first Field Calculator multiplies dt_seconds (a Long) by 1000 and writes the result into a field dt_long (also a Long). The second Field Calculator uses the exact same expression but writes the result into a field dt_date which forces the Field Calculator to perform an implicit conversion from long integer to Date.

I've chosen to show the input as JSON received over REST and the output as delimited text as that makes it clear what the data values are.

Input: [{"dt_seconds": 1613600457}]

Output: JsonReceiver,1613600457,1613600457000,2021-02-17T14:20:57.000-08:00

Note that the name of the GeoEvent Definition used by all nodes in the GeoEvent Service is JsonReceiver (the TEXT outbound adapter prepends that to the comma delimited values is produces). Also, the TEXT output can be configured to format Date values as ISO 8601 (as shown). You can use https://www.epochconverter.com to convert either the dt_seconds or the computed dt_long to show that either can be used by a system to represent the date/time shown formatted as an ISO 8601 string.

I hope this helps --

RJ

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thank you. That indeed helped. Instead of connecting field calculators along different paths, I stacked them one after another and that seems to have done the trick.

Regards,

Shital