Pgdata storage management

Hello everyone,

We've been using ArcGIS Enterprise for a few years now (classic deployment, single server). Over the last few months, we have consumed an additional 20 GB per month, which is not sustainable in the long run. This increase roughly coincides with the 11.1 update, but I'm not sure whether it is related.

When I monitor the D: drive, where everything is installed, I see that most of that storage consumption (140 GB) comes from the folder ArcGISDataStore > pgdata > base > 16401. All files there are capped at 1 GB, and I don't understand how they relate to my Portal's items. I understand that pgdata is needed for PostgreSQL to function.
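For context on the 1 GB files: PostgreSQL keeps each table and each index as its own file(s) under `base/<database OID>`, named after the relation's filenode number, and splits anything larger than 1 GB into numbered segments (`12345`, `12345.1`, ...), plus small `_fsm`/`_vm` side files. A minimal sketch, assuming you can read that folder, that adds up the segments per filenode so the heaviest tables or indexes stand out. It only reads file sizes and does not open or modify the files; `sizes_by_relfilenode` is a hypothetical helper name.

```python
import os
import re
from collections import defaultdict

# Matches "<filenode>", "<filenode>.1", "<filenode>_fsm", "<filenode>_vm", "<filenode>_init"
# and their numbered segments; ignores PG_VERSION, pg_filenode.map, etc.
SEGMENT_RE = re.compile(r"^(\d+)(?:_(?:fsm|vm|init))?(?:\.\d+)?$")


def sizes_by_relfilenode(db_dir):
    """Sum on-disk bytes per filenode in one database folder (e.g. base/16401)."""
    totals = defaultdict(int)
    for name in os.listdir(db_dir):
        match = SEGMENT_RE.match(name)
        path = os.path.join(db_dir, name)
        if match and os.path.isfile(path):
            totals[int(match.group(1))] += os.path.getsize(path)
    # Largest relations first
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

For example, `sizes_by_relfilenode(r"D:\ArcGISDataStore\pgdata\base\16401")[:20]` (the path is an assumption based on the description above) returns the 20 largest filenodes with their sizes in bytes. Each number could then be mapped back to a table or index name with `SELECT pg_filenode_relation(0, <filenode>);`, assuming you have a SQL connection to that database, which, as far as I know, ArcGIS Data Store does not expose in normal use.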

We have around 2,000 items, including 500 maps configured for offline mode (sync enabled), around 25 dashboards, and 200 users (mostly viewers). We don't use hosted rasters. We also regularly truncate and repopulate several hosted feature layers with data from Microsoft SQL Server (daily FME scripts). NB: those specific items show a size of 0 B on the Portal, despite sometimes containing tens of thousands of polygons.

That storage consumption may be justified, but I need a way to manage it on our server. When I generate a report of all Portal items sorted by size, the total is only around 1 GB, far from the 140 GB of the pgdata folder.

NB: Esri support has not been able to help me on this topic since July 2023. I'm trying to find a way to better manage my storage and to identify which items consume the most. Is it coming from the feature layers themselves, dashboards, offline sync, ghost backups, etc.?

Thank you in advance

Arnaud

Accepted Solutions

Hi @Arnaud_Leidgens, here is the code to iterate through the services; just copy the output into Excel. The size field will probably only be useful for the hosted feature services.

As for fixing the problem, make sure all patches are up to date; I know a bug was logged for this. The only method I currently know of is to overwrite the service and, rather than truncating and loading, use something like an ETL script that updates the service. That is what I wound up doing in my case.

```python
import logging

from arcgis.gis import GIS

# logging.basicConfig(level=logging.INFO, format='%(asctime)s %(levelname)-8s %(message)s', datefmt='%Y-%m-%d %H:%M:%S')

con = GIS('https://dns.com/portal', 'username', 'password', verify_cert=False)

# '!esri_' excludes the built-in esri_* system accounts
user_to_search = '!esri_'

# 1 megabyte = 1048576 bytes
BYTES_PER_MB = 1048576


def print_items(username, folder_title, items):
    """Print one semicolon-separated line per item; Size (MB) is added when the item reports one."""
    for item in items:
        line = f"{username};{folder_title};{item.title};{item.itemid};{item.type}"
        if 'size' in item:
            line += f";{round(item.size / BYTES_PER_MB)}"
        print(line)


print('Username;Folder;Title;Itemid;Type;Size')

# max_users defaults to 100, so raise it to include every account
for user in con.users.search(user_to_search, max_users=10000):
    # Items in the user's root folder
    print_items(user.username, 'root', user.items(folder=None, max_items=100000))
    # Items in each of the user's subfolders
    for folder in user.folders:
        print_items(user.username, folder['title'], user.items(folder=folder['title'], max_items=100000))
```
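Updating the service instead of truncating and reloading it could look roughly like this with the ArcGIS API for Python. A minimal sketch, assuming you have already worked out which features to add, update and delete (features as dicts with `attributes`/`geometry`; updates must carry the layer's object ID). `apply_changes` and `chunks` are hypothetical helper names, and `layer` is expected to behave like `arcgis.features.FeatureLayer`.

```python
def chunks(seq, size):
    """Yield successive slices of seq with at most `size` elements."""
    for start in range(0, len(seq), size):
        yield seq[start:start + size]


def apply_changes(layer, adds=(), updates=(), deletes=(), batch_size=1000):
    """Push incremental edits to a hosted feature layer instead of truncate + append.

    Calls layer.edit_features() once per batch: adds and updates as feature
    lists, deletes as a comma-separated string of object IDs.
    Returns the raw responses so failed edits can be inspected.
    """
    responses = []
    for batch in chunks(list(adds), batch_size):
        responses.append(layer.edit_features(adds=batch))
    for batch in chunks(list(updates), batch_size):
        responses.append(layer.edit_features(updates=batch))
    for batch in chunks(list(deletes), batch_size):
        responses.append(layer.edit_features(deletes=",".join(str(oid) for oid in batch)))
    return responses
```

For example, with `layer = con.content.get('<item id>').layers[0]`, calling `apply_changes(layer, adds=new_features, deletes=[12, 57])` touches only the rows that changed. The batch size of 1000 is an arbitrary default to keep requests small, not a documented limit.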


In case somebody else has the same issue one day: indexes were indeed accumulating. Restoring a data store backup already reduced some of this backlog. Then I manually saved the relevant hosted feature layers to a GDB and overwrote them on the Portal (no impact on layer configurations, pop-ups, dashboards, etc.). In FME, I now avoid using "truncate all / insert all" in the writer. Since AGOL recently added a way to "Rebuild spatial indexes", I hope this feature will be implemented in the next Enterprise update.


@Arnaud_Leidgens can you check whether you have a large number of WAL files? If you don't run regular backups, those can keep growing.

I suspect that failed replicas to your mobile devices are causing the problem, given the large sync volume. You can check this by unchecking Sync on the layer: it will tell you that you have x replicas waiting to replicate. Don't save the setting, though, and please make backups before any changes.
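Instead of toggling the Sync setting, the replicas could also be listed and filtered by age. A sketch, assuming replica details are dicts carrying `lastSyncDate`/`creationDate` in epoch milliseconds, as I recall them from the REST API's replica resource (check against what your server returns); `stale_replicas` is a hypothetical helper.

```python
import time

MS_PER_DAY = 24 * 60 * 60 * 1000


def stale_replicas(replicas, days, now_ms=None):
    """Return replicas not synced for more than `days`, oldest first.

    Replicas that have never synced fall back to their creationDate.
    """
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    cutoff = now_ms - days * MS_PER_DAY

    def last_activity(replica):
        return replica.get("lastSyncDate") or replica.get("creationDate") or 0

    stale = [r for r in replicas if last_activity(r) < cutoff]
    return sorted(stale, key=last_activity)
```

With the arcgis package, the input could come from something like `flc = FeatureLayerCollection.fromitem(item)` and `[flc.replicas.get(r['replicaID']) for r in flc.replicas.get_list()]`. Back up before unregistering anything.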

You will probably have to look at the data store tables to get a better idea; you can query the size of each table in Python.
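A sketch of such a query, assuming you already have a DB-API connection (for example psycopg2) to the PostgreSQL database behind the data store; obtaining one is not covered in this thread and may not be supported by Esri. It uses the standard PostgreSQL size functions and reports index size separately, since indexes were the suspect here. `largest_tables` and `format_rows` are hypothetical helpers.

```python
# Largest ordinary tables, with total size (table + indexes + TOAST) and index size.
TABLE_SIZE_SQL = """
SELECT n.nspname AS schema_name,
       c.relname AS table_name,
       pg_total_relation_size(c.oid) AS total_bytes,
       pg_indexes_size(c.oid) AS index_bytes
FROM pg_class AS c
JOIN pg_namespace AS n ON n.oid = c.relnamespace
WHERE c.relkind = 'r'
  AND n.nspname NOT IN ('pg_catalog', 'information_schema')
ORDER BY total_bytes DESC
LIMIT %s
"""


def largest_tables(conn, limit=20):
    """Run TABLE_SIZE_SQL on a DB-API connection and return the rows."""
    with conn.cursor() as cur:
        cur.execute(TABLE_SIZE_SQL, (limit,))
        return cur.fetchall()


def format_rows(rows):
    """Render (schema, table, total_bytes, index_bytes) rows in MB."""
    mb = 1024 * 1024
    return [f"{schema}.{table}\t{total / mb:.1f} MB\t(indexes {idx / mb:.1f} MB)"
            for schema, table, total, idx in rows]
```

A table whose index size dwarfs its row count would match the "index that keeps growing" pattern described in this thread.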

Truncate and load might also be causing the problem; I have seen feature layers grow to hundreds of GB with truncate-and-load scripts. What you can do is overwrite the layer and see whether the size drops.

Hope it helps.

Regards

Henry


@hlindemann Thank you for your answer. The whole server is backed up, which is why we don't run regular backups within the ArcGIS ecosystem. If we are talking about 'walarchives' files, they did pile up despite the webgisdr settings (also checked by Esri support). I have a script that deletes them weekly. The problem seems to be linked to the pgdata folder.
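A weekly clean-up of the walarchives folder could be sketched like this, with a dry-run default: it only reports the number and total size of files older than a given age unless `dry_run=False` is passed. The folder path and retention period are assumptions, and `old_files`/`purge` are hypothetical helpers. Deleting WAL archives that a backup still depends on can make that backup unrestorable, so check the data store backup settings first.

```python
import os
import time

SECONDS_PER_DAY = 86400


def old_files(folder, days, now=None):
    """Return sorted (path, bytes) pairs for regular files whose mtime is older than `days`."""
    if now is None:
        now = time.time()
    cutoff = now - days * SECONDS_PER_DAY
    result = []
    with os.scandir(folder) as entries:
        for entry in entries:
            if entry.is_file():
                info = entry.stat()
                if info.st_mtime < cutoff:
                    result.append((entry.path, info.st_size))
    return sorted(result)


def purge(folder, days, dry_run=True):
    """Report (count, total bytes) of old files; delete them only when dry_run is False."""
    files = old_files(folder, days)
    if not dry_run:
        for path, _ in files:
            os.remove(path)
    return len(files), sum(size for _, size in files)
```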

About the failed replicas, I have a feeling this could be the cause. We operate in Africa, where internet connections are less reliable, and we all use offline mode. I have had several cases where the download failed on mobile (in the last few percent). We don't use predefined 'map areas'; we prefer the manual "add offline area" option on mobile devices for flexibility (and because 'map areas' packaging sometimes failed). NB: all truncated/rewritten layers (FME/MSSQL) are included in offline areas for the 500 web maps, since the objective is to display daily ERP data in pop-up windows, mainly in offline mode, so there is a lot of synchronization happening there.

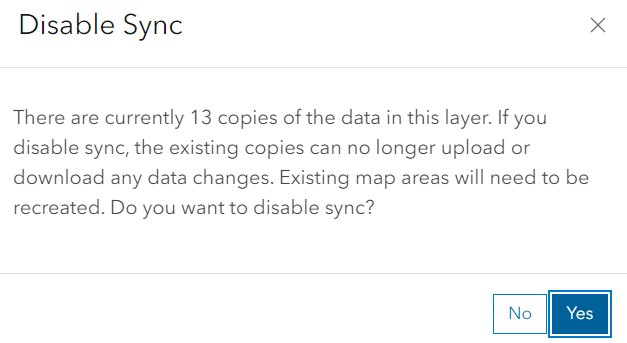

I tried to disable sync for one of our main layer. I've the message below, but I guess it is because I created a few views from this master layer.


Hi @Arnaud_Leidgens, I would like to explore the truncate-and-load script further as the possible cause, although replicas can also drive up data consumption.

In a previous support call we had, there was an index that just kept growing, so even with 0 records the feature service was consuming gigabytes of data.

As a test, can you do this: create a new hosted feature service from a .gdb, then truncate the data in ArcGIS Pro and append it again. Do this a few times and see whether the feature layer size grows; if it does, this is probably the problem.
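The same test can be scripted. A rough sketch, to be run only against a throwaway test layer: `layer` is assumed to behave like `arcgis.features.FeatureLayer` (its `manager.truncate()` and `edit_features(adds=...)` methods), and `get_size_mb` is any callable that re-reads the item size, for example `lambda: con.content.get(item_id).size / 1048576`. Both helpers are hypothetical.

```python
def truncate_append_cycles(layer, features, get_size_mb, cycles=3):
    """Repeat truncate + append and record the item size before and after each pass."""
    sizes = [get_size_mb()]
    for _ in range(cycles):
        layer.manager.truncate()            # remove all rows
        layer.edit_features(adds=features)  # load the same rows again
        sizes.append(get_size_mb())
    return sizes


def keeps_growing(sizes):
    """True if the size never drops and ends above where it started."""
    never_drops = all(b >= a for a, b in zip(sizes, sizes[1:]))
    return never_drops and sizes[-1] > sizes[0]
```

The reported size may lag behind the actual storage, so a pause between cycles may be needed, and a large feature list should be sent in batches.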

You can also run the script below and see whether it returns the size of the truncate-and-load service; just replace the item ID with your own.

```python
import logging

from arcgis.gis import GIS

logging.basicConfig(level=logging.INFO, format='%(asctime)s %(levelname)-8s %(message)s', datefmt='%Y-%m-%d %H:%M:%S')

con = GIS('https://dns.com/portal', 'username', 'password', verify_cert=False)

# Update the item ID with your own item ID
my_item = con.content.get('6d23a0e590904181904ec70000000000')

# 1 megabyte = 1048576 bytes
size_mb = int(my_item.size / 1048576)

logging.info(f'{my_item.title} size: {size_mb} MB')
```

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi @hlindemann, thank you very much for your support. I think you found the problem! I suspected this cause when I saw that truncating data did not decrease the size of the pgdata folder at all.

I created a local GDB (26,000 features = 2 MB), uploaded the zip, and deleted all features in ArcGIS Pro. The item size is still 2 MB despite there being no records, both on the item page and with your script. What would be the solution? Schedule a daily reindexing?

Your script will already be very useful to monitor the individual size of items when it isn't displayed correctly on the Portal. Since I have 2,000+ items, could you possibly edit the script to loop over all items and sort them by size, with the item name? I'm not proficient enough with Python yet, but that would be useful for many purposes.
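Looping over all items and sorting them by size could be sketched as follows, assuming a `GIS` connection `con` like the one in Henry's scripts (the `!esri_` filter and `max_items` value are carried over from them; `collect_rows`, `sort_by_size` and `to_csv` are hypothetical helper names). Writing through the `csv` module also quotes titles that happen to contain a semicolon.

```python
import csv

BYTES_PER_MB = 1024 * 1024


def collect_rows(con):
    """Gather (username, folder, title, itemid, type, size_bytes) for every non-esri_ user."""
    rows = []
    for user in con.users.search("!esri_", max_users=10000):
        folders = [None] + [f["title"] for f in user.folders]
        for folder in folders:
            for item in user.items(folder=folder, max_items=100000):
                rows.append((user.username, folder or "root", item.title,
                             item.itemid, item.type, item.get("size")))
    return rows


def sort_by_size(rows):
    """Largest first; items with an unknown (None or negative) size go last."""
    def key(row):
        size = row[5]
        return size if size is not None and size >= 0 else -1
    return sorted(rows, key=key, reverse=True)


def to_csv(rows, stream):
    """Write semicolon-separated rows with the size converted to MB."""
    writer = csv.writer(stream, delimiter=";")
    writer.writerow(["Username", "Folder", "Title", "Itemid", "Type", "SizeMB"])
    for *fields, size in rows:
        size_mb = "" if size is None or size < 0 else round(size / BYTES_PER_MB, 2)
        writer.writerow([*fields, size_mb])
```

Usage could be `with open('items_by_size.csv', 'w', newline='', encoding='utf-8') as f: to_csv(sort_by_size(collect_rows(con)), f)`. As this thread shows, reported item sizes may not account for everything stored in pgdata.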


Hi @hlindemann. Thank you very much for your help, much appreciated! We've updated to 11.2 since then.

According to the report (or the Python script), there is only 1 GB in total for all items of our Portal (file + feature size), while my pgdata folder is around 160 GB. If you're familiar with FME: I understand that I should instead use "ChangeDetector" to assign an fme_operation to each feature and link those directly to a writer (as configured in the attached picture).
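The ChangeDetector approach boils down to comparing the fresh extract with what is already in the layer and writing only the differences. A plain-Python sketch of that comparison, assuming both sides are lists of dicts keyed on a stable business ID (hypothetically `id`) and containing only the attributes you want compared, so OBJECTID and editor-tracking fields should be left out. `detect_changes` is a hypothetical helper.

```python
def detect_changes(source, target, key="id"):
    """Classify records as inserts, updates or deletes, like a change detector.

    `source` is the fresh extract (e.g. from SQL Server), `target` the current
    contents of the hosted layer. Returns (rows to insert, rows to update, keys to delete).
    """
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    inserts = [row for k, row in src.items() if k not in tgt]
    updates = [row for k, row in src.items() if k in tgt and row != tgt[k]]
    deletes = [k for k in tgt if k not in src]
    return inserts, updates, deletes
```

Unchanged rows are never rewritten, so the layer receives a handful of edits per day instead of a full delete and re-insert.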

This would prevent future problems, but not fix the past 'index' issues. How should I proceed to really overwrite those layers in FME (thus freeing storage and improving performance)? NB: those layers are used in a production environment and are linked to several dashboards I cannot afford to break. Thank you in advance.

NB: Similarly to your script, there is also the built-in report from the Portal, which gave the same results. I'm still not sure I can trust those figures, since my biggest hosted feature layer, with more than 50,000 features, is estimated at only around 1 MB (vs 70 MB for the .dbf file of the same local shapefile).


Hi @Arnaud_Leidgens, unfortunately I don't use FME day to day. What I would have done in this situation is publish a new hosted feature service and use ArcGIS Online Assistant (esri.com) to rewire the map that feeds the dashboard: go into the JSON, look for the item ID and URL, and replace them with those of the new service. This then pulls through to the dashboard.
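The same JSON edit (swapping the item ID and URL in the web map) can also be done in Python. A sketch, assuming the web map JSON is a plain dict as returned by `Item.get_data()`; `rewire` is a hypothetical helper, and the old and new IDs/URLs are yours to fill in. Pass the service URL without a trailing slash so sublayer URLs such as `.../FeatureServer/0` follow along.

```python
import copy


def rewire(node, old_id, new_id, old_url, new_url):
    """Return a copy of web map JSON with one layer's itemId and url swapped."""
    node = copy.deepcopy(node)

    def walk(obj):
        if isinstance(obj, dict):
            if obj.get("itemId") == old_id:
                obj["itemId"] = new_id
            url = obj.get("url")
            if isinstance(url, str) and url.startswith(old_url):
                obj["url"] = new_url + url[len(old_url):]
            for value in obj.values():
                walk(value)
        elif isinstance(obj, list):
            for value in obj:
                walk(value)

    walk(node)
    return node
```

For example, `data = webmap.get_data()` followed by `webmap.update(item_properties={'text': json.dumps(rewire(data, old_id, new_id, old_url, new_url))})`. Keep a copy of the original JSON before updating; dashboards that reference the layer directly rather than through the map would need the same treatment.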

Regards

Henry


Thank you. Interesting! That seems great for developers, but less so for users who do not use time series. We didn't enable any spatiotemporal data store in our infrastructure.