Folder path / Permission problem creating a Cloud Store to an Azure Data Lake

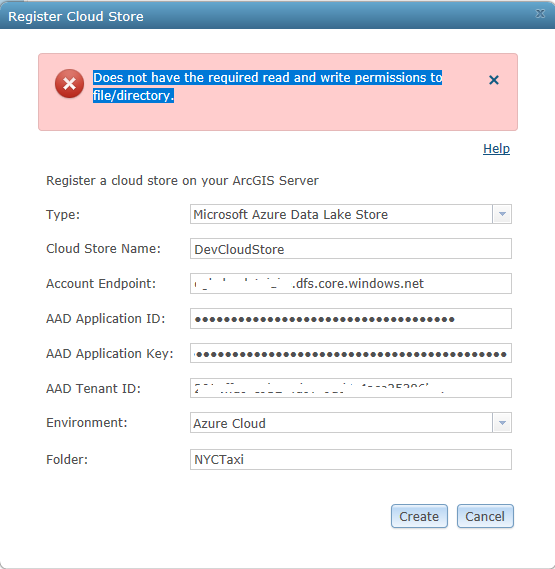

I'm trying to create a cloud store pointing to an Azure Data Lake (Gen2). I'm running ArcGIS Enterprise 10.6.1. I've gotten past my first hurdle and found working app keys and IDs (see my other post). I believe I have assigned roles that should grant the necessary permissions, but they don't seem to take effect in practice. My error looks like:

My data lake filesystem is called 'lakefilesystem', and under it I have a sample data folder called 'NYCTaxi'. I've tried 'lakefilesystem/NYCTaxi', 'lakefilesystem', and 'NYCTaxi' as the folder, and all of them give the same result as shown above.

Has anyone got this working and can point me in the right direction? Your help is appreciated!

Are you able to share a screenshot of the folder structure of your Azure Data Lake (or a mock-up of it)? I noticed you are no longer using the same file name as in your other post. Right now I am assuming you have:

- Azure Data Lake
  - Folder (NYC Taxi)
    - Taxi_data_1.csv

but from your other post I assumed:

- Azure Data Lake
  - LakeFileSystem
    - NYCTaxi
      - Taxi_data_1.csv

For example, this is the one I use:

The blacked-out term is part of the account endpoint. "simple" is the folder I specified in the cloud store registration. When I later register this as a big data file share, 5 datasets (one per folder) will show up in GeoAnalytics:

- Copy_to_Data_Store_for....

- Copy_to_Data_Store_.....

- earthquakes

- mineplant

- sdadsa

I noticed mine is Gen1, so I'll also try a Gen2 one today and let you know how it goes.

- Sarah Ambrose

Product Engineer, GeoAnalytics Team

Here's a screenshot of my folders from Azure Storage Explorer:

The whole taxi tree looks like:

- lakefilesystem
  - NYCTaxi
    - 2018
      - yellow_tripdata_2018-11.csv
      - yellow_tripdata_2018-12.csv

Thanks Josh Hevenor,

As mentioned in the other thread, the Gen 2 Data Lake isn't supported.

If you moved this over to a blob store with a similar structure, you would register the blob container 'lakefilesystem' and not include a folder. The 4 datasets (AIS, flight_delays, NYCTaxi, and reference) would be recognized and registered in your big data file share. The NYCTaxi dataset would include every folder below it as a single dataset.

If you wanted a separate dataset for each year in NYCTaxi (for example, 2018, plus folders for 2019, 2017, etc.), you would set the folder to "NYCTaxi". Every subfolder at the next "level" (what you see when you browse into NYCTaxi) would then be represented as an individual dataset.
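To make the folder-level rule concrete, here is a minimal sketch that models it offline. This is not Esri code or an ArcGIS API: `datasets_for_registration` is a hypothetical helper that takes blob paths relative to the container and groups them by the first subfolder below the registered folder. It assumes that files sitting directly in the registered folder are not treated as datasets, and the `NYCTaxi/2019/trips.csv` path is a hypothetical extra year folder added for illustration.

```python
from typing import Dict, Iterable, List


def datasets_for_registration(blob_paths: Iterable[str], folder: str = "") -> Dict[str, List[str]]:
    """Hypothetical model (not an ArcGIS API): one dataset per first-level
    subfolder of `folder`, with every file beneath that subfolder grouped into it."""
    prefix = folder.strip("/")
    prefix = prefix + "/" if prefix else ""  # empty folder = register the whole container
    datasets: Dict[str, List[str]] = {}
    for path in blob_paths:
        if not path.startswith(prefix):
            continue  # path lies outside the registered folder
        rest = path[len(prefix):]
        parts = rest.split("/")
        if len(parts) < 2:
            continue  # assumption: loose files directly in the folder are not datasets
        datasets.setdefault(parts[0], []).append(rest)
    return dict(sorted(datasets.items()))


paths = [
    "NYCTaxi/2018/yellow_tripdata_2018-11.csv",
    "NYCTaxi/2018/yellow_tripdata_2018-12.csv",
    "NYCTaxi/2019/trips.csv",  # hypothetical extra year folder
]
print(list(datasets_for_registration(paths)))             # ['NYCTaxi']
print(list(datasets_for_registration(paths, "NYCTaxi")))  # ['2018', '2019']
```

Registering the container with no folder yields one NYCTaxi dataset containing all the year folders, while registering the folder "NYCTaxi" yields one dataset per year.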

- Sarah

Thanks again,

I thought that, as a workaround, I could connect using HDFS instead of as a data lake, but I see in the help that secured stores aren't supported. Supporting them might be a quicker path to Gen2 support than writing a specific connector.