
Best Practice for ArcGIS Enterprise Multi-Machine Deployment


Hi ArcGIS Enterprise experts,

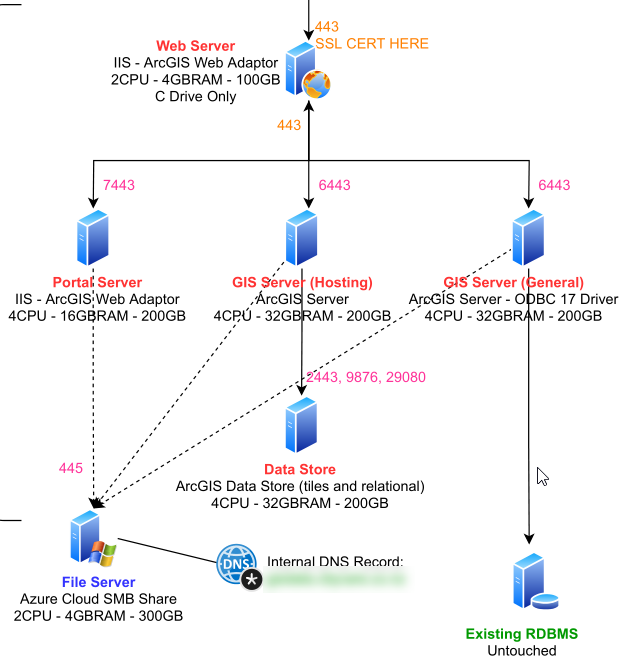

We are about to start an on-premises multi-machine deployment (Windows Server). Here is the high-level architecture of the ArcGIS Enterprise part behind the firewall.

- Web Server (3 x Web Adaptor): 2 CPU, 4 GB RAM, C: 100 GB

- Portal: 4 CPU, 16 GB RAM, C: 100 GB, D: 100 GB

- GIS Hosting: 4 CPU, 32 GB RAM, C: 100 GB, D: 100 GB

- GIS General: 4 CPU, 32 GB RAM, C: 100 GB, D: 100 GB

- Data Store: 4 CPU, 32 GB RAM, C: 100 GB, D: 100 GB

The question is around hardware resource planning. What is the best practice in terms of:

1. What is the proper disk size for each of the boxes above? The documentation gives a minimum requirement of about 10 GB of free space, but what is the optimal size for a deployment like this? What factors should drive disk-size planning, and what contributes to growth over time?

2. Installation location: should ArcGIS be installed on the system drive (C:)? Is it recommended to install it on a different drive, and if so, what is the main benefit of doing that?

Thanks for the help.


Accepted Solutions


1.

It primarily depends on the data and on what privileges users have to publish data. For example, if you expect users to host imagery (raster) on the hosting server, you will need to cater for that. Vector data is comparatively small, so you can probably ignore it when planning for scale.

The tile cache data store can also become quite big if you have significant 3D data holdings. If you deploy 10.8.1, though, you can use a NAS or S3 for this.

2.

It is generally recommended to leave C drive for the OS.

Install all software to the D drive.

All locally hosted data should be on the E drive.

This logical separation means that a Windows patch (download, install, delete installer) cannot fill up the C drive and crash the GIS, as could happen if both were installed on the same disk. The same applies to user data.

My recommendation:

- C drive: 100 GB

- D drive: 150 GB

- E drive: 250 GB

Depending on your internal business processes, extending the drives to meet user demand is usually trivial, but you will need good disk-space monitoring in place for it.
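As a minimal sketch of that monitoring, assuming Python 3 is available on each VM and the C/D/E layout above, the standard-library `shutil.disk_usage` call can report how full each volume is. The 80% threshold and the drive letters are illustrative assumptions, not Esri guidance; in practice you would feed a check like this into whatever monitoring your IT team already runs.

```python
import shutil
from dataclasses import dataclass

# Illustrative threshold (an assumption, not Esri guidance): warn above 80% used.
WARN_FRACTION = 0.80
GB = 1024 ** 3


@dataclass
class VolumeStatus:
    path: str
    total_gb: float
    used_gb: float
    free_gb: float

    @property
    def used_fraction(self) -> float:
        # Guard against a zero-sized volume to avoid division by zero.
        return self.used_gb / self.total_gb if self.total_gb else 0.0


def check_volume(path: str) -> VolumeStatus:
    """Read total/used/free space for the volume that contains `path`."""
    usage = shutil.disk_usage(path)
    return VolumeStatus(path, usage.total / GB, usage.used / GB, usage.free / GB)


def needs_attention(status: VolumeStatus, warn_fraction: float = WARN_FRACTION) -> bool:
    """True when the volume is at or above the warning threshold."""
    return status.used_fraction >= warn_fraction


if __name__ == "__main__":
    # Drive letters follow the C/D/E layout recommended above; adjust per VM.
    for drive in ("C:\\", "D:\\", "E:\\"):
        try:
            status = check_volume(drive)
        except OSError:
            print(f"{drive} not present on this machine")
            continue
        flag = "WARN" if needs_attention(status) else "ok"
        print(f"{drive} {status.used_gb:.1f} of {status.total_gb:.1f} GB used "
              f"({status.used_fraction:.0%}) {flag}")
```

Scheduled through Task Scheduler, a check like this gives early warning well before a drive needs extending.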


For all of the ArcGIS Enterprise components, the data (content, logs, services, caches, databases, etc.) is written to directories outside the installation path, and it grows as your usage of ArcGIS Enterprise grows; there's no real cap on how large it could become. By default these directories are c:\arcgisportal, c:\arcgisserver, and c:\arcgisdatastore, but you can (and should) change them during installation to another drive (or to a network file share for HA, noting that some of these directories must still live on the local machine).

Your 100GB per machine on the D-drive more than covers this for a clean installation, but it's nearly impossible to tell you how quickly you'll use this up, other than to say that tile caches could eat away at the ArcGIS Server's disk space very quickly if you're using them.

Some log files are written to the installation directories, so those directories do grow, just not significantly and far more slowly than the data directories. The minimum requirements listed in the support documentation should more than cover this.

You can install the ArcGIS Enterprise components to another drive if required; this is usually done out of organizational policy to segregate programs and data from the OS drive, but as stated above, the installation directories shouldn't grow much. Definitely create the data directories on the D drive, though; this is good practice.
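To see which of these data directories is actually growing, a short script can total the size of each one and of its largest subdirectories. This is a sketch, assuming the default c:\arcgisportal, c:\arcgisserver and c:\arcgisdatastore paths (edit `DATA_DIRS` if you moved them); `dir_size_bytes` and `largest_children` are hypothetical helpers, not part of any ArcGIS tooling. Run periodically, it shows whether tile caches or other content are driving growth.

```python
import os
from pathlib import Path

# Default data directories named in the reply above; edit if you relocated
# them (for example to D:\arcgisserver) during installation.
DATA_DIRS = [r"C:\arcgisportal", r"C:\arcgisserver", r"C:\arcgisdatastore"]
GB = 1024 ** 3


def dir_size_bytes(root: Path) -> int:
    """Sum the sizes of all files under `root`, skipping unreadable entries."""
    total = 0
    # onerror swallows permission errors on subdirectories so the walk continues.
    for dirpath, _dirnames, filenames in os.walk(root, onerror=lambda err: None):
        for name in filenames:
            try:
                total += (Path(dirpath) / name).stat().st_size
            except OSError:
                pass  # file removed or locked between listing and stat
    return total


def largest_children(root: Path, top_n: int = 5) -> list[tuple[str, int]]:
    """Size of each immediate subdirectory of `root`, largest first."""
    sizes = [(child.name, dir_size_bytes(child)) for child in root.iterdir() if child.is_dir()]
    return sorted(sizes, key=lambda item: item[1], reverse=True)[:top_n]


if __name__ == "__main__":
    for d in DATA_DIRS:
        root = Path(d)
        if not root.is_dir():
            print(f"{root}: not found")
            continue
        print(f"{root}: {dir_size_bytes(root) / GB:.2f} GB")
        for name, size in largest_children(root):
            print(f"  {name}: {size / GB:.2f} GB")
```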


Presumption: your hosting and general ArcGIS Servers are participating in the same site? If so, where do you plan to host the config store so the servers stay in sync? Also, where are you storing the Portal content directory? In your proposed environment, the Portal content directory and the server config store are critical failure points: they must be as close to 100% accessible as possible, with exceptionally low latency to Portal, Server (hosting) and Server (general).


The Esri Trust website provides the paper "ArcGIS Enterprise Web Application Filter Rules", which suggests a list of endpoints that can be blocked from external access. You can apply these rules at the external load balancer in your proposed layout.
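The filtering itself is normally configured in the load balancer or WAF product, but the matching logic can be sketched in Python. The prefixes below are illustrative assumptions (Server Administrator Directory, Server Manager and Portal Administrator Directory, with web adaptors named `arcgis` and `portal`); take the authoritative list from the filter-rules paper. The sketch decodes and normalizes the path before matching, because a rule that compares raw strings can be bypassed with `..` segments or percent-encoding.

```python
import posixpath
from urllib.parse import unquote, urlsplit

# Illustrative blocklist only (an assumption): take the real endpoints from the
# "ArcGIS Enterprise Web Application Filter Rules" paper. Paths assume web
# adaptors named "arcgis" (ArcGIS Server) and "portal" (Portal for ArcGIS).
BLOCKED_PREFIXES = (
    "/arcgis/admin",        # ArcGIS Server Administrator Directory
    "/arcgis/manager",      # ArcGIS Server Manager
    "/portal/portaladmin",  # Portal Administrator Directory
)


def normalize_path(url: str) -> str:
    """Decode percent-escapes, collapse '.', '..' and '//' segments, lower-case."""
    path = unquote(urlsplit(url).path)
    # IIS treats paths case-insensitively, so compare in lower case.
    return "/" + posixpath.normpath(path).lstrip("/").lower()


def is_blocked(url: str, blocked: tuple[str, ...] = BLOCKED_PREFIXES) -> bool:
    """True if an external request to `url` should be rejected."""
    path = normalize_path(url)
    # Match whole path segments so "/arcgis/admin" does not also catch "/arcgis/adminx".
    return any(path == prefix or path.startswith(prefix + "/") for prefix in blocked)


if __name__ == "__main__":
    for url in ("https://gis.example.com/arcgis/rest/services",
                "https://gis.example.com/arcgis/rest/../admin/"):
        print(url, "->", "block" if is_blocked(url) else "allow")
```

A production WAF has to deal with more than path prefixes (alternate hostnames, headers, request bodies); this only illustrates why normalization comes before matching.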

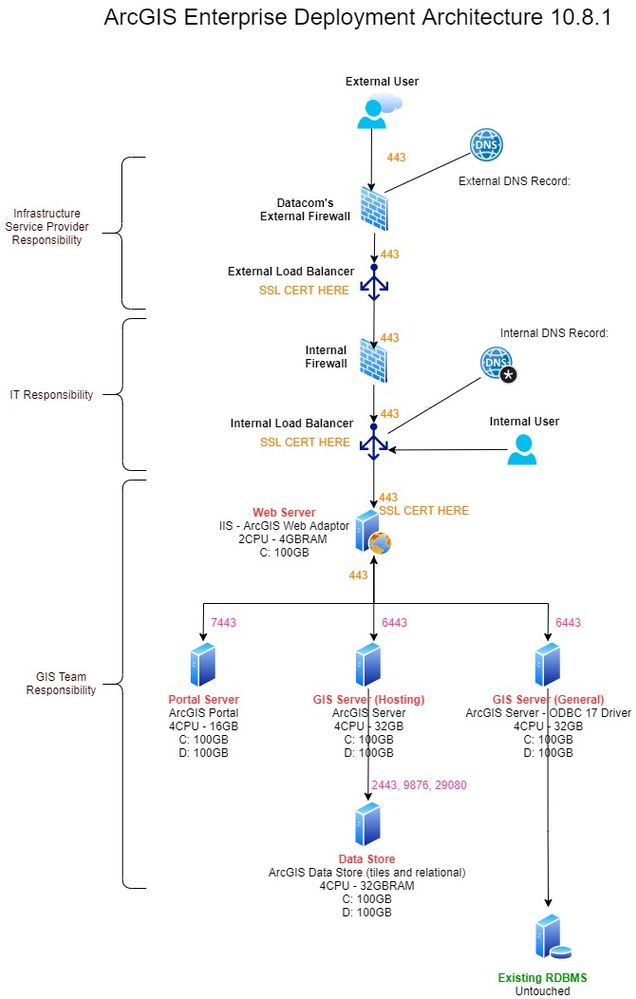

Angus's comment about where the web adaptors sit is valid, but running all the web adaptors (WAs) on the Portal tier also works and removes the need for an internal load balancer; you can bind the SSL certificate to the IIS site hosting the WAs.

That is, unless you plan to add another virtual machine at the Portal tier in future to provide high availability?


Thank you all for your input. I will mark this as solved. To summarise the replies, these are the good practices for our deployment (Windows Server, 10.8.1, multi-machine, on-premises, single-site, non-HA):

1. Install ArcGIS on a different drive from the OS drive, as general IT practice.

2. Keep the data/content as close to ArcGIS Server as possible; performance-wise, local storage beats a cloud share.

3. Disk size varies with the type and volume of data. Since we mostly serve 2D maps with less than 40 GB of caches, it is safe to start with a 100 GB dedicated disk for the Portal, GIS Server and Data Store machines, with monitoring in place so the disks can be resized if needed.

4. The configuration store is a single point of failure in a single-site deployment, so make sure it is accessible at all times. We plan to put it on our Azure SMB share.

5. We decided to keep a separate WA tier for simplicity, but it could be folded into the existing Portal server to save the box that runs the WAs.



I back up all that Angus and Craig have said, but I have a question about your architecture.

What are you intending to put in the Azure SMB share that you need to access from the in-house servers?

This share will almost certainly have relatively high latency for any read or write, so it would not be recommended for the Server system directories. It may be OK for Portal content, but I wouldn't want to use it for registered file data stores used by ArcGIS Server.

It may be a good location for backup files for Portal, Server and the Data Stores. If you use webgisdr to create backups of your entire site, you will certainly need space to keep the large outputs.
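A minimal sketch of keeping such a backup location under control, assuming webgisdr writes its output as `.webgissite` files into a single folder (check your own output location and extension): keep only the newest few backups and estimate how much space that retention policy needs. The function names, the default of three copies and the one-extra-copy headroom are hypothetical choices, and the UNC path is a placeholder.

```python
from pathlib import Path

# Assumption: webgisdr backups land in one folder as *.webgissite files.
BACKUP_PATTERN = "*.webgissite"


def prune_backups(folder: Path, keep: int = 3, pattern: str = BACKUP_PATTERN,
                  dry_run: bool = True) -> list[Path]:
    """Select all but the `keep` newest backups; delete them unless dry_run."""
    if keep < 0:
        raise ValueError("keep must be non-negative")
    # Newest first, by modification time.
    backups = sorted(folder.glob(pattern), key=lambda p: p.stat().st_mtime, reverse=True)
    stale = backups[keep:]
    if not dry_run:
        for path in stale:
            path.unlink()
    return stale


def space_needed_bytes(folder: Path, keep: int = 3, pattern: str = BACKUP_PATTERN) -> int:
    """Rough headroom: `keep` retained copies plus one in progress, sized like the largest."""
    largest = max((p.stat().st_size for p in folder.glob(pattern)), default=0)
    return largest * (keep + 1)


if __name__ == "__main__":
    # Hypothetical backup location; replace with your own.
    folder = Path(r"\\fileserver\gisbackups\webgisdr")
    if folder.is_dir():
        print("would delete:", [p.name for p in prune_backups(folder)])
        print(f"plan for ~{space_needed_bytes(folder) / 1024**3:.1f} GB")
```

`dry_run` defaults to `True` so the first run only reports what it would delete.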


Thanks for the replies, @DavidHoy, @CraigRussell, @AngusHooper1. Great insight, exactly what I needed to know.

A bit more background: we are deploying 10.8.1. We mainly host 2D maps for urban infrastructure and utilities. We do host some imagery, but not a lot; total caches are currently under 40 GB. The reason I am asking is that I am heavily constrained by the VM storage capacity IT can give me at the moment, so even 50 GB saved can speed up the implementation. I am trying to find the right balance between disk size and performance and scalability.

My key takeaways from your replies:

1. Keep C as the OS drive, 100 GB. This is also what IT suggested.

2. For the Portal server, the two ArcGIS Server machines and the Data Store server, put the ArcGIS installation on the D drive, and put the data there too (d:\arcgisportal, d:\arcgisserver, and d:\arcgisdatastore). Start with 100 GB, which can easily be upsized in the future if needed.

3. For the web server, which runs the three web adaptors, I will keep the installation on the 100 GB C drive, as it doesn't write much content to the file system.

To answer your question, @DavidHoy: we plan to put registered file folders and file gdbs on the Azure SMB share to replace the on-prem file server. I had the same concern about latency, but IT told me the performance test results were surprisingly good for small files (e.g. less than 100 MB). Because of the lack of on-prem storage, I am planning to give it a try. Worst case, we switch back to on-prem.

Another question about the Web Adaptor: is it good practice to install it on a separate, dedicated box? What about installing it on an existing web server or reverse proxy server; is there any impact?

Thanks heaps!


Hello,

I echo the comments already shared. I'll add that testing access to, and latency of, your planned data storage locations, whether file-based or RDBMS, is critical before finalizing your design. In my experience, the performance claims of third-party storage solutions, particularly where the Enterprise GIS application stack and the storage are geographically separated (many connections in the path, sometimes unpredictable latency), are usually too optimistic for Enterprise GIS, because our needs differ from the office-worker use cases that many of those claims are based on.

Rule of thumb best practice: keep your data as close to the application stack as possible, using the most direct path.
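Before committing to a storage location, a crude probe like the following can compare small-file write and read times on the share against local disk. It is only a sketch: the UNC path is a placeholder, reads may be served from the client's cache, and it does not reproduce real ArcGIS workloads (many concurrent small tile or file geodatabase reads), so treat the numbers as a sanity check rather than a benchmark.

```python
import os
import statistics
import tempfile
import time
from pathlib import Path


def measure_io_latency(folder: Path, size_bytes: int = 64 * 1024,
                       rounds: int = 20) -> dict[str, float]:
    """Time small write/read round trips in `folder`; returns median and max in ms."""
    payload = os.urandom(size_bytes)
    write_ms: list[float] = []
    read_ms: list[float] = []
    for i in range(rounds):
        probe = folder / f"latency_probe_{os.getpid()}_{i}.bin"
        start = time.perf_counter()
        with open(probe, "wb") as fh:
            fh.write(payload)
            fh.flush()
            os.fsync(fh.fileno())  # push the write through to the storage
        write_ms.append((time.perf_counter() - start) * 1000)

        start = time.perf_counter()
        data = probe.read_bytes()  # may be served from the client cache
        read_ms.append((time.perf_counter() - start) * 1000)
        probe.unlink()
        if data != payload:
            raise OSError(f"read-back mismatch for {probe}")
    return {
        "write_median_ms": statistics.median(write_ms),
        "write_max_ms": max(write_ms),
        "read_median_ms": statistics.median(read_ms),
        "read_max_ms": max(read_ms),
    }


if __name__ == "__main__":
    candidates = {
        "local temp": Path(tempfile.gettempdir()),
        # Hypothetical UNC path for the share under evaluation.
        "share": Path(r"\\fileserver\gisdata\latency-test"),
    }
    for label, folder in candidates.items():
        if not folder.is_dir():
            print(f"{label}: {folder} not reachable")
            continue
        stats = measure_io_latency(folder)
        print(label, {k: round(v, 2) for k, v in stats.items()})
```

Comparing the max values as well as the medians matters here, since occasional latency spikes are what tend to hurt the config store and Portal content.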


I share Todd's scepticism about performance claims for access to cloud storage from in-house servers.

Having said that, Portal content may not be as critical a location. Think of full cloud deployments, where AWS S3 or Azure Blob storage is an approved option in the Esri templates for Portal content and for the map tile cache. These are also "slow" storage, and perhaps they do well enough.

But local storage is always best practice, and it is certainly mentioned for map tile cache storage in an optimal Server configuration.


Well, under those constraints, may I suggest you reduce the D drive on the Portal server to (say) 40 GB and provision 60 GB on the Azure share for the Portal content directory? That way, you could bring the data folders back to an enlarged D drive on the GIS Server.

Regarding Web Adaptors: in a non-HA deployment like this, I would normally install them on the Portal server and not have a separate tier, unless you need DMZ isolation for incoming requests from outside your network.

If you have an existing reverse proxy outside the firewall, I would suggest that can give sufficient isolation, passing requests to the Web Adaptors running on the Portal server. Alternatively, there is no real problem with installing the Web Adaptors on your existing web server.

Either way, I don't think you need a dedicated Web Adaptor tier.


Thanks. I think we are clear on the disk sizes and install location. I want to take this opportunity to extend the topic a little to the overall architecture.

Here is the full picture that the diagram in my original post was cut from. I have simplified it by taking the file server (which I also intended to use as the configuration store) out of the equation, so I can focus on the main tiers.

This is a non-HA multi-machine deployment with two ArcGIS Server machines. We chose to put everything behind the firewall, with a separate web adaptor tier, for the following reasons:

1. A simpler architecture design

2. Less firewall configuration for IT or the infrastructure service provider, and easier horizontal scaling

3. The ability to use the webgisdr tool (I think there is an issue running webgisdr if the web adaptor is in the DMZ?)

However, we want to disable the REST API web interface and the Server Admin API for external access. Is this achievable with the above setup?

Also, if we remove the dedicated web adaptor tier and install the three web adaptors on the Portal server, as you would normally do, I can see the resource savings. Is there any other benefit to doing that?
