
Object detection with ArcGIS Python API: invalid argument 0: Sizes of tensors must match except in dimension 0


Hello,

For our use case, we are trying to detect a type of pipe join/weld in an image. The input images are all non-georeferenced.

Based on prior recommendations by Esri, we labeled the input images (~900) using the LabelImg tool and the labels get saved as xml documents. I tried to train the model with the input images and the generated labels by following this tutorial: (https://developers.arcgis.com/python/sample-notebooks/automate-road-surface-investigation-using-deep...).

One thing to point out: in prepare_data(data_path, batch_size=8, chip_size=500, seed=42, dataset_type='PASCAL_VOC_rectangles'), data_path points to the source images and their annotations. The tutorial doesn't make it clear whether we need to generate and use image chips instead, but it didn't throw any errors.

After downloading the SSD model, when I call ssd.show_results(thresh=0.2), I get the error in the title: "invalid argument 0: Sizes of tensors must match except in dimension 0".

Do we need to export the training data as image chips prior to calling the prepare_data()? Does it have any relation to the error above?

Thanks for any input!

Anusha


I am using Pro 2.7.2 with the Deep Learning Framework installed using the Esri installer. I was able to complete that road surface investigation sample without any problems, so it could be a problem with your input data. The prepare_data function expects a folder with two subfolders called images and labels.

The images folder should contain all your images, e.g. Adachi_20170906093835.jpg

The labels folder should contain a corresponding XML label file for each image, with the same name, e.g. Adachi_20170906093835.xml
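A quick way to confirm that every image has a label file, and every label file has an image, is to compare file names without their extensions. The sketch below is a hypothetical helper (find_unpaired is not part of arcgis.learn); it assumes only the images/labels layout described above and a guessed set of image extensions.

```python
from pathlib import Path

# Extensions treated as images; adjust to match your data (assumption).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".tif", ".tiff"}


def find_unpaired(data_path):
    """Return (images_without_labels, labels_without_images), matched on file stem."""
    root = Path(data_path)
    image_stems = {p.stem for p in (root / "images").iterdir()
                   if p.suffix.lower() in IMAGE_SUFFIXES}
    label_stems = {p.stem for p in (root / "labels").iterdir()
                   if p.suffix.lower() == ".xml"}
    missing_labels = sorted(image_stems - label_stems)
    missing_images = sorted(label_stems - image_stems)
    return missing_labels, missing_images
```

Calling find_unpaired("path/to/data") should return two empty lists for a clean dataset.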

Each XML file should have something like the following:

```xml
<annotation>
  <folder>Adachi</folder>
  <filename>Adachi_20170906093835.jpg</filename>
  <size>
    <width>600</width>
    <height>600</height>
  </size>
  <segmented>0</segmented>
  <object>
    <name>D20</name>
    <bndbox>
      <xmin>87</xmin>
      <ymin>281</ymin>
      <xmax>226</xmax>
      <ymax>432</ymax>
    </bndbox>
  </object>
</annotation>
```

Are your XML files in the same format as this? What format and width/height are your images?
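To compare a label file against that structure, it can be parsed with Python's standard xml.etree.ElementTree. The sketch below uses hypothetical helper names (read_voc, bad_boxes); the validity rule it checks (xmin < xmax and ymin < ymax, all inside the declared image size) is an assumption about what a sensible box looks like, not a documented arcgis.learn requirement.

```python
import xml.etree.ElementTree as ET


def read_voc(xml_source):
    """Return (filename, (width, height), [(name, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_source)
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # int(float(...)) tolerates coordinates written as "87.0"
        coords = [int(float(bb.findtext(k))) for k in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.findtext("name"), *coords))
    return root.findtext("filename"), (width, height), boxes


def bad_boxes(size, boxes):
    """Boxes that are empty or extend outside the declared image size."""
    width, height = size
    return [b for b in boxes
            if not (0 <= b[1] < b[3] <= width and 0 <= b[2] < b[4] <= height)]
```

For a file on disk, read_voc(Path("labels/Adachi_20170906093835.xml").read_bytes()) would work; for the example above it yields a 600x600 size and one D20 box with no bad boxes.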


Yes, there are 2 sub folders for images and labels. The labels xml document has a similar structure to the one above.

All the input images are JPEGs, and their width/height varies from image to image. Does the size (width/height) have to be the same for all the images?
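A quick way to see how much the sizes vary is to tally the width/height recorded in each label file. This is a hypothetical helper (size_histogram); it assumes the size element LabelImg wrote matches the actual image dimensions.

```python
from collections import Counter
from pathlib import Path
import xml.etree.ElementTree as ET


def size_histogram(labels_dir):
    """Count (width, height) pairs declared in each XML label file."""
    counts = Counter()
    for xml_path in Path(labels_dir).glob("*.xml"):
        size = ET.parse(str(xml_path)).getroot().find("size")
        counts[(int(size.findtext("width")), int(size.findtext("height")))] += 1
    return counts
```

More than one key in the result means the images are not all the same size.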


Yes, I think that in order to batch images together they may have to be the same size. There are a couple of things you could try; if the first one doesn't work, try the second:

- Add resize_to=500 to the prepare_data arguments

- Set batch_size=1 in the prepare_data arguments

resize_to - Optional integer. Resize the images to a given size. Works only for “PASCAL_VOC_rectangles”, “Labelled_Tiles” and “superres”. First resizes the image to the given size and then crops images of size equal to chip_size. Note: If resize_to is less than chip_size, the resize_to is used as chip_size.
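The documented resize_to rule comes down to simple arithmetic. The sketch below encodes only the rule quoted above; effective_chip_size and scale_box are hypothetical names, and scale_box assumes a plain stretch of both axes to the target size, which may not be exactly how arcgis.learn resizes internally.

```python
def effective_chip_size(chip_size, resize_to=None):
    """Per the quoted docs: if resize_to is smaller than chip_size, it becomes the chip size."""
    if resize_to is not None and resize_to < chip_size:
        return resize_to
    return chip_size


def scale_box(box, src_size, dst_size):
    """Rescale (xmin, ymin, xmax, ymax) from src_size to dst_size (width, height).

    Assumes both axes are stretched independently (no aspect-ratio padding).
    """
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    xmin, ymin, xmax, ymax = box
    return (xmin * sx, ymin * sy, xmax * sx, ymax * sy)
```

With chip_size=500 and resize_to=500 the chip size stays 500; with resize_to=300 it would drop to 300.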


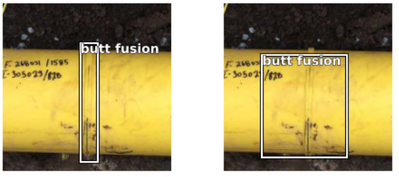

Thanks @Tim_McGinnes. I tried the resize_to argument in the prepare_data function, and it worked without any errors. I was able to train the model successfully. When I ran ssd.show_results(rows=10, thresh=0.2, nms_overlap=0.5) to detect the weld in the validation set, it returned the sample results below:

[Figure: sample validation results, with "Ground truth" and "Predictions" columns]

The prediction result for the top image is close to the ground truth (labeled data), but it is completely off for the bottom image. The labeled image does not have the weld, but the trained model has a false positive.
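Whether a prediction counts as a false positive is usually decided by its intersection over union (IoU) with the ground-truth boxes; in PASCAL VOC-style evaluation a prediction needs IoU ≥ 0.5 with a not-yet-matched ground-truth box to be a true positive. The sketch below is a simplified, generic version of that matching (iou and count_tp_fp are hypothetical names, not arcgis.learn code). On an image with no labeled weld, every prediction becomes a false positive.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp(predictions, ground_truth, iou_thresh=0.5):
    """Greedy matching: highest-scoring predictions claim ground-truth boxes first.

    predictions: list of (score, box); ground_truth: list of boxes.
    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched = list(ground_truth)
    tp = fp = 0
    for score, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= iou_thresh:
            unmatched.remove(best)  # each ground-truth box can be matched once
            tp += 1
        else:
            fp += 1
    return tp, fp, len(unmatched)
```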

Do we need more training data to improve the model accuracy? We used around 900 images to train the model on one class, and the model accuracy is 0.54.

Also, I want to point out that in ArcGIS Pro we export the training data as image chips before training the model. The tutorial doesn't make it clear whether we must use image chips instead of source images for model training. Can you please clarify this?


That's great - glad you got the model working. No, you do not need image chips for this type of project - the training data is just the source images as used in, or exported from, LabelImg.

How many epochs did you train the model for? Is the accuracy still improving? The first thing to try would be to train it a bit longer, paying attention to the accuracy values and loss graphs.
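One way to answer "is the accuracy still improving?" is to check whether the best score of the last few epochs beats everything before it, a simple patience rule similar to early stopping. still_improving and the example numbers are hypothetical, not output from this thread.

```python
def still_improving(history, patience=3, min_delta=0.0, higher_is_better=True):
    """True if the best value in the last `patience` epochs beats all earlier epochs.

    history: per-epoch metric values (e.g. accuracy, or validation loss with
    higher_is_better=False).
    """
    if len(history) <= patience:
        return True  # too few epochs to judge
    earlier, recent = history[:-patience], history[-patience:]
    if higher_is_better:
        return max(recent) > max(earlier) + min_delta
    return min(recent) < min(earlier) - min_delta
```

If it still returns True after the last epoch, training for more epochs is probably worth trying.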

The next thing to try would be a different model: both FasterRCNN and YOLOv3 are object detection models and may perform better than SSD. Just take a copy of your notebook; you should only need to change a couple of lines. From memory, for FasterRCNN it is something like this (replacing SingleShotDetector with FasterRCNN):

```python
from arcgis.learn import FasterRCNN, prepare_data  # previously: SingleShotDetector, prepare_data
ssd = FasterRCNN(data)  # previously: SingleShotDetector(data)
```


ok, thanks for clarifying.

I trained the model for 30 epochs. The average precision score is 0.56 for one class. The validation loss went down over the first few epochs and rose at some points in between, but overall it kept decreasing.
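When the validation loss bounces up and down between epochs, a trailing moving average makes the overall trend easier to judge. A minimal sketch; moving_average is a hypothetical helper and the window size of 3 is an arbitrary choice.

```python
def moving_average(values, window=3):
    """Trailing mean over `window` points; output starts at index window - 1."""
    if window < 1:
        raise ValueError("window must be >= 1")
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]
```

If the smoothed series keeps falling, the individual increases are most likely epoch-to-epoch noise rather than overfitting.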

I ran model inference with the predict method on an image (part of the training set) and got the result below:

Since the image was from the training set, I expected the result to be nearly accurate. But the detections are very inaccurate: the model detected the class everywhere except on the actual weld. I don't understand why this is the case. Can you explain what the reason could be?
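Many scattered detections are often low-confidence boxes that survive a low score threshold. The thresh and nms_overlap names below mirror the show_results arguments used earlier in this thread, but the code is a generic sketch of confidence filtering plus greedy non-maximum suppression (NMS), not arcgis.learn's implementation; filter_detections is a hypothetical name.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, thresh=0.2, nms_overlap=0.5):
    """Drop (score, box) detections below `thresh`, then apply greedy NMS.

    NMS keeps the highest-scoring box and discards any later box whose IoU
    with an already-kept box exceeds `nms_overlap`.
    """
    candidates = sorted((d for d in detections if d[0] >= thresh),
                        key=lambda d: d[0], reverse=True)
    kept = []
    for score, box in candidates:
        if all(iou(box, k[1]) <= nms_overlap for k in kept):
            kept.append((score, box))
    return kept
```

Raising thresh above 0.2 removes the weaker boxes; if the remaining ones are still off the weld, the problem is more likely the training data or model than the display settings.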

Also, I trained the model on the same dataset using YOLOv3 for 30 epochs. The model accuracy is 0.14, much lower than with SSD.