Correct workflow for publishing then updating hosted feature layer with spatially enabled dataframe

I am curious about the correct workflow for:

a) publishing a spatially enabled dataframe containing polygon data as a hosted feature layer (one time only, to create the feature layer)

b) overwriting and/or updating the hosted feature layer with a new spatially enabled dataframe: same schema but different data.

There are multiple ways to achieve (a), sdf.spatial.to_featurelayer being the easiest, but I have been unable to overwrite or update the resulting layer.

From https://developers.arcgis.com/python/sample-notebooks/publishing-sd-shapefiles-and-csv/, it seems like my only option is to export the sdf to a shapefile, zip up the shapefile, publish from that, and then follow the same process (export to shapefile and zip) to update.

Is that my only option?

I hesitate to use shapefiles because of the nuances of their field names, date types, etc.
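One of the field-name nuances mentioned above is that shapefile (dBASE) field names are limited to 10 characters, so longer dataframe column names are shortened on export and may collide. A minimal sketch for spotting such columns beforehand, using only the standard library. The function name `find_truncation_collisions` and the sample column names are hypothetical, and a given exporter may rename fields differently from plain truncation:

```python
from collections import defaultdict

SHAPEFILE_FIELD_NAME_LIMIT = 10  # dBASE (.dbf) field names hold at most 10 characters


def find_truncation_collisions(columns, limit=SHAPEFILE_FIELD_NAME_LIMIT):
    """Group column names that share the same first `limit` characters."""
    groups = defaultdict(list)
    for name in columns:
        groups[name[:limit]].append(name)
    # Keep only the shortened names claimed by more than one column.
    return {short: names for short, names in groups.items() if len(names) > 1}


# Hypothetical column names, for illustration only.
columns = ["HoleID", "IntersectionFrom", "IntersectionTo", "Grade"]
collisions = find_truncation_collisions(columns)
print(collisions)
```

Running the same check on `list(sdf.columns)` before exporting would show which columns need renaming first.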

Another issue is that I don't have access to arcpy on this machine, so according to https://developers.arcgis.com/python/guide/introduction-to-the-spatially-enabled-dataframe/ I can only export to shapefile.
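If the shapefile route is taken, the "zip up" step can be done with the standard library alone. The following is a sketch under assumptions: the helper name `zip_shapefile` is hypothetical, and the list of sidecar extensions covers only the commonly seen ones.

```python
import zipfile
from pathlib import Path

# Sidecar extensions commonly found next to a .shp file; not every shapefile has all of them.
SHAPEFILE_EXTENSIONS = (".shp", ".shx", ".dbf", ".prj", ".cpg")


def zip_shapefile(shp_path, zip_path=None):
    """Zip a .shp file together with whichever sidecar files exist beside it."""
    shp_path = Path(shp_path)
    zip_path = Path(zip_path) if zip_path else shp_path.with_suffix(".zip")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for ext in SHAPEFILE_EXTENSIONS:
            part = shp_path.with_suffix(ext)
            if part.exists():
                # Store each file at the archive root, without directory components.
                archive.write(part, arcname=part.name)
    return zip_path
```

For example, `zip_shapefile("parcels.shp")` (a hypothetical file name) would write `parcels.zip` next to the shapefile, ready to be added as an item.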

Hi @Jay_Gregory

The script I've written reads a GDB feature class and updates a web feature layer, i.e. appends new data to it.

You just need to change the attribute field names to match your own table.

    from copy import deepcopy

    import pandas as pd
    from arcgis import geometry
    from arcgis.gis import GIS
    from arcgis.features import GeoAccessor, GeoSeriesAccessor  # registers the .spatial accessor

    gis = GIS('url', 'username', 'password')

    sdf_to_append = pd.DataFrame.spatial.from_featureclass(r"path to gdb feature class")

    # search for the hosted feature layer/service
    featureLayer_item = gis.content.search('type: "Feature Service" AND title:"xxxxx"')

    # access the item's feature layers
    feature_layers = featureLayer_item[0].layers

    # query all the features
    fset = feature_layers[0].query()

    features_to_be_added = []

    # use an existing feature as a template (the layer must already contain at least one feature)
    template_hostedFeature = deepcopy(fset.features[0])

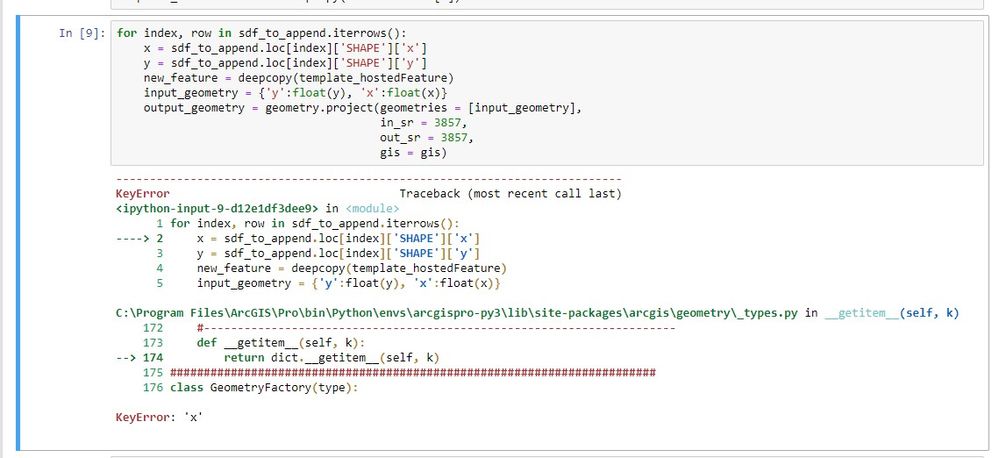

    for index, row in sdf_to_append.iterrows():
        # point data only: the 'x' and 'y' keys exist only on point geometries
        x = row['SHAPE']['x']
        y = row['SHAPE']['y']

        new_feature = deepcopy(template_hostedFeature)

        input_geometry = {'y': float(y), 'x': float(x)}
        output_geometry = geometry.project(geometries=[input_geometry],
                                           in_sr=3857,
                                           out_sr=3857,
                                           gis=gis)

        # assign the updated values
        new_feature.geometry = output_geometry[0]
        new_feature.attributes['HoleID'] = row['HoleID']
        new_feature.attributes['Project'] = row['Project']
        new_feature.attributes['InterFrom'] = row['InterFrom']
        new_feature.attributes['InterTo'] = row['InterTo']
        new_feature.attributes['Grade'] = row['Grade']
        new_feature.attributes['GramMetre'] = row['GramMetre']
        new_feature.attributes['RegoProf'] = row['RegoProf']
        new_feature.attributes['MidPoint'] = row['MidPoint']
        new_feature.attributes['IntercptNo'] = row['IntercptNo']
        new_feature.attributes['Overlap'] = row['Overlap']
        new_feature.attributes['EndDate'] = row['EndDate']
        new_feature.attributes['EAST'] = row['EAST']
        new_feature.attributes['NORTH'] = row['NORTH']
        new_feature.attributes['RL'] = row['RL']
        new_feature.attributes['Width'] = row['Width']

        features_to_be_added.append(new_feature)

    # send all new features to the hosted layer in one edit
    feature_layers[0].edit_features(adds=features_to_be_added)

    print("Hosted feature layer is updated with gdb feature class!")
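The script above sends every new feature in a single `edit_features` call. For larger tables it may be worth sending the adds in batches instead; a minimal sketch follows. The helper names `chunked` and `add_in_batches` and the default batch size of 500 are assumptions for illustration, not values taken from this thread or from the API documentation.

```python
def chunked(items, size):
    """Yield successive slices of `items` holding at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def add_in_batches(layer, features, batch_size=500):
    """Call layer.edit_features(adds=...) once per batch and collect the responses.

    `layer` is expected to behave like a feature layer, i.e. to expose
    edit_features(adds=...); `batch_size` is an arbitrary illustrative default.
    """
    return [layer.edit_features(adds=batch) for batch in chunked(features, batch_size)]
```

With the names from the script above, this would be called as `add_in_batches(feature_layers[0], features_to_be_added)`.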

However, if you want to overwrite a feature layer, it's recommended to truncate it first and then overwrite it:

    from arcgis.gis import GIS
    from arcgis.features import FeatureLayerCollection

    gis = GIS("url", "username", "password")

    # get the feature layer
    flc_item = gis.content.get("item_id")
    fLyr = flc_item.layers[0]

    # delete all existing features from the layer
    fLyr.manager.truncate()

    # get the gdb item
    gdb_item = gis.content.get("gdb_item_id")

    # overwrite the hosted feature layer collection with the gdb item
    feature_layer_collection = FeatureLayerCollection.fromitem(flc_item)
    feature_layer_collection.manager.overwrite(gdb_item)

I hope that's helpful.

Cheers

Mehdi
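When the source data is a dataframe rather than a gdb item, one possible variation is to combine the truncate step with `edit_features(adds=...)` from the first script instead of `overwrite`. The sketch below assumes the new features have already been built (for instance via `sdf.spatial.to_featureset().features`, which is an assumption about how they would be obtained, not something shown in this thread) and that the response follows the usual `addResults` shape. The function name `replace_all_features` is hypothetical.

```python
def replace_all_features(layer, new_features):
    """Empty `layer`, add `new_features`, and return the number of failed adds.

    `layer` is expected to behave like a hosted feature layer, exposing
    manager.truncate() and edit_features(adds=...).
    """
    # Remove every existing feature while keeping the layer and its schema.
    layer.manager.truncate()

    # Add the replacement features in a single edit.
    response = layer.edit_features(adds=new_features)

    # Each entry in addResults is expected to carry a boolean 'success' flag.
    results = response.get("addResults", [])
    failed = [r for r in results if not r.get("success")]
    return len(failed)
```

A return value of 0 means every add reported success; anything else is worth inspecting before relying on the layer.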

In your first solution, you read from a file geodatabase. The machine I'm working on only has the Python API installed, not arcpy, and according to https://developers.arcgis.com/python/guide/introduction-to-the-spatially-enabled-dataframe/ it seems I'll be unable to work with gdb feature classes. Regarding the second option, my source data is a spatially enabled dataframe, which I can't publish natively using the Python API. So will I need to export it to a shapefile to do this? That is, publish from a shapefile, then on each subsequent overwrite (I need to overwrite, not update) export to a shapefile, zip it up, and overwrite?

You can also read shapefiles with sdf = pd.DataFrame.spatial.from_featureclass("shapefiles or gdb fc").

The following line publishes the spatially enabled dataframe to AGOL or Portal, in a folder you specify:

    hosted_feature_lyr = sdf.spatial.to_featurelayer('feature_layer_name', folder='folder_name')

Have you tested your second method to confirm that it actually overwrites the old content with the new gdb_item? The overwrite returns 'success': True and the timestamp on the online feature layer updates, but in practice the data seems to be restored to what was originally published rather than replaced with the gdb_item data. As per: https://community.esri.com/t5/arcgis-api-for-python-questions/overwrite-not-working-wrong-number-of-...

Thoughts?

Brian
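Since a reported success does not seem to guarantee that the data changed, a cheap sanity check after an overwrite is to compare the layer's feature count with the number of rows in the source data. This is only a sketch: `counts_match` is a hypothetical helper, and matching counts do not prove that the attribute values were replaced.

```python
def counts_match(layer, expected_count):
    """Return True when the layer's current feature count equals `expected_count`.

    `layer` is expected to behave like a feature layer whose query() returns
    an integer when called with return_count_only=True.
    """
    actual = layer.query(where="1=1", return_count_only=True)
    return actual == expected_count
```

For a dataframe source, `expected_count` would typically be `len(sdf)`.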

Thanks for the script.

Could you help me out with this bit...

KeyError 'x'.

Is that because your script is for point data? I would like to append to a polygon dataset.
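The `KeyError` is consistent with that reading: in Esri JSON a point geometry carries `x` and `y` keys, while a polygon carries `rings` (and a polyline `paths`), so looking up `['x']` on a polygon shape fails. A minimal sketch of a type-agnostic alternative, which copies the whole geometry instead of picking out coordinates. The helper names `geometry_kind` and `geometry_for_feature` are hypothetical, and the sketch skips the projection step from the script:

```python
from copy import deepcopy


def geometry_kind(geom):
    """Guess the geometry type of an Esri JSON geometry from its keys."""
    if "x" in geom and "y" in geom:
        return "point"
    if "rings" in geom:
        return "polygon"
    if "paths" in geom:
        return "polyline"
    if "points" in geom:
        return "multipoint"
    return "unknown"


def geometry_for_feature(shape):
    """Return an independent dict copy of a geometry, whatever its type."""
    return deepcopy(dict(shape))


# Hypothetical polygon, for illustration only.
polygon = {
    "rings": [[[0, 0], [0, 10], [10, 10], [10, 0], [0, 0]]],
    "spatialReference": {"wkid": 3857},
}
print(geometry_kind(polygon))
```

In the loop from the script, the `x`/`y` lookup would then give way to something like `new_feature.geometry = geometry_for_feature(row['SHAPE'])`, assuming the dataframe and the hosted layer already share a spatial reference.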