ArcGIS for Python and arcpy - drive time areas result and local data

Hello,

I am new to the ArcGIS API for Python, but I have a lot of experience with arcpy.

My aim is to use the ArcGIS API for Python to run a drive-time areas (DTA) analysis, as explained here, and then use the result to perform spatial selections with arcpy on some local data (e.g. shapefiles or others).

My questions:

- Do I need to upload all the input data or is there a way to avoid this step?

- If I have to, is it possible via the API?

- Will I then be able to use the result from DTA to perform spatial selection with arcpy against local data or do I have to upload the local data/download the result locally?

Regarding this last point, I guess one thing to handle would be the JSON format of the SHAPE field in the DTA result.

I know that from ArcGIS Pro one can use feature layers from AGOL directly for this kind of operation, whereas in ArcMap one has to make a local copy first. But what about from a Python script?

Is there a better option? Thanks in advance for any hints or advice.

Accepted Solutions

DISCLAIMER: Use the provided code at your own risk, or you may burn through your credits! (See my comment below the answer.)

Answering myself.

It's been tough but I did it.

First of all, I have to say the ArcGIS API for Python documentation is not very good, at least for the create_drive_time_areas function.

Anyway, this is how I ran the DTA analysis with a local feature class:

- convert it to a FeatureSet

- convert the FeatureSet to JSON and then to a dict

- use this dict as input to the create_drive_time_areas function
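
For readers who cannot inspect an arcpy FeatureSet, the JSON-to-dict step can be sketched without arcpy. The JSON string below is a hand-written stand-in for what `feature_set.JSON` might return for a point feature class; the field names and coordinates are made up, and the key check in `load_feature_dict` (a hypothetical helper) reflects what this sketch expects, not a documented requirement of create_drive_time_areas.

```python
import json

# Hand-written stand-in for the string returned by an arcpy FeatureSet's JSON
# property. Field names and coordinates are invented for illustration.
SAMPLE_FEATURE_SET_JSON = """
{
  "displayFieldName": "",
  "geometryType": "esriGeometryPoint",
  "spatialReference": {"wkid": 4326},
  "fields": [
    {"name": "OBJECTID", "type": "esriFieldTypeOID", "alias": "OBJECTID"},
    {"name": "NAME", "type": "esriFieldTypeString", "alias": "NAME", "length": 50}
  ],
  "features": [
    {"attributes": {"OBJECTID": 1, "NAME": "Site A"},
     "geometry": {"x": -117.1956, "y": 34.0564}}
  ]
}
"""


def load_feature_dict(feature_json):
    """Parse a feature-set JSON string and check the keys this sketch relies on."""
    feature_dict = json.loads(feature_json)
    # ASSUMPTION: these three keys are the minimum worth checking before
    # spending credits; the real service may require more or fewer.
    required = {"geometryType", "spatialReference", "features"}
    missing = required - feature_dict.keys()
    if missing:
        raise ValueError("feature set JSON is missing keys: " + ", ".join(sorted(missing)))
    return feature_dict


feature_dict = load_feature_dict(SAMPLE_FEATURE_SET_JSON)
print(feature_dict["geometryType"], len(feature_dict["features"]))
```

Checking the structure locally before calling the analysis is cheap, whereas a failed or mistaken hosted run may not be.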

I am then going to convert the result of the analysis (a hosted feature layer in my organizational AGOL account, which I query into a FeatureSet) back to an in_memory feature class and do my stuff with arcpy.

Before posting the code I have to warn you:

- As of now, the docs give no hint about the expected format of the input layer for create_drive_time_areas. Through trial and error I got this message:

Exception: Invalid format of input layer. url string, feature service Item, feature service instance or dict supported

- The "estimate=True" option, which should tell you the credit consumption, does not work:

Failed to execute (EstimateCredits).

Below is the code I used (adapted from Drive time analysis for opioid epidemic | ArcGIS for Developers; sorry, I don't know how to format code on this site...).

I left out only the part where I use the result to perform the spatial query with local data (it's up to you, but I should have given you enough information so far).

    import json
    from datetime import datetime

    import arcpy
    from arcgis.gis import GIS
    from arcgis.features.use_proximity import create_drive_time_areas

    USERNAME = "YOUR_USERNAME"
    PASSWORD = "YOUR_PASSWORD"
    IN_FC = r"example.gdb\test"

    # Create an arcpy FeatureSet and load the local feature class into it
    feature_set = arcpy.FeatureSet()
    feature_set.load(IN_FC)

    # Connect to GIS
    gis = GIS("https://www.arcgis.com", USERNAME, PASSWORD)

    # Convert the FeatureSet to JSON and then to a dict
    feature_json = feature_set.JSON
    feature_dict = json.loads(feature_json)
    print(feature_dict)

    # Generate service areas (this runs a hosted analysis and consumes credits)
    result = create_drive_time_areas(feature_dict, [5],
                                     output_name="TEST" + str(datetime.now().microsecond),
                                     travel_mode="Walking",
                                     overlap_policy='Dissolve')

    # Check type of result
    type(result)

    # Share results for public consumption
    result.share(everyone=True)

    # Query the first layer of the result into a FeatureSet
    drive_times_all_layer = result.layers[0]
    driveAll_features = drive_times_all_layer.query()
    print('Total number of rows in the service area (up to 5 mins) dataset: ' + str(len(driveAll_features.features)))

    # Get results as a SpatialDataFrame
    driveAll = driveAll_features.sdf
    driveAll.head()

I've made some improvements.

From the same sample notebook I mentioned in my question, I gather that I can store my result in a FeatureSet and do something with it.

    # Convert it to a FeatureSet
    drive_times_all_layer = result.layers[0]
    driveAll_features = drive_times_all_layer.query()

Maybe the best thing I can do then is to save this FeatureSet to a temporary local feature class, as explained in Quick Tips: Consuming Feature Services with Geoprocessing:

    arcpy.CopyFeatures_management(fs, r"c:\local\data.gdb\permits")

I still need to try it with some actual data; I will keep you up to date with my findings.
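
Once the drive-time polygons are available locally, the spatial selection itself would normally be an arcpy call. As a library-free illustration of the test behind such a selection, here is a minimal sketch that checks points against a polygon in Esri JSON form (a dict with a `rings` list). It uses the even-odd ray-casting rule, which treats inner rings as holes. The function names and sample coordinates are hypothetical, and the test is planar: it assumes the points and the polygon share the same spatial reference.

```python
def point_in_esri_polygon(x, y, polygon):
    """Even-odd ray-casting test against an Esri JSON polygon dict.

    Every ring (outer boundary or hole) toggles the result, so a point
    inside a hole ends up outside. Rings are assumed to be closed, i.e.
    the first vertex is repeated as the last one.
    """
    inside = False
    for ring in polygon["rings"]:
        for p1, p2 in zip(ring, ring[1:]):
            x1, y1 = p1[0], p1[1]  # ignore optional z/m values
            x2, y2 = p2[0], p2[1]
            # Does a horizontal ray from (x, y) towards +x cross this edge?
            if (y1 > y) != (y2 > y):
                x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                if x < x_cross:
                    inside = not inside
    return inside


def select_within(points, polygon):
    """Return the (name, x, y) tuples that fall inside the polygon."""
    return [p for p in points if point_in_esri_polygon(p[1], p[2], polygon)]


# Made-up stand-in for one result polygon: a square with a square hole.
service_area = {
    "rings": [
        [[0, 0], [0, 10], [10, 10], [10, 0], [0, 0]],
        [[4, 4], [6, 4], [6, 6], [4, 6], [4, 4]],
    ]
}
local_points = [("a", 2, 2), ("b", 5, 5), ("c", 12, 5)]
selected = select_within(local_points, service_area)
print(selected)
```

For real data, a geoprocessing tool or geometry library is the better choice; this only shows what the selection amounts to.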

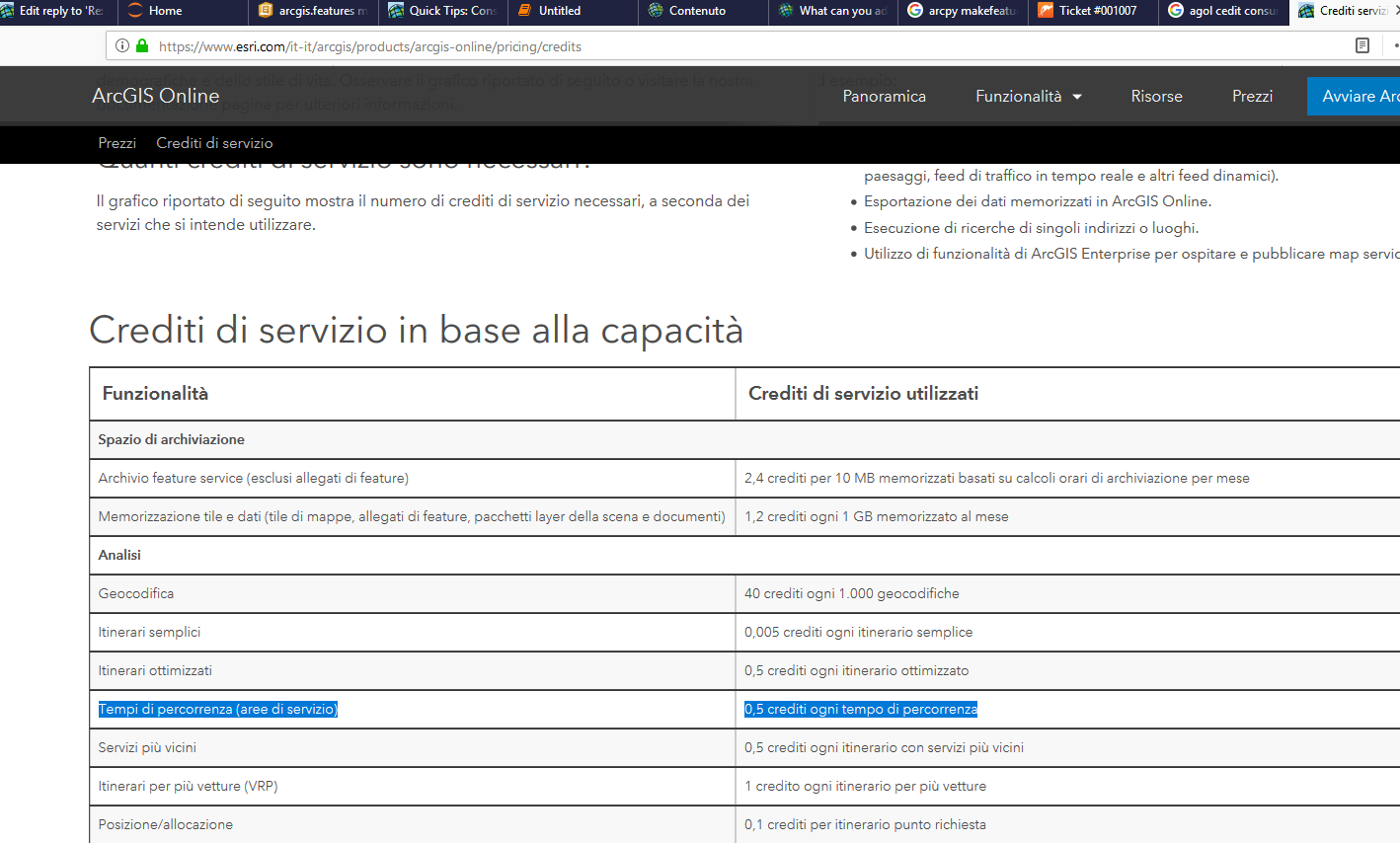

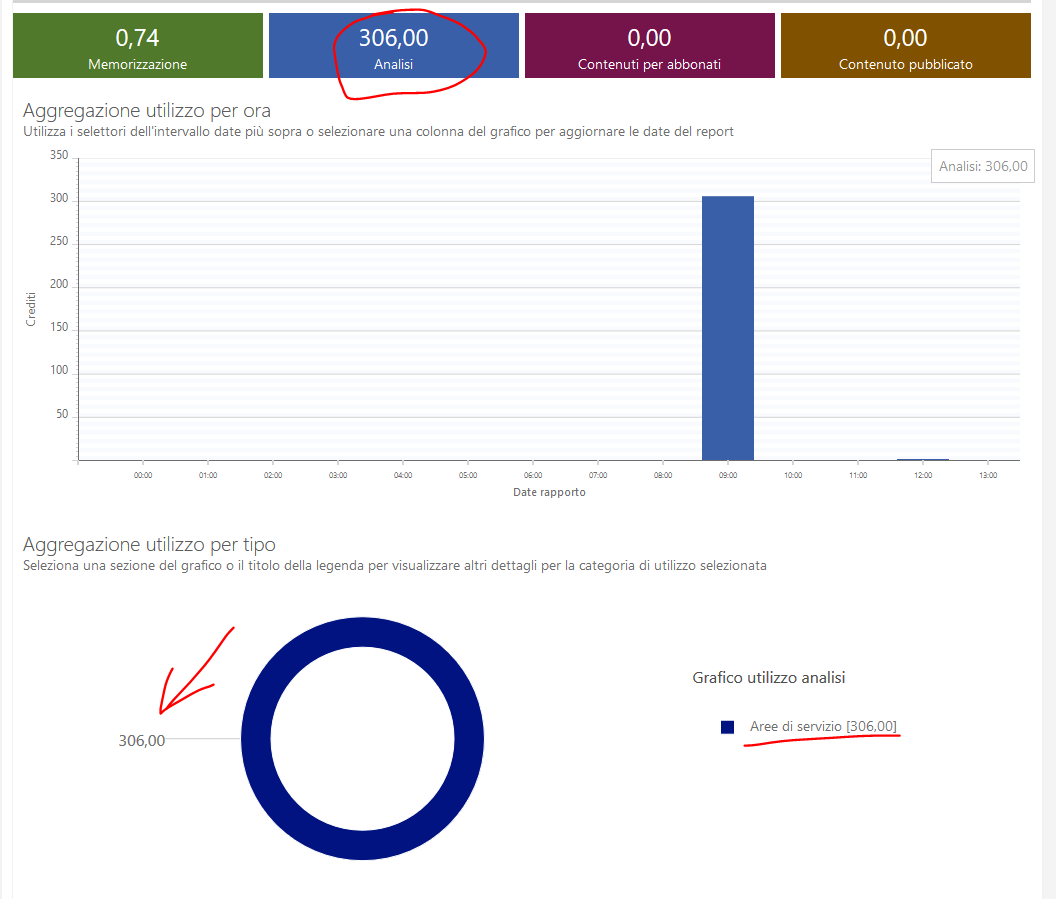

the "estimate=True" which should tell you the credit consumption does not work.

Not only does it not work, it also consumes hundreds of credits!

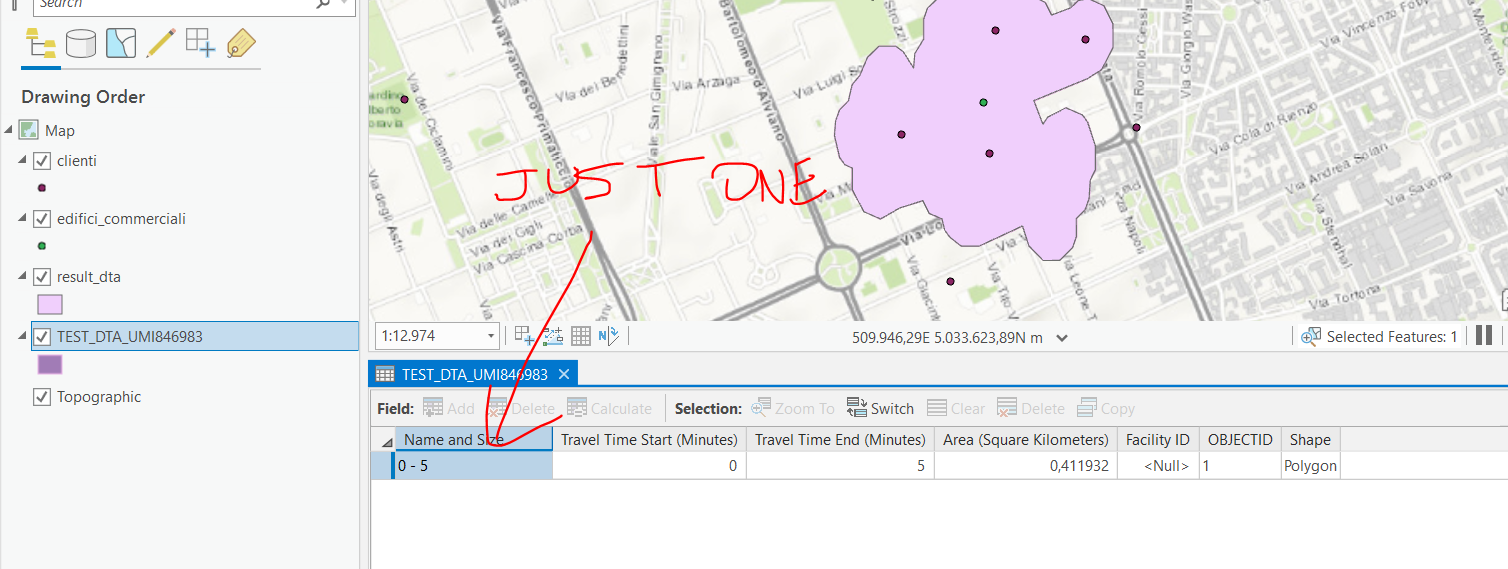

I only used a feature set with ONE point and I consumed more than 300 credits. WTF?!

Use the provided code very carefully or you'll blow through your credits!

Replying to myself again:

The credit consumption probably came from accidentally running the code in the example provided in Drive time analysis for opioid epidemic | ArcGIS for Developers, as I found a layer named "DriveTimeToClinics_All_<DATETIME>" in my hosted layers.

I know how credits in AGOL work, and I probably should have paid more attention during my tests. However, the sample should carry a warning about credit consumption, or at least not consume 300 credits, so that someone like me who runs it for testing purposes does not use up all their credits.
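
A rough sanity check along these lines can be done before running anything. The sketch below assumes one billed area per input point per break value and takes the per-area rate as an argument; the 0.5 credits used in the example is an assumption that should be checked against Esri's current credit table, and `estimate_drive_time_credits` is a hypothetical helper, not part of the API.

```python
def estimate_drive_time_credits(n_points, break_values, credits_per_area):
    """Rough estimate: one billed area per input point per break value.

    ASSUMPTION: billing is per generated area; overlap policies such as
    'Dissolve' may or may not change what is actually charged.
    """
    if n_points < 0 or credits_per_area < 0:
        raise ValueError("n_points and credits_per_area must be non-negative")
    return n_points * len(break_values) * credits_per_area


# ASSUMPTION: credits per drive-time area; verify against the current rates.
ASSUMED_RATE = 0.5

one_point_run = estimate_drive_time_credits(1, [5], ASSUMED_RATE)
areas_behind_300_credits = 300 / ASSUMED_RATE

print("one point, one break value:", one_point_run, "credits")
print("areas needed to reach 300 credits:", areas_behind_300_credits)
```

Under that assumed rate, a single point with one break value costs a fraction of a credit, which is consistent with the conclusion that the 300 credits came from the larger sample run rather than from the one-point test.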

I'm currently struggling with this as well, the documentation being horrible. My issue is that I don't know what the imported data looks like before it is turned into a FeatureSet, so I have no idea how to adapt this for my use. Also, I don't have access to ArcPy, so that is not an option for me. What I am trying to do is simply enter an address or a set of coordinates and retrieve the drive-time analysis surrounding that location. Where do I even begin with what is provided here?
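
One possible starting point without ArcPy is to build the input dict by hand from coordinates. The sketch below produces a dict in the Esri JSON feature-set layout (geometry type, spatial reference, fields, features), which is the kind of structure the accepted answer obtains from arcpy. The helper name `points_to_feature_dict` and the `NAME` field are hypothetical, and whether create_drive_time_areas accepts this dict unchanged has not been verified here. An address would first have to be geocoded to coordinates (the API ships an `arcgis.geocoding.geocode` function for that), which is not shown.

```python
def points_to_feature_dict(points, wkid=4326):
    """Build an Esri JSON style feature-set dict from (name, lon, lat) tuples.

    ASSUMPTION: the layout mirrors the JSON of a point FeatureSet; the
    NAME field is invented for illustration.
    """
    features = []
    for oid, (name, lon, lat) in enumerate(points, start=1):
        features.append({
            "attributes": {"OBJECTID": oid, "NAME": name},
            "geometry": {"x": lon, "y": lat},
        })
    return {
        "geometryType": "esriGeometryPoint",
        "spatialReference": {"wkid": wkid},
        "fields": [
            {"name": "OBJECTID", "type": "esriFieldTypeOID", "alias": "OBJECTID"},
            {"name": "NAME", "type": "esriFieldTypeString", "alias": "NAME", "length": 50},
        ],
        "features": features,
    }


# Made-up location given as longitude/latitude in WGS 84 (wkid 4326).
feature_dict = points_to_feature_dict([("My location", -117.1956, 34.0564)])
print(feature_dict["features"][0])
```

The resulting `feature_dict` would take the place of the dict that the accepted answer derives from a local feature class; the credit warnings in this thread apply just the same.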

Like you, I was able to answer my own question, so my issue is resolved.