- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Online

- :

- ArcGIS Online Documents

- :

- Overwrite ArcGIS Online Feature Service using Trun...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Overwrite ArcGIS Online Feature Service using Truncate and Append

Overwrite ArcGIS Online Feature Service using Truncate and Append

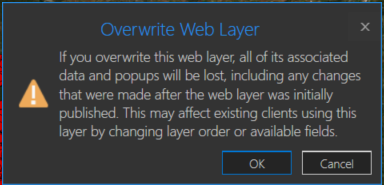

You may have a need to overwrite an ArcGIS Online hosted feature service due to feature and/or attribute updates. However, this could cause some data loss such as pop-ups, symbology changes, etc in the hosted feature service. For example, you will receive a warning about this when you try to overwrite a feature service in ArcGIS Pro:

One way around this is to use the ArcGIS API for Python. If you are the data owner, or Administrator, you can truncate the feature service, and then append data. This is essentially an overwrite of the feature service. The below script will do this by specifying a local feature class and the item id of the feature service you wish to update. The script will then execute the following steps:

- export the feature class to a temporary File Geodatabase

- zip the File Geodatabase

- upload the zipped File Geodatabase to AGOL

- truncate the feature service

- append the zipped File Geodatabase to the feature service

- delete the uploaded zipped File Geodatabase in AGOL

- delete the local zipped File Geodatabase

- delete the temporary File Geodatabase

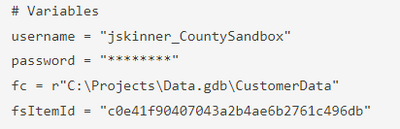

Here is an explanation of the script variables:

- username = ArcGIS Online username

- password = ArcGIS Online username password

- fc = path to feature class used to update feature service

- fsItemId = the item id of the ArcGIS Online feature service

- featureService = True if updating a Feature Service, False if updating a Hosted Table

- hostedTable = True is updating a Hosted Table, False if updating a Feature Service

- layerIndex = feature service layer index

- disableSync = True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

- updateSchema = True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

- upsert = True will not truncate the feature service, requires a field with unique values

- uniqueField = Field that contains unique values

Note: For this script to work, the field names in the feature class must match the field names in the hosted feature service. The hosted feature service can have additional fields, though.

Video

Script

import arcpy, os, time, uuid

from zipfile import ZipFile

from arcgis.gis import GIS

import arcgis.features

# Variables

username = "jskinner_rats" # AGOL Username

password = "********" # AGOL Password

fc = r"c:\DB Connections\GIS@PLANNING.sde\GIS.Parcels" # Path to Feature Class

fsItemId = "a0ad52a76ded483b82c3943321f76f5a" # Feature Service Item ID to update

featureService = True # True if updating a Feature Service, False if updating a Hosted Table

hostedTable = False # True is updating a Hosted Table, False if updating a Feature Service

layerIndex = 0 # Layer Index

disableSync = True # True to disable sync, and then re-enable sync after append, False to not disable sync. Set to True if sync is not enabled

updateSchema = True # True will remove/add fields from feature service keeping schema in-sync, False will not remove/add fields

upsert = True # True will not truncate the feature service, requires a field with unique values

uniqueField = 'PIN' # Field that contains unique values

# Environment Variables

arcpy.env.overwriteOutput = True

arcpy.env.preserveGlobalIds = True

def zipDir(dirPath, zipPath):

'''

Zips File Geodatabase

Args:

dirPath: (string) path to File Geodatabase

zipPath: (string) path to where File Geodatabase zip file will be created

Returns:

'''

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

if 'lock' not in file:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

def updateFeatureServiceSchema():

'''

Updates the hosted feature service schema

Returns:

'''

# Get required fields to skip

requiredFields = [field.name for field in arcpy.ListFields(fc) if field.required]

# Get feature service fields

print("Get feature service fields")

featureServiceFields = {}

for field in fLyr.manager.properties.fields:

if field.type != 'esriFieldTypeOID' and 'Shape_' not in field.name:

featureServiceFields[field.name] = field.type

# Get feature class/table fields

print("Get feature class/table fields")

featureClassFields = {}

arcpy.env.workspace = gdb

if hostedTable == True:

for field in arcpy.ListFields(fc):

if field.name not in requiredFields:

featureClassFields[field.name] = field.type

else:

for field in arcpy.ListFields(fc):

if field.name not in requiredFields:

featureClassFields[field.name] = field.type

minusSchemaDiff = set(featureServiceFields) - set(featureClassFields)

addSchemaDiff = set(featureClassFields) - set(featureServiceFields)

# Delete removed fields

if len(minusSchemaDiff) > 0:

print("Deleting removed fields")

for key in minusSchemaDiff:

print(f"\tDeleting field {key}")

remove_field = {

"name": key,

"type": featureServiceFields[key]

}

update_dict = {"fields": [remove_field]}

fLyr.manager.delete_from_definition(update_dict)

# Create additional fields

fieldTypeDict = {}

fieldTypeDict['Date'] = 'esriFieldTypeDate'

fieldTypeDict['Double'] = 'esriFieldTypeDouble'

fieldTypeDict['Integer'] = 'esriFieldTypeInteger'

fieldTypeDict['String'] = 'esriFieldTypeString'

if len(addSchemaDiff) > 0:

print("Adding additional fields")

for key in addSchemaDiff:

print(f"\tAdding field {key}")

if fieldTypeDict[featureClassFields[key]] == 'esriFieldTypeString':

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]],

"length": [field.length for field in arcpy.ListFields(fc, key)][0]

}

else:

new_field = {

"name": key,

"type": fieldTypeDict[featureClassFields[key]]

}

update_dict = {"fields": [new_field]}

fLyr.manager.add_to_definition(update_dict)

def divide_chunks(l, n):

'''

Args:

l: (list) list of unique IDs for features that have been deleted

n: (integer) number to iterate by

Returns:

'''

# looping till length l

for i in range(0, len(l), n):

yield l[i:i + n]

if __name__ == "__main__":

# Start Timer

startTime = time.time()

# Create GIS object

print("Connecting to AGOL")

gis = GIS("https://www.arcgis.com", username, password)

# Create UUID variable for GDB

gdbId = str(uuid.uuid1())

print("Creating temporary File Geodatabase")

gdb = arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, gdbId)[0]

# Export featureService classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print(f"Exporting {fcName} to temp FGD")

if featureService == True:

arcpy.conversion.FeatureClassToFeatureClass(fc, gdb, fcName)

elif hostedTable == True:

arcpy.conversion.TableToTable(fc, gdb, fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(gdb, gdb + ".zip")

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':gdbId, 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

root_folder = gis.content.folders.get()

fgd_item = root_folder.add(item_properties=fgd_properties, file=gdb + ".zip").result()

# Get featureService/hostedTable layer

serviceLayer = gis.content.get(fsItemId)

if featureService == True:

fLyr = serviceLayer.layers[layerIndex]

elif hostedTable == True:

fLyr = serviceLayer.tables[layerIndex]

# Append features from featureService class/hostedTable

if upsert == True:

# Check if unique field has index

indexedFields = []

for index in fLyr.manager.properties['indexes']:

indexedFields.append(index['fields'])

if uniqueField not in indexedFields:

print(f"{uniqueField} does not have unique index; creating")

fLyr.manager.add_to_definition({

"indexes": [

{

"fields": f"{uniqueField}",

"isUnique": True,

"description": "Unique field for upsert"

}

]

})

# Schema Sync

if updateSchema == True:

updateFeatureServiceSchema()

# Append features

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=True, upsert_matching_field=uniqueField, field_mappings=[])

# Delete features that have been removed from source

# Get list of unique field for feature class and feature service

entGDBList = [row[0] for row in arcpy.da.SearchCursor(fc, [uniqueField])]

fsList = [row[0] for row in arcpy.da.SearchCursor(fLyr.url, [uniqueField])]

s = set(entGDBList)

differences = [x for x in fsList if x not in s]

# Delete features in AGOL service that no longer exist

if len(differences) > 0:

print('Deleting differences')

if len(differences) == 1:

if type(differences[0]) == str:

features = fLyr.query(where=f"{uniqueField} = '{differences[0]}'")

else:

features = fLyr.query(where=f"{uniqueField} = {differences[0]}")

fLyr.edit_features(deletes=features)

else:

chunkList = list(divide_chunks(differences, 1000))

for list in chunkList:

chunkTuple = tuple(list)

features = fLyr.query(where=f'{uniqueField} IN {chunkTuple}')

fLyr.edit_features(deletes=features)

else:

# Schema Sync

if updateSchema == True:

updateFeatureServiceSchema()

# Truncate Feature Service

# If views exist, or disableSync = False use delete_features. OBJECTIDs will not reset

flc = arcgis.features.FeatureLayerCollection(serviceLayer.url, gis)

hasViews = False

try:

if flc.properties.hasViews == True:

print("Feature Service has view(s)")

hasViews = True

except:

hasViews = False

if hasViews == True or disableSync == False:

# Get Min OBJECTID

minOID = fLyr.query(out_statistics=[

{"statisticType": "MIN", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MINOID"}])

minOBJECTID = minOID.features[0].attributes['MINOID']

# Get Max OBJECTID

maxOID = fLyr.query(out_statistics=[

{"statisticType": "MAX", "onStatisticField": "OBJECTID", "outStatisticFieldName": "MAXOID"}])

maxOBJECTID = maxOID.features[0].attributes['MAXOID']

# If more than 2,000 features, delete in 2000 increments

print("Deleting features")

if maxOBJECTID != None and minOBJECTID != None:

if (maxOBJECTID - minOBJECTID) > 2000:

x = minOBJECTID

y = x + 1999

while x < maxOBJECTID:

query = f"OBJECTID >= {x} AND OBJECTID <= {y}"

fLyr.delete_features(where=query)

x += 2000

y += 2000

# Else if less than 2,000 features, delete all

else:

print("Deleting features")

fLyr.delete_features(where="1=1")

# If no views and disableSync is True: disable Sync, truncate, and then re-enable Sync. OBJECTIDs will reset

elif hasViews == False and disableSync == True:

if flc.properties.syncEnabled == True:

print("Disabling Sync")

properties = flc.properties.capabilities

updateDict = {"capabilities": "Query", "syncEnabled": False}

flc.manager.update_definition(updateDict)

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Enabling Sync")

updateDict = {"capabilities": properties, "syncEnabled": True}

flc.manager.update_definition(updateDict)

else:

print("Truncating Feature Service")

fLyr.manager.truncate()

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(gdb)

os.remove(gdb + ".zip")

endTime = time.time()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))

Updates

3/3/2023: Added the ability to add/remove fields from feature service keeping schemas in-sync. For example, if a field(s) is added/removed from the feature class, it will also add/remove the field(s) from the feature service

10/17/2024: Added upsert functionality. Deleted features/rows from the source feature class/table will also be deleted from feature service. This is helpful if you do not want your feature service truncated at all. In the event of a failed append, the feature service will still contain data. A prerequisite for this is for the data to have a unique id field.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

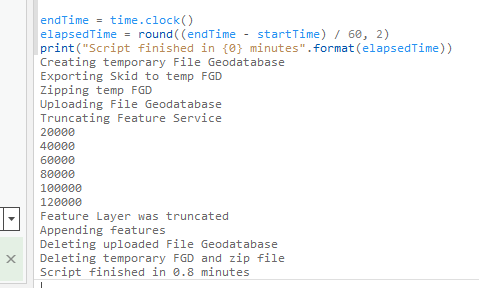

Thanks Jake, when i run it i get the following error

So I Frankensteined it a bit and it ran successfully

import arcpy, os, time

from zipfile import ZipFile

from arcgis.gis import GIS

arcpy.env.overwriteOutput = True

startTime = time.clock()

# Variables

username = "abc" # AGOL Username

password = ***** # AGOL Password

fc = r"L:\Data\Engineering.sde\Engineering.DBO.Roads_Skids\Engineering.DBO.Skid" # Path to Feature Class

fsItemId = "" # Feature Service Item ID to update

# Create GIS object

gis = GIS("https://www.arcgis.com", username, password)

# Function to Zip FGD

def zipDir(dirPath, zipPath):

zipf = ZipFile(zipPath , mode='w')

gdb = os.path.basename(dirPath)

for root, _ , files in os.walk(dirPath):

for file in files:

filePath = os.path.join(root, file)

zipf.write(filePath , os.path.join(gdb, file))

zipf.close()

print("Creating temporary File Geodatabase")

arcpy.CreateFileGDB_management(arcpy.env.scratchFolder, "TempGDB")

# Export feature classes to temporary File Geodatabase

fcName = os.path.basename(fc)

fcName = fcName.split('.')[-1]

print("Exporting {0} to temp FGD".format(fcName))

arcpy.conversion.FeatureClassToFeatureClass(fc, os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb"), fcName)

# Zip temp FGD

print("Zipping temp FGD")

zipDir(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb"), os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"))

# Check if FGD exists, if True, delete item

searchResults = gis.content.search('title:tempFGD AND owner:{0}'.format(username), item_type='File Geodatabase')

if len(searchResults) > 0:

item = searchResults[0]

item.delete()

# Upload zipped File Geodatabase

print("Uploading File Geodatabase")

fgd_properties={'title':'tempFGD', 'tags':'temp file geodatabase', 'type':'File Geodatabase'}

fgd_item = gis.content.add(item_properties=fgd_properties, data=os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"))

# Truncate Feature Service

print("Truncating Feature Service")

premiseLayer = gis.content.get(fsItemId)

fLyr = premiseLayer.layers[0]

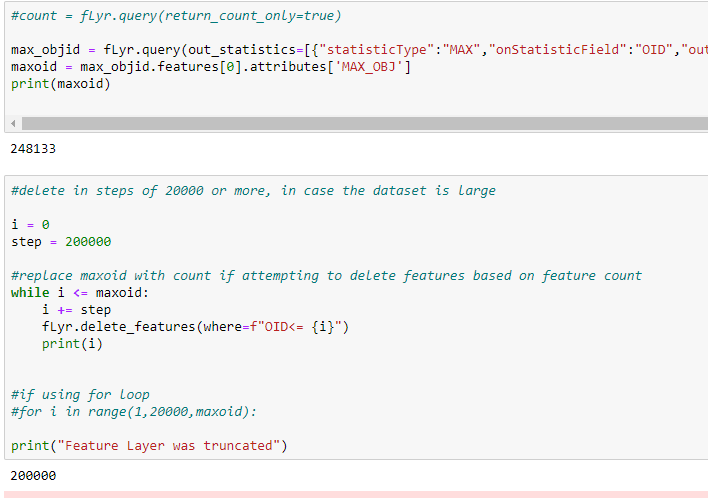

#count = fLyr.query(return_count_only=true)

max_objid = fLyr.query(out_statistics=[{"statisticType":"MAX","onStatisticField":"OID","outStatisticFieldName":"MAX_OBJ"}], return_geometry=False)

maxoid = max_objid.features[0].attributes['MAX_OBJ']

#delete in steps of 20000 or more, in case the dataset is large

i = 0

step = 20000

#replace maxoid with count if attempting to delete features based on feature count

while i <= maxoid:

i += step

fLyr.delete_features(where=f"OID<= {i}")

print(i)

#if using for loop

#for i in range(1,20000,maxoid):

print("Feature Layer was truncated")

# Append features from feature class

print("Appending features")

fLyr.append(item_id=fgd_item.id, upload_format="filegdb", upsert=False, field_mappings=[])

# Delete Uploaded File Geodatabase

print("Deleting uploaded File Geodatabase")

fgd_item.delete()

# Delete temporary File Geodatabase and zip file

print("Deleting temporary FGD and zip file")

arcpy.Delete_management(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb"))

os.remove(os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"))

endTime = time.clock()

elapsedTime = round((endTime - startTime) / 60, 2)

print("Script finished in {0} minutes".format(elapsedTime))

The only issue i found was that it would not work if the feature layer was empty which would be to do with the query. I would love to use truncate() but understand this is a logged bug in that it is turned off by default in the metadata on AGOL.

Cheers

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

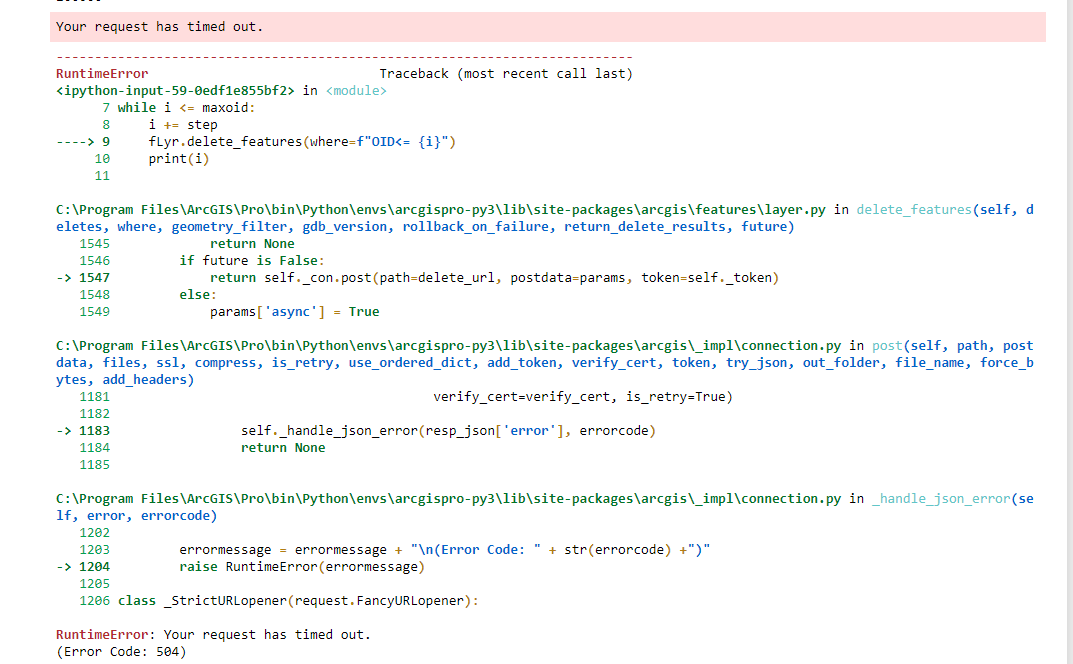

Jake Skinner just wondering if you have encountered runtime errors using delete features, the script runs through and deletes all features some times then other times it stops almost like its timed out

Cheers

Rory

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Rory Bennison I actually have never used ArcGIS API for Python's delete features, so I couldn't say. As an alternative, you can try using the arcpy's Delete Rows to see if this gives more consistent results. However, I've never tried using the Delete Rows on a hosted view, so not sure if that is supported.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

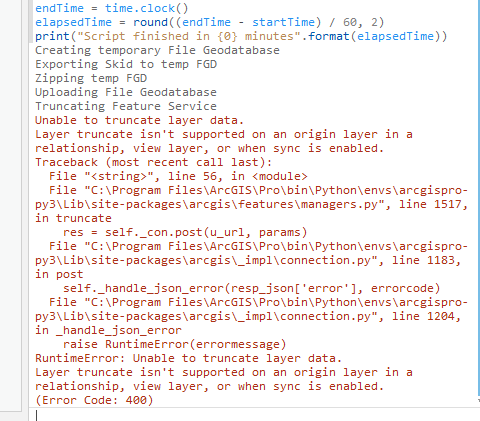

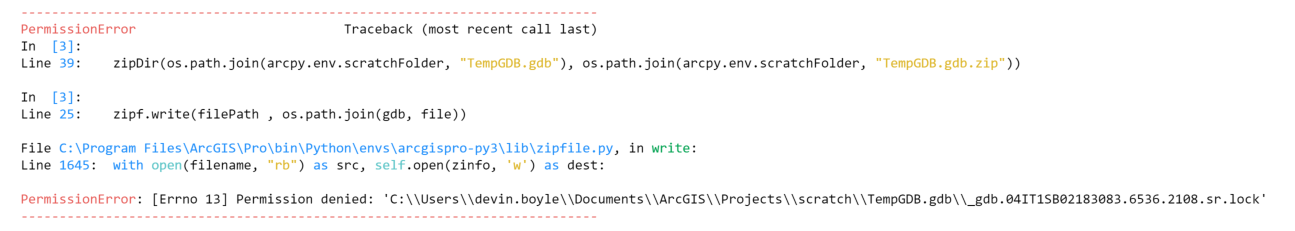

Admittedly I'm rather new with python but whenever I run this script using a layer coming directly off my desktop I get this error:

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Devin Boyle I went ahead and updated the code above. It should no longer try to zip the lock files in the File Geodatabase. Try the updated version.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

That did the trick. Thank you

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

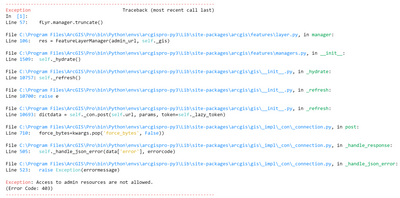

@JakeSkinner, this worked with a layer coming off of a GDB on my desktop, however when trying to do that same a SQL server geodatabase I keep receiving this error:

I am in fact the admin, so I'm unsure why this is happening.

Any help would be great, or a redirect to a more appropriate thread.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@DevinBoyle_PCMCdo you know what line of the script it's failing at? A 403 would indicate an unauthorized access to the feature service, but this should not matter if you're using a File Geodatabase or Enterprise Geodatabase feature class.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@DevinBoyle_PCMCit looks like it's failing on the truncate. Be sure you have the correct item id referenced in the variables. The below code should work if you are able to truncate the service:

from arcgis.gis import GIS

# Variables

username = "jskinner_CountySandbox" # AGOL Username

password = "********" # AGOL Password

fsItemId = "c0e41f90407043a2b4ae6b2761c496db" # Feature Service Item ID to update

# Create GIS object

gis = GIS("https://www.arcgis.com", username, password)

# Truncate Feature Service

featureLayer= gis.content.get(fsItemId)

fLyr = featureLayer.layers[0]

fLyr.manager.truncate()

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

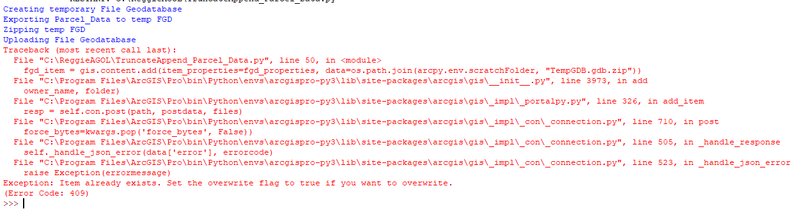

Tried moving my service over and getting an error, figured something was configured wrong but ran it on my old machine and it also wasn't working any more.

this is the error

Creating temporary File Geodatabase

Exporting Parcel_Data to temp FGD

Zipping temp FGD

Uploading File Geodatabase

Traceback (most recent call last):

File "C:\ReggieAGOL\TruncateAppend_Parcel_Data.py", line 50, in <module>

fgd_item = gis.content.add(item_properties=fgd_properties, data=os.path.join(arcpy.env.scratchFolder, "TempGDB.gdb.zip"))

File "C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\__init__.py", line 3973, in add

owner_name, folder)

File "C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_portalpy.py", line 326, in add_item

resp = self.con.post(path, postdata, files)

File "C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 710, in post

force_bytes=kwargs.pop('force_bytes', False))

File "C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 505, in _handle_response

self._handle_json_error(data['error'], errorcode)

File "C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\gis\_impl\_con\_connection.py", line 523, in _handle_json_error

raise Exception(errormessage)

Exception: Item already exists. Set the overwrite flag to true if you want to overwrite.

(Error Code: 409)

>>>

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Is it possible to automatically run the script every night, week etc.?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@SarahHartholt yes, take a look at the following document on how to do this:

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

searchResults = GIS.content.search('title: tempFGD AND owner:{0}'.format(username), item_type='File Geodatabase')

Traceback (most recent call last):

File "<string>", line 1, in <module>

AttributeError: 'property' object has no attribute 'search'

Any suggestions for the above error?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

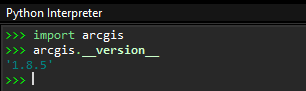

@akjones3 what version of the ArcGIS API for Python are you running? You can check by running the following:

import arcgis

arcgis.__version__

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner ArcGIS Pro "tasks" would work, right? I saw an Esri rep demo a few years ago, if I recall right, where he 'recorded' doing some actions and it automatically created the script. Can I hit Record, select a feature class in a local gdb, hit Share Web Layer to overwrite a hosted feature layer, and it will make the Task? It would record whatever publishing arameterization is set of course, as well. Then I can save the Task and schedule it with Windows Task Scheduler? If this is possible in theory, I will give this a try, before jumping into scripting.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@KevinMacLeodCAI no, I don't believe tasks will work. I'm not an expert, but from what I've seen and know, tasks simply provides a tutorial of the steps. It does not execute them, or create a script. Here is some further documentation on tasks:

https://pro.arcgis.com/en/pro-app/latest/help/tasks/create-a-simple-task.htm

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Ah, I see. I thought I recalled them showing recording actions, like a Macro from the MS Office of old. That would be so incredibly useful. I guess I can submit an idea for Tasks to 'record' actions and compile into a python script or model.

I was hopeful that automating the update of a hosted feature layer from local data would be more turnkey out of the box. Does it still require python, scripting, REST, etc? There's no "button" so to speak? Just wanted to check before a deep-dive, thanks! 🙂

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@KevinMacLeodCAI you would have 2 options:

1. Overwrite the hosted feature service

2. Use the script above. All you have to do is update the 4 variables:

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

I tried to run this script on ArcGIS Enterprise (portal). The script failed at Append.

Exception: Append only available on ArcGIS Online.

Do you have any suggestions or alternatives for Portal?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thank you, @JakeSkinner , for this thread--which is very helpful and goes a long way toward keeping data up-to-date.

I have found an intermittent problem when using this truncate-and-append approach. My script captures the result objects of truncate() and append() so that it can determine if the truncate() and append() are successful:

the_result = f_layer.manager.truncate()

#if the_result isn't {'success': True}, script handles the situation (re-tries or exits)

the_result = f_layer.append()

#if the_result isn't True, script handles the situation (re-tries or exits)

The script usually works great. However, sometimes--seemingly w/ a large dataset, the script doesn't complete the truncate() and append(), and disappears (crash and disappear). This only happens sometimes w/ the exact-same dataset. Sometimes it happens; sometimes it doesn't.

Could trying to capture the result objects from the truncate() and append() into variables be a factor (stressing the method)? Any advice?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@VCGIvanBrown for large datasets, I would recommend setting asynchronous to True for truncate. That should help avoid potential timeouts that might occur running synchronously, resulting in the behavior you're describing.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

i had the same issues with the truncate, i don't think it wanted to run on a feature with 12 million records so i followed the above suggestions of deleting it in small chunks but one extra step i did was once they were all deleted i then truncated the feature service without issue

i also kept getting the 504 errors so i introduced a try / except into the notebook which kept it running till it had finished

here's my code if anyone is interested

jump = 10000

while True:

try:

filterq = "OBJECTID <{0}".format(jump)

print(filterq)

la_df = lry.delete_features(where = filterq)

la_df

jump = jump + 10000

print(str(la_df).count("success"))

if jump > 13000000:

lry.manager.truncate()

break

except:

print("error")

Stu

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thank you @JakeSkinner for your attention to this issue. We are trying to use this method to refresh source layers that power an Insights dashboard. Unfortunately, when we update the feature layers (with geometry), the connection in our Insights notebook fails. Interestingly, it doesn't fail with all of the tables (without geometry) in the same hosted feature layer.

I assume this means that something in the truncate/append process is breaking the geometry linkages, since again Are there parameters we should be setting in the truncate/append process to preserve the linkages with Insights?

Thanks very much.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@StaciSutermaster I don't have much experience with Insights, but the script is simply performing a truncate/append. I'm not sure how this would affect anything with the Insights application. Does this occur for any feature service in any insight notebook?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thanks @JakeSkinner . It's also baffled us! It broke in our first notebook, and we created a second notebook to replace. It also broke there. So this seems like a platform-level issue rather than one with our specific (simple) notebooks. We haven't tried with multiple feature services, so it's possible that there's something peculiar with our source layer. But the fact that all feature classes within the layer would break is what led us to believe it could be an issue.

For the time being we've worked around the issue by using a public feature class & joining our custom data tables to its geometry within Insights. The table connections are still unaffected by the T&A process.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

This is fantastic, Jake @JakeSkinner . Thank you!! Worked on the first try.

I had/have a nasty Feature Class with dozens of domains and very complicated symbology (Feature Class came for an outside source). I tried using the FeatureSharingDraft class every way you can think of - and it was failing every time with a 999999 general function failure.

I think this just might do the trick.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Does your solution work when you have view layers and sync?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JonathanWhite1 I updated the code to work with hosted feature services that contain views, or have sync enabled.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner One last question.. I have a parcel layer and 3 related tables in a one to many relationship. Would I just use this code 4 times on the AGOL hosted feature layer, once for each layer/table [0], [1], [2], [3]?

I had things setup with delete rows and append in model builder but the ObjectID grew too large for the ObjectID field.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner I was able to get it to run by enabling Sync. That way the code would use delete_features instead of Truncate. The issue I keep running into is delete_features and ArcGIS Pro Delete Rows keeps the end of the previous OBJECTID order. With a large dataset the OBJECTID number becomes too large for the field. I don't see a way to reset OBJECTID back to 1 besides Truncate and Truncate will not work with sync, views or features with related data.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

I'm not sure why I'm getting this error. I'm logged into AGOL with the same credentials.

Exception: Unable to generate token.

Invalid username or password.

(Error Code: 400)

>>>

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@MikeOnzay your username is case sensitive. For example, my username is jskinner_CountySandbox. In a web browser I can log in using jskinner_countysandbox, but if I try that iteration of the username in the above code, it would fail.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

This is awesome!!!! However I'm also running into the problem mentioned above - the ever incrementing the OBJECTID sequence.

I need to reset the Objectid and restart from 0 every time I delete_features /append.

Does anyone know how I can do that (keeping Sync Enabled for Offline usage) in Python??

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JoabelBarbieri @JonathanWhite1

I've updated the script to disable sync, perform the truncate/append, and then re-enable sync once complete. There's is also a video of the workflow posted as well. This should eliminate the issue of OBJECTIDs growing too large.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner Thanks! Could I just leave the layer specification blank as in [] instead of [1] and the path to just the parent feature server if I have multiple tables within a hosted feature service? Would that preform the update on all the tables in the feature service?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JonathanWhite1 no, you would have to execute the script separately for each table.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner This is great! Thank you. It's already saved me a bunch of time and headache.

Do you know if there is anyway to do the truncate/append in a sort of transaction - so that it all completes or none of it completes? I'm thinking of a worst case scenario where the Truncate works perfectly, but append fails (maybe there's an internet outage at the exact perfectly wrong time). A similar use case might be that i am updating multiple layers within the same AGOL hosted feature service and one of the updates works but the other one does not. In that case, my data is out of sync with one sublayer updated and the other not.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@Trippetoe your best bet is to simply execute the tool again if it were to fail.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner my apologies for bugging you, but i'm not able to find the Help i'm looking for in the Esri documentation nor in the community website (highly possible i'm just not looking in the right place).

My call to the feature layer 'append()' function fails on a large-ish dataset (about 9K rows) that has attachments (mostly jpeg photos). Other feature layers without attachments work fine. So, i am assuming that there is a timeout issue happening, although the error message is not clear about what went wrong. So, i'm trying to use the 'Future' object option, assuming that's an async way of doing the append, but when i include that option in the append() arguments, per the API documentation, i get the error message

append() got an unexpected keyword argument 'future'

So, now i'm even more confused. Any thoughts on how i can extend the timeout window of the append call, or make the call asynchronously.

I am running the script in a Pro Notebook, version 2.8.6

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@Trippetoe can you share the service to an AGOL group and invite my account (jskinner_CountySandbox)? I will download the service, and publish to my AGOL org to try and reproduce.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Thanks @JakeSkinner . I added you to our orgs 'Esri Support Testing' group and named the file 'For Jake Skinner'.

Let me know if you can't access the file or have any questions about what's in there.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hey @JakeSkinner . I've spent some additional time poking around at the append() method, and it seems like append() doesnt work as expected with attachments - that is, the attachments aren't associated with their features.

I reduced the file geodatabase down to a much more manageable number of around 100 or so features and around 200 or so attachments. The append() operation works fine when using that file geodatabase EXCEPT that none of the features have attachments. I verified that file geodatabase export in the ArcGIS Pro project has the attachments. And i published the file geodatabase as a new hosted feature service in AGOL. The publishing operation worked as expected - the features had attachments.

I'm happy to talk via email if that works for you, or i can submit a Tech Support request if you think that's a better approach.

Thank you for your help.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@Trippetoe , you are right, the Append operation is not honoring attachments. This is my first attempt at using Append with attachments, and none came through. It would be worth logging a case with Tech Support. I'm not sure if this is a bug, or intended functionality. If the latter, hopefully this is in the road map.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner Thanks for the follow up. I suspected there was a "bug" with the attachments so i opened a tech support case late last week. If you want to follow along, it's case #03125736. I'll reply back here if i learn anything relevant.

Thanks again for all your help and sharing of code. I greatly appreciate it.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Can this script be set to update all layers and tables in a feature service? Or would the script have to be run independently for each item? For instance in my case I have a parcels feature layer and a hosted table in the feature service.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

This is awesome.

@JakeSkinner Any chance you can get it to preserve the time zone of date fields? If I publish from Pro I can set the time zone, but when I run this script they seem to wind up being in UTC.

Or is there a post process that can be run to update the service with the appropriate time zone?

Bruce

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@TK4211 looks like the time zone information is not maintained with the Append operation. There is an ArcGIS Idea posted about this.

I would recommend appending some code after the "Appending Features" portion to update the date field. In the below example, it's adding 4 hours to a date field:

from datetime import datetime, timedelta

with arcpy.da.UpdateCursor(fLyr.url, ['<date field>']) as cursor:

for row in cursor:

row[0] = row[0] + timedelta(hours=4)

cursor.updateRow(row)

del cursor

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

@JakeSkinner Thank you. This is a less than ideal solution. I'm assuming I'd have to account for daylight savings and add 6 or 7 hours depending on time of year.

When you publish it from Pro you have the option of setting a parameter. So a parameter must exist. Is there any way of updating that parameter after the service has been published? I tried messing around with the service definition but without much success.

I tried adding something like this:

{

"preferredTimeReference": {

"timeZone": "Mountain Standard Time",

"respectsDaylightSaving": True

}

}

I suppose if it were that easy, everyone would be doing it. 😉 Thank you and we'll work with this for now. And I'll follow the idea.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Really handy and will do nicely until ESRI fix the Truncate function for feature services!