The workspace Work.gdb does not exist. Failed to execute (JSONToFeatures).

I want to learn how to automatically update real-time data with Python by following the Esri tutorial (https://learn.arcgis.com/en/projects/update-real-time-data-with-python/), but I've run into some trouble. The error and the script I wrote are below. Here is my script:

    import sys, os, tempfile, json, logging, arcpy, shutil
    import datetime as dt
    from urllib import request
    from urllib.error import URLError

    def feedRoutine (url, workGDB, liveGDB):
        # Log file
        logging.basicConfig(filename="coral_reef_exercise.log", level=logging.INFO)
        log_format = "%Y-%m-%d %H:%M:%S"
        # Create workGDB and default workspace
        print("Starting workGDB...")
        logging.info("Starting workGDB... {0}".format(dt.datetime.now().strftime(log_format)))
        arcpy.env.workspace = workGDB
        gdb_name = os.path.basename(workGDB)
        if arcpy.Exists(arcpy.env.workspace):
            for feat in arcpy.ListFeatureClasses ("alert_*"):
                arcpy.management.Delete(feat)
        else:
            arcpy.management.CreateFileGDB(os.path.dirname(workGDB), os.path.basename(workGDB))
        # Download and split json file
        print("Downloading data...")
        logging.info("Downloading data... {0}".format(dt.datetime.now().strftime(log_format)))
        temp_dir = tempfile.mkdtemp()
        filename = os.path.join(temp_dir, 'latest_data.json')
        try:
            response = request.urlretrieve(url, filename)
        except URLError:
            raise Exception("{0} not available. Check internet connection or url address".format(url))
        with open(filename) as json_file:
            data_raw = json.load(json_file)
            data_stations = dict(type=data_raw['type'], features=[])
            data_areas = dict(type=data_raw['type'], features=[])
        for feat in data_raw['features']:
            if feat['geometry']['type'] == 'Point':
                data_stations['features'].append(feat)
            else:
                data_areas['features'].append(feat)
        # Filenames of temp json files
        stations_json_path = os.path.join(temp_dir, 'points.json')
        areas_json_path = os.path.join(temp_dir, 'polygons.json')
        # Save dictionaries into json files
        with open(stations_json_path, 'w') as point_json_file:
            json.dump(data_stations, point_json_file, indent=4)
        with open(areas_json_path, 'w') as poly_json_file:
            json.dump(data_areas, poly_json_file, indent=4)
        # Convert json files to features
        print("Creating feature classes...")
        logging.info("Creating feature classes... {0}".format(dt.datetime.now().strftime(log_format)))
        arcpy.conversion.JSONToFeatures(stations_json_path, os.path.join(gdb_name, 'alert_stations'))
        arcpy.conversion.JSONToFeatures(areas_json_path, os.path.join(gdb_name, 'alert_areas'))
        # Add 'alert_level ' field
        arcpy.management.AddField(os.path.join(gdb_name, "alert_stations"), "alert_level", "SHORT", field_alias="Alert Level")
        arcpy.management.AddField(os.path.join(gdb_name, "alert_areas"), "alert_level", "SHORT", field_alias="Alert Level")
        # Calculate 'alert_level ' field
        arcpy.management.CalculateField(os.path.join(gdb_name, "alert_stations"), "alert_level", "int(!alert!)")
        arcpy.management.CalculateField(os.path.join(gdb_name, "alert_areas"), "alert_level", "int(!alert!)")
        # Deployment Logic
        print("Deploying...")
        logging.info("Deploying... {0}".format(dt.datetime.now().strftime(log_format)))
        deployLogic(workGDB, liveGDB)
        # Close Log File
        logging.shutdown()
        # Return
        print("Done!")
        logging.info("Done! {0}".format(dt.datetime.now().strftime(log_format)))
        return True

    def deployLogic(workGDB, liveGDB):
        for root, dirs, files in os.walk(workGDB, topdown=False):
            files = [f for f in files if '.lock' not in f]
            for f in files:
                shutil.copy2(os.path.join(workGDB, f), os.path.join(liveGDB, f))

    if __name__ == "__main__":
        [url, workGDB, liveGDB] = sys.argv[1:]
        feedRoutine (url, workGDB, liveGDB)

Here is the error message:

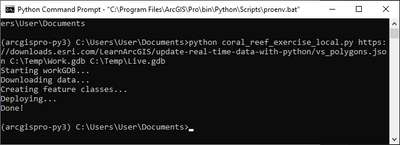

    (arcgispro-py3) C:\Users\User\Documents>python coral_reef_exercise_local.py https://downloads.esri.com/LearnArcGIS/update-real-time-data-with-python/vs_polygons.json C:\Temp\Work.gdb C:\Temp\Live.gdb
    Starting workGDB...
    Downloading data...
    Creating feature classes...
    Traceback (most recent call last):
      File "coral_reef_exercise_local.py", line 80, in <module>
        feedRoutine (url, workGDB, liveGDB)
      File "coral_reef_exercise_local.py", line 50, in feedRoutine
        arcpy.conversion.JSONToFeatures(stations_json_path, os.path.join(gdb_name, 'alert_stations'))
      File "C:\Program Files\ArcGIS\Pro\Resources\ArcPy\arcpy\conversion.py", line 576, in JSONToFeatures
        raise e
      File "C:\Program Files\ArcGIS\Pro\Resources\ArcPy\arcpy\conversion.py", line 573, in JSONToFeatures
        retval = convertArcObjectToPythonObject(gp.JSONToFeatures_conversion(*gp_fixargs((in_json_file, out_features, geometry_type), True)))
      File "C:\Program Files\ArcGIS\Pro\Resources\ArcPy\arcpy\geoprocessing\_base.py", line 512, in <lambda>
        return lambda *args: val(*gp_fixargs(args, True))
    arcgisscripting.ExecuteError: ERROR 999999: Something unexpected caused the tool to fail. Contact Esri Technical Support (http://esriurl.com/support) to Report a Bug, and refer to the error help for potential solutions or workarounds.
    CreateFeatureClassName: The workspace Work.gdb does not exist.
    Failed to execute (JSONToFeatures).

I would be very grateful if someone could help me with this problem. Thank you.

Accepted Solutions

I'm not sure why you're creating a path such as

    os.path.join(gdb_name, 'alert_stations')

when the geodatabase is already set as your workspace. Just try writing to the workspace without specifying the workspace:

    arcpy.conversion.JSONToFeatures(stations_json_path, 'alert_stations')

Do the same for any similar lines, i.e. stop joining gdb_name to a path.
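To see why the joined path is a problem, here is a small sketch of the path arithmetic only, using the workGDB value from the command line in the question. It uses the standard-library ntpath module so that Windows path rules apply on any OS. The names relative_target and absolute_target are made up for illustration. How arcpy resolves a relative output path is not shown here. The point is only that Work.gdb\alert_stations no longer says where Work.gdb lives, which is consistent with the "workspace Work.gdb does not exist" message.

```python
import ntpath  # Windows path rules, whatever OS this runs on

work_gdb = r"C:\Temp\Work.gdb"        # the workGDB argument from the command line
gdb_name = ntpath.basename(work_gdb)  # what the script stores in gdb_name

# What the script passes as the output feature class: a relative path.
relative_target = ntpath.join(gdb_name, "alert_stations")

# Hypothetical alternative: a full path that does not depend on the workspace.
absolute_target = ntpath.join(work_gdb, "alert_stations")

print(gdb_name)                       # Work.gdb
print(relative_target)                # Work.gdb\alert_stations
print(ntpath.isabs(relative_target))  # False
print(absolute_target)                # C:\Temp\Work.gdb\alert_stations
print(ntpath.isabs(absolute_target))  # True
```

So there are two ways out: pass just 'alert_stations' and let the workspace supply the geodatabase, or join against workGDB instead of gdb_name.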

Have you created a workspace under that name?

Can you give me an example?

You've specified a workspace path via sys.argv for main() that looks like C:\Temp\Work.gdb; maybe it has to be created first (I'm not a fan of reading screenshotted code, so I can't tell).

But Work.gdb appears automatically when I run the previous script:

It's still a mess to read; look at the code formatting options.

At this moment I can only guess at

    arcpy.conversion.JSONToFeatures(stations_json_path, os.path.join(gdb_name, 'alert_stations'))
    arcpy.conversion.JSONToFeatures(areas_json_path, os.path.join(gdb_name, 'alert_areas'))

being a potential issue, as you already have a workspace set, and gdb_name isn't a full path.

Also, in place of

    if arcpy.Exists(arcpy.env.workspace):

Exists(workGDB) may be more logical. It mitigates unintended effects such as env.workspace defaulting to something else if workGDB doesn't exist, messing up your logic.
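A minimal sketch of that check, assuming only the tool calls the original script already uses (arcpy.Exists, CreateFileGDB, ListFeatureClasses, Delete). The function name prepare_work_gdb is hypothetical, and arcpy is imported inside the function only so the snippet can be loaded on a machine without ArcGIS Pro. The `or []` guard is a precaution in case ListFeatureClasses returns nothing usable.

```python
import os


def prepare_work_gdb(work_gdb):
    """Create work_gdb if it is missing, then clear old alert_* feature classes."""
    import arcpy  # local import: an assumption made for this sketch only

    # Test the path that was passed in, not arcpy.env.workspace.
    if not arcpy.Exists(work_gdb):
        arcpy.management.CreateFileGDB(
            os.path.dirname(work_gdb), os.path.basename(work_gdb)
        )

    # Point the workspace at the geodatabase once it is known to exist.
    arcpy.env.workspace = work_gdb

    # Remove feature classes left over from a previous run.
    for feat in arcpy.ListFeatureClasses("alert_*") or []:
        arcpy.management.Delete(feat)
```

It would be called once at the top of feedRoutine, e.g. prepare_work_gdb(workGDB), before any JSONToFeatures call.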

I'm sorry, I'm a beginner at programming languages, especially Python. When I follow your suggestion, the result is still an error:

    import sys, os, tempfile, json, logging, arcpy, shutil
    import datetime as dt
    from urllib import request
    from urllib.error import URLError

    def feedRoutine (url, workGDB, liveGDB):
        # Log file
        logging.basicConfig(filename="coral_reef_exercise.log", level=logging.INFO)
        log_format = "%Y-%m-%d %H:%M:%S"
        # Create workGDB and default workspace
        print("Starting workGDB...")
        logging.info("Starting workGDB... {0}".format(dt.datetime.now().strftime(log_format)))
        arcpy.env.workspace = workGDB
        gdb_name = os.path.basename(workGDB)
        if arcpy.Exists(arcpy.env.workspace):
            for feat in arcpy.ListFeatureClasses ("alert_*"):
                arcpy.management.Delete(feat)
        else:
            arcpy.management.CreateFileGDB(os.path.dirname(workGDB), os.path.basename(workGDB))
        # Download and split json file
        print("Downloading data...")
        logging.info("Downloading data... {0}".format(dt.datetime.now().strftime(log_format)))
        temp_dir = tempfile.mkdtemp()
        filename = os.path.join(temp_dir, 'latest_data.json')
        try:
            response = request.urlretrieve(url, filename)
        except URLError:
            raise Exception("{0} not available. Check internet connection or url address".format(url))
        with open(filename) as json_file:
            data_raw = json.load(json_file)
            data_stations = dict(type=data_raw['type'], features=[])
            data_areas = dict(type=data_raw['type'], features=[])
        for feat in data_raw['features']:
            if feat['geometry']['type'] == 'Point':
                data_stations['features'].append(feat)
            else:
                data_areas['features'].append(feat)
        # Filenames of temp json files
        stations_json_path = os.path.join(temp_dir, 'points.json')
        areas_json_path = os.path.join(temp_dir, 'polygons.json')
        # Save dictionaries into json files
        with open(stations_json_path, 'w') as point_json_file:
            json.dump(data_stations, point_json_file, indent=4)
        with open(areas_json_path, 'w') as poly_json_file:
            json.dump(data_areas, poly_json_file, indent=4)
        # Convert json files to features
        print("Creating feature classes...")
        logging.info("Creating feature classes... {0}".format(dt.datetime.now().strftime(log_format)))
        arcpy.conversion.JSONToFeatures(stations_json_path, os.path.join(gdb_name, 'alert_stations'))
        arcpy.conversion.JSONToFeatures(areas_json_path, os.path.join(gdb_name, 'alert_areas'))
        # Add 'alert_level ' field
        arcpy.management.AddField(os.path.join(gdb_name, "alert_stations"), "alert_level", "SHORT", field_alias="Alert Level")
        arcpy.management.AddField(os.path.join(gdb_name, "alert_areas"), "alert_level", "SHORT", field_alias="Alert Level")
        # Calculate 'alert_level ' field
        arcpy.management.CalculateField(os.path.join(gdb_name, "alert_stations"), "alert_level", "int(!alert!)")
        arcpy.management.CalculateField(os.path.join(gdb_name, "alert_areas"), "alert_level", "int(!alert!)")
        # Deployment Logic
        print("Deploying...")
        logging.info("Deploying... {0}".format(dt.datetime.now().strftime(log_format)))
        deployLogic(workGDB, liveGDB)
        # Close Log File
        logging.shutdown()
        # Return
        print("Done!")
        logging.info("Done! {0}".format(dt.datetime.now().strftime(log_format)))
        return True

    def deployLogic(workGDB, liveGDB):
        for root, dirs, files in os.walk(workGDB, topdown=False):
            files = [f for f in files if '.lock' not in f]
            for f in files:
                shutil.copy2(os.path.join(workGDB, f), os.path.join(liveGDB, f))

    if __name__ == "__main__":
        [url, workGDB, liveGDB] = sys.argv[1:]
        feedRoutine (url, workGDB, liveGDB)

Thank you for your help, now the problem is solved.