Re: Running a model outside of ArcGIS Pro?

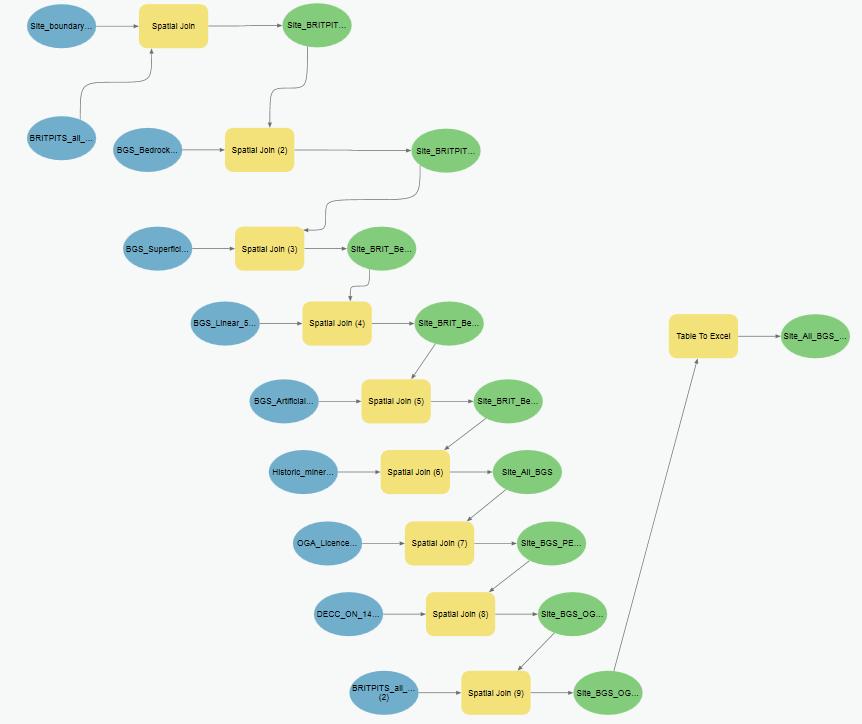

Hi, I have an ArcGIS Pro model which takes a polygon shapefile (Site_boundary_master) and spatially joins various layers to it, then exports the final output to an Excel spreadsheet:

All the joining layers are kept in various File Geodatabases.

I saved the model as 'AutomationModel' (but in the properties dialogue for it, it's called 'Model222'... wtf?) in a toolbox called 'Automation.tbx'.

Running the model from Model Builder works fine.

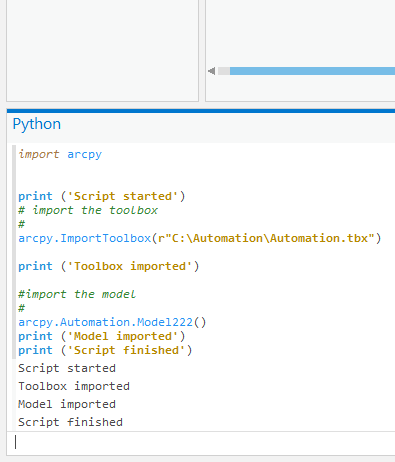

I then created a .py Python file (from a blank .txt file) and wrote a script to run the model like this:

    import arcpy

    print('Script started')

    # import the toolbox
    arcpy.ImportToolbox(r"C:\Automation\Automation.tbx")
    print('Toolbox imported')

    # run the model
    arcpy.Automation.Model222()
    print('Model imported')

    print('Script finished')

When I paste this code into the ArcGIS Pro Python console, it works fine. Again.

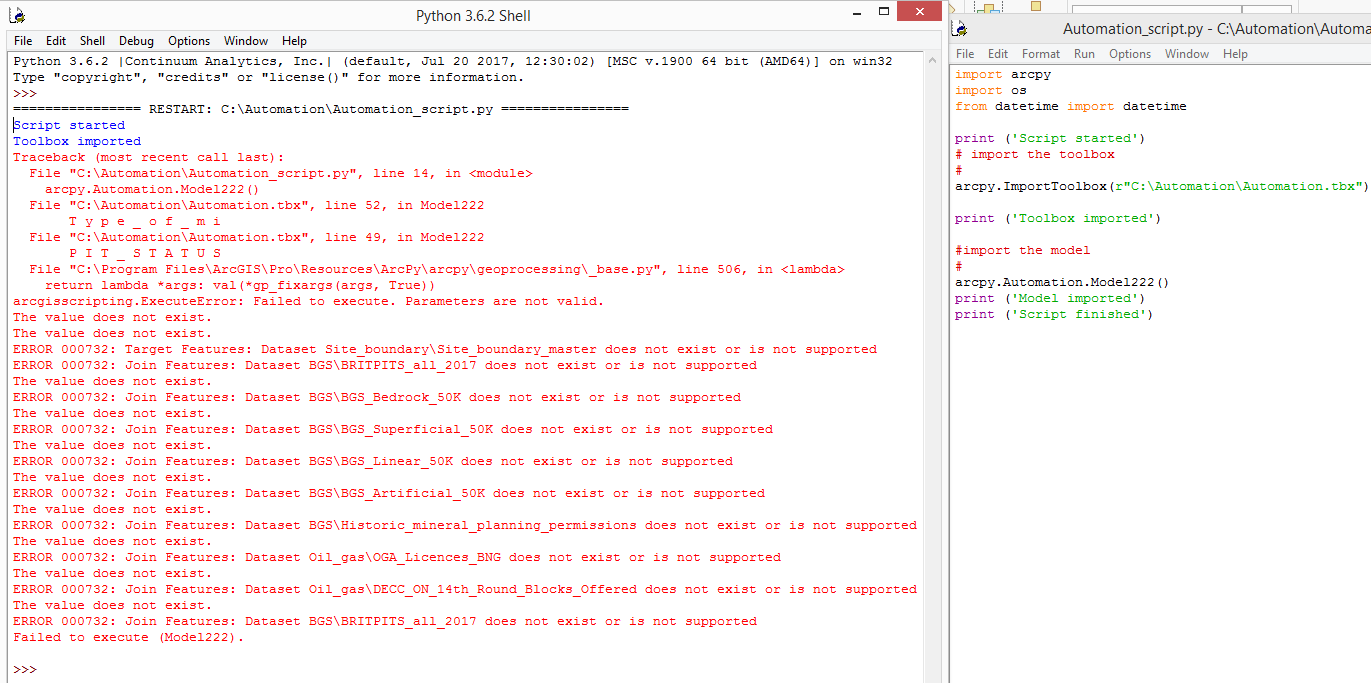

However, when I edit the .py file in IDLE (ArcGIS Pro) and then run the script, it doesn't work at all:

Can someone help me fix this? Is it because I need to tell the script where all the input layers are stored? If so, how would I write this? I'm still a novice with Python.

Thank you!

Accepted Solutions

Yes. When the model and script run outside ArcGIS Pro, they have no clue where the data are. If the data reside in one folder, set the workspace:

    arcpy.env.workspace = r"C:\folder\containing\the_data"

Using a geodatabase would make things easier as well.

Thanks Dan, I'll try setting the environment.

Some of the joining layers are in shapefile format (they need to be, for reasons too long to go into here!) but most of the data (apart from the original target layer for the join) are in geodatabases. Each geodatabase is in its own folder.

So is it possible to set the workspace to multiple GDBs?

Thanks Dan.
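
On the multiple-geodatabase question: `arcpy.env.workspace` holds a single path, so one common approach is to leave it alone and hand each tool the full path to its dataset. A minimal sketch of building those paths follows. The folder, geodatabase, and feature class names are hypothetical placeholders (only `Site_boundary_master` appears in this thread), and `full_path` is an illustrative helper, not part of arcpy.

```python
from pathlib import PureWindowsPath

# Hypothetical locations: one folder per geodatabase, plus a shapefile folder.
GDB_PLANNING = PureWindowsPath(r"C:\Data\Planning\Planning.gdb")
GDB_ENVIRONMENT = PureWindowsPath(r"C:\Data\Environment\Environment.gdb")
SHAPEFILE_DIR = PureWindowsPath(r"C:\Data\Shapefiles")


def full_path(container, name):
    """Return the full path of a dataset inside a geodatabase or folder."""
    return str(PureWindowsPath(container) / name)


# Full paths like these can be given to tools regardless of the workspace.
join_layers = [
    full_path(GDB_PLANNING, "Conservation_Areas"),
    full_path(GDB_ENVIRONMENT, "Flood_Zones"),
    full_path(SHAPEFILE_DIR, "Site_boundary_master.shp"),
]

for layer in join_layers:
    print(layer)
```

Keeping the locations as named constants at the top of the script means a moved geodatabase only needs one line changed.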

I would check the model to see whether full paths are set for the files; otherwise you may have to set the inputs as parameters so that the user can navigate to them. If the model is converted to a script, you will probably find they are set that way. If you intend to use the model with data in the exact same location, you may not have issues. However, if you create a model and intend to distribute it for others to use, they would have to use the same folder structure. This is where models are good for getting an idea of the workflow, but they really need to be used as a 'shell' and edited as a script tool, so you can generalize the location of data inputs and outputs and have them found 'relative to' the location of the script tool.

The paths are referring to the layers in the TOC. I should have set the model paths to look directly at the files/FCs when I created the Model.

The data should never move from its current location, so that's not an issue.

The model will only run from my machine.

It's a shame I can't export an ArcGIS Pro model to python script like I could in ArcMap. It would make it quicker to re-source all my data sources.

If I have a script and I package data with it and the toolbox, I often put the data folder and the gdb in a subfolder of the script's folder. When you run the script (or script tool), you can get the path to the data 'relative to' the script location by exploiting the fact that the first system argument, sys.argv[0], is the script that is running. In the example below, I ran the script and the data were located in a subfolder relative to the script location.

    import sys

    script = sys.argv[0]  # print this should you need to locate the script
    # script -> 'C:/Git_Dan/arraytools/tools.py'

    files = script.replace("tools.py", "Data/numpy_demos.gdb")
    # files -> 'C:/Git_Dan/arraytools/Data/numpy_demos.gdb'
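
A variation on the same idea, sketched with `pathlib` instead of `str.replace`, so it does not depend on knowing the script's file name. The helper name `data_path` is hypothetical, and `PureWindowsPath` is used only so the example behaves the same on any machine; in a real script you would probably pass `sys.argv[0]` as the first argument.

```python
from pathlib import PureWindowsPath


def data_path(script, *parts):
    """Build a path relative to the folder that contains `script`."""
    return PureWindowsPath(script).parent.joinpath(*parts)


# The script location from the post above stands in for sys.argv[0].
gdb = data_path("C:/Git_Dan/arraytools/tools.py", "Data", "numpy_demos.gdb")
print(gdb.as_posix())  # C:/Git_Dan/arraytools/Data/numpy_demos.gdb
```

Taking the parent folder rather than replacing the file name means the script can be renamed without breaking the lookup.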

Neat trick! I'll look to implement that going forward.

So changing all of the model's targets and outputs to direct source locations (as opposed to TOC layers) has fixed this for me.

FYI, my final script, which runs my model, looks like this:

    import arcpy
    import os
    from datetime import datetime

    print('Script started')

    # import the toolbox
    arcpy.ImportToolbox(r"C:\Automation\Automation.tbx")
    print('Toolbox imported')

    # run the model
    arcpy.Automation.Model()
    print('Model imported')

    print('Script finished')

I run this via task scheduler overnight, outside of ArcGIS Pro, and it works fine.
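
Since nobody is watching the console during an overnight run, the `print` messages are easy to lose. A minimal sketch of one way to keep a record instead, assuming a hypothetical log file location and using a plain callable as a stand-in for the model call (in the real script that would be the toolbox import and model call shown above). The function name `run_logged` is illustrative only.

```python
import logging
import os
import tempfile


def run_logged(task, log_file):
    """Run `task` (any callable) and record start, finish, or failure in log_file."""
    logger = logging.getLogger("automation")
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(log_file)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    try:
        logger.info("Script started")
        task()
        logger.info("Script finished")
        return True
    except Exception:
        # logger.exception also writes the traceback to the log file
        logger.exception("Model run failed")
        return False
    finally:
        logger.removeHandler(handler)
        handler.close()


# Hypothetical log location; the lambda stands in for the real model call.
log_path = os.path.join(tempfile.gettempdir(), "automation_run.log")
ok = run_logged(lambda: None, log_path)
print("Succeeded" if ok else "Failed", "- see", log_path)
```

The log file is opened in append mode, so each night's run adds to the same file with its own timestamps.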

Good... I suspect it works because everything is 'relative' to the toolbox location. Don't move anything unless you modify the hardcoded paths to the data, though (use my tip if you go to scripts).