- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- Re: Python Script - How to Define Query after extr...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Python Script - How to Define Query after extract it all from zipped folder??

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hello,

I have ArcGIS Pro 2.7.1 and ArcMap 10.7.1 along with python 2.7 and 3x and model builder. I have a python script but I am missing something. In script after shapefiles/GDB/feature classes extract it all from zipped folder, how do I define query in some specific feature classes? I can provide more info if needed. Please kindly advise.

Thank you,

Alex

#Create the table

try:

table_stm = """DROP TABLE sde.TEST."""+table_name+""" """

cursor.execute(table_stm)

connection.commit()

print table_name+' Table Deleted'

except Exception as crt:

print str(crt)

try:

table_stm = """CREATE TABLE sde.TEST."""+table_name+"""("""+columns+""") """

cursor.execute(table_stm)

connection.commit()

print table_name+' Table created'

except Exception as crt:

print str(crt)

#Read lines and Insert records

inserted_records = 0

line_id = 0

breakLoop = 0

for line in open(data_filename, 'r'):

line_string = line.rstrip() # strip off newline and any other trailing whitespace

values = line_string.split("|")

#if breakLoop:

#break

if len(values) > 0:

if values[0].strip()[:2] == '35':

breakLoop = 1

#values.pop()

#for each value in the file line

for i in xrange(len(values)):

#_q = ", ".join(["%s"] * len(values))

col_type = types[i]

#value = "'"+values[i].strip()+"'"

value = values[i].strip()

if value == ".0" or value == ".00":

value = "0"

#if value == " " or value == "":

#value = NULL

# if 'NUMERIC' in col_type:

# if value == " " or value == "":

#value = "null"

# else:

# value = 'CONVERT('+col_type+','+value+')'

#else:

value = "'"+value.replace("'","''")+"'"

values[i] = value

try:

values_string = ','.join(values)

SQLCommand = ("INSERT INTO sde.TEST."+table_name+" VALUES ("+values_string+")")

line_id = line_id + 1

cursor.execute(SQLCommand)

connection.commit()

inserted_records = inserted_records + 1

except Exception as inst:

log_write(log_file, '..Error inserting record in'+table_name+':'+str(line_id) +' Command :'+SQLCommand)

except Exception as inst:

log_write(log_file, '...Error processing '+data_filename+' file:'+str(inst))

else:

connection.commit()

cursor.close()

connection.close()

log_write(log_file, '.Finished processing '+data_filename+' file. Total records inserted:'+str(inserted_records))

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

That script will throw errors in python 3 which is used by arcgis pro.

What errors did you get?

... sort of retired...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

What's your end game here? It looks like you are using some SQL cursors to create some tables and insert some records yet you mention making queries on either shapefiles or fgdb feature classes after extracting them from a zipfile.

Start with the basics like:

1. I want to .....

2. Then I want to.....

3. Once that is done, I want to....

As Dan alludes to, you need to pick which platform you want this to execute in: ArcMap Python, ArcMap Model Builder, ArcGIS Pro Python, ArcGIS Pro Model Builder. Until you cover the basics it's kind of tough to offer any sort of advice or solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Ok thank you i am checking on my end and will get it back.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

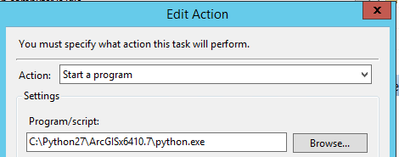

@JoeBorgione @DanPatterson in this case, please screenshot. I hope this is answer your question. have sql server. It is extract to sde. The goal here is to define a query feature class, for example= this field needs to be define query where FIELD1= HELLO then apply a result just like in ArcMap. I just not sure what to write for that in python script standalone.

Also, because original it is fgdb. that part is working fine except either original .dat/.txt isn't update to sde table. it used to work and now it stopped working. the zipped folder included other files such as .dat and .txt plus fgdb. i took out of this piece of script . because the script is very long.

##### Parse and Copy from .dat file ######

if COPY_PARCELDATA_DAT == "YES":

#Path to the *.dat

for k, v in MDOtherFiles.items():

if '.txt' in k:

conf_filename = server_processing_directory + "\\" + "MDShapes\\PA_Data\\"+k

data_filename = server_processing_directory + "\\" + "MDShapes\\PA_Data\\"+k.replace(".txt", ".dat")

table_name = k.replace(".txt","").strip()

table_name = table_name.replace(".dat","").strip()

#print table_name

#start_time = str(datetime.datetime.now()) # get the current date & time

log_write(log_file, '.Started processing '+data_filename+' file')

columns = ''

types = []

try:

with open(conf_filename, 'r') as f:

for index, line in enumerate(f):

#replace datatypes

line = line.strip()

#line = line.replace('VARCHAR2', 'VARCHAR')

#line = line.replace('NUMBER', 'NUMERIC')

types.append(line.split(" ")[1].strip())

columnName = line.split(" ")[0].strip()

if index >= 1:

columns = columns +','+ columnName +' varchar(100) NULL'

else:

columns = columns + columnName +' varchar(100) NULL'

#print columns

connection = pypyodbc.connect('Driver={SQL Server};'

'Server=test;'

'Database=test;'

'uid=test;pwd=test')

cursor = connection.cursor()