- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- Re: Polyline NumPy Array to Pandas DataFrame: StEl...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Polyline NumPy Array to Pandas DataFrame: StElevation and EndElevation?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I have converted a feature class (polylines) to a numpy array and have expoded the polylines to vertices using the "explode_to_points" method. I would like to convert the output numpy array to a pandas dataframe that will replicate the line segments that make up the polyline so that I land up with the following columns:

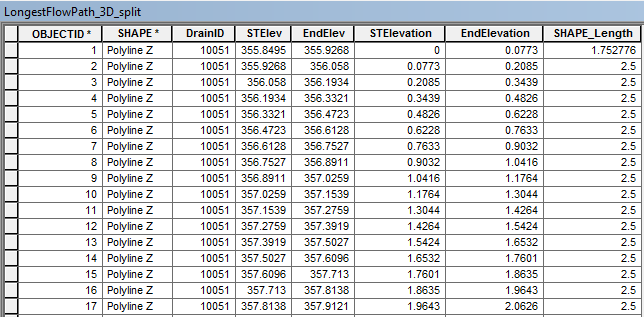

The table above was achieved by using "Split Line At Vertices". The fields "STElev" and "EndElev" are the Start Elevation and End Elevation of the line segments determined using "Calculate Geometry". The field "STElevation" and "EndElevation" is the change in elevation determined by subtracting the elevation from the first "STElev" which in this case is 355.8495.

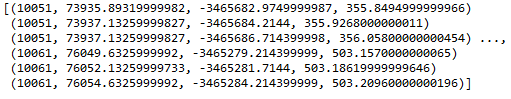

The output numpy array from converting my feature class (polylines) and exploding the features to vertices is:

The values are:

[(DrainID, X, Y, Z)....] for each vertice

'''

Created on 01 Jul 2017

@author: PeterW

'''

# import modules and site-packages

import numpy as np

import pandas as pd

from pathlib import Path

import arcpy

# set environment settings

arcpy.env.overwriteOutput = True

# check out extensions

arcpy.CheckOutExtension("3D")

def longestflowpath_3d(dtm, lfp_2d):

"""Generate LongestFlowPath 3D

from LongestFlowPath 2D and DTM"""

output_gdb = str(Path(lfp_2d).parents[0])

lfp_3d = "{0}\\{1}".format(output_gdb, "lfp_3d")

lfp_3d = arcpy.InterpolateShape_3d(dtm, lfp_2d, lfp_3d, vertices_only=True)

arcpy.FlipLine_edit(lfp_3d)

return lfp_3d

def area_under_profile(lfp_3d):

"""Determine the Equal Area Slope

for each Drainage Area's Longest

Flowpath"""

arr = arcpy.da.FeatureClassToNumPyArray(lfp_3d, ["DrainID", "SHAPE@X", "SHAPE@Y", "SHAPE@Z"], explode_to_points=True) # @UndefinedVariable

print(arr)

def equal_area_slope(dtm, lfp_2d):

"""Determine the Equal Area Slope

for each Drainage Area's Longest

Flowpath"""

lfp_3d = longestflowpath_3d(dtm, lfp_2d)

area_under_profile(lfp_3d)

if __name__ == "__main__":

dtm = r"E:\Projects\2016\G113665\geoHydro\DTM2\raw1"

lfp_2d = r"E:\Projects\2016\G113665\geoHydro\Model02\Results.gdb\LongestFlowPath_2D_Orig"

equal_area_slope(dtm, lfp_2d)My current Python Module

How can I convert the current numpy array to a pandas dataframe that will restructure the vertices (DrainID, X, Y, Z) to (DrainID, STElevation (change in elevation), EndElevation (change in elevation), DistM (Length of segment)?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Peter ...and from the world of numpy... can be greatly simplified if needed. Values can be tied to segment lengths, but I will save that for a blog.

"""

:polyline_demo.py

"""

import numpy as np

import arcpy

ft = {'bool': lambda x: repr(x.astype('int32')),

'float': '{: 0.2f}'.format}

np.set_printoptions(edgeitems=3, linewidth=80, precision=3,

suppress=True, threshold=100, formatter=ft)

def e_leng(a):

"""Length/distance between points in an array using einsum

: details in one of my blogs... this can be simplified to 4 lines for 2D

:-----------------------------------------------------------------------

"""

def cal(diff):

""" magic happens for 1D, 2D, 3D and 4D arrays...

"""

d_arr = np.sqrt(np.einsum('ijk,ijk->ij', diff, diff))

d_leng = d_arr.flatten()

length = np.sum(d_leng)

return length, d_leng

# ----

diffs = []

a = np.atleast_2d(a)

if a.shape[0] == 1:

return 0.0

if a.ndim == 2:

a = np.reshape(a, (1,) + a.shape)

if a.ndim == 3:

diff = a[:, 0:-1] - a[:, 1:]

length, d_leng = cal(diff)

diffs.append(d_leng)

if a.ndim == 4:

length = 0.0

for i in range(a.shape[0]):

diff = a[i][:, 0:-1] - a[i][:, 1:]

leng, d_leng = cal(diff)

diffs.append(d_leng)

length += leng

return length, diffs

def report(subs):

"""print out the results"""

for i in range(len(subs)):

total, segs = e_leng(subs[i])

frmt = """

3D distances along polyline {}

Segment distances

{}

Total = sum of segments ? {}

"""

print(frmt.format(total, segs, total == np.sum(segs)))

# ---- inputs ----

fc = r'C:\GIS\Geometry_projects\polyline_demo\polyline_demo.gdb\polylines' # change

flds = ['OID@', 'SHAPE@X', 'SHAPE@Y', 'SHAPE@Z'] # these are horibble

SR = arcpy.Describe(fc).spatialreference

a = arcpy.da.FeatureClassToNumPyArray(fc,

field_names=flds,

spatial_reference=SR,

explode_to_points=True)

dt = [('ID', '<i4'), ('X', '<f8'), ('Y', '<f8'), ('Z', '<f8')] # these are readable

a.dtype = dt # simplify the dtype

ids = np.unique(a['ID']) # get the unique id values

p_lines = [a[a['ID'] == i] for i in ids] # collect polylines

subs = [np.c_[p['X'], p['Y'], p['Z']] for p in p_lines] # stack coordinates

report(subs) # print the results

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Peter, did you finish this?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi Dan

Unfortunately not yet, I started working through your code and got stuck in understanding your reasoning behind reshaping the array from two dimensional to three dimensional lines 31 to 36. I still have quiet a bit to learn about NumPy and haven't needed to work with anything more than a two dimensional array.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Peter, there is a caveat in that function which states ... this can be simplified to 4 lines for 2D ....

That is the full code when needing to determine lengths/distances in higher dimensions....

You need not worry about it unless you are working with 3D data (conventional X,Y,Z) or 4D data(3D shifted by a distance over time)

Plus the 'cal' function uses einsum which is a bit of an overkill to determine distance between points, for instance, that can be written much shorter if one is using polygons that one is normally going to encounter. The methods that you will find on the forum will crawl when you are working with objects with millions of vertices (beyond the scope of ArcMap in any event). I just like to use what works for the cases that I use.

Pythagorean theorem is good enough to determine 2D length if that is all you need.

- « Previous

-

- 1

- 2

- Next »

- « Previous

-

- 1

- 2

- Next »