- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- Re: Overwrite Hosted Table with new data

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Overwrite Hosted Table with new data

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi,

I am having a challenging time trying to write a code using the ArcGIS Python API in Notebooks to update or overwrite an existing hosted table with new data obtained via a Survey123 form. The ultimate goal will be to set this to update automatically at regular intervals.

I have tried to resolve using similar previous posts (e.g. Overwrite Hosted Table), but to no avail.

My initial steps were to run my analysis code and add the output table as a CSV to my organisational AGOL account, which I then manually published as a Hosted Table. However, when I run the below steps, the output messages appears to be fine, but when I go to check the hosted table data in my 'Content', it has not updated. Code is in the image, below:

As additional context:

- Given my analysis is all scripted, the hosted table name, properties and schema are remain the same.

- Code runs ALL Survey123 data each time, rather than just those new records submitted since last run (there's are <1000 records).

Any assistance would be much appreciated as I am still relatively new to Notebooks and python language. Happy to include additional information if needed.

Thanks!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

@FredMitchell can you share the service to an AGOL group and invite my account (jskinner_rats)? I can take a look to see if anything jumps out at me.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi @JakeSkinner, I just invited you! Also, for context here is my python script minus our credentials:

Thanks in advance!

import arcpy, os, uuid

import pandas as pd

import datetime

from datetime import date, timedelta

from zipfile import ZipFile

from arcgis.features import GeoAccessor, GeoSeriesAccessor, FeatureLayerCollection

from arcgis.gis import GIS

# get table from AGOL

gis = GIS('https://kirklandwa.maps.arcgis.com', 'user', 'pw')

CrimeTrendsTable = gis.content.get('{78e4a92c5c324dd18cc6eee11cb6b2f7}')

# still trying to figure this part out whether it be an overwrite or a delete and append

CrimeTrendsTable.delete_features(where="1=1", return_delete_results=True)

# import feature class and create slice of the data set in a new dataframe

df = pd.DataFrame.spatial.from_featureclass(r"local path of larger feature class from sde")

df = df.sort_values(['OFFENSES_YEAR', 'CRIME_STAT_TYPE', 'FROM_DATE'], ascending= [True, True, True], ignore_index=True)

df = df.loc[:, ['OFFENSES_YEAR', 'CRIME_STAT_TYPE', 'FROM_DATE']]

# set variables for today and the previous 5 years

today = datetime.datetime.today()

todate1 = today - timedelta(365)

todate2 = today - timedelta(730)

todate3 = today - timedelta(1095)

todate4 = today - timedelta(1460)

todate5 = today - timedelta(1825)

this_year = datetime.datetime.today().year

one_year_ago = this_year - 1

two_years_ago = this_year - 2

three_years_ago = this_year - 3

four_years_ago = this_year - 4

five_years_ago = this_year - 5

# create new dataframes for current and each previous year to date

df0 = df.loc[(df['OFFENSES_YEAR']==this_year) & (df['FROM_DATE'] < today)]

df1 = df.loc[(df['OFFENSES_YEAR']==one_year_ago) & (df['FROM_DATE'] < todate1)]

df2 = df.loc[(df['OFFENSES_YEAR']==two_years_ago) & (df['FROM_DATE'] < todate2)]

df3 = df.loc[(df['OFFENSES_YEAR']==three_years_ago) & (df['FROM_DATE'] < todate3)]

df4 = df.loc[(df['OFFENSES_YEAR']==four_years_ago) & (df['FROM_DATE'] < todate4)]

df5 = df.loc[(df['OFFENSES_YEAR']==five_years_ago) & (df['FROM_DATE'] < todate5)]

# create tables for each current and previous year grouped by crime type, add together previous year tables and average

table0 = df0.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count().reset_index()\

.rename(columns={"OFFENSES_YEAR" : this_year})

table1 = df1.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table2 = df2.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table3 = df3.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table4 = df4.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table5 = df5.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

avgtable = pd.concat([table1, table2, table3, table4, table5]).groupby('CRIME_STAT_TYPE').mean().reset_index()\

.rename(columns={"OFFENSES_YEAR" : "previous5yearAverage"})

trendstable = avgtable.join(table0.set_index('CRIME_STAT_TYPE'), on='CRIME_STAT_TYPE').fillna(0)

trendstable.to_csv(r"local path.csv", sep='\t', encoding='utf-8')

# hoping to add a line here that would update my hosted table from the trendstable dataframe...

trendstable.to_featurelayer('CrimeTrends')

print(trendstable)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Try the following:

import arcpy, os, uuid

import pandas as pd

import datetime

from datetime import timedelta

from arcgis.features import GeoAccessor, FeatureLayer

from arcgis.gis import GIS

# Variables

url = 'https://kirklandwa.maps.arcgis.com'

username = 'user'

password = 'pw'

hostedTableID = '78e4a92c5c324dd18cc6eee11cb6b2f7'

outputCSVFile = r'local path to CSV'

# get table from AGOL

gis = GIS(url, username, password)

CrimeTrendsTable = gis.content.get(hostedTableID)

CrimeTrendsTableLyr = CrimeTrendsTable.tables[0]

crimeLayer = FeatureLayer(CrimeTrendsTableLyr.url, gis=gis)

# Truncate table

CrimeTrendsTableLyr.manager.truncate()

# import feature class and create slice of the data set in a new dataframe

df = pd.DataFrame.spatial.from_featureclass(r"local path of larger feature class from sde")

df = df.sort_values(['OFFENSES_YEAR', 'CRIME_STAT_TYPE', 'FROM_DATE'], ascending= [True, True, True], ignore_index=True)

df = df.loc[:, ['OFFENSES_YEAR', 'CRIME_STAT_TYPE', 'FROM_DATE']]

# set variables for today and the previous 5 years

today = datetime.datetime.today()

todate1 = today - timedelta(365)

todate2 = today - timedelta(730)

todate3 = today - timedelta(1095)

todate4 = today - timedelta(1460)

todate5 = today - timedelta(1825)

this_year = datetime.datetime.today().year

one_year_ago = this_year - 1

two_years_ago = this_year - 2

three_years_ago = this_year - 3

four_years_ago = this_year - 4

five_years_ago = this_year - 5

# create new dataframes for current and each previous year to date

df0 = df.loc[(df['OFFENSES_YEAR']==this_year) & (df['FROM_DATE'] < today)]

df1 = df.loc[(df['OFFENSES_YEAR']==one_year_ago) & (df['FROM_DATE'] < todate1)]

df2 = df.loc[(df['OFFENSES_YEAR']==two_years_ago) & (df['FROM_DATE'] < todate2)]

df3 = df.loc[(df['OFFENSES_YEAR']==three_years_ago) & (df['FROM_DATE'] < todate3)]

df4 = df.loc[(df['OFFENSES_YEAR']==four_years_ago) & (df['FROM_DATE'] < todate4)]

df5 = df.loc[(df['OFFENSES_YEAR']==five_years_ago) & (df['FROM_DATE'] < todate5)]

# create tables for each current and previous year grouped by crime type, add together previous year tables and average

table0 = df0.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count().reset_index()\

.rename(columns={"OFFENSES_YEAR" : this_year})

table1 = df1.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table2 = df2.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table3 = df3.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table4 = df4.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

table5 = df5.groupby('CRIME_STAT_TYPE')['OFFENSES_YEAR'].count()

avgtable = pd.concat([table1, table2, table3, table4, table5]).groupby('CRIME_STAT_TYPE').mean().reset_index()\

.rename(columns={"OFFENSES_YEAR" : "previous5yearAverage"})

trendstable = avgtable.join(table0.set_index('CRIME_STAT_TYPE'), on='CRIME_STAT_TYPE').fillna(0)

trendstable.to_csv(outputCSVFile, sep='\t', encoding='utf-8')

# update hosted table from csv file

csvDF = GeoAccessor.from_table(outputCSVFile)

adds_fs = csvDF.spatial.to_featureset()

adds_dict = adds_fs.to_dict()

adds = adds_dict["features"]

crimeLayer.edit_features(adds=adds)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi Jake,

Thanks for looking into this for me and adding these key pieces of code. When I run line 66 I get the following error:

--------------------------------------------------------------------------- KeyError Traceback (most recent call last) In [50]: Line 1: adds_fs = csvDF.spatial.to_featureset() File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\geo\_accessor.py, in to_featureset: Line 3573: return FeatureSet.from_dict(self.__feature_set__) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\geo\_accessor.py, in __feature_set__: Line 3297: if self.sr is None: File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\arcgis\features\geo\_accessor.py, in sr: Line 3503: for g in self._data[self.name] File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\pandas\core\frame.py, in __getitem__: Line 3505: indexer = self.columns.get_loc(key) File C:\Program Files\ArcGIS\Pro\bin\Python\envs\arcgispro-py3\lib\site-packages\pandas\core\indexes\base.py, in get_loc: Line 3631: raise KeyError(key) from err KeyError: None ---------------------------------------------------------------------------

Any thoughts?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Can you share the CSV you're using to one of the AGOL groups you invited me to?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

It's shared!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

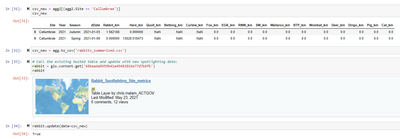

It's something with how the CSV is being created. When trying to read the CSV file, it is not being read correctly. See below:

I'm not sure if it has to do with the delimiter being used or something else, but this is where you will need to troubleshoot further. Sorry, I'm not an expert with dataframes, so not sure how much help I will be.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi @JakeSkinner,

Thanks for getting me this far! I ended up making a new post here which I think you may have already noticed from your kudos: https://community.esri.com/t5/python-questions/overwriting-hosted-table-in-arcgis-online-with-csv/m-... - just wanted to post here too and close the loop unless anyone else is having a similar issue. I'm not sure if the issue was with the delimiter, separator, or header or something else in how CSVs are created and read back in. During my research I found some weird behavior with the CSV. For example, when I opened it in Excel all the data would appear fine. Same for if I viewed it using the print command in Python. However, when trying to read it in using a command or open it as a table in ArcGIS Pro some columns would have null data. Using XLS instead worked great so I'm glad to have a solution but would like to understand the cause better at some point. Thanks again for all your help!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Chris,

Saw your post over on Overwrite Hosted Table but it disappeared.

I replied over there since there was already a chain of conversation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I am struggling with this same issue.

gis = GIS(url='https://ArcGIS Enterprise Portal Server', username=portalUser, password=portalUserPass)

existingFS_item = gis.content.get("294c153f5ad64856b275b162eb78731b")

sql_query=pd.read_sql_query("""SELECT ....

******very long SQL query******* """)

sdf=pd.DataFrame.spatial.from_xy(df=sql_query,x_column='Longitude',y_column='Latitude',sr=4326)

existingFS_item.layers[0].manager.truncate()

test = existingFS_item.layers[0].edit_features(adds=sdf)

- « Previous

-

- 1

- 2

- Next »

- « Previous

-

- 1

- 2

- Next »