Overwrite Hosted Table

Hi, I'm having trouble overwriting a hosted table using the Python API in Jupyter Notebooks.

I've logged in to the organizational account in the script, where I'm an administrator. Here is the rest of the code:

    oldcsv = gis.content.get('ecc751eadabe43c083360c07bb7cghbc')
    oldcsvFLC = FeatureLayerCollection.fromitem(oldcsv)
    oldcsvFLC.manager.overwrite(r'C:\path\out4.csv')

The response is below. This test used 40 records, but I eventually want to use a dataset with more than 100,000.

    ---------------------------------------------------------------------------
    Exception                                 Traceback (most recent call last)
    <ipython-input-51-7974c8cc0fe0> in <module>
    ----> 1 oldcsvFLC.manager.overwrite(r'C:\path\out4.csv')

    ~\AppData\Local\ESRI\conda\envs\arcgispro-py3-clone1\lib\site-packages\arcgis\features\managers.py in overwrite(self, data_file)
       1324 #region Perform overwriting
       1325 if related_data_item.update(item_properties=params, data=data_file):
    -> 1326     published_item = related_data_item.publish(publish_parameters, overwrite=True)
       1327     if published_item is not None:
       1328         return {'success': True}

    ~\AppData\Local\ESRI\conda\envs\arcgispro-py3-clone1\lib\site-packages\arcgis\gis\__init__.py in publish(self, publish_parameters, address_fields, output_type, overwrite, file_type, build_initial_cache)
       9030     return Item(self._gis, ret[0]['serviceItemId'])
       9031 else:
    -> 9032     serviceitem_id = self._check_publish_status(ret, folder)
       9033     return Item(self._gis, serviceitem_id)
       9034

    ~\AppData\Local\ESRI\conda\envs\arcgispro-py3-clone1\lib\site-packages\arcgis\gis\__init__.py in _check_publish_status(self, ret, folder)
       9257 #print(str(job_response))
       9258 if job_response.get("status") in ("esriJobFailed","failed"):
    -> 9259     raise Exception("Job failed.")
       9260 elif job_response.get("status") == "esriJobCancelled":
       9261     raise Exception("Job cancelled.")

    Exception: Job failed.

I found a solution. If you add the CSV file to AGOL without publishing it, and then publish it as a separate step (in my case as a table without geocoding), the overwrite method works: oldcsvFLC.manager.overwrite(r'C:\path\out4.csv'). The overwrite method fails only when I publish the table from ArcGIS Pro (and presumably ArcMap too). I suspect it has something to do with the service definition file, which I do not see when I publish from within AGOL.
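For anyone who wants to script the two-step route described above rather than click through AGOL, a minimal sketch might look like the following. The function name `publish_csv_as_table`, the `title` argument, and the rule that turns the title into a service name are hypothetical. The sketch also assumes that the publish parameters returned by `gis.content.analyze` accept `"locationType": "none"` for a table without geocoding, and that `gis.content.add` is available in your version of the API (newer releases may prefer a different add call).

```python
def publish_csv_as_table(gis, csv_path, title):
    """Upload a CSV as a plain item, then publish it as a hosted table.

    `gis` is expected to be a logged-in arcgis.gis.GIS object.
    """
    # Step 1: add the CSV to the portal without publishing it.
    csv_item = gis.content.add({"title": title, "type": "CSV"}, data=csv_path)

    # Step 2: ask the portal to analyze the CSV and suggest publish parameters.
    analyzed = gis.content.analyze(item=csv_item.id, file_type="csv")
    params = analyzed["publishParameters"]

    # Assumption: "none" publishes a non-spatial table (no geocoding).
    params["locationType"] = "none"
    # Hypothetical naming rule for the hosted service.
    params["name"] = title.replace(" ", "_")

    # Step 3: publish the uploaded CSV item as a hosted table.
    return csv_item.publish(publish_parameters=params)
```

Calling `publish_csv_as_table(gis, r'C:\path\out4.csv', 'out4')` would then return the hosted table item, which could later be passed to `FeatureLayerCollection.fromitem(...)` for the overwrite.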

Brian,

I'm encountering the same issue as you (Job failed), but your solution doesn't fix it for me.

I never published from ArcGIS Pro but still encountered the issue. I uploaded a non-spatial CSV to ArcGIS Online, then published it as a hosted table in a separate step. I wanted to update the data in that hosted table.

The FeatureLayerCollection.manager.overwrite method results in a failed job. I ensured the file name was identical to the one originally used (which is a very strange requirement). The file schema is identical to the original, since the same process generated both the original and my update file.

I can update the data with the arcgis.features.Table delete and add features methods. However, there is a note that the append method should be used when working with more than 250 features. The problem is that the append method is not working for me and seems to be a sticking point for many. I was examining it as an alternative.
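As a rough illustration of the delete-and-add route, the sketch below clears the table and then submits the new rows in batches. The helper name `replace_rows_in_batches` and the choice to batch at 250 rows are assumptions for illustration only. Batching is a workaround, not something the note about 250 features prescribes, and for very large datasets append is likely still the better fit. `table` is assumed to be an `arcgis.features.Table`, and the responses from the edit calls are not checked here.

```python
def replace_rows_in_batches(table, rows, batch_size=250):
    """Delete every row in `table`, then add `rows` in small batches.

    `rows` is a list of attribute dicts, e.g. [{"name": "a", "count": 1}].
    Returns the number of rows submitted.
    """
    # Remove all existing rows first; "1=1" matches every record.
    table.delete_features(where="1=1")

    submitted = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # Wrap each row as a feature-like dict with an "attributes" key.
        adds = [{"attributes": row} for row in batch]
        table.edit_features(adds=adds)
        submitted += len(adds)
    return submitted
```

Because the delete happens before any add, a failure partway through leaves the table partially filled, so a real script would want to inspect the results of each call.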

Just adding this info in case someone else comes along with the same issue.

Thanks

Replying to a deleted post that was reposted over at https://community.esri.com/t5/python-questions/overwrite-hosted-table-with-new-data/m-p/1060818

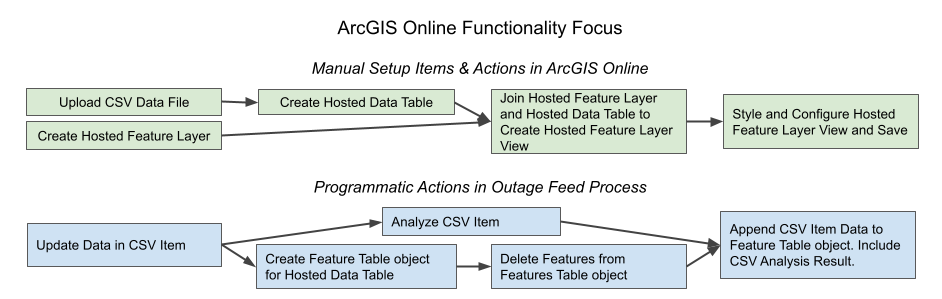

This is the flow for a process we have automated. The blue portion is the programmatic portion.

This was developed after encountering issues with the .overwrite() method. See my earlier reply, though I hadn't gotten the append method to work at that time. Hope this helps.

The programmatic portion of the process goes as follows:

Create a connection object:

    gis_connection = arcgis.gis.GIS(your info)

Then get the CSV item by ID:

    csv_item = gis_connection.content.get(itemid=item_id)

Update the CSV item using a local file (because the process requires a path):

    csv_item.update(data=temp_csv_path)

Get the hosted table item by ID:

    hosted_table_item = gis_connection.content.get(itemid=item_id)

Create a feature table item:

    features_table = arcgis.features.Table.fromitem(hosted_table_item)

Analyze the item:

    analyze_result = gis_connection.content.analyze(item=csv_item.id)

Delete the existing features:

    features_table.delete_features(where="1=1", return_delete_results=True)

Append the new data:

    attempt_ceiling = 3
    for i in range(0, attempt_ceiling):
        try:
            append_result = self.features_table.append(
                item_id=self.csv_item.id,
                upload_format='csv',
                source_info=self.analyze_result,
                upsert=False,
            )
        except Exception as e:
            # Esri exception is generic so there is no specific type to look for.
            print(f"ATTEMPT {i + 1} OF {attempt_ceiling} FAILED. Exception in ESRI arcgis.features.Table.append()\n{e}")
            time.sleep(2)
            continue
        else:
            print(f"Append Result: {append_result}")
            break

NOTE on the append portion (Esri update of an existing hosted feature layer using append functionality):

The append function periodically throws an exception, and the Esri code does not raise a specific type, just a plain Exception. This was occurring 1-3 times per day out of 97 runs. I added a loop with a sleep to introduce a small wait before retrying the append. The features in the existing hosted table have already been deleted before the new records are appended, so it is important not to fail out and leave an empty table in the cloud.
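One gap in a retry loop like this is that if every attempt fails, the script carries on quietly with an empty table. A possible variation, sketched below, wraps the retry in a helper that raises once the attempts run out so the caller can react (alert, restore, rerun). The names `append_with_retry`, `append_call`, and the injectable `sleep` parameter are hypothetical and not part of the arcgis API.

```python
import time


def append_with_retry(append_call, attempts=3, wait_seconds=2, sleep=time.sleep):
    """Call `append_call()` until it succeeds or `attempts` is exhausted.

    Returns whatever `append_call` returns. Raises RuntimeError if every
    attempt raised, chaining the last error for debugging.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return append_call()
        except Exception as error:  # the append error is a plain Exception
            last_error = error
            print(f"Attempt {attempt} of {attempts} failed: {error}")
            if attempt < attempts:
                # Short pause before the next try.
                sleep(wait_seconds)
    # Every attempt failed: surface it, since the table may now be empty.
    raise RuntimeError(f"append failed after {attempts} attempts") from last_error
```

It could be used as `append_with_retry(lambda: features_table.append(item_id=csv_item.id, upload_format='csv', source_info=analyze_result, upsert=False))`, with the surrounding code catching `RuntimeError` to handle the empty-table case.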

I discovered that my lengthy process above is only necessary because we were working with a hosted table joined to a spatial layer that was then made into a hosted view. The view breaks the ability to use the simpler process described by @Kara_Shindle in https://community.esri.com/t5/python-questions/overwrite-hosted-table-with-new-data/m-p/1106710#M626... . That process works on a hosted layer that is a join of a hosted table and a hosted spatial layer, until you move to a view.

    commRecTable = gis.content.get('{insert item ID here}')
    commRec_collection = FeatureLayerCollection.fromitem(commRecTable)
    commRec_collection.manager.overwrite(commRecCSV)