- Home

- :

- All Communities

- :

- Developers

- :

- Python

- :

- Python Questions

- :

- Re: Listing all feature classes in an Enterprise D...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Listing all feature classes in an Enterprise Database

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi all,

TLDR; how do you create a list of data sets that lists everything in an enterprise geodatabase, schemawise?

I would like to generate a list of all the data sets (e.g, relationship classes, tables, feature classes, including the feature classes within Feature Datasets) in an enterprise database in each schema (.sde connection file) periodically.

I have used the arcpy.da.Walk module with keyword "Any" and with each datatype mentioned; no success yet.

Software used:

Python 3.7.11

PostgresSQL 11.16

PostGIS 2.4.5

ArcSDE Geodatabase 10.7.1

This is the code that I tried so far.

# -*- coding: utf-8 -*-

"""

Lists the contents of Cloud Enterprise DataBase (CEDB) and writes into an MS Excel

Sorts in an alphabetical ascending order

Created on 09 May 2021

Last update 24 Nov 2022

@author: thiru

"""

#List the contents of CEDB UAT

#import modules

import arcpy

import pandas as pd

import re

import openpyxl

#assign maximum processing power

arcpy.env.parallelProcessingFactor = "100%"

###List the folder where the sde connection files are

sdeDir = r"C:/SDE/"

##CEDB schemas as sde connection files

workspaceList = [

"alpha@cedb(uat).sde",

"beta@cedb(uat).sde",

"gamma@cedb(uat).sde",

"theta@cedb(uat).sde"

]

vardatatype = [

'CadDrawing',

'CadastralFabric',

'Container',

'FeatureDataset',

'FeatureClass',

'GeometricNetwork',

'LasDataset',

'Layer',

'Locator',

'Map',

'MosaicDataset',

'NetworkDataset',

'PlanarGraph',

'RasterCatalog',

'RasterDataset',

'RelationshipClass',

'RepresentationClass',

'Style',

'Table',

'Terrain',

'Text',

'Tin',

'Tool',

'Toolbox',

'Topology'

]

#create empty sets, this will remove duplicates

files = []

schemas = []

#iterate through each schema and list contents

for ws in workspaceList:

workspace = sdeDir + ws

da_owner= ws[:ws.index("@")]

print (workspace)

schemas.append(workspace)

for dt in vardatatype:

walk = arcpy.da.Walk(workspace, datatype=[dt])

for dirpath, dirnames, filenames in walk:

for filename in filenames:

filename.lower()

filename = filename+"."+dt

files.append(filename)

print(filename)

df = pd.DataFrame(files)

print("Number of features", len(files))

print("Number of schemas", len(schemas))

#Check if dataframe is empty

if df.empty:

print('List is empty!')

else:

# dropping null value columns to avoid errors

df.dropna(inplace = True)

#Name the column name

df.columns = ['LayerName']

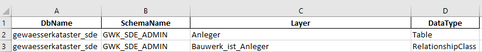

df[['DbName', 'SchemaName', 'Layer', 'DataType']] = df['LayerName'].str.split('.', expand=True)

#Remove the redundant column

df = df.drop(columns=['LayerName'])

#Sort the columns based on Schema, LayerName

df.sort_values(by=['SchemaName', 'Layer'], ascending=(True, True), inplace=True, ignore_index=True)

df.to_excel(r"C:/Temp/Lists/CEDB_alltypes_Listing.xlsx", index = False)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Your code works perfectly for me. What problems do you encounter?

Have a great day!

Johannes

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thank you for trying the code.

Did it list the FeatureDatasets and the FeatureClasses inside Feature Datasets?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Does not add the Feature Dataset to the Excel file, but it finds them and adds the contained Feature Classes.

R_