
Clearing Arc/Arcpy workspace locks


I have previously posted on this with a solution that sometimes reduces the problem but cannot eliminate it completely: calling arcpy.Exists() and arcpy.Compact_management() in sequence on the workspace. This combination seemed adequate in ArcGIS 10.0, but in 10.1 it no longer prevents the issue 100% of the time.

In response, I have developed a new method for clearing tricky locks which, for my purposes, works 100% of the time. It is a small function that can be added to the start of any arcpy script. It requires the psutil Python library, which can be acquired here: http://code.google.com/p/psutil/.

When using multiprocessing, the code seems to work fine with the Parallel Python library, but it will hang indefinitely with the multiprocessing library, presumably because of the way child processes are spawned. However, Parallel Python has other issues; for example, I have had trouble getting it to run with checked-out extensions such as Network Analyst.

The following code block shows the necessary imports, the function and an example of its usage.

import arcpy

import os

import psutil

def clearWSLocks(inputWS):

'''Attempts to clear ArcGIS/Arcpy locks on a workspace.

Two methods:

1: if ANOTHER process (i.e. ArcCatalog) has the workspace open, that process is terminated

2: if THIS process has the workspace open, it attempts to clear locks using arcpy.Exists, arcpy.Compact and arcpy.Exists in sequence

Notes:

1: does not work well with Python Multiprocessing

2: this will kill ArcMap or ArcCatalog if they are accessing the worspace, so SAVE YOUR WORK

Required imports: os, psutil

'''

# get process ID for this process (treated differently)

thisPID = os.getpid()

# normalise path

_inputWS = os.path.normpath(inputWS)

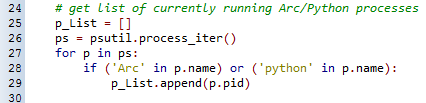

# get list of currently running Arc/Python processes

p_List = []

ps = psutil.process_iter()

for p in ps:

if ('Arc' in p.name) or ('python' in p.name):

p_List.append(p.pid)

# iterate through processes

for pid in p_List:

p = psutil.Process(pid)

# if any have the workspace open

if any(_inputWS in pth for pth in [fl.path for fl in p.get_open_files()]):

print ' !!! Workspace open: %s' % _inputWS

# terminate if it is another process

if pid != thisPID:

print ' !!! Terminating process: %s' % p.name

p.terminate()

else:

print ' !!! This process has workspace open...'

# if this process has workspace open, keep trying while it is open...

while any(_inputWS in pth for pth in [fl.path for fl in psutil.Process(thisPID).get_open_files()]):

print ' !!! Trying Exists, Compact, Exists to clear locks: %s' % all([arcpy.Exists(_inputWS), arcpy.Compact_management(_inputWS), arcpy.Exists(_inputWS)])

return True

#######################################

## clearWSLocks usage example #########

#######################################

# define paths and names

workspace = 'C:\\Temp\\test.gdb'

tableName = 'testTable'

# create table

arcpy.CreateTable_management(workspace, tableName)

# usage of clearWSLocks

clearWSLocks(workspace)

I have also attached the code as a separate module (clearWSLocks.py), which can be used in the following fashion:

```python
import arcpy
import clearWSLocks

# define paths and names
workspace = 'C:\\Temp\\test.gdb'
tableName = 'testTable'

# create table
arcpy.CreateTable_management(workspace, tableName)

# usage of clearWSLocks
clearWSLocks.clear(workspace)
```
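The function uses the psutil API of its time (`p.name` as an attribute, `p.get_open_files()`). From psutil 2.0 onward, `name()` is a method and `get_open_files()` became `open_files()`, and inspecting another user's process can raise `AccessDenied`. Below is a hedged sketch of the detection step against the newer API, plus a bounded version of the Exists/Compact retry so the "this process" case cannot loop forever. It also matches paths by directory prefix rather than substring, so `test.gdb` does not also match `test.gdb2`. `path_in_workspace`, `find_lock_holders` and `retry_release` are hypothetical names, and the optional `processes` argument exists only so the scan can be tried without live processes. Unlike `clearWSLocks`, it reports lock holders instead of terminating them.

```python
import os
import time


def _norm(path):
    # Normalise separators and (on Windows) case so paths compare reliably.
    return os.path.normcase(os.path.normpath(path))


def path_in_workspace(path, workspace):
    """True if path is the workspace itself or a file inside it."""
    p, ws = _norm(path), _norm(workspace)
    return p == ws or p.startswith(ws + os.sep)


def find_lock_holders(workspace, processes=None):
    """Return (pid, name) pairs for Arc/Python processes that have files
    open inside workspace, using the psutil >= 2.0 method names."""
    if processes is None:
        import psutil  # imported lazily so the helpers work without it
        processes = psutil.process_iter()
        skip = (psutil.NoSuchProcess, psutil.AccessDenied)
    else:
        skip = ()  # caller-supplied stand-ins: catch nothing extra
    holders = []
    for proc in processes:
        try:
            name = proc.name()
            if 'Arc' not in name and 'python' not in name:
                continue  # same name filter as clearWSLocks
            if any(path_in_workspace(f.path, workspace)
                   for f in proc.open_files()):
                holders.append((proc.pid, name))
        except skip:
            continue  # process exited, or we are not allowed to inspect it
    return holders


def retry_release(still_locked, try_release, max_attempts=10, delay=1.0):
    """Call try_release() until still_locked() is False, giving up after
    max_attempts instead of looping forever. Returns True if it cleared."""
    for _ in range(max_attempts):
        if not still_locked():
            return True
        try_release()
        time.sleep(delay)  # give ArcGIS a moment to drop the lock
    return not still_locked()
```

In an arcpy script, `try_release` could be `lambda: (arcpy.Exists(ws), arcpy.Compact_management(ws), arcpy.Exists(ws))`, and `still_locked` could check whether `os.getpid()` appears among the pids returned by `find_lock_holders(ws)`. Terminating other holders with `psutil.Process(pid).terminate()` is left to the caller, with the same SAVE YOUR WORK caveat as the original.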

---

I'm mainly replying here so that I am subscribed to this thread, but also to uncover possible alternatives as other comments come in, since I am new to Python development. Plus, I will likely need to implement the code example you've provided!

I've actually run into the locking issue myself, but was able to mitigate (actually, eliminate) the problem by reading/writing intermediate data in the IN_MEMORY space, then moving the processed data to its final location(s) in the relevant workspaces and repositories (PGDBs and FGDBs).

I am wondering, is there something in your application architecture that limits your ability to use the IN_MEMORY space for intermediate data?

Is the IN_MEMORY space inadequate for some reason (e.g., the data is simply too large)?

Do the multiprocessing requirements not allow for the use of the IN_MEMORY space?

Anyway -- great topic and useful code.

Take Care,

j

---

It is very hard to make some processes give up the file locks.

At 10.1, the technical sessions suggested using the 'with' statement, which is guaranteed to close cursors, instead of relying on garbage collection after a 'del cursor'.
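A minimal sketch of that pattern, assuming ArcGIS 10.1+ where `arcpy.da.SearchCursor` supports the `with` statement (Esri documents this as aiding the removal of locks). `count_rows` and its `cursor_factory` parameter are hypothetical; the parameter exists only so the function can be exercised without ArcGIS installed.

```python
def count_rows(table, fields=("OID@",), cursor_factory=None):
    """Count the rows in table; the cursor is closed as soon as the
    with block exits, which helps release its lock."""
    if cursor_factory is None:
        import arcpy  # ArcGIS 10.1+: arcpy.da cursors support `with`
        cursor_factory = arcpy.da.SearchCursor
    # __exit__ runs even if iteration raises, so the cursor is closed
    # deterministically instead of whenever it is garbage collected.
    with cursor_factory(table, list(fields)) as cursor:
        return sum(1 for _ in cursor)
```

For example, `count_rows('C:\\Temp\\test.gdb\\testTable')` would count the rows of the table created in the earlier usage example.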

Any other hints and experiences?

---

They really should implement something that lets the program definitely close the file (like a .close() method), as discussed by Sean Gillies in his excellent blog here. I guess the issue occurs because datasets are represented only by paths, not by objects as they are in GDAL/OGR.

---

> Is the IN_MEMORY space inadequate for some reason (e.g., the data is simply too large)?

I have seen a few people talk about IN_MEMORY, but I have never seen any help files or code examples using it, so I have never tried it out! Sounds like something I should look into!

Cheers,

Stacy

---


In addition to relieving the locking issue I was having, the in_memory space performs much better. However, it has limitations too, and it can be a double-edged sword, since (I think) it increases RAM usage. The idea is to do the processing there, then write the final output wherever you need the result(s) to be.

It is very easy to implement: just set your workspace to it and process as normal. Here is a function I call before starting any processing to "clear" out the IN_MEMORY space. I am not sure this is even required, but because I am still unsure how garbage collection works when tools are run from ArcCatalog in succession, I clear it out anyway.

I am using arcgisscripting (9.3) with ArcGIS 9.3.1 here:

```python
import arcgisscripting

# create the 9.3 geoprocessor object used as `gp` below
gp = arcgisscripting.create(9.3)

def ClearINMEM():
    ## clear out the IN_MEMORY workspace of any feature classes
    try:
        gp.Workspace = "IN_MEMORY"

        ### clear in_memory of feature classes
        fcs = gp.ListFeatureClasses()
        ### for each feature class in the list of fcs, delete it
        for f in fcs:
            gp.Delete_management(f)
            gp.AddMessage("deleted feature class: " + f)

        ### clear in_memory of tables
        tables = gp.ListTables()
        for tbl in tables:
            gp.Delete_management(tbl)
            gp.AddMessage("deleted table: " + tbl)

        gp.AddMessage("in_memory space cleared")
    except:
        gp.AddMessage("The following error(s) occurred attempting to ClearINMEM " + gp.GetMessages())
    return
```
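On ArcGIS 10.x the same clean-up can be written with arcpy instead of arcgisscripting. This is a hedged sketch, assuming arcpy's `env.workspace`, `ListFeatureClasses`, `ListTables`, `Delete_management` and `AddMessage`. `clear_in_memory` and its `ap` parameter are hypothetical (`ap` only lets a stand-in object replace arcpy for testing). Unlike `ClearINMEM`, it lets errors propagate instead of swallowing them with a bare `except`.

```python
def clear_in_memory(ap=None):
    """Delete every feature class and table in the in_memory workspace.

    Hypothetical arcpy port of ClearINMEM. Returns the deleted names.
    """
    if ap is None:
        import arcpy as ap
    ap.env.workspace = "in_memory"
    deleted = []
    # Guard against the list functions returning None instead of a list.
    for name in (ap.ListFeatureClasses() or []) + (ap.ListTables() or []):
        ap.Delete_management(name)
        ap.AddMessage("deleted: " + name)
        deleted.append(name)
    return deleted
```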

---

Late to the party, but...

Stacy, thanks for the post.

I've inherited some Python code that uses your 10.0 clearWSLocks, so it was very helpful to find this "newer" version, since we're still running servers on 10.1.

Just to add a contribution: IN_MEMORY is very useful IF you have sufficient RAM on the processing machine.

I wrote a script to pick up a file geodatabase of roads for a large county (almost 1,200 square miles) and dump part of it into an Excel file. Using IN_MEMORY really sped it up.

---

Hi Stacy,

I am trying to update a feature class by running a python script after hours.

Unfortunately I am having trouble with file locks caused by other users.

I have tried your clearWSLocks.py, but it runs with this error:

So it is complaining about this section of code:

Do you have any idea what could be causing this?

I am using ArcGIS 10.2... not sure if that makes a difference or not.

Thanks for your help!

---

Scott, did you download psutil (or do you already have it)? It looks like you can get it here:

https://pypi.python.org/pypi/psutil/#downloads

If it isn't imported, then it will have an issue with line 26 in your code above.

---

Hi there,

Yes I have installed psutil... any other ideas?

Thanks.
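Since the error text is not shown, this is only a guess at one possible cause. psutil 2.0 changed `Process.name` from an attribute to a method and renamed `get_open_files()` to `open_files()`, so code written for the older API can fail against a newer install even when psutil imports fine. A hypothetical shim that works with either API (`proc_name` and `proc_open_files` are illustrative names, not part of psutil):

```python
def proc_name(proc):
    """Process name under psutil < 2.0 (attribute) or >= 2.0 (method)."""
    name = proc.name
    return name() if callable(name) else name


def proc_open_files(proc):
    """Open files under psutil >= 2.0 (open_files) or < 2.0 (get_open_files)."""
    if hasattr(proc, 'open_files'):
        return proc.open_files()
    return proc.get_open_files()
```

In `clearWSLocks`, `p.name` would become `proc_name(p)` and `p.get_open_files()` would become `proc_open_files(p)`.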