
Image Server 11: Deep Learning Studio - Inferencing


I set up ArcGIS Image Server in my ArcGIS Enterprise environment and installed the deep learning frameworks.

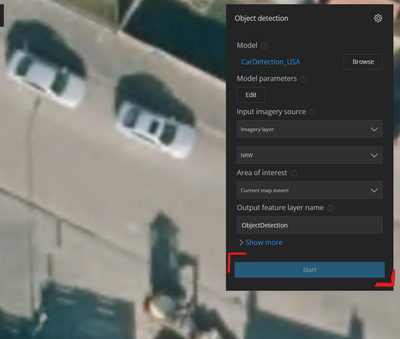

Everything works fine except inferencing. When I load a model in the inferencing app, the Start button is supposed to become active, but it does not.

I don't have a dedicated GPU, but I set the processing mode to CPU in the widget settings.

Am I missing something? I tried the Esri sample deep learning packages and restarted the servers, without success.

Is the Math Kernel Library required for inferencing in Portal? Configure ArcGIS Image Server for deep learning raster analytics—ArcGIS Image Server | Documentation...

The README at https://github.com/Esri/deep-learning-frameworks/blob/master/README.md states that the framework falls back to CPU mode when no GPU is present. It also recommends installing the Math Kernel Library, but gives no details on how to install it or whether it is necessary for use with Portal.
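To illustrate the CPU fallback the README describes, here is a minimal Python sketch. It is not how the Esri frameworks actually detect a GPU: as an assumption, it treats the presence of the `nvidia-smi` executable on the PATH as a rough proxy for a usable NVIDIA GPU. It then builds a `context` dictionary with a `processorType` key, which, to my understanding, is the environment setting the raster analysis deep learning tools use to choose between "CPU" and "GPU". The function name `pick_processor_type` is hypothetical.

```python
import shutil


def pick_processor_type() -> str:
    """Hypothetical helper: return "GPU" if nvidia-smi is on PATH, else "CPU".

    Assumption: nvidia-smi being installed is only a rough proxy for a
    usable NVIDIA GPU; this is NOT the detection logic Esri uses.
    """
    return "GPU" if shutil.which("nvidia-smi") else "CPU"


# Environment context for a raster analysis deep learning tool.
# Only processorType is set here; other context keys are omitted.
context = {"processorType": pick_processor_type()}
print(context)
```

On a machine without an NVIDIA driver this prints `{'processorType': 'CPU'}`, which matches forcing CPU mode in the widget settings.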

@VinayViswambharan @AkshayaSuresh


Hi Simon, did you find a solution for the output catalog path error? I'm getting the same error when running inferencing.


The issue with the Start button is a known bug. As a workaround, select a different input imagery source and then switch back to the original one; this activates the button.

If anyone runs into issues setting up Deep Learning Studio, here are the steps:

- Install the DLS runtime from GitHub on the Image Server machine (for CPU-only use, this alone is enough).

- Add a file raster store to the Image Server data stores (in Server Manager).

- In Portal, enable the "Raster Analysis Server" role in addition to the "Image Hosting Server" role. (If you do not, you will get a message that the DLS project could not be created.)

- Inferencing limitation: to use an existing DLPK in the inferencing interface, you need to run at least one training first, then open the inferencing app and change the model. At the moment there seems to be no way to open the inferencing app directly; I assume this will be added in 11.1. However, existing models can be used in the old Map Viewer.

- Note the Start button bug mentioned above.
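If you want to track these setup steps per deployment, here is a minimal Python checklist sketch. Everything in it is hypothetical: `DlsSetup`, its fields, and `missing_steps` are illustrative names, not part of any Esri API, and you fill in the values by hand after checking Server Manager and Portal. It only covers the first three steps above.

```python
from dataclasses import dataclass, field

# Both Portal server roles needed for Deep Learning Studio, per the steps above.
REQUIRED_ROLES = {"Image Hosting Server", "Raster Analysis Server"}


@dataclass
class DlsSetup:
    """Hypothetical, hand-filled snapshot of a deployment (not an Esri API)."""
    dls_runtime_installed: bool = False
    file_raster_store_registered: bool = False
    portal_roles: set[str] = field(default_factory=set)


def missing_steps(setup: DlsSetup) -> list[str]:
    """Return the setup steps that still appear to be missing."""
    missing = []
    if not setup.dls_runtime_installed:
        missing.append("Install the DLS runtime on the Image Server machine")
    if not setup.file_raster_store_registered:
        missing.append("Add a file raster store in Server Manager")
    # Report each required Portal role that is not yet enabled, in stable order.
    for role in sorted(REQUIRED_ROLES - setup.portal_roles):
        missing.append(f'Enable the "{role}" role in Portal')
    return missing


setup = DlsSetup(
    dls_runtime_installed=True,
    file_raster_store_registered=True,
    portal_roles={"Image Hosting Server"},
)
for step in missing_steps(setup):
    print(step)
```

For the example deployment this prints `Enable the "Raster Analysis Server" role in Portal`, the step whose omission produces the "DLS project could not be created" message.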
