- Home

- :

- All Communities

- :

- Products

- :

- Data Management

- :

- Data Management Questions

- :

- Re: dbf-to-shp attribute join gives dofferent resu...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

dbf-to-shp attribute join gives dofferent result than shp-to-shp attribute join.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Why is it that a dbf-to-shp attribute join results in a different number of joins than a shp-to-shp attribute join of the same data? When I join the attribute table of one shapefile to another shapefile I get a certain number of joins. But when I export the table to dbf and join it the the same shapefile, I get a different (greater) number of joins. Shouldn't both routines result in the same number of joins? It is the same data, after all. Please help, this is really messing up my analysis. I am using ArcGIS Desktop.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

In ArcGIS Pro its called "Overlay"... and in ArcGIS Desktop its called "Intersect". The overall aspect of the tool is a spatial function, but the output is your key to generating the data table you want. After you intersect the layers, the data will be output to a single feature (shapefile) which you can then use the table to table geoprocessing tool to extract the tabular data of your choice. Here is a link to read up on exactly what it does:

https://desktop.arcgis.com/en/arcmap/10.6/tools/analysis-toolbox/how-intersect-analysis-works.htm

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I am familiar with intersect, but I am not sure that the correct records will get matched. I have not used it in this way before.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Maybe just try it and see if it works? Its worth a shot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Just a suggestion and you may have already done this... but sometimes doing a google search based on keywords of what you are trying to accomplish with the words ArcGIS Desktop 10.6.1 included. GeoNet is a great resource but you may find another source with the information you are looking for.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Attribute Joins do not display the full set of records that actually result from a one-to-many or many-to-many join. The table shown by ArcMap is functionally a one-to-one or many-to-one set of records, meaning that new records are not created in the parent table to match all of the records in the joined table. For all intents and purposes it is hiding from you the true number of denormalized records that would result from the full output of that relational join for performance reasons.

However, when you do an export the true denormalized record set of the one-to-many or many-to-many records is generated, which inserts records that you would not see in a layer attribute join. This is a desired behavior, since this is the best way to make ArcMap behave like Access when outputting a result from a one-to-many or many-to-many relationship. The export increases the number of records to convert these relationships to a true one-to-one output. Creating a one-to-one representation of the data in the ?-to-many relationships is often useful for a variety of analysis purposes, and I have made use of this export behavior many times.

The difference in your results is exposing an erroneous assumption on your part that the Census Block shape file has only unique values for each Census Block field value. If you do a Summary of your Census Block field in the Census Block table you almost certainly will discover their are duplicate values for one or more of your Census Blocks. If even one Census Block value is duplicated in the shape file that should only have unique values it will double the number of records in the export for that value, and if it is duplicated on 3 features it will triple the values in the output of the export for that value. So if the shapefile that allows duplicate values has 100,000 features with that Census Block value you will end up with 100,000 more records than you expect when you do an export if that value is duplicated and not actually unique.

You need to clean up your features to merge together all parts of the Census Block into a single multipart feature to actually have a many-to-one relationship like the Attribute Join shows you. The Dissolve tool can do the merge and output a new feature class or you can Edit the existing FC by using the Merge option under the Editor button when you have selected all features for a single Census Block value. That will make that shapefile conform to your relationship assumption and it will behave the same as the Attribute Join when you do an export, since then it will create a many-to-one join and not a many-to-many join with denormalized records hidden by the Attribute Join.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks. I don't fully understand your reply, specifically regarding denormalization, but I do know that my data contains duplicates. That is the whole point - I am using an attribute join to get rid of duplicates, so that only one instance of each unique Census block results.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

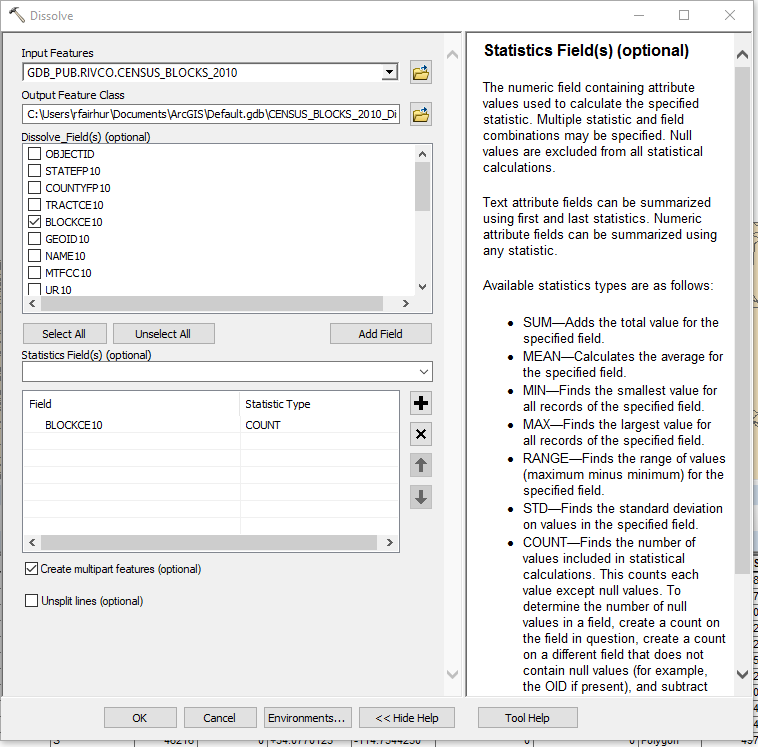

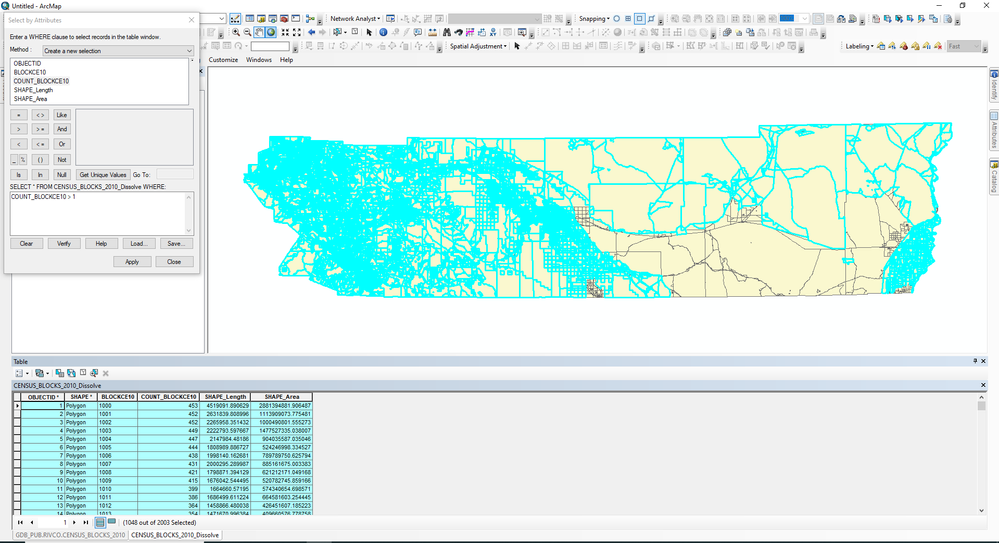

Use the Dissolve tool (Data Management Tools, Generalization Toolbox) on the shapefile that is only supposed to have one shape for each Census Block. Only use the Census Block field as a Dissolve field in the tool and add the Census Block field as a Statistics field with a Statistics Type of Count The output will have a single shape for each Census Block and export the results you are are wanting. Anything in the output Count field with a value greater that 1 was split into more than one piece in your original Census Block shapefile. Below is how I would set up the tool for my Census Block feature class and the output with all Census Blocks that collapsed from many features for those Census Block values to a single feature.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

No, I don't want or need splitting or dissolving of Census block boundaries. The two files both contain identical 2020 Census block boundaries - one with 519,723 unique features (one feature for each unique Census block) and containing population, households, and housing units data in the attribute table; the other with 1,054,633 features (which include duplicate features for each Census block) and containing broadband data in the attribute table. I want to calculate how many households "have access to" any kind of broadband over 200kbps (the FCC standard). If I join the Census data to the broadband data, the households in each Census block will be counted multiple times when I sum that field. So I must join the broadband data to the Census data so that the households in each Census block get counted only once. However, the number of features joined varies depending on whether I join shapefile-to-shapefile or attribute table-to-shapefile. I have already determined that the method which yields the correct results is to join the broadband attribute table to the Census shapefile. I have done this same exercise with smaller data sets in the past and got the same results with both methods. My question is "why is it not working now?"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Your Census Block feature are not unique in the shapefile or the dbf table that you say has only one feature/record per Census Block value. The only way I will accept that you have proven me wrong is if you do the Dissolve I have shown you or you do a Summary of the Census Blocks and find that the count of every Census Block value is 1. If you refuse to do that your on your own to figure this out. But I can assure you that the problem is not with the software, it is with your unproven assumptions about your data.

A difference in the presence or absence of an FID field in the shapefile and table is another factor that can affect the export behavior, but it would be unusual for either if those file types to lack an FID field.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I reexamined your methodology and think there my be an alternative explanation of this behavior that still involves the difference in the behavior of a layer attribute join and the export of that join. The Attribute Join of a layer in Arcmap is in reality a one-to-one join that only considers the first record in the one-to-many relationship when it applies the definition query. So if the first record it encounters does not meet your query criteria the record is filtered out, even if the second record of the join would have met your criteria. However, an export examines all records in the joined table and will include matches that fail to meet your criteria on the first record, but succeed on the second record or any other records than the first. The export may also create duplicates of the Census Blocks if more than one record in the join table meets your query definition (if the inputs of the join came from the same file geodatabase this duplication of records would definitely occur). Both methods can produce incorrect results for what you want to achieve, which makes the use of ?-to many joins unreliable and unsuitable for this kind of analysis.

The proper way to do this analysis to ensure all records that meet your criteria are included and no duplication happens is to do the following steps:

Create a definition query in the broadband shapefile to show only Census blocks at 25/3 or greater. This reduces the size of the attribute table from 982,015 records to 654,582 records. Perform a Summary on the Census Blocks field of that shapefile to get a table that contains only one record for each unique Census Block value. You could include the first and last or min and max values in the 24/3 fields in the output, but that is optional. Then perform an attribute join of the Census Block shapefile as the target table to the Summary output as the join table, keeping only joined records. Both the Attribute Join and the export will show the same number of records that the Summary table contains (provided all of the Census Block shapefile features are in fact unique) since both will be the output of a true one-to-one join. This methodology will reliably and consistantly give the sum of households for all Census Blocks that actually meet your criteria.

MS Access would also require you to do the same step of creating a Summary query first, and then using that summary output for creating a one-to-one relationship with the Census Blocks in order to get the sum of households you want, so this is the standard database methodology for solving this problem. MS Access is incapable of providing you with the sum of households you want directly from any ?-to-many relationship.

- « Previous

-

- 1

- 2

- Next »

- « Previous

-

- 1

- 2

- Next »