Is it possible to create a feature set from feature service objects selected by an area of interest?

Can anyone out there help me?

I'm generating a feature set for display and analysis in ArcGIS 10.2.2 from a large ESRI feature class, and have written Python code to do this using the standard source URL and a query of this kind:

RestURL = "<my URL>"+ service['name'] +"/FeatureServer/0/query"

where = '1=1'

fields = '*'

query = "?where={}&outFields={}&returnGeometry=true&f=json&token={}".format(where, fields, token)

fsURL = RestURL + query

While this works, the process is slow, and I am only interested in a small subset of these features. Is there a way to build an area of interest into the query so that only that subset is returned?

Any advice at this stage would be really helpful.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

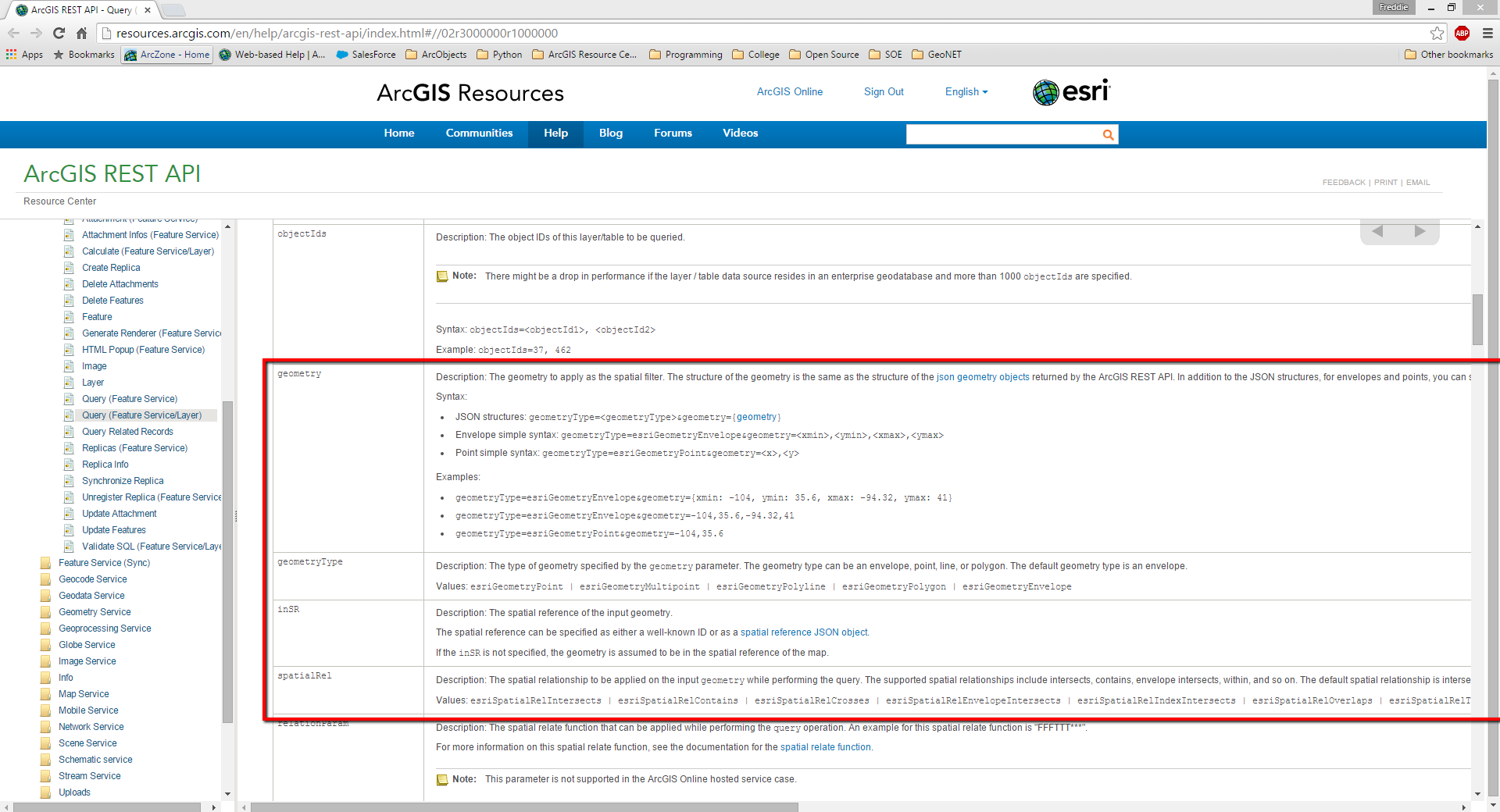

Have you tried supplying a spatial filter in your REST call?

ArcGIS REST API: Query (Feature Service/Layer)

http://resources.arcgis.com/en/help/arcgis-rest-api/index.html#//02r3000000r1000000
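
As a rough illustration of the spatial filter suggested above, the sketch below adds an envelope (bounding box) to the same kind of query string used in the question. The `geometry`, `geometryType`, `inSR` and `spatialRel` parameters are taken from the Query (Feature Service/Layer) documentation; the function name, the placeholder host and the coordinates are made up for illustration and are not from the original post.

```python
try:
    from urllib import urlencode        # Python 2.7 (ArcGIS 10.x)
except ImportError:
    from urllib.parse import urlencode  # Python 3


def build_aoi_query_url(query_url, token, envelope, wkid=4326,
                        where="1=1", out_fields="*"):
    """Return a query URL restricted to features intersecting an envelope.

    envelope is (xmin, ymin, xmax, ymax) in the spatial reference `wkid`.
    """
    params = [
        ("where", where),
        ("outFields", out_fields),
        # Envelopes can be passed in the simple comma-separated form
        ("geometry", ",".join(str(v) for v in envelope)),
        ("geometryType", "esriGeometryEnvelope"),
        ("inSR", wkid),
        ("spatialRel", "esriSpatialRelIntersects"),
        ("returnGeometry", "true"),
        ("f", "json"),
        ("token", token),
    ]
    # urlencode also escapes characters such as '=' and '*' in the values
    return query_url + "?" + urlencode(params)


# Hypothetical host, service and area of interest
url = build_aoi_query_url(
    "http://example.com/arcgis/rest/services/demo/FeatureServer/0/query",
    token="<token>",
    envelope=(130.0, -20.0, 135.0, -15.0),
)
```

A URL built this way could be used in place of `fsURL`, so the service only returns features that intersect the envelope.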

Thanks Freddie. I'll follow up.

Thanks Freddie for your suggestion.

To give you more background, I'm new to this, am filling in the gaps, and have developed the following code to retrieve the total feature service content:

RestURL = "http:///arcgis/rest/services/"+ "richard/ILUA_nat_svc" +"/FeatureServer/0/query"

where = '1=1'

fields = '*'

query = "?where={}&outFields={}&returnGeometry=true&f=json&token={}".format(where, fields, token)

fsURL = RestURL + query

fs = arcpy.FeatureSet()

fs.load(fsURL)

This works fine but its slow speed makes it impractical.

I have also tried an approach along the lines of your suggestion, passing an overlay polygon as a JSON string (fGeom) in the request parameters:

params = urllib.urlencode({'f': 'json', 'geometryType': 'esriGeometryPolygon',

'geometry': fGeom, 'spatialRel': 'esriSpatialRelIntersects',

'where': '1=1', 'outFields': '', 'returnGeometry': 'true'})

req = urllib2.Request(RestURL,params)

response = urllib2.urlopen(req,"?f=json&token=" + token)

For some reason this request fails. I'm also not sure whether the results would need to be loaded differently (should I ever get it to work).

Do you have any thoughts?

Regards
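
One possible cause, offered as a guess rather than a diagnosis: in `urllib2`, the second argument to `urlopen` is the request body, so passing `"?f=json&token=..."` there would replace the encoded spatial parameters rather than add the token to them. `outFields` is also empty in the snippet above. The sketch below puts the token in the same parameter set as the geometry and checks the response for an `error` object, which ArcGIS REST services generally report inside the JSON body. The function names and the sample polygon are hypothetical.

```python
import json

try:
    from urllib import urlencode        # Python 2.7 (ArcGIS 10.x)
except ImportError:
    from urllib.parse import urlencode  # Python 3


def build_polygon_query_body(rings, wkid, token, where="1=1", out_fields="*"):
    """Encode a POST body that filters features by an Esri JSON polygon."""
    polygon = {"rings": rings, "spatialReference": {"wkid": wkid}}
    params = [
        ("f", "json"),
        ("token", token),  # token travels with the other parameters
        ("where", where),
        ("outFields", out_fields),
        ("geometry", json.dumps(polygon)),
        ("geometryType", "esriGeometryPolygon"),
        ("spatialRel", "esriSpatialRelIntersects"),
        ("returnGeometry", "true"),
    ]
    return urlencode(params)


def parse_query_response(text):
    """Return the list of features, or raise if the service reported an error."""
    result = json.loads(text)
    if "error" in result:
        raise RuntimeError("Query failed: {}".format(result["error"]))
    return result.get("features", [])


# Hypothetical area of interest: one closed ring of [x, y] pairs
aoi_rings = [[[130, -20], [130, -15], [135, -15], [135, -20], [130, -20]]]
body = build_polygon_query_body(aoi_rings, 4326, "<token>")

# With urllib2 (Python 2.7) the request would then be sent as:
#   response = urllib2.urlopen(urllib2.Request(RestURL, body))
#   features = parse_query_response(response.read())
```

Checking for the `error` key first makes it easier to tell a rejected token or malformed geometry apart from a query that simply matched no features.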

Where do you have the data hosted? What is the speed you're seeing to execute the query? And could you either provide a sample of your data to test against or describe it enough so that I can create similar data on my end?

Thanks for the reply, Freddie.

The data are hosted as feature services. The Australian government may not be happy with me sharing the server details. However, identical data is publicly available from http://www.ntv.nntt.gov.au/intramaps/download/download.asp

ILUA_Nat has 1085 records

NTDA_Register_Nat has 271 records.

The process takes approx. 3 mins 48 secs to extract these 2 feature services to layers.

Below is some very basic code - apologies for the lack of commenting and general untidiness.

***************************

RestURL = "http:///arcgis/rest/services/"+ service['name'] +"/FeatureServer/0/query"

where = '1=1'

fields = '*'

query = "?where={}&outFields={}&returnGeometry=true&f=json&token={}".format(where, fields, token)

fsURL = RestURL + query

fs = arcpy.FeatureSet()

fs.load(fsURL)

lname = service['name']

FeatureLayer = arcpy.MakeFeatureLayer_management(fs,lname[lname.find("/")+1:])

NewLayer = arcpy.mapping.Layer(lname[lname.find("/")+1:])

arcpy.mapping.AddLayer(df,NewLayer)

**************************************************************

The aim is ultimately to integrate feature services into broader code that looks for and computes overlap statistics for a standard set of data, including the above. I am hoping to replace the current overlap and intersect processes with equivalent processes on the feature service side, but still provide detailed reports.
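
Where a report only needs to know how many features overlap an area, some of that work can be pushed to the service: the Query operation accepts `returnCountOnly=true`, which returns a count of matching features instead of the features themselves. A minimal sketch, assuming an envelope area of interest; the function names and sample values are illustrative only.

```python
import json

try:
    from urllib import urlencode        # Python 2.7 (ArcGIS 10.x)
except ImportError:
    from urllib.parse import urlencode  # Python 3


def build_count_params(envelope, wkid, token, where="1=1"):
    """Encode query parameters that ask only for the number of
    features intersecting an envelope (xmin, ymin, xmax, ymax)."""
    params = [
        ("f", "json"),
        ("token", token),
        ("where", where),
        ("geometry", ",".join(str(v) for v in envelope)),
        ("geometryType", "esriGeometryEnvelope"),
        ("inSR", wkid),
        ("spatialRel", "esriSpatialRelIntersects"),
        ("returnCountOnly", "true"),
    ]
    return urlencode(params)


def parse_count(text):
    """Extract the feature count from a returnCountOnly response."""
    result = json.loads(text)
    if "error" in result:
        raise RuntimeError("Count query failed: {}".format(result["error"]))
    return result["count"]


# Hypothetical area of interest and token
count_params = build_count_params((130.0, -20.0, 135.0, -15.0), 4326, "<token>")
```

The same parameters could be sent to each service's `/FeatureServer/0/query` endpoint in turn, with the full features fetched only for services whose count is non-zero.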