- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Pro

- :

- ArcGIS Pro Questions

- :

- Re: Tab delimited import stripping leading zeros

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi All,

I have a tab delimited file that I am the using table-to-table tool to bring into Pro. I change one of the fields to type "text" (since all the records in that field are numbers) before running the tool. Opening the new table in Pro show the field as type text but all the leading zeros have been stripped. Just checked, and I get the same error with ArcMap. I can import the file into Excel and get the correct formatting, I'd like to skip the extra step. Am I doing it wrong, or is this a bug that didn't get fixed in Pro?

Thanks,

Tycho

Solved! Go to Solution.

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

From my experience, importing text-based data is a gamble at best, and I rarely get it to go off without a hitch or requiring some post-processing. The tool tries to save you time and guess at the field types, but I wish it wouldn't.

If a bit of Python isn't out of the question, I'd suggest reading the file into a DataFrame using pandas. It tends to do a much better job with its inferences, and gives you more control over the fields and data types. Plus with the ArcGIS Python API's DataFrame.spatial capabilities, you can write the results directly to a new or existing feature class.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

From my experience, importing text-based data is a gamble at best, and I rarely get it to go off without a hitch or requiring some post-processing. The tool tries to save you time and guess at the field types, but I wish it wouldn't.

If a bit of Python isn't out of the question, I'd suggest reading the file into a DataFrame using pandas. It tends to do a much better job with its inferences, and gives you more control over the fields and data types. Plus with the ArcGIS Python API's DataFrame.spatial capabilities, you can write the results directly to a new or existing feature class.

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

One can also use MS Excel?

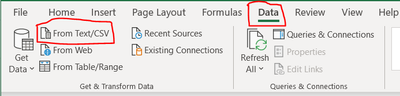

1. Open a blank Excel Sheet. Go to Data tab > From Text/ CSV.

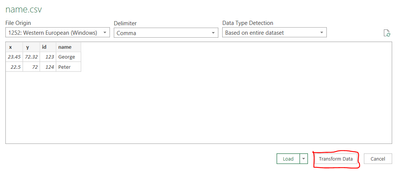

2. Select the CSV > Click Import

3. In the new dialog box, click Transform Data. You may choose "Tab" for Delimiter, if required.

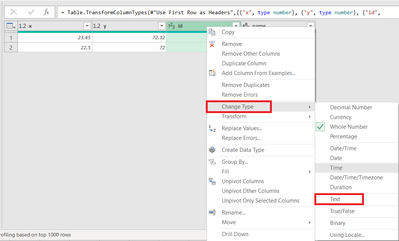

4. In "Transform Data" dialog box, right-click the field (you want the leading zeros), > Change Type > Text.

Click "Replace Current" for Change Column Type.

5. Click Close and Load.

6. Save the Excel file.

7. In ArcGIS Pro, execute Excel to Table.

*Oops. I should have read your post completely. 😅

Think Location

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

That's the step he's trying to eliminate from his process, though. Also, Excel doesn't really enforce data types, no matter what the column formatting is set to, and Pro is still just "guessing" on import.

@TychoGranvillewhere's the initial source of this data, by the way? Do you have any control over its format, or other means of accessing it?

Kendall County GIS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

yes. I realized that.

It's really embarrassing 😶

Think Location

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

The file is exported from my Clerk's software, there are no options for any formatting. After I do my edits it gets fed back into the same software so I'm trying to limit the number of times/software I use to tweak the format - errors always seem to creep in. I know a smattering of python, this'll give me a chance to learn more 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

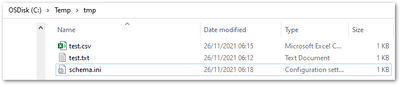

I'm not sure how releevant this is for tab-delimited data, but I've noticed on occasion that a csv import (ArcMap 10.7) results in the SILENT creation of a schema.ini, which affects how data is imported. An example of what Table to Table created :

[BNE_GEOHUB_DATABASE_GIS.csv]

Col3=Project_No Double

Col10=DateDrilled Date

Col13=Bearing Double

Col14=Dip Double

I'd check to see if one exists, as these things persist and do not update if you change the structure of your input data. If you don't notice or edit schema.ini it can screw up all future imports if your column order or data types are different.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

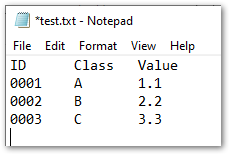

Anything special one has to do to check if this schema.ini is getting created? Will this file be visible to Windows File Explorer in the same location as my CSV?

I'm having the same problem using Table to Table in Pro 2.7. I have already transformed the data in Excel, as described above, so that my ID field is text with leading zeros saved as a .CSV. I've even opened the CSV in Notepad to verify that the values are being stored with the leading zeros. When I use the Table to Table tool in ArcGIS Pro, I use Field Map->Properties to force this field to come in as type text. Even after all these steps, my leading zeros get truncated in the resultant table!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

schema.ini is visible in the file system if it is created. It's not always created though, but you can create it manually.

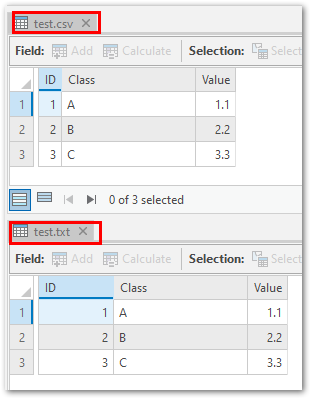

For example, consider two test files, one comma and one tab delimited, each with with one attribute with leading zeros:

Without a schema.ini, in Pro 2.8.3, you get this - no leading zeros for either:

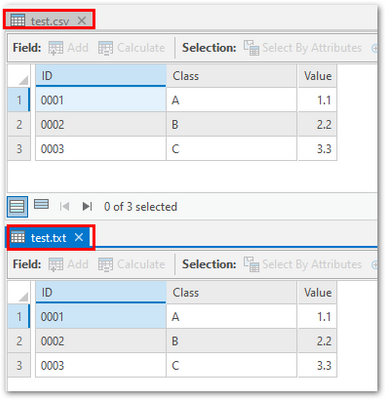

Create a schema.ini file in the same folder as the data, and explicitly define the data type for the attribute with leading zeros as Text. You do not need to define the data type for all columns, just the columns you wish to change the type of:

Then reopen the data in Pro, you'll see the data typing is honored:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Works perfect, good solution