- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Pro

- :

- ArcGIS Pro Questions

- :

- Re: Selecting the most recent records based on uni...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Selecting the most recent records based on unique values in another field

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Hi,

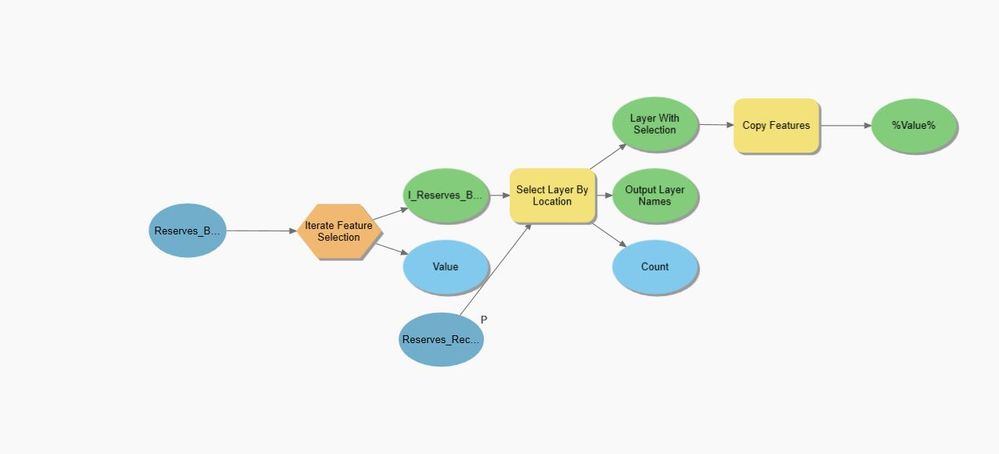

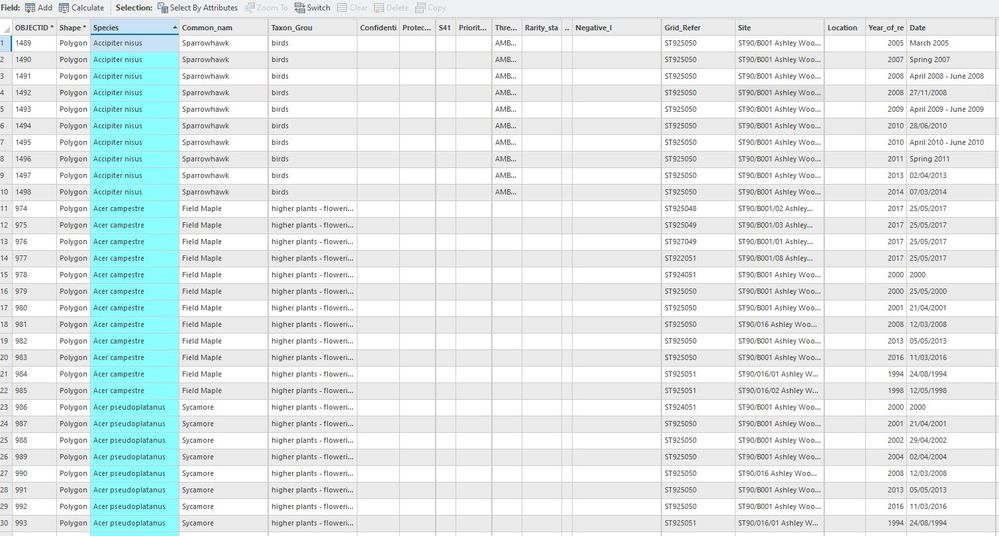

I have built the model in the picture, which works fine. But the next step I want to do is for each of the new feature classes that are created, I want only the most recent record of each unique record from the species field. But I want all the associated fields to come through into the new feature class. See table picture below, for example I only want the most recent record of Sparrowhawk.

I am not sure how to do this and if I can add it into the model as part of the process?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks @DavidPike and @RichardHowe for the collect value suggestions. How do I run the select by attributes tool, to select for max year or rec before collecting the values? I cant add a workspace as an input. If I was to collect the values and then run it, all I'd be doing is splitting and recombine the dataset. Maybe i need to go back to the original dataset before splitting them out into the individual sites?

Sorry this is bit painful! Feel free not to respond!

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

We already established that query wouldn't work on the initial dataset because you can't subquery the group. The split is important first so that then you can ask it for the newest value within each group.

If colect values isn't allowed as an input the "create feature layer" (a quick look suggest it might not be) then I go back to my original suggestion. Save what you have, create a brand new blank model and then drag and drop your saved model from catalog into the new model and iterate over the output gdb for each feature class and make those the inputs for the "create feature layer"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Still trying in model builder. The above I tried with just one of the feature classes I need iterate through to get to my result. So this will go through the input feature class and split by attribute on 'species', works they all end up in the output geodatabase as separate feature classes. But then I need to iterate through these using the select by attribute to get the max year of record. But it fails at this point as the output of iterator won't go in. It says 'table not found'.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

You will have to create a layer from the feature classes ("create feature layer" GP tool) then use the select by attribute on that

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Yeh I had a look at this and you'll need to go with Richard's suggestion of a submodel as you can't have 2 iterators. The collect values wont work so you need to have an iterator to go over the feature classes in that workspace (output from split by attributes). The iterator would run that select by attributes query for each feature class (which Richard supplied earlier) -> Export to new feature classes -> Collect values -> Merge.

This kinda stuff is where you'd really want Python instead.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

This is likely not the fastest, best or most logical way to do it, but it should do the job.

import arcpy

#input feature class

in_fc = r'C:\path to your FC'

#dictionary to store objectid of newest sighting date for each species

date_dict = {}

#cursor to iterate through each row in fc

#if date is more recent than current species value for key

#update to the matching oid of that date in dictionary

with arcpy.da.SearchCursor(in_fc, ['OBJECTID', 'your species field', 'your date field']) as cursor:

for row in cursor:

#unpack row

oid, species, date = row

#if no dictionary value yet, add 1st entry

if species not in date_dict:

date_dict[species]=[date, oid]

#check existing date is older, if so - replace it

elif date > date_dict[species][0]:

date_dict[species]=[date, oid]

#create string of object ids for selection query input

oid_list = [value[1] for value in date_dict.values()]

selection_string = '(' + ','.join(map(str,oid_list)) +')'

#turn into feature layer for selection

feature_layer = arcpy.MakeFeatureLayer_management(in_fc, "feature_layer")

#select by attributes using oid list/string

selection = arcpy.management.SelectLayerByAttribute(feature_layer, "NEW_SELECTION", f'OBJECTID IN {selection_string}')

#save your new fc

out_fc = r'C:\path to new fc output'

arcpy.CopyFeatures_management(selection, out_fc)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

David, some Python-related thoughts on your code:

- Python defaultdict removes the need to check for a keys existence before comparing a key's value to a new value.

- Python min and max functions are purpose-built for comparing values and selecting the lowest or highest

- Tuple/list unpacking can be done in the for loop, a separate line isn't needed.

- Generator expressions were partially introduced to reduce the need to use filter() and map(), whose functional-programming style/nature somewhat contrasts with many other aspects of the Python language.

import arcpy

import datetime

from collections import defaultdict

# input feature class

in_fc = r'C:\path to your FC'

# default dictionary to store objectid of newest sighting date for each species

date_dict = defaultdict(lambda: (datetime.datetime.min, -1))

# cursor to iterate through each row in fc

# store the maximum (most recent) date for each species and its ObjectID

with arcpy.da.SearchCursor(in_fc, ['OBJECTID', 'your species field', 'your date field']) as cursor:

for oid, species, date in cursor:

date_dict[species] = max(date_dict[species], (date, oid))

# create sql statement for selecting ObjectIDs

sql = f"OBJECTID IN ({','.join(str(oid) for date,oid in date_dict.values())})"

# turn into feature layer for selection

feature_layer = arcpy.MakeFeatureLayer_management(in_fc, "feature_layer")

# select by attributes using oid list/string

selection = arcpy.management.SelectLayerByAttribute(feature_layer, "NEW_SELECTION", f'OBJECTID IN {selection_string}')

# save your new fc

out_fc = r'C:\path to new fc output'

arcpy.CopyFeatures_management(selection, out_fc)- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thanks Joshua, much neater (and optimised). I wonder about the (date, oid) tuple and whether something like having 'date' and 'oid' as keys in their own dictionary (these keys being values of date_dict..) and if that would be faster or slower.

My only other thoughts are another idea of turning the feature class into some data format that could work with the SQL subquery with max(date) and groupBy species, but the juice might not be worth the squeeze on that one.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I did a quick test using nested dicts instead of tuples in a dict, and it ran about 3x faster than my code. But, then I refactored my tuple-in-dict code to cut out the max() function and use an if to compare the first value only, and it ran a bit faster than nested dicts. I have some ideas why the max() function is noticeably slower, but regardless the cursor will be the slowest part of the code by an order of magnitude or more.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Here's a way to do it using Summary Statistics, joins, and a definition query. It has more steps than I'd like. And it outputs and utilizes static tables. But it does seem to work.

Steps:

Create a Summary Statistic table that groups the rows by species and includes a max date field.

Join from SPECIES_RECORDS to MAX_DATE_PER_SPECIES where T_SPECIES=T_SPECIES. Uncheck the "Keep all input records" option.

Hide all the join fields except MAX_t_date.

Create a definition query on SPECIES_RECORDS.

species_records.t_date = max_date_per_species.MAX_t_date

That gives us the latest rows per species.

But there are multiple rows with the same date per species. If that's ok, then we can stop here. But if we want to break the ties, then we can do some more steps.

Use the SPECIES_RECORDS table (which still has a join and a definition query) in Summary Statistics.

I chose to use the first unique ID per species. But I could have chosen the last. It doesn't matter, since it's arbitrary. When there are multiple rows per species with the same date, we choose an arbitrary row to break the tie. We don't have a PRIORITY field, or something like that, that we can use further refine the tie breaking.

Join from SPECIES_RECORDS to FIRST_UNIQUE_ID_PER_SPECIES where T_UNIQUE_ID = T_UNIQUE_ID. Uncheck the "Keep all input records" option.

Hide all the joined fields.

Now we have the latest row per species, and ties are excluded (arbitrarily).

That seems to work. Ideally, someone will think of a simpler way.

Source: GIS Stack Exchange - File Geodatabase SQL expression to get greatest n per group