Detect Objects Using Deep Learning on 0% after over 15 hours

Good morning,

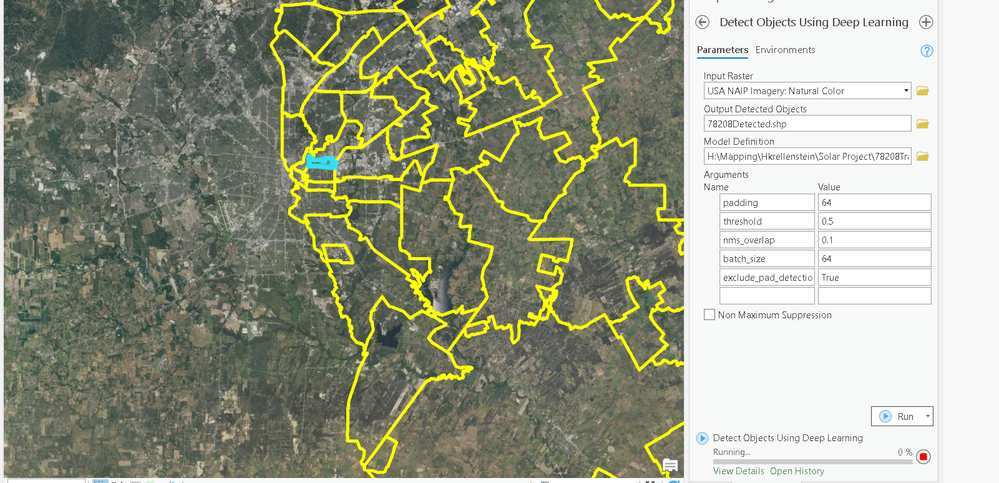

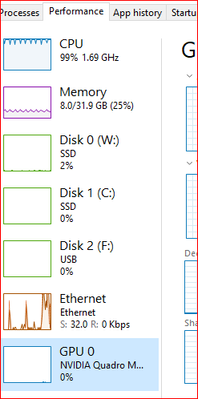

I am trying to run the Detect Objects Using Deep Learning tool to detect solar panels in NAIP imagery. I am using ArcGIS Pro 2.7, and I have selected one small zip code area in which to detect the objects. However, the tool has been running for over 15 hours and is still at 0% complete. My CPU is at almost 100%, so the tool is definitely running. Has anyone else had this problem, or does anyone know of a solution? Please help if you can!

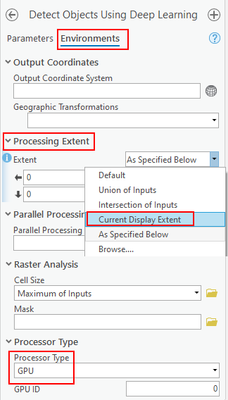

Even though you have selected a zip code, the tool doesn't know that - it is trying to process the entire image. You have a couple of options: Clip out just the area you want to process and use that in the tool, or use the Extent settings in the Environments tab. The easiest option may be to zoom in to your required area and choose Current Display Extent.
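If you run the tool from a script or notebook rather than the dialog, the same extent restriction can be sketched as below. This is only a sketch: it assumes `arcpy.env.extent` accepts a space-delimited `xmin ymin xmax ymax` string in the raster's coordinate system. The helper names `extent_string` and `limit_processing_extent` and the coordinates are hypothetical, and a stand-in object replaces `arcpy.env` so the snippet runs outside ArcGIS Pro.

```python
from types import SimpleNamespace


def extent_string(xmin, ymin, xmax, ymax):
    """Format a bounding box as the string 'xmin ymin xmax ymax'."""
    if xmin >= xmax or ymin >= ymax:
        raise ValueError("extent must have xmin < xmax and ymin < ymax")
    return f"{xmin} {ymin} {xmax} {ymax}"


def limit_processing_extent(env, bbox):
    """Set the processing extent on an environment object such as arcpy.env."""
    env.extent = extent_string(*bbox)
    return env.extent


# Stand-in for arcpy.env so the sketch runs without ArcGIS Pro.
# Inside Pro you would `import arcpy` and pass arcpy.env instead.
demo_env = SimpleNamespace()

# Hypothetical bounding box of the zip code area, in the raster's coordinate system.
limit_processing_extent(demo_env, (500000, 4100000, 505000, 4105000))
print(demo_env.extent)
```

Setting the extent before calling the tool should have the same effect as choosing an extent in the Environments tab, so only the tiles inside the box are processed.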

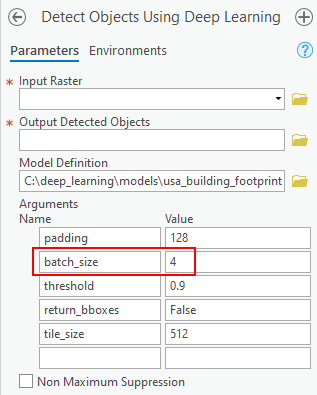

Using the CPU for deep learning will be really slow anyway. Do you have a dedicated graphics card (preferably NVIDIA)? If so, set the Processor Type to GPU in the Environments tab. You may also have to reduce the Batch Size parameter to fit the memory available on your graphics card (probably start at 4 and reduce it if you get any out-of-memory errors).
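The "start at 4 and reduce" advice can be sketched as a small retry loop. This assumes the failure surfaces as a Python exception whose message contains "out of memory", which may not match how the geoprocessing tool actually reports it. `run_with_batch_backoff` and `fake_detect` are hypothetical names, and `fake_detect` only simulates the real tool call.

```python
def run_with_batch_backoff(run, start_batch_size=4):
    """Call run(batch_size), halving batch_size after each out-of-memory error."""
    batch_size = start_batch_size
    while batch_size >= 1:
        try:
            return batch_size, run(batch_size)
        except Exception as err:
            # Only retry on memory errors; anything else is a real failure.
            if "out of memory" not in str(err).lower():
                raise
            batch_size //= 2
    raise RuntimeError("out of memory even at batch size 1")


def fake_detect(batch_size):
    """Hypothetical stand-in for the tool: pretends only 2 tiles fit in GPU memory."""
    if batch_size > 2:
        raise RuntimeError("CUDA out of memory")
    return f"ran with batch size {batch_size}"


print(run_with_batch_backoff(fake_detect))
```

In practice you would replace `fake_detect` with a function that runs the detection tool with the given batch size.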

Tim, I have a related question regarding CPU/GPU performance. I'm running the Train Model for Deep Learning tool in ArcGIS Pro 2.8 with the Processor Type set to GPU, but my Task Manager shows almost no GPU activity and heavy CPU and Ethernet activity. (I'm using Remote Desktop to connect to a PC with an NVIDIA graphics card, which we use specifically for the NVIDIA capability.) I have been at Epoch 1 (0%) for 3 hours. The activity in Task Manager tells me something is going on, but it seems I am getting very little use out of the NVIDIA GPU.

Thanks for any input you or anyone else may have.

Hey @MartyRyan

First of all, make sure you have installed the Pro 2.8.1 patch that just came out - it fixes an issue with Deep Learning and GPUs.

Pro will automatically drop back to using the CPU if the GPU is not detected. First find out your GPU model and memory - the easiest way is in the NVIDIA Control Panel. Click on System Information in the lower left.

The important info is the card model and dedicated memory. The requirements section on the Esri Deep Learning GitHub site recommends a minimum of 2 GB dedicated memory (but you really need at least 4 GB to do anything serious). You also need CUDA Compute Capability 3.5 minimum, with 6.1 or higher recommended. Find your GPU on the NVIDIA CUDA GPUs page and check its compute capability.
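The same check can be sketched in code using the thresholds quoted above (compute capability 3.5 minimum and 6.1 recommended, 2 GB minimum and 4 GB practical). The function names and verdict strings are hypothetical, and the PyTorch lookup is skipped if PyTorch or a CUDA device is not available.

```python
MIN_CAPABILITY = (3, 5)          # minimum CUDA compute capability
RECOMMENDED_CAPABILITY = (6, 1)  # recommended compute capability
MIN_MEMORY_GB = 2                # stated minimum dedicated memory
PRACTICAL_MEMORY_GB = 4          # "at least 4 GB to do anything serious"


def assess_gpu(capability, memory_gb):
    """Classify a GPU from its (major, minor) capability and memory in GB."""
    if capability < MIN_CAPABILITY or memory_gb < MIN_MEMORY_GB:
        return "below minimum"
    if capability >= RECOMMENDED_CAPABILITY and memory_gb >= PRACTICAL_MEMORY_GB:
        return "recommended"
    return "meets minimum"


def local_gpu_report():
    """Describe CUDA device 0 as PyTorch sees it, if PyTorch and a GPU exist."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    if not torch.cuda.is_available():
        return "no CUDA device detected"
    props = torch.cuda.get_device_properties(0)
    memory_gb = props.total_memory / 1024 ** 3
    verdict = assess_gpu((props.major, props.minor), memory_gb)
    return f"{props.name}: compute {props.major}.{props.minor}, {memory_gb:.1f} GB, {verdict}"


print(local_gpu_report())
```

Run it in a Pro notebook to see what the deep learning environment itself detects, rather than relying only on the control panel.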

If your card meets the requirements, then I would recommend updating to the latest graphics card drivers if they haven't been updated in a while. Now we'll make sure it is recognised in ArcGIS Pro. Open Pro and open a new Notebook. Paste the following code into a cell and run it.

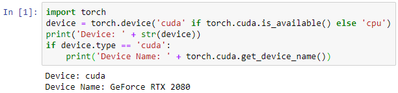

    import torch

    # Use the GPU if PyTorch can see a CUDA device, otherwise fall back to the CPU
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    print('Using Device: ' + str(device))

    if device.type == 'cuda':
        # Name of the current CUDA device
        print('Device Name: ' + torch.cuda.get_device_name())

If the output says cuda and prints your graphics card model you should be ok. If it says cpu then it wasn't recognised.

See how you go. There could be technical problems with the card or drivers, or even issues with your Pro install.

Hi Tim,

Can you point me to where I can get the Pro 2.8.1 patch? I'm just running the 2.8 version from GitHub.

Thanks!

Never mind, I was thinking it was an update for the deep learning tools, not an ArcGIS Pro update. Sorry, figured it out.

Tim,

Sorry I haven't responded sooner - I checked, and I am seeing this when running that code:

    Using Device: cuda
    Device Name: Quadro M4000

Update: I have more questions regarding GPU/CPU usage after a failure of the Train Model tool. I am running Export Training Data for Deep Learning again. The Train Model failure was reported as:

RuntimeError: cuda runtime error (101) : invalid device ordinal at ..\torch\csrc\cuda\Module.cpp:59

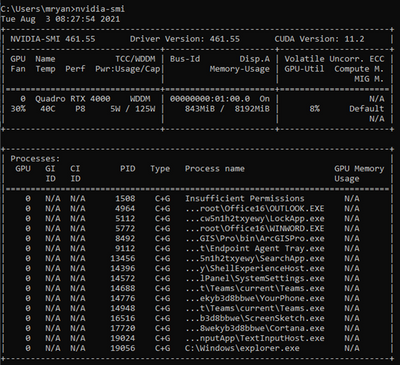

I chose GPU for Processor Type and "1" for GPU ID (for the NVIDIA GPU, as shown below).

The tool ran for 42 minutes before showing this error. I have tried to ensure I am identifying the correct GPU in the Train Model tool - I thought I chose "1" correctly. However, when I check using nvidia-smi in Command Prompt, I get:

It appears here that the GPU is identified as "0".

How do I reconcile this apparent conflict? How do I ensure the NVIDIA GPU is being used appropriately and efficiently? I am getting very slow performance in the Export Training Data tool (about 1% progress every hour) and am not sure my NVIDIA GPU is being used nearly enough - its usage never goes above 11% or so. I need to know the correct GPU setting when I train my next model.

Thanks for any help

Not sure why nvidia-smi is showing 0 for everything. The Export Training Data tool doesn't use the GPU; only Train Model and the inference tools (Detect Objects, Classify Pixels, etc.) do.

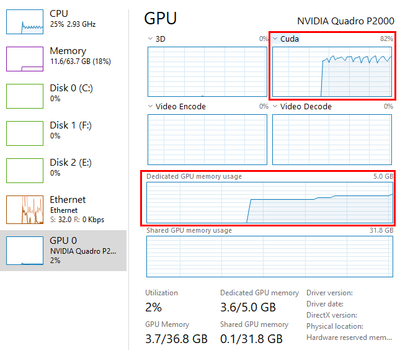

I generally just use the Performance tab in Windows Task Manager to monitor. You can set one of the graphs to show CUDA stats to see which GPU is being used. Dedicated GPU memory is the other thing to watch - you can increase the batch size to use more memory, which may make training faster (I don't know if this is actually the case or not).
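On the GPU ID question: CUDA counts only NVIDIA devices and numbers them from 0, while Task Manager numbers every display adapter. A card that Task Manager labels "GPU 1" can therefore be device 0 to CUDA, and an "invalid device ordinal" error means the requested index does not exist. That would be consistent with nvidia-smi listing the card as 0, although it is an assumption that the tool's GPU ID maps directly to the CUDA index. The sketch below lists what PyTorch can see and validates an ID before use. The function names are hypothetical.

```python
def check_gpu_id(gpu_id, device_count):
    """Return gpu_id if it is a valid CUDA ordinal, otherwise raise ValueError."""
    if device_count == 0:
        raise ValueError("no CUDA devices are visible")
    if not 0 <= gpu_id < device_count:
        raise ValueError(
            f"GPU ID {gpu_id} is invalid; valid IDs are 0 to {device_count - 1}"
        )
    return gpu_id


def list_cuda_devices():
    """Return the names of the CUDA devices PyTorch can see, in ordinal order."""
    try:
        import torch
    except ImportError:
        return []  # PyTorch not installed in this environment
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


names = list_cuda_devices()
for ordinal, name in enumerate(names):
    print(ordinal, name)
if not names:
    print("no CUDA devices found")
```

With a single NVIDIA card the list should have one entry at index 0, in which case 0 is the only ID that passes `check_gpu_id`.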

How many epochs are you training the model for? You should be able to see how long each epoch is taking (will be output below the cell in a notebook, or in the messages of the geoprocessing tool).

How many training samples do you have? More samples = longer training time.

Maybe start with a very small set of training data and a few epochs and run through the entire process choosing different options and checking what GPU is being used.
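The per-epoch timings mentioned above can be turned into a rough estimate of the remaining training time. This is a minimal sketch that assumes later epochs take about as long as the ones already completed. The function name and the sample timings are hypothetical.

```python
def estimate_remaining_minutes(epoch_minutes, total_epochs):
    """Estimate remaining training time from the durations of completed epochs."""
    if not epoch_minutes:
        raise ValueError("need at least one completed epoch")
    completed = len(epoch_minutes)
    average = sum(epoch_minutes) / completed
    # Never report a negative remainder if training ran past the planned count.
    return average * max(total_epochs - completed, 0)


# Hypothetical timings: three epochs finished, 20 planned in total.
print(estimate_remaining_minutes([12.0, 11.0, 13.0], 20))
```

A short trial run with a few epochs gives the timings to feed in before you commit to a full training run.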

Tim,

Thank you - this is very helpful and I will keep it in mind when training my next model. I am still running Export Training Data for Deep Learning (progress seems slow to me, but I have a lot more data, so I am not sure.) Everything is on my local machine, so I expect better performance when training a model and classifying when the time comes.