Buffer around features of varying geometry

Hey all,

I'm trying to come up with an easy way to buffer around a collection of features.

The use case is a site where we collected areas, lines, and points, and I'd like to buffer all of them into one area.

Is there a way to do this? One way I think it could work is buffering related features, but I'm not sure if that's possible.

Thanks!

Accepted Solutions

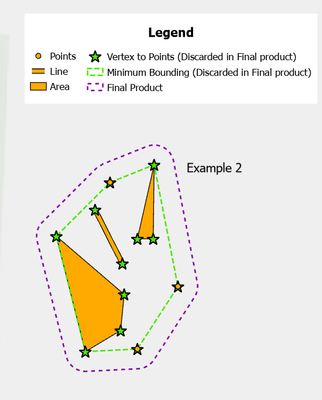

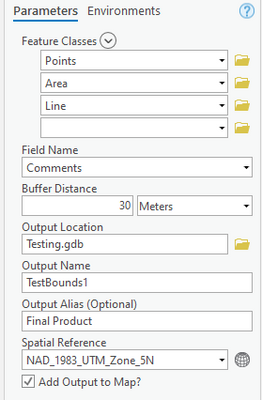

So, I ended up scripting it.

General workflow:

- Non-point features: run Feature Vertices To Points; point features: copy into a temporary feature class

- Combine all those points

- Minimum Bounding Geometry()

- Buffer()
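To illustrate the geometric idea behind the steps above (pool every vertex, then take a convex hull), here is a minimal pure-Python sketch. It is not the arcpy workflow itself: the coordinates are hypothetical, and `convex_hull` is a plain monotone-chain implementation standing in for Minimum Bounding Geometry with the convex hull option.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive means a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        # Drop points that would make a right turn or lie on a straight line.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


# Hypothetical site: vertices pooled from a polygon, a line, and two points.
polygon_vertices = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
line_vertices = [(1.0, 1.0), (6.0, 1.5)]
point_features = [(2.0, 5.0), (2.0, 2.0)]

site_hull = convex_hull(polygon_vertices + line_vertices + point_features)
print(site_hull)
```

The hull is the minimum site boundary; the final Buffer step then pushes that outline outward by the chosen distance.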

All in all, it's pretty fast. It'd be faster if I didn't have some validation going to prevent errors.

- With 86 total features, the entire thing runs about 35-40 seconds.

- I'd like it to be faster, but it looks like the bottleneck is the clean-up process; each interim file takes about 3.5 seconds to delete, and there are 2+X interim files per run, where X is the number of input feature classes. So combining 3 feature classes means 5 interim files, or close to 20 seconds spent just cleaning.

I could definitely stand to figure out how to get the spatial reference to default to the map's spatial reference.
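One possible way to get that default, sketched under assumptions: the spatial reference parameter is made optional, an empty value arrives as `None` or an empty string, and the map object exposes a `spatialReference` property (worth checking against the `arcpy.mp` documentation for your ArcGIS Pro version). The helper name `resolve_spatial_reference` is hypothetical, and the stand-in objects below only exist so the sketch runs without arcpy.

```python
from types import SimpleNamespace


def resolve_spatial_reference(user_value, project):
    """Return the user's spatial reference, else the active map's, else None."""
    if user_value not in (None, ""):  # the user picked something in the tool dialog
        return user_value
    active_map = getattr(project, "activeMap", None)
    if active_map is None:  # e.g. no map view is open
        return None
    # Assumption: the map object carries a spatialReference property.
    return getattr(active_map, "spatialReference", None)


# Stand-ins for arcpy.mp.ArcGISProject("CURRENT"); the strings are placeholders.
fake_project = SimpleNamespace(activeMap=SimpleNamespace(spatialReference="EPSG:26912"))
no_map_project = SimpleNamespace(activeMap=None)

from_map = resolve_spatial_reference(None, fake_project)
from_user = resolve_spatial_reference("EPSG:4326", fake_project)
no_default = resolve_spatial_reference(None, no_map_project)
print(from_map, from_user, no_default)
```

In the script, the call would presumably look like `resolve_spatial_reference(arcpy.GetParameter(6), arcpy.mp.ArcGISProject("CURRENT"))`.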

I also had to manually prevent the user from using ObjectID, Shape, etc. as identifying fields. I'd definitely like to figure out how to just get them out of the field list in the first place.
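A rough sketch of filtering those fields out up front, rather than rejecting them after the fact. It assumes the field objects carry `.name` and `.type` attributes, as the objects returned by `arcpy.ListFields` do; the `Field` namedtuple, the `EXCLUDED_TYPES` set, and `candidate_name_fields` are hypothetical stand-ins so the example runs without arcpy.

```python
from collections import namedtuple

# Stand-in for the objects returned by arcpy.ListFields (only .name and .type are used).
Field = namedtuple("Field", ["name", "type"])

# Field types assumed to be unsuitable as a site identifier.
EXCLUDED_TYPES = {"OID", "Geometry", "GlobalID", "Guid", "Blob", "Raster"}


def candidate_name_fields(fields):
    """Return names of fields that could plausibly hold a site identifier."""
    return [
        f.name
        for f in fields
        if f.type not in EXCLUDED_TYPES
        and not f.name.lower().startswith("shape")  # Shape_Length, Shape_Area
    ]


sample_fields = [
    Field("OBJECTID", "OID"),
    Field("Shape", "Geometry"),
    Field("SiteName", "String"),
    Field("Shape_Length", "Double"),
    Field("GlobalID", "GlobalID"),
    Field("SiteNum", "Integer"),
]

usable = candidate_name_fields(sample_fields)
print(usable)
```

The resulting list could then feed a value list on the name-field parameter from the tool's validation code; that wiring is an assumption and is not shown here.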

Pictures:

Code:

I'm not sure what's allowed for posting files here, but I can wrap this all up in a toolbox for anyone who wants.

*Edited 3/9/22 to enforce a hard stop on shapefiles; the previous code ended up deleting them.

    #-------------------------------------------------------------------------------
    # Name:    SiteBuffers
    # Purpose: Create one unified buffer around features from different feature
    #          classes sharing the same field value.
    #          E.g. a buffer around a collection of points, lines, and polygons
    #          belonging to "Site 1001".
    #          This does not work on shapefiles.
    # Author:  Alfred Baldenweck, ALKA
    # Date:    March 9, 2022
    #-------------------------------------------------------------------------------
    import datetime
    from os.path import join

    import arcpy


    def main():
        '''Set-up'''
        inFCs = arcpy.GetParameter(0)
        nameField = arcpy.GetParameterAsText(1)
        buffDist = arcpy.GetParameter(2)
        outputLocation = arcpy.GetParameterAsText(3)
        outputName = arcpy.GetParameterAsText(4)
        lyrAlias = arcpy.GetParameterAsText(5)  # Optional parameter
        spatRef = arcpy.GetParameter(6)
        addOutput = arcpy.GetParameterAsText(7)

        if lyrAlias == "":  # If left blank, just call it the FC name.
            lyrAlias = outputName

        arcpy.env.workspace = outputLocation
        scratchDB = arcpy.env.scratchWorkspace  # Used to prevent issues when exporting to a shapefile.
        start = datetime.datetime.now()  # Begin time, used for tracking progress throughout.

        '''Validation'''
        outputName = arcpy.ValidateTableName(outputName)
        lowered = nameField.lower()
        if lowered in ("objectid", "globalid", "guid") or "shape" in lowered:
            arcpy.AddError(f"Field name \"{nameField}\" is invalid. Please choose a different field.")
            return

        fcList = []      # Unique FCs in the input. Prevents running twice if added from both the map and the GDB.
        reportShps = []  # Shapefiles that are skipped.
        reportHfcs = []  # Hosted feature classes that are skipped.

        for FC in inFCs:  # Find the data source for each item in the input.
            arcpy.AddMessage(f"{FC}")
            dataType = arcpy.Describe(FC).dataType
            if dataType == "FeatureClass":
                if "https://" in f"{FC}":  # Hosted check, part 1
                    reportHfcs.append(f"{FC}")
                else:
                    fcList.append(str(FC).replace("'", ""))  # Fixes issues with spaces in GDB names
            elif dataType == "ShapeFile":  # Shapefile check, part 1
                reportShps.append(f"{FC}")
            elif dataType == "FeatureLayer":
                if "https://" in FC.dataSource:  # Hosted check, part 2
                    reportHfcs.append(f"{FC}")
                elif ".gdb\\" not in FC.dataSource:  # Shapefile check, part 2
                    reportShps.append(f"{FC}")
                else:  # Anything left at this point should be a feature class.
                    fcList.append(FC.dataSource)
        arcpy.AddMessage(f" Just finished grabbing unique features: {datetime.datetime.now() - start}")

        # De-duplicate each list.
        fcList = list(set(fcList))
        reportShps = list(set(reportShps))
        reportHfcs = list(set(reportHfcs))
        reportSkips = []  # Invalid FCs (name field missing).
        cleanPoints = []  # Holds the vertex point feature classes.

        '''Begin work'''
        if len(fcList) == 0:
            arcpy.AddWarning("No valid feature classes were input.")
            return

        for FC in fcList:
            if nameField in [field.name for field in arcpy.ListFields(FC)]:  # Don't bother if the field doesn't exist.
                desc = arcpy.Describe(FC)
                # Note: if FC is a full path, os.path.join discards outputLocation,
                # so the temporary data is written next to the source.
                tempOut = join(outputLocation, f"{FC}_temp")
                if desc.shapeType == 'Point':
                    cleanPoints.append(arcpy.management.Copy(FC, tempOut))
                else:
                    cleanPoints.append(arcpy.management.FeatureVerticesToPoints(FC, tempOut, "ALL"))
            else:
                reportSkips.append(FC)  # Send invalid FCs to the report list.
        arcpy.AddMessage(f" Just finished prepping points for merging: {datetime.datetime.now() - start}")

        if len(cleanPoints) > 0:
            # Create a blank feature class to append everything into.
            pointsMerge = arcpy.management.CreateFeatureclass(scratchDB, "pointsMerge_TOOLDerive", "POINT", spatial_reference=spatRef)
            # File path for the convex hull used as the minimum site boundary.
            minSiteBound = join(outputLocation, "minSiteBound_TOOLDerive")
            # Add the name field (site name) to the temporary class so those values are preserved.
            arcpy.management.AddField(pointsMerge, nameField, "TEXT")
            arcpy.AddMessage(f" Just finished adding the name field and creating blank files: {datetime.datetime.now() - start}")

            # Append all the points to the same blank feature class.
            arcpy.management.Append(cleanPoints, pointsMerge, 'NO_TEST')
            arcpy.AddMessage(f" Just finished merging all the points into one file: {datetime.datetime.now() - start}")

            # Create a minimum site boundary (one convex hull per name value).
            minSiteBound = arcpy.management.MinimumBoundingGeometry(pointsMerge, minSiteBound, 'CONVEX_HULL', 'LIST', nameField)
            arcpy.AddMessage(f" Just finished making minimum boundary: {datetime.datetime.now() - start}")

            # Create a buffer at the specified distance.
            siteBound = arcpy.analysis.Buffer(minSiteBound, outputName, buffDist)
            arcpy.AddMessage(f" Just finished the buffer: {datetime.datetime.now() - start}")
            arcpy.management.DeleteField(siteBound, "ORIG_FID")  # We don't need this field.
            arcpy.AddMessage(f" Just deleted an unnecessary field: {datetime.datetime.now() - start}")

            '''Clean up the temp files'''
            for clean in cleanPoints:
                arcpy.management.Delete(clean)
                #arcpy.AddMessage(f" Just deleted {clean}: {datetime.datetime.now() - start}")
            arcpy.management.Delete(pointsMerge)
            arcpy.management.Delete(minSiteBound)
            arcpy.AddMessage(f" Just finished cleaning up interim files: {datetime.datetime.now() - start}")

            '''Add layer to map'''
            p = arcpy.mp.ArcGISProject("CURRENT")
            mapA = p.activeMap
            if mapA and (addOutput == "true"):
                lyr = arcpy.management.MakeFeatureLayer(siteBound, lyrAlias).getOutput(0)
                mapA.addLayer(lyr)
                arcpy.AddMessage(f" Just added the product to the map: {datetime.datetime.now() - start}")

            '''Reporting'''
            if reportHfcs:
                names = ", ".join(reportHfcs)
                kind = "is a hosted feature class" if len(reportHfcs) == 1 else "are hosted feature classes"
                arcpy.AddWarning(f"Skipped: {names} {kind} and could not be processed. Please export to a local GDB and try again.")
            if reportShps:
                names = ", ".join(reportShps)
                kind = "is a shapefile" if len(reportShps) == 1 else "are shapefiles"
                arcpy.AddWarning(f"Skipped: {names} {kind} and could not be processed.")
            if reportSkips:
                names = ", ".join(reportSkips)
                arcpy.AddWarning(f"Skipped: {names}. {nameField} not found")
        else:
            arcpy.AddWarning(f"Tool Unsuccessful. Field: {nameField} not found in any of the input layers.")


    if __name__ == '__main__':
        main()

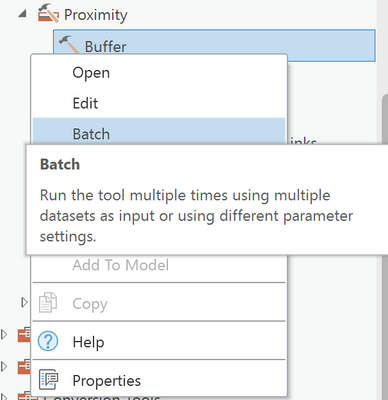

Have you ruled out a batch buffer?

Right-click on the tool and select Batch.

... sort of retired...

Sorry, I should have been more clear.

I want to buffer them as one unit.

I suppose I could just buffer each of them and then dissolve, but I'm hoping there's a more elegant solution.

That won't happen. Converting to points will only make matters worse, even with a dissolve of the overlapping buffers. Batch buffer to get the buffers for each geometry, then union the feature classes together and dissolve the overlaps from there.

... sort of retired...
