- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Notebooks

- :

- ArcGIS Notebooks Blog

- :

- Continuous Integration With Internet Data Sources

Continuous Integration With Internet Data Sources

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

This post shows how to use an ArcGIS Online hosted v 10.0 notebook as a data pump between any data sources on the internet, my specific example being the Snowflake cloud warehouse and a hosted feature service in ArcGIS Online. The systems of record you integrate are only limited by your imagination. When scheduled as a notebook task, this delivers continuous integration, in either or both directions. A bonus feature, you can also perform analysis on your data during data movement.

Some things I'm sharing that I learned from this exercise are:

- How to add notebook dataset connectivity on the fly from Conda Forge or PyPI

- How to use a notebook's built in file system as a staging and analysis workspace

- How to leverage a cloud warehouse's processing power to do work remotely

- How to use SQL to unpack JSON and make relational data

- How to use feature service relationships in queries in Pro

- How to use a couple of new field types, BigInteger* and TimeOnly*

- How to continuously maintain a hosted feature service with the ArcGIS Python API

* Requires notebook runtime version 9+ and ArcGIS Pro 3.2+

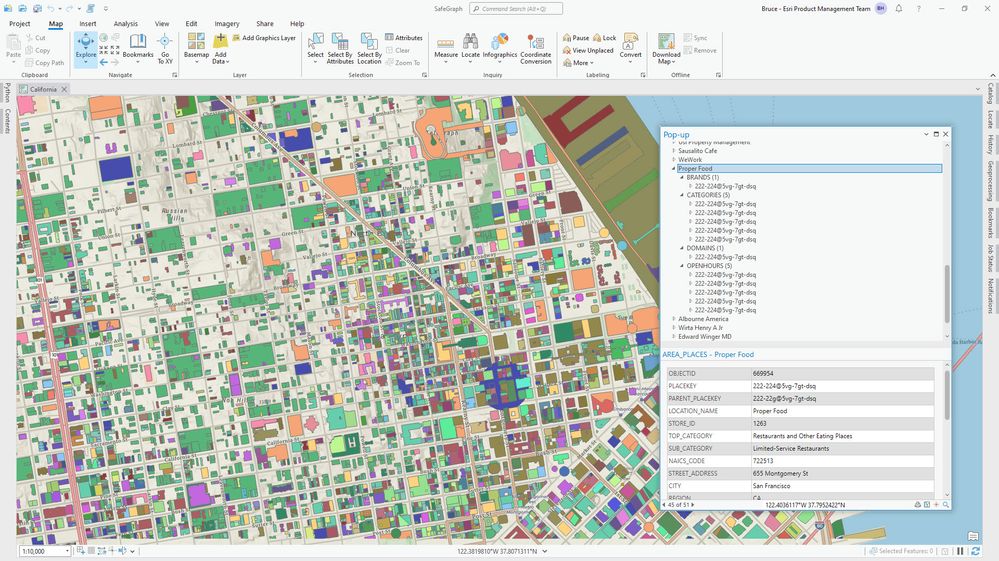

My example uses Esri business partner SafeGraph Places data, with their kind permission, for which I am grateful. If you follow the link you'll see that Places model point-of-interest data. Places have geometry (polygon or point) and attributes. Some of the attribution is best modeled as related data, and you can see from the map popup my data model has four lookup tables for places to expose the brands, place categories, web domains and open hours known for a place.

The main topic of this post is using an Online notebook to maintain a hosted feature layer with source data from an internet connection, namely a cloud warehouse, and without compromising data preparation or data model flexibility - expanding JSON columns and creating relationship classes is shown.

This post does not have a dependency on ArcGIS Data Interoperability, but does include an ETL tool that is an option to create an initial file geodatabase used to publish the target hosted feature service, otherwise use the supplied XML Workspace document to do this. If you do have Data Interoperability the supplied ETL tool looks like this:

Something we're all going to see more of, even in georelational warehouses like Snowflake, is semi-structured data as JSON documents in variant columns. That is the case for SafeGraph places, where the brand, category, domain and open hours values are inside JSON objects in a 1:M cardinality with the owning place. Retaining JSON in fields would make it very hard to query in ArcGIS.

Here's an example value for brand data, an array of dictionaries:

[

{

"safegraph_brand_id": "SG_BRAND_1e8cb2c9bf1caabd",

"safegraph_brand_name": "Bentley Motors"

},

{

"safegraph_brand_id": "SG_BRAND_89ede26d6a5e8208ad28607464dfa1f3",

"safegraph_brand_name": "Land Rover"

},

{

"safegraph_brand_id": "SG_BRAND_2fa3cf424791add1",

"safegraph_brand_name": "Aston Martin"

},

{

"safegraph_brand_id": "SG_BRAND_b94705c817b09f287e31606604e526ba",

"safegraph_brand_name": "Jaguar"

}

]Here is another for open hours, a dictionary keyed on day name with arrays of open/close times:

{

"Fri": [

[

"10:00",

"18:00"

]

],

"Mon": [

[

"10:00",

"18:00"

]

],

"Sat": [

[

"10:00",

"17:00"

]

],

"Sun": [

[

"12:00",

"16:00"

]

],

"Thu": [

[

"10:00",

"18:00"

]

],

"Tue": [

[

"10:00",

"18:00"

]

],

"Wed": [

[

"10:00",

"18:00"

]

]

}It makes sense to unpack this JSON in Snowflake before it gets to your client. The job in each case is to make new rows for each extracted value while retaining the key field in each row. Snowflake provides the FLATTEN table function for the purpose. This is a proprietary SQL extension but analogs exist in other cloud warehouses. SafeGraph places have a primary key named PLACEKEY, so we retain that in all cases.

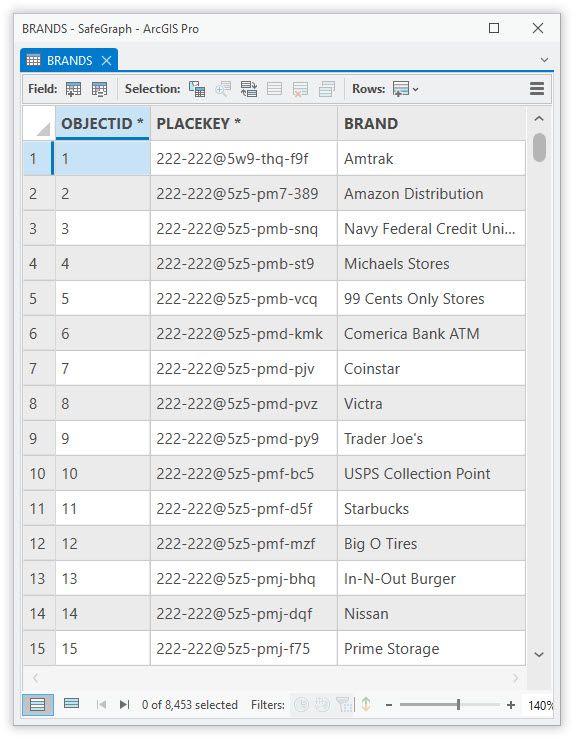

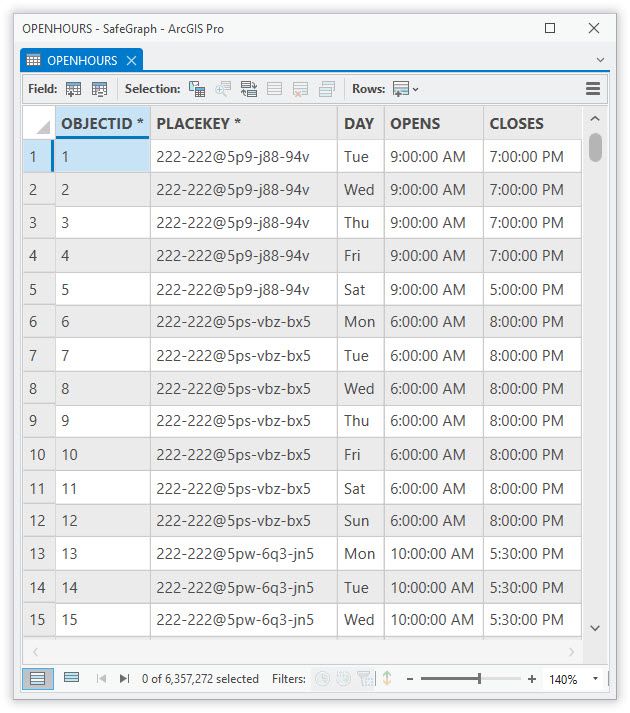

I'll let you inspect the SQL I used to unpack each JSON document type when you look at the code in the notebook in the blog download, but the tabular results for brands and open hours look like this:

Brands:

Open Hours:

I took this detour through some of the processing to make the point that with a back end as powerful as Snowflake, you should get it to do as much of the processing as possible before your integration. If your integration is against data summaries coming from big data then this is particularly relevant.

With the data model I'm using here, reading from Snowflake using a cursor and writing to the file geodatabase in my notebook with another cursor, I get about 10K/sec point features, 7.5K/sec polygon features and 50K/sec table rows throughput - plenty fast enough for the use case.

Now let's get to the core of the matter, how to implement a continuous integration.

With continuous integrations with feature services, you do not want to break any item properties, like item ID or item description metadata. The way to do this is with the overwrite method. A dependency of overwrite is that you must have published the service from a file item, not from map content in Pro, and you must supply a new file item of identical schema to effect the overwrite. I'm using a file geodatabase. In fact I used two editions of geodatabase, first one with all place data for California, so I could get my data model correct (field type, width etc.), then replacing this with one with just Redlands CA place data, so I could store only a small portal item used for overwrite schema checking. If you download the blog attachment and have Data Interoperability extension you will see my ETL tool has table handling set to Truncate Existing when writing to my geodatabase.

When I was happy with my geodatabase schema and had made some layer files from the full version of the data to capture some symbology and popup behavior, I zipped the small version of the geodatabase and published my initial feature service.

To start constructing my notebook I had to learn a fundamental concept. Notebooks support a local filesystem, and this is where I built my overwrite payload (a zipped file geodatabase); I used the default /arcgis/home folder. You can think of this filesystem as a staging area for your data, where you can optionally do some analysis. In my case I'm not doing any analysis but I am assembling a data model and populating it. You might not need to do any geoprocessing, say if you're reading from one cloud warehouse and writing directly to another, but if you know ArcPy the possibilities are endless.

Now the secret sauce. If you read up on Online notebooks you'll see the available Python libraries, and that Snowflake is not amongst them. So install it! Here is the cell in my notebook that adds the connectivity I want:

import arcgis

import arcpy

from datetime import datetime

import os

import zipfile

def getNow():

return str(datetime.utcnow().replace(microsecond=0))

print('Processing starting at {}'.format(getNow()))

gis = arcgis.gis.GIS("https://www.arcgis.com", gisUser, gisPass)

arcpy.SignInToPortal("https://www.arcgis.com", gisUser, gisPass)

os.environ['CRYPTOGRAPHY_OPENSSL_NO_LEGACY'] = '1' # Snowflake dependency

!pip install snowflake-connector-python # pip is faster than conda, or we would use !conda install -y -c conda-forge snowflake-connector-python

import snowflake.connector

print('Snowflake installed at {}'.format(getNow()))

conn = snowflake.connector.connect(

account=snowAccount,

user=snowUser,

password=snowPass,

warehouse=snowWarehouse,

database=snowDatabase,

schema=snowSchema)

print('Imports complete at {}'.format(getNow()))The lines that provide the integration magic are:

- os.environ...

- !pip install...

- import snowflake.connector

I'm making the bold claim that you can integrate with any internet data source because the Python ecosystem is so well endowed and maintained. While Online notebooks come with some basic libraries for Amazon, Azure Storage and Google, there are many more you can reach out for in Conda Forge and PyPI using this technique. Do your research. It may take some trial and error. Online notebooks are Linux containers so check a package supports the platform. For example, the pyodbc module isn't available on Linux, so if you're integrating with Azure SQL you'll need the pymssql module. Before committing to an integration try a stub notebook that tests the import. My notebook uses a Snowflake cursor but the package also supports working with pandas dataframes if that's how you want to work.

If your integration is with ArcGIS Enterprise you would authenticate to that GIS.

Remember you can write to the web as well as read from it. A cursor might not be the best method either, for example in this blog post I upload a Parquet file to Snowflake.

The blog download contains my notebook source, the Spatial ETL workspace to read Snowflake and write file geodatabase, plus a schema-only geodatabase XML document you can import.

After connecting to Snowflake the data movement is simple, I'll let you inspect the notebook source. It took some trial and error to get the SQL right for Snowflake, but when you're done you have a powerful tool. You can share your notebook and keep the credentials private using this approach. Snowflake is read, a file geodatabase is staged, then used to overwrite an existing service.

Note that if your data lifecycle (or size) indicates, in addition to the overwrite method used in this notebook, there is also an append and upsert method. Overwrite is an expensive operation for big data, so if your data can be maintained by append and upsert it is worth considering. Say your data size is in the tens of millions of records, you might have one notebook (or cell) that overwrites a service with a small file and another that appends to it with a much larger one.

To go into production with this sample, the technical steps with core ArcGIS are:

- Obtain access to SafeGraph's Snowflake Marketplace

- Create a new file geodatabase in ArcGIS Pro 3.3+

- Import the geodatabase XML document to a file geodatabase

- Zip the file geodatabase and add it as an item to Online

- Share a feature service from the file geodatabase item

- Create a hosted notebook from the ipynb file supplied

- Edit the injected parameters cell and target item ID

- Create a scheduled task for the notebook

Having put it all together you can use the service across ArcGIS. You'll see in the supplied notebook my service has all places for San Francisco, California and I want to find where I can go for breakfast on Saturdays between 8AM and 10AM, I would query it like this:

TOP_CATEGORY = 'Restaurants and Other Eating Places' AND PLACEKEY IN (SELECT DISTINCT PLACEKEY FROM OPENHOURS WHERE OPENHOURS.DAY = 'Sat' AND OPENHOURS.OPENS <= TIME '08:00:00' And OPENHOURS.CLOSES >= TIME '10:00:00')

There you have it, a continuous integration powered by an ArcGIS Online hosted notebook!

Please comment in this blog space with any questions or suggestions.

I hope you all make lots of data pumps, let us know!

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.