
Geoevent Filter to ensure a date field is within a tolerance of the current time


I am getting inputs that have a date field. Sometimes the feed provides a bad timestamp. I need to create something (like a filter) that compares the incoming time with the current time. If the incoming timestamp is more than, say, 5 minutes away from the current time, then I want to process those events differently. Any help would be greatly appreciated.

Thanks,

Shawn

geoevent configuration filter by date

---

MBramer-esristaff had to tackle this issue recently.

I think what you need to do is use a Field Calculator to cast the Date to a Long integer value. Use an expression like myDateAttributeField + 0 and write the value into a new field whose type is Long (not Date).

Then you can use a second Field Calculator to compute a Long integer representing epoch milliseconds five minutes ago: currentTime() - (5 * 60 * 1000). Write this value into another new field whose type is Long.

Then use a filter to compare the two integer values.
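RJ's comparison can be sketched in plain Python, assuming both values are already epoch milliseconds (which is what the Long cast yields). The function name `is_recent` and the choice of `>=` are illustrative assumptions, not GeoEvent settings, and `time.time_ns() // 1_000_000` stands in for `currentTime()`. As written, this form only rejects timestamps older than five minutes; it does not catch timestamps in the future.

```python
import time

FIVE_MINUTES_MS = 5 * 60 * 1000  # 5 min * 60 s/min * 1000 ms/s = 300,000 ms

def is_recent(date_as_long: int, now_ms: int) -> bool:
    """True if the event time is no older than now minus 5 minutes.

    Mirrors the suggested setup: one Long field holds the date, a second
    Long field holds currentTime() - (5 * 60 * 1000), and a filter
    compares the two.
    """
    five_minutes_ago = now_ms - FIVE_MINUTES_MS
    return date_as_long >= five_minutes_ago

now_ms = time.time_ns() // 1_000_000          # stand-in for currentTime(), epoch ms
print(is_recent(now_ms - 60_000, now_ms))     # one minute old -> True
print(is_recent(now_ms - 600_000, now_ms))    # ten minutes old -> False
```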

- RJ

---

I actually didn't have to do the "+ 0" part; a regular cast into a new field of type Long did the trick. Shawn, I don't know your proficiency with GeoEvent, so if you want more step-by-step instructions, don't be afraid to ask!

Mark

---

Thanks MBramer-esristaff and RJ Sunderman!

I am fairly new to GeoEvent, specifically the syntax of filters, so a little more step-by-step guidance would be great. I've got a Field Calculator that takes myDateAttributeField and writes it to a new field of type Long. I think I just need help configuring the filter to compare the incoming date field against the two tolerance values (currentTime() +/- 5 minutes).

I should also mention that I'm using version 10.4.1.

Thanks again for the help!

---

Hi Shawn,

I've had this set up for a while but ran across a few unexpected things along the way. I'll post a reply soon with all the gory details. I just wanted to let you know there was activity on our side so you don't think we fell silent on you.

Stay tuned...

Mark

---

Hi Shawn (Shawn Boliner)

I have what you need, along with some important information about how GeoEvent handles dates.

I should have asked you early on which input you were using. For my testing, I used "Receive Text from a TCP Socket" and "Receive JSON on a REST Endpoint". I chose the TCP socket input because it is very common in real-world use cases, and JSON/REST because I wanted to see how the JSON adapter compared. If you are using a different input, like Poll/ArcGIS, I can look at that as well, but I did not include it for now.

Both the Text Adapter and the JSON Adapter exhibit behavior I was not expecting. If a date value is invalid, and I mean truly invalid, the adapters may set the date to the current system time at the moment the geoevent is processed. This is potentially really bad, as GeoEvent is making up data. I had "bada bing" in a date field, and GeoEvent proceeded with no error and set the geoevent's date value to the current system time. I've submitted this issue to the GeoEvent development team, and it has been added to the defect list.

The desired behavior is for GeoEvent to produce a null date when a value cannot be parsed as a date. I think this is especially true if the user has specified a value for "expected date format" in the input's parameters. In that case, I'd expect the product to behave like this: "OK, the user has instructed me to look for date values in this particular format, so I'm going to reject any records with values that don't look like that." That's only my opinion, and I will be discussing it with the dev team after the holiday season.
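To make the desired behavior concrete, here is a small Python illustration of strict parsing: a value either matches the expected format or becomes null (`None`), and the current time is never substituted. This is not GeoEvent code. The format string is an assumed Python equivalent of an expected date format such as MM/dd/yy HH:mm:ss, `parse_strict` is a hypothetical name, and treating the values as UTC is an assumption.

```python
from datetime import datetime, timezone
from typing import Optional

# Assumed Python equivalent of an "expected date format" of MM/dd/yy HH:mm:ss
EXPECTED_FORMAT = "%m/%d/%y %H:%M:%S"

def parse_strict(value: str) -> Optional[datetime]:
    """Return an aware datetime if value matches EXPECTED_FORMAT, else None.

    Unlike the adapter behavior described above, bad input never turns
    into the current system time.
    """
    try:
        parsed = datetime.strptime(value.strip(), EXPECTED_FORMAT)
    except ValueError:
        return None
    return parsed.replace(tzinfo=timezone.utc)  # assumption: values are UTC

for raw in ["12/28/16 18:39:12", "1/10/16 1:99:x", " bada bing"]:
    print(repr(raw), "->", parse_strict(raw))
```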

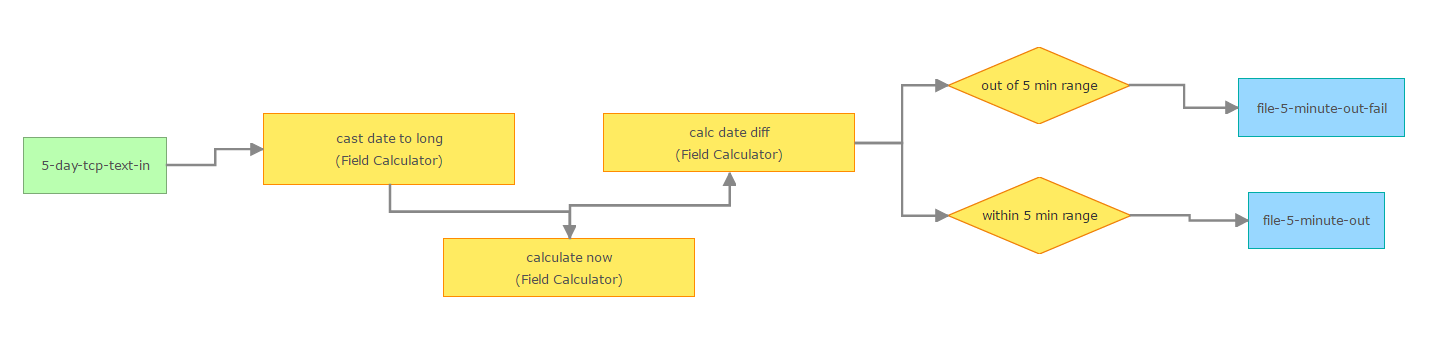

Here is the GeoEvent Service that does what you want:

Let's break it down. I won't talk about the input (green), as it sounds like you already have one set up. I won't talk about the outputs (blue) either, other than to say that one is the outlet for within-range geoevents and the other is the outlet for out-of-range geoevents. The logic happens in the processors and filters (yellow).

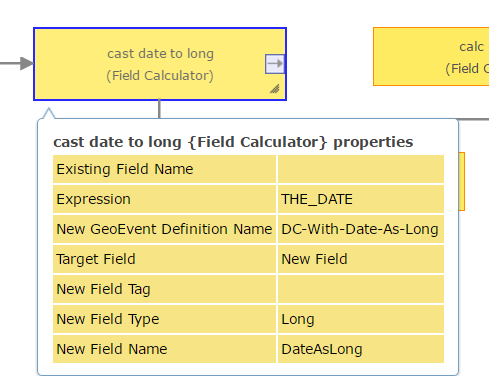

The first processor is "cast date to long" and looks like this:

This processor takes the value from a field named "THE_DATE" in the incoming geoevent, calculates a new value based solely on THE_DATE, and places it into a new field called DateAsLong. Because adding a new field alters the GeoEvent Definition (i.e., the schema), I have to specify a name for the new GeoEvent Definition; in this case, that name is "DC-With-Date-As-Long". So the geoevent data that flowed into this processor is still intact when it is emitted, but it has a NEW value appended: the original date value from THE_DATE, as a Long. This is necessary because of the way we do date math in the next couple of steps. By the way, this date value is in epoch milliseconds.
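In Python terms, the cast amounts to converting a date into epoch milliseconds. A minimal sketch, assuming the date is in UTC (the thread does not say which time zone THE_DATE uses); `date_to_long` is a hypothetical name.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def date_to_long(dt: datetime) -> int:
    """Epoch milliseconds for a timezone-aware datetime.

    Integer timedelta division avoids the float rounding that
    int(dt.timestamp() * 1000) can introduce.
    """
    return (dt - EPOCH) // timedelta(milliseconds=1)

the_date = datetime(2016, 12, 28, 18, 39, 12, tzinfo=timezone.utc)
print(date_to_long(the_date))  # 1482950352000
```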

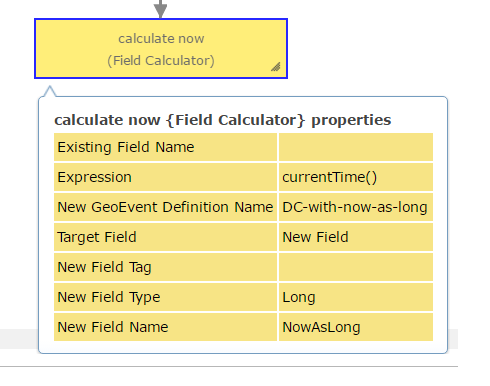

The next processor is "calculate now" and looks like this:

This processor simply uses the currentTime() function to generate a Long value for the system time when the geoevent is processed. Again, this value is newly generated and needs somewhere to go, so in this example it goes into a field called NowAsLong. Once again, a new GeoEvent Definition is created, called DC-with-now-as-long.

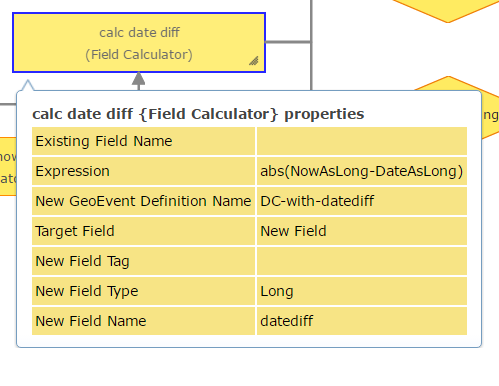

The next processor is "calc date diff" and looks like this:

Recall that between the last two processors, we've calculated two new fields: one called "DateAsLong" and one called "NowAsLong". These are the two pieces of information we need to determine if a geoevent's date is within an acceptable time range. However, we're not quite ready to filter out geoevents just yet. We need to calculate the difference between these two values, in milliseconds, and compare that to the number of milliseconds in the time range we care about. It's with that difference that we will filter out any geoevents that fall outside that range (or vice versa, depending on what is desired).

The "calc date diff" processor (above) calculates the absolute value of the difference between DateAsLong and NowAsLong. Using the absolute value means a geoevent's time is measured the same way whether it falls before or after system time, which covers the "within a tolerance of" aspect of what we're trying to accomplish. The calculated value is inserted into a field called "datediff", and once again we have to make a new GeoEvent Definition, called "DC-with-datediff".
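The "calculate now" and "calc date diff" steps reduce to the arithmetic below. Mark's screenshot holds the actual Field Calculator expression; this is only a Python equivalent, with `time.time_ns() // 1_000_000` standing in for `currentTime()`. The function names are hypothetical.

```python
import time

def now_as_long() -> int:
    """Current system time in epoch milliseconds (stand-in for currentTime())."""
    return time.time_ns() // 1_000_000

def date_diff(date_as_long: int, now_ms: int) -> int:
    """abs(DateAsLong - NowAsLong): distance from 'now' in either direction."""
    return abs(date_as_long - now_ms)

now_ms = now_as_long()
print(date_diff(now_ms - 120_000, now_ms))  # 2 minutes in the past   -> 120000
print(date_diff(now_ms + 120_000, now_ms))  # 2 minutes in the future -> 120000
```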

Finally, I have two filters, one of which catches geoevents outside of the desired date range, the other of which catches geoevents within the desired date range. Note that depending on what you care about, you may only need one of these. I wanted to demonstrate the concept of splitting the stream and sending results down two different paths.

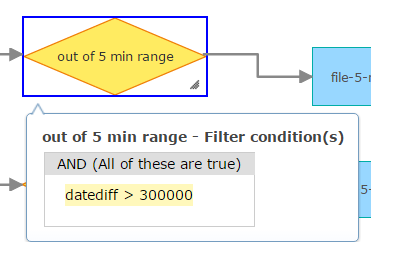

The filter that catches out-of-range geoevents looks like:

300,000 is the number of milliseconds in 5 minutes. So this filter simply looks for any geoevents with a "datediff" value greater than 300,000. Any geoevent that meets this criterion passes through the filter and on to whatever downstream processing awaits. In my case, I simply write these geoevents to a file output called "file-5-minute-out-fail". These are the geoevents whose timestamps fell outside a +/- 5 minute window.
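The out-of-range filter condition, written as a Python predicate for illustration (the name `is_out_of_range` and the `tolerance_ms` parameter are hypothetical):

```python
TOLERANCE_MS = 5 * 60 * 1000  # 5 min * 60 s/min * 1000 ms/s = 300,000 ms

def is_out_of_range(datediff: int, tolerance_ms: int = TOLERANCE_MS) -> bool:
    """Equivalent of the filter condition: datediff > 300,000."""
    return datediff > tolerance_ms

print(TOLERANCE_MS)              # 300000
print(is_out_of_range(299_999))  # False
print(is_out_of_range(300_001))  # True
```

A different window only changes the threshold; 15 minutes, for example, is 15 * 60 * 1000 = 900,000 ms.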

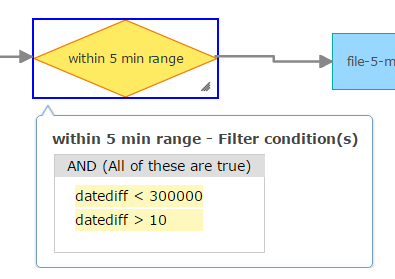

Conversely, the filter that catches geoevents within the time range of interest looks like this:

One would think the logic here would simply be the exact opposite of the other filter (i.e., datediff < 300,000). This is generally true, but notice that I have an additional criterion of "datediff > 10". Recall the earlier topic of the Text and JSON Adapters sometimes assigning the system time when a date could not be parsed. In those cases, a bad date value would result in the adapter creating a geoevent whose timestamp would pass the filter criterion "datediff < 300,000", which is undoubtedly NOT what is desired. By adding the check datediff > 10, I'm not letting geoevents pass through if their timestamp is within 10 milliseconds of system time. Obviously, this assumes that 10 milliseconds is an acceptable value for this secondary check; you may be able to afford a larger or even a smaller value. The key is to realize that this secondary criterion exists entirely to work around what I see as a defect in the product at the current version.
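The within-range condition with the secondary guard, as a Python predicate for illustration (`is_within_range` is a hypothetical name). Comparing it with the out-of-range condition (datediff > 300,000) shows that, as described, a datediff of 10 ms or less, or exactly 300,000, satisfies neither filter, so such geoevents reach neither output.

```python
TOLERANCE_MS = 300_000  # 5 minutes in milliseconds
MIN_DIFF_MS = 10        # guard against dates the adapter replaced with system time

def is_within_range(datediff: int) -> bool:
    """Equivalent of: datediff < 300,000 AND datediff > 10."""
    return MIN_DIFF_MS < datediff < TOLERANCE_MS

for d in (0, 5, 60_000, 300_000, 400_000):
    print(d, is_within_range(d))
```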

I had good luck testing the GeoEvent Service discussed above, using the following very simple test data:

CSV:

```
ID,POINT_X,POINT_Y,STATUS,THE_DATE
8,-92.41646302,35.06684922,offline,12/28/16 18:39:12
3,-92.47439554,35.08497411,offline,1/10/16 1:99:x
3,-92.48372651,35.08497411,offline,12/28/16 18:39:35
5,-92.499569,35.0888676,online, bada bing
```

JSON:

```json
[
  {
    "ID": 8,
    "POINT_X": -92.41646302,
    "POINT_Y": 35.06684922,
    "STATUS": "offline",
    "THE_DATE": "12/28/16 18:45:00"
  },
  {
    "ID": 3,
    "POINT_X": -92.47439554,
    "POINT_Y": 35.08497411,
    "STATUS": "offline",
    "THE_DATE": "1/10/16 1:0:xx"
  },
  {
    "ID": 3,
    "POINT_X": -92.48372651,
    "POINT_Y": 35.08497411,
    "STATUS": "offline",
    "THE_DATE": "12/28/16 18:45:00"
  },
  {
    "ID": 5,
    "POINT_X": -92.499569,
    "POINT_Y": 35.0888676,
    "STATUS": "online",
    "THE_DATE": "bada bing"
  }
]
```

Note that records 1 and 3 are the ones with properly formatted dates. Therefore, for each dataset, I had to change the timestamps of records 1 and 3 during testing to be within 5 minutes of my system time to get the expected result of two records succeeding and two failing.
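The whole test can be simulated in Python to see why the expected result is two successes and two failures. This is a sketch under stated assumptions, not GeoEvent itself: `adapter_date_ms` is a hypothetical model of the defect described above (an unparseable date becomes the current system time), the format string is an assumed equivalent of the expected date format, and all times are treated as UTC. Records 1 and 3 are moved to one and two minutes before "now", as Mark did by hand.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

FMT = "%m/%d/%y %H:%M:%S"  # assumed Python equivalent of the expected date format
TOLERANCE_MS = 5 * 60 * 1000
MIN_DIFF_MS = 10
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def to_ms(dt: datetime) -> int:
    """Epoch milliseconds for an aware datetime."""
    return (dt - EPOCH) // timedelta(milliseconds=1)

def adapter_date_ms(raw: str, now_ms: int) -> int:
    """Models the reported defect: an unparseable date silently becomes
    the current system time instead of null."""
    try:
        parsed = datetime.strptime(raw.strip(), FMT).replace(tzinfo=timezone.utc)
    except ValueError:
        return now_ms
    return to_ms(parsed)

# Whole-second "now" so the differences below come out exact.
now = datetime.now(timezone.utc).replace(microsecond=0)
now_ms = to_ms(now)
rec1 = (now - timedelta(minutes=1)).strftime(FMT)
rec3 = (now - timedelta(minutes=2)).strftime(FMT)

data = f"""ID,POINT_X,POINT_Y,STATUS,THE_DATE
8,-92.41646302,35.06684922,offline,{rec1}
3,-92.47439554,35.08497411,offline,1/10/16 1:99:x
3,-92.48372651,35.08497411,offline,{rec3}
5,-92.499569,35.0888676,online, bada bing
"""

results = []
for row in csv.DictReader(io.StringIO(data)):
    datediff = abs(adapter_date_ms(row["THE_DATE"], now_ms) - now_ms)
    within = MIN_DIFF_MS < datediff < TOLERANCE_MS
    results.append((row["ID"], datediff, within))
    print(row["ID"], row["THE_DATE"].strip(), datediff, "within" if within else "not within")
```

The two bad dates end up with a datediff of 0, which is exactly the case the datediff > 10 guard exists to reject.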

I hope this helps - please let me know if any of it is confusing or unclear. Special thanks to rsunderman-esristaff for input and help from the product team side.

Mark

---

I have just replicated this workflow for events coming from a Poll-Feature-Class input. One small note: the currentTime() and receivedTime() functions only appear to populate a Date field.

---

Mark,

I'm trying to replicate your workflow, and I am not seeing the new GeoEvent Definitions, so I cannot complete the out-of-range filter. Any ideas?

I am trying to compare an inspection date from a Survey123 inspection to the current time and, if they are within an acceptable range (such as 15 minutes), send out an email.

Thanks,

--gary

---

Hi @GaryBowles1,

I was running into the same problem. The new GeoEvent Definitions were only added after I published and ran the new GeoEvent Service. I stopped it quickly and then added the filter; that was the only way I could get it to work.

Thanks,

Chris

---

I have a similar issue, which I have raised in a separate thread here: Using dates in filters?

Was wondering if someone could throw me a bone?