- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS GeoEvent Server

- :

- ArcGIS GeoEvent Server Blog

- :

- Debug Techniques - Add/Update Feature Outputs

Debug Techniques - Add/Update Feature Outputs

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

This blog is one in a series of blogs discussing debugging techniques you can use when working to identify the root cause of an issue with a GeoEvent Server deployment or configuration. Click any link in the quick list below to jump to another blog in the series.

|

In a client / server context ArcGIS GeoEvent Server sometimes acts as a client and at other times acts as a server. When an Add a Feature or an Update a Feature output is configured to add / update feature records in a geodatabase feature class through a feature service, ArcGIS GeoEvent Server is a client making requests on an ArcGIS Server feature service. In this blog I will show how you can isolate requests GeoEvent Server sends to an ArcGIS Server service and how to use the JSON from the request to debug issues you are potentially encountering.

Scenario

A customer reports that an input connector they have configured appears to be successfully receiving and adapting data from a provider and event records appear to be processed as expected through a GeoEvent Service. The event record count on their output increments, but they are not seeing some – or any – features displayed by a feature layer they have added to a web map.

Request DEBUG logs for the outbound feature service transport

Components in the ArcGIS GeoEvent Server runtime log messages to provide information as well as note warnings and/or errors. Each component uses a logger, an object responsible for logging messages in the system's log file, which can be configured to generate different levels of messages (e.g. DEBUG, INFO, WARN, or ERROR).

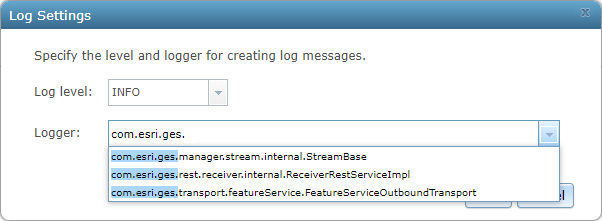

In this case we want to request the com.esri.ges.transport.featureService.FeatureServiceOutboundTransport component log DEBUG messages to help us identify the problem. To enable DEBUG logging for a single component's logger:

- In GeoEvent Manager, navigate to the Logs page and click Settings

- Enter the name of the logging component in the text field Logger and select the DEBUG log level

- Click Save

As you type the name of a logger, if the GeoEvent Manager's cache of logged messages contains a message from a particular component's logger, IntelliSense will help you identify the logger's name.

Querying for additional information

When a processed event record is routed to an Update a Feature output the data is first reformatted as Esri Feature JSON so that it can be incorporated into a map/feature service request. A request is then made using the ArcGIS REST API to either Add Features or Update Features.

An Add a Feature output connector has the easier job – it doesn't care whether a feature record already exists since it is not going to request an update. An Update a Feature output connector on the other hand needs to know the objectid or row identifier of the feature record it should update.

If the output has previously received an event record with this event record's TRACK_ID then it has likely already sent a request to the targeted map/feature service to query for feature records whose Unique Feature Identifier Field was specified as the field to use to identify feature records to update. The output maintains a cache mapping every event record's TRACK_ID to a corresponding object or row identifier of a feature record.

Here is what the logged DEBUG messages look like when an Update a Feature output queries to discover an object or row identifier associated with a feature record:

1 | 2019-06-05T15:12:34,324 | DEBUG | FeatureJsonOutboundAdapter-FlushingThread-com.esri.ges.adapter.outbound/JSON/10.7.0 | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Querying for missing track id '8SKS617' |

2 | 2019-06-05T15:12:34,489 | DEBUG | FeatureJsonOutboundAdapter-FlushingThread-com.esri.ges.adapter.outbound/JSON/10.7.0 | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Posting to URL: https : //localhost.esri.com/server/rest/services/SampleRecord/FeatureServer/0/query with parameters: f=json&token=QNv27Ov9...&where=track_id IN ('8SKS617') &outFields=track_id,objectid. |

3 | 2019-06-05T15:12:34,674 | DEBUG | FeatureJsonOutboundAdapter-FlushingThread-com.esri.ges.adapter.outbound/JSON/10.7.0 | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Response was {"exceededTransferLimit":false,"features":[ ],"fields"... |

Notice a few key values highlighted in the logged message's text above:

- Line 1: The output has recognized that it has not previously seen an event record with the TRACK_ID 8SKS617 (so it must query the map/feature service to see if it can find a matching feature record).

- Line 2: This is the actual query sent to the SampleRecord feature service's query endpoint requesting a feature record whose track_id attribute is one of several in a specified list (8SKS617 is actually the only value in the list). The query requests that the response include only the track_id attribute and an object identifier value.

- Line 3: The ArcGIS Server service responds with an empty array features[ ]. This indicates that there are no features whose track_id attribute matches any of the values in the query's list.

The output was configured with its Update Only parameter set to 'No' (the default). So, given that there is no existing record whose track_id attribute matches the event record's tagged TRACK_ID field, the output connector fails over to add a new feature record instead:

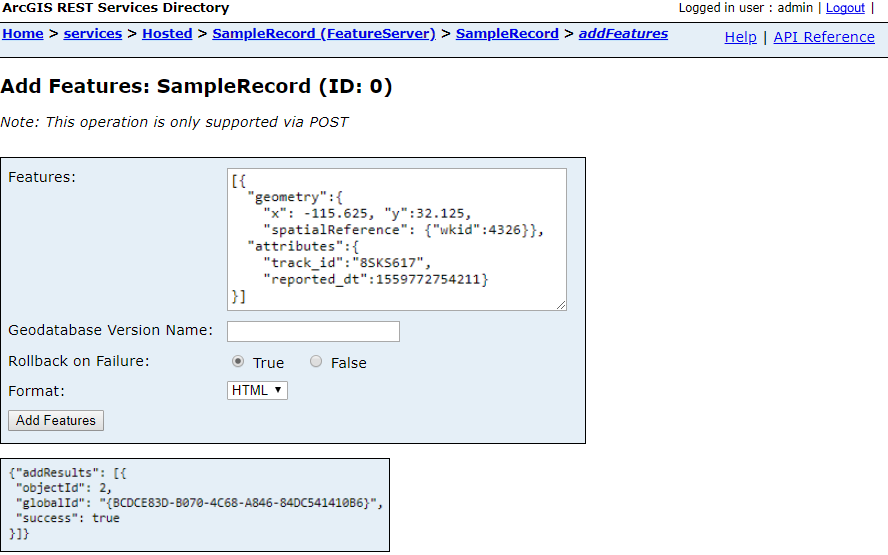

4 | 2019-06-05T15:12:34,769 | DEBUG | FeatureJsonOutboundAdapter-FlushingThread-com.esri.ges.adapter.outbound/JSON/10.7.0 | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Posting to URL: https : //localhost.esri.com/server/rest/services/SampleRecord/FeatureServer/0/addFeatures with parameters: f=json&token=QNv27Ov9...&rollbackOnFailure=true features=[{"geometry":{"x":-115.625,"y":32.125, "spatialReference":{"wkid":4326}},"attributes":{"track_id":"8SKS617","reported_dt":1559772754211}}]. |

5 | 2019-06-05T15:12:34,935 | DEBUG | FeatureJsonOutboundAdapter-FlushingThread-com.esri.ges.adapter.outbound/JSON/10.7.0 | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Response was {"addResults":[{"objectId":1,"globalId":"{B1384CE2-7501-4753-983B-F6640AB63816}", "success":true}]}. |

Again, take a moment to examine the highlighted text:

- Line 4: The ArcGIS REST API endpoint to which the request is sent is the Add Features endpoint. An Esri Feature JSON representation of the event data is highlighted in green.

- Line 5: The ArcGIS Server service responds with a block of JSON indicating that it successfully updated a feature record, assigning the new record the object identifier '1' and a globally unique identifier (the feature service I'm using in this example is actually one hosted by my ArcGIS Enterprise portal).

The debug logs include the Esri Feature JSON constructed by the output connector. You can actually copy and paste this JSON into the feature service's web page in the ArcGIS REST Services Directory. This is an excellent way to abstract ArcGIS GeoEvent Server from your debugging workflow and determine if there are problems with how the JSON is formatted or reasons why a feature service might reject a client's request.

I used this technique once to demonstrate that a polygon geometry created by a Create Buffer processor in a GeoEvent Service had several dozen vertices, allowing the geometry to approximate a circular area. When the polygon was committed to the geodatabase as a feature record, however, its geometry had been generalized such that it only had a few vertices. Web maps were displaying very rough approximations of the area of interest, not circular buffers. But it wasn't ArcGIS GeoEvent Server that had failed to produce a geometry representing a circular area. The problem was somewhere in the back-end relational database configuration.

Rollback on Failure?

There is a query parameter on Line 4 in the illustration above which is easily overlooked: rollbackOnFailure=true

The default action for both the Add a Feature and Update a Feature outputs is to request that the geodatabase rollback the feature record transaction request if a problem is encountered. In many cases this is why customers are not seeing all of the feature records they expect updated in a feature layer they have added to a web map. Consider the following fields specification for the targeted feature service's feature layer:

Fields:

track_id ( alias: track_id, type: esriFieldTypeString, length: 512, editable: true, nullable: true )

reported_dt ( alias: reported_dt, type: esriFieldTypeDate, length: 29, editable: true, nullable: true )

objectid ( alias: objectid, type: esriFieldTypeOID, length: 8, editable: false, nullable: false )

globalid ( alias: globalid, type: esriFieldTypeGlobalID, length: 38, editable: false, nullable: false )

Suppose for a moment that the esriFieldTypeString specification for the track_id attribute specified that the string should not exceed seven characters. If a web application (client) were to send the feature service a request with a value for the track_id which was longer than seven characters, the data would not comply with the feature layer's specification and the feature service would be expected to reject the request.

Likewise, if attribute fields other than esriFieldTypeOID or esriFieldTypeGlobalID were specified as not allowing null values, and a client request was made whose attribute values were null, the data would not be compliant with the feature layer's specification; the feature service should reject the request.

By default both the Add a Feature and Update a Feature output connectors begin working through a cache of event records they have formatted as Esri Feature JSON placing the formatted data in one or more requests that are sent to the targeted feature service's feature layer. Each request, again by default, is allowed to contain up to 500 event / feature records.

It only takes one bad apple to spoil a batch. If even one processed event record's data in a transaction containing ten, fifty, or a hundred feature records in a single transaction request is not compliant with string length restrictions, value nullability restrictions – or any other restriction enforced by an ArcGIS Server feature service – the entire transaction will rollback and none of the feature records associated with that batch of processed event records will be updated.

Reduce the Maximum Features Per Transaction

You cannot change the rollback on failure behavior. The outbound connectors interfacing with ArcGIS Server feature services do not implement a mechanism to retry an add/update feature record operation because one or more feature records in a batch do not comply with a feature layer's specification.

You can change the number of processed event records an Add a Feature or Update a Feature output connector will include in each transaction. If you configure your output to specify a maximum number of one feature record per transaction you can begin to work around the issue of one bad record spoiling an entire transaction. If bad data or null values were to occasionally creep into processed event records then only the bad records will fail to update a corresponding feature record and the rollback on failure won't suppress any valid feature record updates.

The downside to this is that REST requests are inherently expensive. If it were to take as little as 20 milliseconds to make a round-trip to the database and receive a response to a transaction request you could effectively cut your event throughput to less than 50 event records per second if you throttle feature record updating by allowing only one processed event record per transaction. The upside to reducing, at least temporarily, the number of records allowed in a transaction is that it makes the messages being logged much, much easier to read. It also guarantees that each success / fail response from the ArcGIS Server feature service can be traced back to a single add / update feature request.

Timestamps – another benefit to logging DEBUG messages for the outbound transport

Every logged message includes a timestamp with millisecond precision. This can be very useful when debugging unexpected latency when interacting with a geodatabase's feature class through an ArcGIS Server's REST interface.

Looking back at the two tables above with the logged DEBUG messages, the time difference between the messages on Line 1 and Line 2 is 165 milliseconds (489 - 324 = 165). That tells us it took over a tenth of a second for the output to formulate its query for "missing" object identifiers needed to request updates for specific feature records. It takes another 185 milliseconds (674 - 489 = 185) to actually query for the needed identifiers and discover that there are no feature records with those track_id values.

To be fair, you should expect this latency to drop as ArcGIS Server and/or your RDBMS begin caching information about the requests being made by clients. But it is important to be able to measure the latency ArcGIS GeoEvent Server is experiencing. If every time an Add a Feature output connector's timer expires (which is once every second by default) it takes a couple hundred milliseconds to complete a transaction, you should have a pretty good idea how many transactions you can make in one second. You might need to increase your output's Update Interval so that it holds only its cache of processed event records longer before starting a series of transactions. If you do this, know that as updates arrive for a given tracked asset older records will be purged from the cache. When updating feature records the cache will be managed to contain only one processed event record for each unique TRACK_ID.

Conclusion

Taking the time to analyze the DEBUG messages logged by the outbound feature service transport can provide you a wealth of information. You can immediately see if values obtained from an event record's tagged TRACK_ID field are reasonably expected to be found in whatever feature layer's attribute field is being used to query for feature records that correlate to processed event records. You can check to see if any values in a processed event record are unexpectedly null, have strings which are longer than the feature layer will accept, or – my favorite – contain what ArcGIS Server suspects is HTML or SQL code resulting in a service rejecting the transaction to prevent a suspected injection attack.

ArcGIS GeoEvent Server, when interfacing with an RDBMS through a map / feature service's REST interface, is acting as any other web mapping application client would act in making requests on a service it assumes is available. You can eliminate GeoEvent Server entirely from your debugging workflow if you copy / paste information like the ESRI Feature JSON from a DEBUG message logged by the outbound transport into an HTML page in the ArcGIS REST Services Directory. I did exactly this to prove, once, that polygon geometries with hundreds of vertices modeling a circular area were somehow being generalized as they were committed into a SQL Server back-end geodatabase.

If a customer reports that some – or all – of the features they expect should be getting added or updated in a feature layer are not displayed by a web map's feature layer, take a close look at the requests the configured output is sending to the feature service.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.