
Debug Techniques - Application logging tips and tricks


This blog is one in a series of blogs discussing debugging techniques you can use when working to identify the root cause of an issue with a GeoEvent Server deployment or configuration. Click any link in the quick list below to jump to another blog in the series.


In this blog I will illustrate a couple of techniques I use to identify a specific component logger. Requesting DEBUG messages from that logger gives more granular logging than asking the ROOT component to produce DEBUG messages for all component loggers. I will also introduce a couple of command-line utilities I frequently use to interrogate ArcGIS GeoEvent Server's system log file. I'll consider a specific scenario and show how to isolate logged messages about an output's requests to a feature service, specifically the messages that identify the criteria used to discover and delete feature records.

Scenario

A customer has configured the Delete Old Features capability on an Add a Feature output connector and reports that feature records are being deleted from the geodatabase earlier than expected. Following advice from the blog Add/Update Feature Output Connectors, they have captured a few logged messages from the outbound feature transport. However, they are not seeing any information about the criteria the connector uses to determine which feature records should be deleted or when they should be deleted.

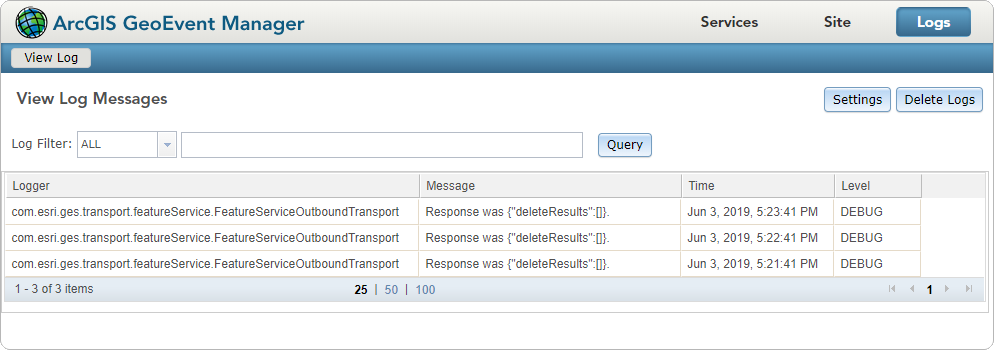

What is the outbound feature transport telling us?

The illustration above does not give us much information. It confirms that an Add a Feature output is periodically, once a minute, making requests on a feature service to delete old feature records and that, for the three intervals shown, no feature records were deleted (the JSON array in the response from the feature service is empty).

If one or more existing feature records had satisfied criteria included in the delete features request, then the logged messages would contain feature record identifiers to confirm which feature records had been deleted. Hypothetically, looking at the raw logged messages in the karaf.log file, we would expect to see a message similar to the following:

2019-06-03T16:42:41,474 | DEBUG | OutboundFeatureServiceCleanerThread-[Default][/][SampleRecord][0][FeatureServer] | FeatureServiceOutboundTransport | 91 - com.esri.ges.framework.transport.featureservice-transport - 10.7.0 | Response was {"deleteResults":[{"objectid":3, ... "success":true},{ "objectid":4, ... "success": true}]}.

The outbound feature transport is only confirming what has been deleted, not criteria used to determine what should be deleted. The information we need, hopefully, is being logged by a different component logger.
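You may want to pull the deleted feature record identifiers out of the log rather than reading each response by eye. A minimal sketch like the following may help. It assumes each `Response was {...}` message occupies a single line of karaf.log, in the form of the hypothetical message above. `deleted_object_ids` is an illustrative helper name, not part of GeoEvent Server.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list object IDs the outbound feature transport reports as deleted.
# Assumes each "Response was {...}" message sits on one line, like the sample above.
deleted_object_ids() {
  local log_file="${1:-karaf.log}"
  # Keep only the transport's deleteResults responses, then extract each "objectid":N
  # pair and print just the number.
  grep 'FeatureServiceOutboundTransport' "$log_file" \
    | grep '"deleteResults"' \
    | grep -o '"objectid":[0-9][0-9]*' \
    | cut -d: -f2
}
```

Running `deleted_object_ids karaf.log` against a log containing the message above should print 3 and 4 on separate lines. Responses with an empty deleteResults array contribute nothing.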

How to determine which component logger to watch

As I mentioned in the blog Configuring the application logger, the logging system implemented by ArcGIS GeoEvent Server logs messages from the Java runtime. The messages being logged generally contain good information for software developers, but are rather hard for a GIS analyst to review and interpret. If someone from the product team has not identified a component logger from which you should request more detailed log messages, your only option is to request DEBUG logging on the ROOT component.

If you elect to do this, be aware that karaf.log will quickly grow very large and will roll over as described in the aforementioned blog.

All hope is not lost, however. One technique I have found helpful is to turn off as many of my running inputs and outputs as I can to quiet ArcGIS GeoEvent Server's activity. I then briefly, for perhaps a minute or two, request DEBUG level messages by setting the debugging level on the ROOT component. GeoEvent Manager's logging user interface will quickly cache up to 500 messages. You can then use its built-in IntelliSense to at least get an idea of which components are actively running and producing log messages.

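[Illustration: component loggers listed by the GeoEvent Manager logging IntelliSense, with the HTTP Client component logger highlighted]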

Once you understand that both the Add a Feature and Update a Feature output connectors use endpoints exposed through the ArcGIS REST Services Directory to interface with their targeted feature services, one component logger should stand out – the HTTP Client component logger highlighted in the illustration above. The information we need on the criteria used to identify feature records to delete is probably being logged as part of an HTTP REST request.
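You can also ask karaf.log itself which component loggers are producing messages after the brief ROOT DEBUG window. The sketch below assumes the pipe-delimited layout seen in this blog's sample messages (timestamp, level, thread, logger, bundle, message) and counts messages per logger name. `summarize_loggers` is a hypothetical helper. Lines without that layout, such as stack trace continuations, are skipped.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count karaf.log messages per component logger.
# Assumes the pipe-delimited layout of this blog's samples:
#   timestamp | level | thread | logger | bundle | message
# Lines with fewer than six fields (e.g. stack trace continuations) are skipped.
summarize_loggers() {
  local log_file="${1:-karaf.log}"
  # Field 4 is the logger name: trim its padding, tally it,
  # then sort numerically so the busiest loggers come first.
  awk -F'|' '
    NF >= 6 {
      name = $4
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      count[name]++
    }
    END { for (n in count) printf "%d %s\n", count[n], n }
  ' "$log_file" | sort -rn
}
```

`summarize_loggers karaf.log | head` would list the busiest loggers first. In the sample messages in this blog, the logger field shows only a short name (for example, Http). You would still need the fully qualified name, such as com.esri.ges.httpclient.Http, when configuring GeoEvent Manager.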

Request DEBUG logs for the HTTP Client

In this case we want the com.esri.ges.httpclient.Http component logger to produce DEBUG messages to help us identify the problem. To enable DEBUG logging for the identified component's logger:

- Navigate to the Logs page in GeoEvent Manager and click the Settings button.

- Restore the ROOT component logger to its default level WARN and click Save.

- Specify the name of the HTTP Client component logger (com.esri.ges.httpclient.Http), select the DEBUG log level, and click Save again.

ArcGIS GeoEvent Server is fundamentally RESTful, which means you will still have a high volume of messages being logged to the karaf.log – but not as many as if you had left DEBUG logging set on the ROOT component logger.

Useful command-line utilities for interrogating karaf.log

I operate almost exclusively on a Windows platform, but Cygwin is one of the first things I install whenever I get a new machine. Cygwin is a free, open source environment that provides a command-line shell integrated with Windows. From it I can execute some of my favorite Unix utilities, such as sed, grep, awk, and tail. There are probably other packages available that provide similar utilities and tools, but I like Cygwin.

If I open a Cygwin command-line shell I can change directory to where the karaf.log file is being written and generate an active tail of the log so that I don't have to open the log file in a text editor and frequently re-load the file as its content is updated. I am also able to pipe the streaming content from tail through grep to limit the logged messages displayed to those which contain specific keywords or phrases. For example:

rsunderman@localhost //localhost/C$/Program Files/ArcGIS/Server/GeoEvent/data/log

$ tail -0f karaf.log |grep --line-buffered 'where.*reported_dt'

2019-06-07T16:33:19,545 | DEBUG | OutboundFeatureServiceCleanerThread-[Default][/][New_SampleRecord][0][FeatureServer] | Http | 60 - com.esri.ges.framework.httpclient - 10.7.0 | Adding parameter (where/reported_dt < timestamp '2019-06-07 17:33:19').

2019-06-07T16:34:20,269 | DEBUG | OutboundFeatureServiceCleanerThread-[Default][/][New_SampleRecord][0][FeatureServer] | Http | 60 - com.esri.ges.framework.httpclient - 10.7.0 | Adding parameter (where/reported_dt < timestamp '2019-06-07 17:34:20').

2019-06-07T16:35:20,433 | DEBUG | OutboundFeatureServiceCleanerThread-[Default][/][New_SampleRecord][0][FeatureServer] | Http | 60 - com.esri.ges.framework.httpclient - 10.7.0 | Adding parameter (where/reported_dt < timestamp '2019-06-07 17:35:20').

The command above quickly reduces all the noise logged by the HTTP Client component logger to only those messages that include the name of the attribute field reported_dt. The Add a Feature output was configured to use this field when identifying feature records older than a specified number of minutes. The criteria we are looking for are clearly identified as a parameter the HTTP Client is adding to the request it constructs and sends to the feature service to identify and delete old feature records.
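When the tail output is still busy, a further filter can reduce each matching message to just its timestamp and where clause. The following is a minimal sketch, assuming the `Adding parameter (where/...)` wording shown in the messages above. `extract_where_params` is a hypothetical helper that reads log lines on standard input.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reduce HTTP Client "Adding parameter (where/...)" messages
# to "<timestamp> <where clause>". Reads log lines on standard input and
# assumes the message wording shown in the samples above.
extract_where_params() {
  # \1 = leading timestamp field, \2 = text between "(where/" and the closing ").".
  # Lines that do not match are suppressed (-n without p).
  sed -n 's#^\([^ |]*\) |.*Adding parameter (where/\(.*\))\..*$#\1 \2#p'
}
```

For a live view, it could be appended to the pipeline above, for example `tail -0f karaf.log | grep --line-buffered 'where.*reported_dt' | extract_where_params`. With GNU sed you may need to add the `-u` (unbuffered) option inside the function so that results appear as they arrive.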

The system I am running is in California, which in June (Pacific Daylight Time) is seven hours behind GMT (UTC-07:00). The date/time values in the reported_dt attribute of each feature record in my feature layer are expressed as epoch long integers and represent GMT values. My output is configured to query every 60 seconds and delete feature records that are more than six hours old. The logged messages above bear timestamps roughly 60 seconds apart. The where clause identifies any feature record whose date/time is earlier than "now" + 07:00 hours (UTC offset) - 06:00 hours (the age at which a feature record is considered "old"). For example, the message logged at 16:33:19 local time specifies a cutoff of 17:33:19, which is 16:33:19 + 7 hours - 6 hours.
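The cutoff arithmetic can be checked with GNU date, which Cygwin provides. The sketch assumes the UTC-07:00 offset and six-hour age threshold described above. `expected_cutoff` is a hypothetical helper. It converts the local timestamp to epoch seconds, subtracts the threshold, and prints the result in UTC, which is equivalent to adding seven hours and subtracting six.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute the UTC cutoff a "delete old features" query would use.
# Assumes GNU date (as shipped with Cygwin) for -d parsing and @epoch input.
expected_cutoff() {
  local local_time="$1"      # e.g. "2019-06-07 16:33:19" (local wall-clock time)
  local utc_offset="$2"      # e.g. "-0700" (Pacific Daylight Time)
  local max_age_hours="$3"   # e.g. 6
  local epoch
  # Convert the local time plus its offset to seconds since the epoch.
  epoch=$(date -u -d "$local_time $utc_offset" +%s) || return 1
  # Subtract the age threshold and print the result as a UTC timestamp.
  date -u -d "@$((epoch - max_age_hours * 3600))" '+%Y-%m-%d %H:%M:%S'
}
```

For example, `expected_cutoff "2019-06-07 16:33:19" -0700 6` prints `2019-06-07 17:33:19`, the cutoff in the first logged where clause above.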

Using the ArcGIS REST Services Directory to query feature records from the feature service, I can quickly see that feature records which are not yet six hours old (relative to GMT) remain but those I add or update with a reported_dt value which is at least six hours old get deleted every 60 seconds.

What if the above had not yielded the information we needed?

We could always fall back to setting the ROOT logger to DEBUG so that all component loggers produce debug messages. While this is extremely verbose, the technique using the tail and grep command-line utilities can still be used to try to find anything that mentions our particular feature service's REST endpoint.

In this case my feature service's name was New_SampleRecord, so I can reasonably expect to find logged messages which include references to: New_SampleRecord/FeatureServer/0/deleteFeatures

A grep command, using a regular expression pattern match like the following should find only those logged messages which appear to be attempting to delete features from the feature layer in question:

tail -0f karaf.log |grep --line-buffered 'SampleRecord.*FeatureServer.*deleteFeatures'

Tests using the above grep log message filter reveal about 75 messages logged every 60 seconds that include a reference to the deleteFeatures endpoint for the feature layer my output is targeting. I copied and pasted these lines into a text editor and reviewed them. Only one message contains a SQL WHERE clause, which is what would be required to identify records with a date/time value that should be considered "old".
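Rather than copying lines into a text editor, you can script the two observations above: how many matching messages arrive per minute, and which of them carry a WHERE clause. The following is a minimal sketch. It assumes each line starts with an ISO 8601 timestamp as in the samples, and that the WHERE clause appears as a where= form parameter, as in the wire message below. It reuses the same loose pattern as the grep command above. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for messages that mention a layer's deleteFeatures endpoint.
# Assumes each line starts with an ISO 8601 timestamp (2019-06-07T18:10:06,956)
# and reuses the loose "<service>.*FeatureServer.*deleteFeatures" pattern.

# Count matching messages per minute (first 16 characters of the timestamp).
delete_requests_per_minute() {
  local service="$1" log_file="${2:-karaf.log}"
  grep "${service}.*FeatureServer.*deleteFeatures" "$log_file" \
    | cut -c1-16 \
    | sort | uniq -c
}

# Keep only the matching messages that carry a where= form parameter.
delete_requests_with_where() {
  local service="$1" log_file="${2:-karaf.log}"
  grep "${service}.*FeatureServer.*deleteFeatures" "$log_file" \
    | grep -F 'where='
}
```

For example, `delete_requests_per_minute New_SampleRecord karaf.log` prints one count per minute. `delete_requests_with_where New_SampleRecord karaf.log` prints only the messages that include a where= parameter.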

The date/time value in this logged message is URL encoded, because the message depicts text ready to be sent out over the HTTP wire. We can still use the logged message to understand the criteria being applied by ArcGIS GeoEvent Server's output.

2019-06-07T18:10:06,956 | DEBUG | HttpRequest Worker Thread: https://localhost.esri.com/server/rest/services/New_SampleRecord/FeatureServer/0/deleteFeatures | wire | 60 - com.esri.ges.framework.httpclient - 10.7.0 | http-outgoing-27360 >> "f=json&token=HM85k4E...&rollbackOnFailure=true&where=reported_dt+%3C+timestamp+%272019-06-07+19%3A10%3A06%27"
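Decoding the form body by hand is error-prone. The following minimal bash sketch extracts the where= parameter from a logged wire message and percent-decodes it. It assumes the body is logged in double quotes as above and relies on bash-specific expansions. `url_decode` and `wire_where_clause` are hypothetical helpers. The decoder is deliberately simple and does not handle every edge case, for example literal backslashes in the text.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: extract and decode the where= parameter of a logged
# "wire" message. Assumes bash (pattern substitution, printf %b with \xHH escapes).

# Decode application/x-www-form-urlencoded text: '+' -> space, %HH -> byte.
url_decode() {
  local s="${1//+/ }"
  # Turn each %HH into \xHH and let printf %b expand the escapes.
  printf '%b\n' "${s//%/\\x}"
}

# Print the decoded where clause from one log line, if it has one.
wire_where_clause() {
  local encoded
  # Capture everything after [&"]where= up to the next '&' or closing quote.
  encoded=$(sed -n 's/.*[&"]where=\([^&"]*\).*/\1/p' <<< "$1")
  [ -n "$encoded" ] && url_decode "$encoded"
}
```

Applied to the message above, `wire_where_clause` prints `reported_dt < timestamp '2019-06-07 19:10:06'`. That cutoff is one hour ahead of the message's 18:10:06 local timestamp, consistent with the +07:00 - 06:00 reasoning earlier.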
