Help designing load test

Our organization is experiencing issues with a service during periods of high demand. The service supports a flood warning system, so those periods are sporadic, and I'm looking at load testing with Apache JMeter to help us evaluate what we can do to better support our needs. I'm using this blog post and tutorial as a guide; I've installed JMeter and have a test case set up. Since I'm new to this, I'm not sure whether my parameters really mirror the real world, so I'm posting to get some more experienced eyes on this.

Anyway, instead of a map image export like in the tutorial, I'm just performing a simple "1=1" query on our particular map service. Our web map builds our stream gage layer on the fly at page load using this query against a table in this particular service.

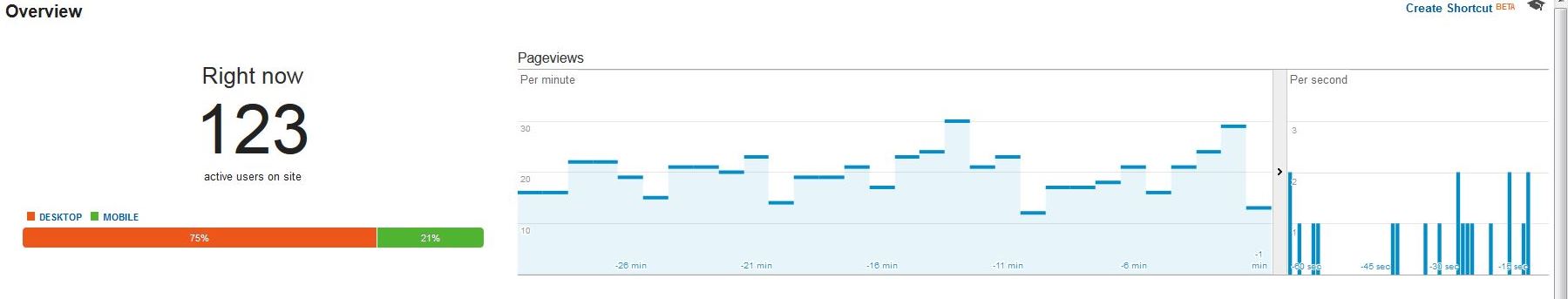

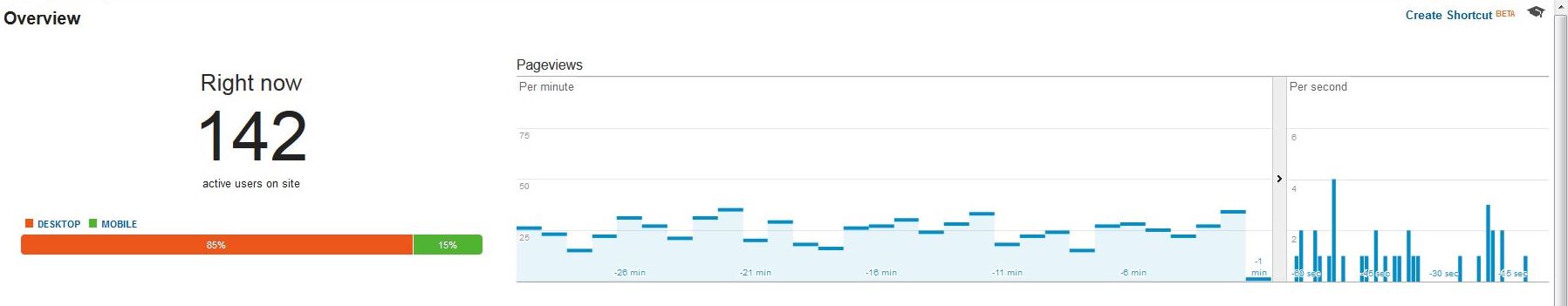

Below are a couple of screenshots from Google Analytics real-time view during our most recent events:

Based on this, I created a test with a thread group of 40 users, a ramp-up period of 20 seconds, and a loop count of 3. Does this sound reasonable, given the real-world examples shown above? Am I on the right track or way off?
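
For reference, here is a rough sketch of what those three settings work out to, assuming the thread group contains a single sampler (the one "1=1" query) and that JMeter spreads thread starts evenly across the ramp-up period. The function name and the `samplers_per_loop` parameter are just for illustration.

```python
def thread_group_summary(threads, ramp_up_seconds, loops, samplers_per_loop=1):
    """Summarize the load a JMeter-style thread group would generate."""
    return {
        # Every thread runs every sampler once per loop.
        "total_requests": threads * loops * samplers_per_loop,
        # Thread starts are spread evenly over the ramp-up period.
        "seconds_between_thread_starts": ramp_up_seconds / threads,
        "thread_starts_per_second": threads / ramp_up_seconds,
    }


summary = thread_group_summary(threads=40, ramp_up_seconds=20, loops=3)
print(summary)
```

With these numbers that is 120 requests in total, with a new simulated user starting every half second. Whether that matches the peaks in the analytics screenshots depends on how many page loads per minute they actually show.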

Thanks!

Steve

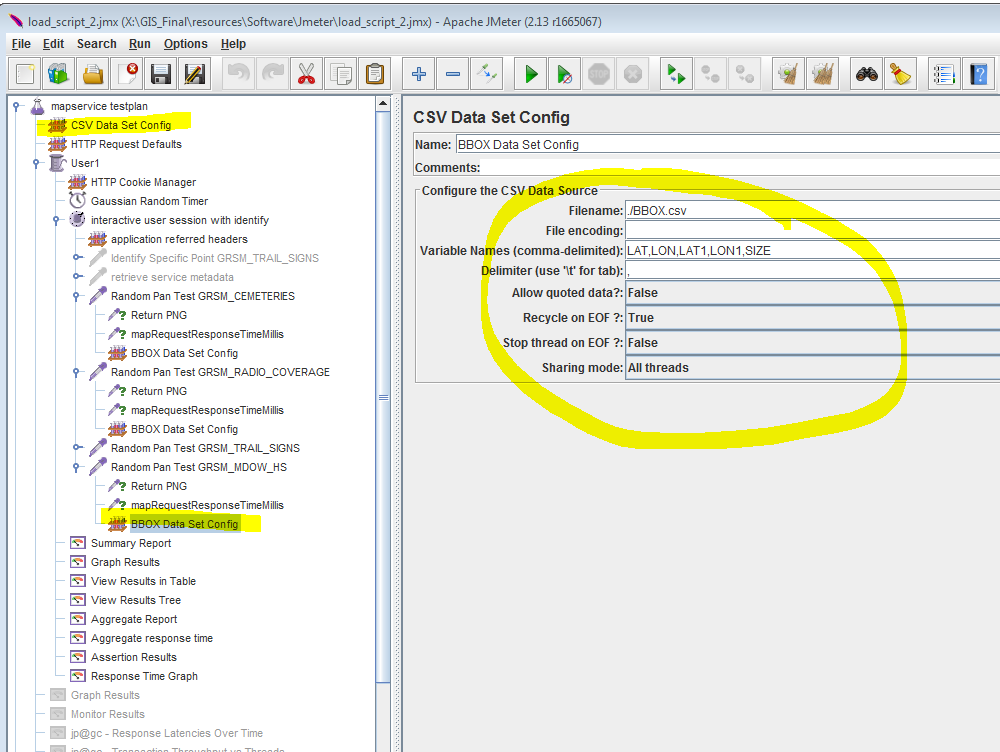

I've had good luck with JMeter. Instead of the 1=1 query plan, I'd add a CSV element pre-populated with a wide range of zoom levels and extents, and randomize the zoom and extent with each query. Furthermore, I'd add a pan request. That way you'll more accurately test what a real user does: access the initial map view, then zoom and pan around. I've found rapid panning is what puts the most load on REST requests. To get really "real", add an identify query here and there: zoom, pan, then click on something.
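
A minimal sketch of how such a CSV could be generated, assuming each row is one bounding box (`xmin,ymin,xmax,ymax`) and that "zoom level" can be approximated as a fraction of the full map extent. The full extent, the zoom fractions, and the column names below are made-up placeholders, not values from this service.

```python
import csv
import io
import random


def random_extents(full_extent, count, zoom_fractions=(1.0, 0.5, 0.25, 0.1), seed=None):
    """Return `count` random bounding boxes that lie inside `full_extent`."""
    rng = random.Random(seed)
    xmin, ymin, xmax, ymax = full_extent
    full_w, full_h = xmax - xmin, ymax - ymin
    rows = []
    for _ in range(count):
        frac = rng.choice(zoom_fractions)      # pick a pseudo zoom level
        w, h = full_w * frac, full_h * frac
        x0 = xmin + rng.uniform(0, full_w - w)  # keep the box inside the extent
        y0 = ymin + rng.uniform(0, full_h - h)
        rows.append((round(x0, 6), round(y0, 6), round(x0 + w, 6), round(y0 + h, 6)))
    return rows


def to_csv(rows):
    """Serialize the extents with a header row naming each column."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["xmin", "ymin", "xmax", "ymax"])
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical full extent in decimal degrees; replace with the real map extent.
print(to_csv(random_extents((-100.0, 30.0, -90.0, 40.0), count=5, seed=1)))
```

The output can be saved to a file and pointed at from a CSV Data Set Config element, with the columns referenced in the request as variables such as `${xmin}`.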

...and as a side note: JMeter is difficult, if not impossible, to configure to test against an ArcGIS Server that has an HTTPS cert on the web adapter and the GIS server. You might need to turn those things off, but be sure to test the HTTP requests as they pass through the web adapter: if you test against the GIS server (Apache) directly but are using a web adapter, you won't see the performance hit that using a WA incurs.

Thanks for the response, Thomas. Unfortunately the "1=1" query *IS* the issue we're experiencing. If the query returns an empty array of results, the layer of stream gages doesn't get constructed properly (it's done client side) and that's what people are complaining about. The table being queried has anywhere from 2800-3400 records in it at any given time. The other two layers in the map are incredibly small (a point layer of 16 points and county boundary line features). I ran my JMeter load test 100 times yesterday and only received correct results 51% of the time (results were identical for AGS 10.1 and AGS 10.3).
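
To put a number on "correct results", something like the sketch below could be run over the saved response bodies. It assumes the query was made with `f=json`, so a good response carries a non-empty `features` array and a failed one carries an `error` object; the function names are made up for this example.

```python
import json


def classify_query_response(body):
    """Classify one response body as 'ok', 'empty', 'error', or 'invalid'."""
    try:
        payload = json.loads(body)
    except (ValueError, TypeError):
        return "invalid"          # not JSON at all (e.g. an HTML error page)
    if not isinstance(payload, dict):
        return "invalid"
    if "error" in payload:
        return "error"            # the server reported a failure in the JSON body
    if payload.get("features"):
        return "ok"               # at least one record came back
    return "empty"                # valid JSON but no records: the broken-layer case


def success_rate(bodies):
    """Fraction of responses that returned at least one feature."""
    results = [classify_query_response(b) for b in bodies]
    return results.count("ok") / len(results) if results else 0.0


sample = [
    '{"features": [{"attributes": {"OBJECTID": 1}}]}',
    '{"features": []}',
    '{"error": {"code": 500, "message": "Error performing query operation"}}',
    "<html>Service unavailable</html>",
]
print([classify_query_response(b) for b in sample], success_rate(sample))
```

Separating "empty" from "error" may help tell whether the table really had no rows at that moment or the service itself was failing under load.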

I'm kinda convinced we don't know what we're doing to support high load services (using the ESRI Web Adapter, no cluster of AGS servers, etc) but, to quote the adage, "that's not my department". As the developer, I just get the blame that the application doesn't work.

What is the architecture? Is this pulling from SQL, a remote data service, or a local FGDB? Any chance you could put the same data on AGOL and run the same query? Is this going through HTTPS? Windows Authentication? I've gotten better performance by siloing the site (putting the Web Adapter on its own server).

I'm getting outside my knowledge since I'm not responsible for the backend stuff, but it's in our organization's Enterprise SDE in SQL Server 2012.

It's not going through HTTPS.

We do use Windows Authentication.

I suspect that the web adapter is NOT silo'd. I'd bet money we just ran with the ESRI installation guide when setting things up.

80% confident SQL is your bottleneck. Live GIS data from SQL will not stand up to "high demand", which I define as more than 10 users, unless you're running SQL on a clustered, high-availability instance. Not likely you have $100k lying around, so your best bet is to look at what sort of storage format you're using for the feature classes, index tune where/if you can, and/or move some of the data into views or hourly-updated FGDBs. USGS National Map serves 1000s of requests per hour (minute?), but sourced from FGDB.

That's interesting. The stream gage data is stored natively in an MS Access database (old gage software which is on its last legs, officially and unofficially). Due to some permission conditions the gage software has with its corresponding database, we've set up a system where we use a Python script to transfer data from the MS Access db into an SDE table. I suppose we could alter that process and have it dump the information into an FGDB and publish off of that.

I forgot to add a key piece of information. The data transfer from MS Access to SDE occurs every 10-15 minutes. During the transfer the table in SDE is purged and then the Access records are inserted. We've been getting erratic run times on the script. Initially it was completing in 4 seconds, but somehow it has grown to 1-2 minutes. During that time, of course, the SDE table has no records, which results in no data for folks who happen to load or reload the page at that time.
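
One possible way to close that empty-table window would be to run the purge and the insert as a single transaction, so the old rows stay in place until the new ones are committed. The sketch below only illustrates the idea with Python's built-in `sqlite3` as a stand-in; the table and column names are invented, and whether our SDE table and transfer script can be changed to work this way is an assumption I haven't verified.

```python
import sqlite3


def refresh_gage_table(conn, rows):
    """Replace all rows in one transaction; roll back if anything fails."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("DELETE FROM gage_readings")
        conn.executemany(
            "INSERT INTO gage_readings (gage_id, stage_ft) VALUES (?, ?)", rows
        )


def row_count(conn):
    """Number of rows currently in the (hypothetical) gage table."""
    return conn.execute("SELECT COUNT(*) FROM gage_readings").fetchone()[0]


# In-memory stand-in for the real table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE gage_readings (gage_id TEXT, stage_ft REAL)")
conn.commit()

refresh_gage_table(conn, [("G1", 2.5), ("G2", 3.1)])
print(row_count(conn))
```

If the insert fails partway through, the delete is rolled back as well, so the previous readings remain queryable. How readers behave while the transaction is open depends on the database's isolation settings.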

I do not think you would want to use a file gdb for your stream gauge data. My organization tried to set up a road closure map service that would update the registered file gdb every 5 minutes from another database that was capturing the data from an MS Access application. The map service was OK for some time, but the constant refreshing of data (OBJECTIDs were climbing rapidly) caused a severe degradation of performance. In the end someone scripted the data to be updated on AGOL every 5 minutes, which resolved the performance issue.

As such, maybe you could make use of AGOL's scalability to solve your performance issue as an alternative to your current setup.