- Home

- :

- All Communities

- :

- Products

- :

- ArcGIS Enterprise

- :

- ArcGIS Enterprise Questions

- :

- Re: ArcGIS Server Geoprocessing service and SDE Da...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

ArcGIS Server Geoprocessing service and SDE Database problem

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

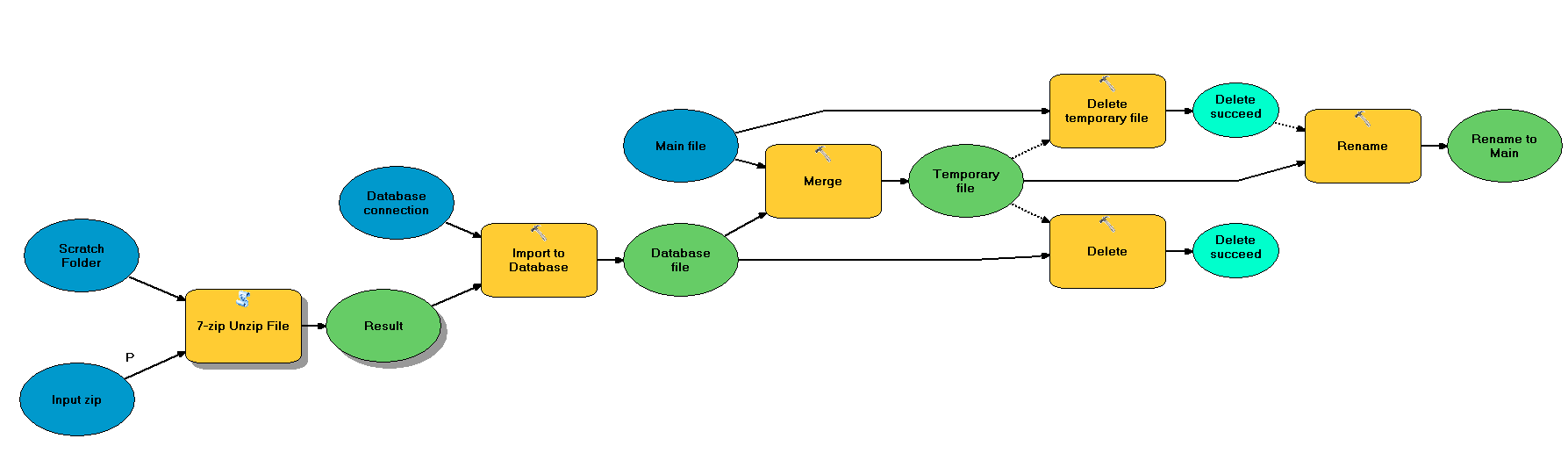

I very happily tried to publish as a service the following model and run very quickly into trouble. What it does is basically taking a zip file with a shapefile, import it into an sde database (SQL server) merge it with an existing featureset and delete intermediate products. It works perfectly on my desktop but after publishing the results as a service the "Import to database" gives the following error "ERROR 000210: Cannot create output C:\arcgisserver\directories\arcgissystem\arcgisinput\TestFolder\Model5.GPServer\extracted\v101\sql06.sde\temp1 Failed to execute (Import to Database). Failed to execute (Model3). Failed to execute (merge_layer)." The database is registered with the AGS (10.3) and when I publish I don't get prompted to copy anything.

Any ideas why AGS can't write to the database?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Check all your directory permissions, SDE privileges, model parameters and pathways, tool input and output parameters. GPs that run well on the desktop with physical output locations do well, but when publishing as a GP service locations must be reset to in_memory so the input and jobs directories can access them. Lastly, back your model up to the first point of failure and solve from there

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Thank you David,

You helped me put things in order. I managed to solved it. There was a number of things going wrong:

- AGS kept locking the schema so converting the model to python helped

- The "in_memory" never worked for me so I used scratch_folder

- arcpy.env.workspace didn't work properly when I set it to the SDE either so UNC path names with the awkward "//"

- The user running AGS service even though is an administrator for the machine that is running AGS and owner of the SDE database he had permission problems. The logs of the SQL server showed that he didn't make an attempt to connect to the database when the GP tool was running. However the user had no problems writing to the local folders. This still remains a mystery to me. The solution was to run the process as another user. This was probably caused by our internal structure, however it would be nice to know exactly what this user needs as far as permissions are concerned.

- To access tables in the SDE the db name and the schema was necessary (check script below)

Here is the script that worked. It used to be a lot prettier but every time I try to beautify it, something goes wrong...

import arcpy

from arcpy import env

import zipfile

# Script arguments

Input_zip = arcpy.GetParameterAsText(0)

arcpy.env.overwriteOutput = True

fh = open(Input_zip, 'rb')

z = zipfile.ZipFile(fh)

for name in z.namelist():

z.extract(name, arcpy.env.scratchFolder)

if name.endswith('.shp'):

result = arcpy.env.scratchFolder+"\\"+name

fh.close()

sdecon = arcpy.CreateDatabaseConnection_management(arcpy.env.scratchFolder, "tempcon", "SQL_SERVER", "xxxxxx.xxxx.xxxxx", "OPERATING_SYSTEM_AUTH", "", "*****", "SAVE_USERNAME", "GDB", "", "TRANSACTIONAL", "sde.DEFAULT", "")

outpath = arcpy.env.scratchFolder+"\\"+"tempcon.sde"

arcpy.FeatureClassToFeatureClass_conversion(result,outpath,"NEWFILE")

newfile=outpath+"\\"+"GDB.SDE.NEWFILE"

oldfile=outpath+"\\"+"GDB.SDE.MAIN"

renamefile=outpath+"\\"+"RENAMEME"

arcpy.Merge_management([oldfile,newfile], renamefile )

fromname = outpath+"\\"+"GDB.SDE.RENAMEME"

arcpy.Delete_management(oldfile, "FeatureClass")

arcpy.Delete_management(newfile, "FeatureClass")

arcpy.Rename_management(fromname, "MAIN")

Thanks again. I hope some of the above will help other user.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

great, glad to help

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

I have ran into similar problems when publishing Geoprocessing scripts. Just because it published fine, does not mean that it will run fine.

When you publish the script, it (ESRI) will manipulate your python script and add its own interpretation of your variables / data locations / connection files. You have to look at the error messages to hunt down the actual script that it is running so you can either change stuff back or overwrite it with your original script.

For example, I had a connection file to a SQL database so it could GeoCode a View that I created. Even though during the publishing it made me add the connection file to the Server's Data Store, the new script doesn't even use the Data Store, and it copied the file and repointed my script to the ....\v101 directory. On top of that, it changed the connection file from a 'SQL Server' to an 'Application Server' and it doesn't even open in ArcCatalog.

Basically, just be aware that once you publish, it is not your original script anymore and you must start another round of trouble shooting.

Enjoy!,

Dave

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

Is it related to database user role and connection privileges.

Please check