Reporting data quality using Attribute rules and Python

An important responsibility in data stewardship is delivering metrics that inform stakeholders as to the quality of the data they consume. Many of the questions that data consumers may ask are easily answered when data validation workflows are implemented using Attribute rules. These include:

- Has the quality of the features I'm working with already been assessed?

- Are the features in my project area error-free?

- If not, how severe are the errors in my data, and will they impact my work?

- What type of errors are present in my data?

In this blog, we identify sources of data quality information that are created automatically when you use Attribute rules, along with ArcGIS capabilities that help increase awareness of data quality.

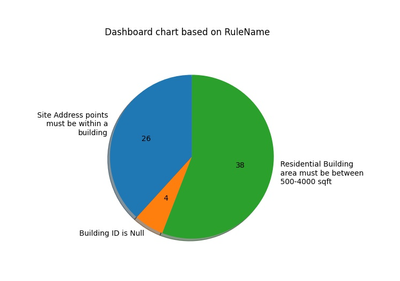

Dashboard charts

Attribute rules allow you to evaluate a feature’s quality against requirements implemented as a series of validation rules. Evaluation is done as needed: interactively using the Error Inspector in ArcGIS Pro, as a step in an automated workflow using the Evaluate Rules geoprocessing tool, or through the Evaluate REST operation. Error features are created when a feature fails to meet the criteria defined in a validation attribute rule.

In this example, ArcPy reads the error features to produce a dashboard chart that summarizes error information by a field. Commonly used fields include the error lifecycle phase or status, severity, and rule name. First, the script queries all of the error tables in the geodatabase for the provided field. Given a geodatabase path and a field name, the “make_combined_list” function returns a list of that field’s values from every error table:

Note: This script uses the Matplotlib Python library, which is available with ArcGIS Pro.

```python
import os
import arcpy

def make_combined_list(gdb, field):
    """Return the values of `field` from all four attribute rule error tables in `gdb`."""
    occurrences = []
    # Point, line, polygon, and object (table) errors are stored in separate tables
    error_tables = [
        "GDB_ValidationPointErrors",
        "GDB_ValidationLineErrors",
        "GDB_ValidationPolygonErrors",
        "GDB_ValidationObjectErrors",
    ]
    for table in error_tables:
        with arcpy.da.SearchCursor(os.path.join(gdb, table), [field]) as cursor:
            for row in cursor:
                occurrences.append(row[0])
    return occurrences
```

Once all the results are combined in a single list, we find the unique values and the number of times each one occurs. These values are then used to build and label the dashboard chart. The “get_unique_count” function takes two parameters: a list, and a Boolean indicating whether counts are required. It returns the list of unique values if the Boolean is False, or a list containing the count of each unique value if it is True:

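```python
def get_unique_count(values, is_count):
    """Return the unique values in `values` (is_count=False) or how often each occurs (is_count=True)."""
    unique_values = []
    for value in values:
        if value not in unique_values:
            unique_values.append(value)  # keep the first-seen order

    if is_count:
        # One count per unique value, in the same order as unique_values
        return [values.count(value) for value in unique_values]
    return unique_values
```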
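The “get_unique_count” function calls `list.count` once per unique value, so it rescans the whole list for every category. As an alternative (a swapped-in technique, not the approach used in the script above), `collections.Counter` tallies every value in a single pass. In this minimal sketch, `unique_values_and_counts` is a hypothetical name; it returns both lists at once, in the same first-seen order as “get_unique_count”, because `Counter` preserves insertion order in Python 3.7 and later.

```python
from collections import Counter

def unique_values_and_counts(values):
    """Return (unique_values, counts) as parallel lists, counting in one pass."""
    # Counter keeps keys in first-seen order (Python 3.7+),
    # so unique_values[i] always pairs with counts[i]
    tally = Counter(values)
    return list(tally.keys()), list(tally.values())
```

Because the two lists stay aligned, they can be passed straight to the charting step without calling the function twice.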

If the field is “Error status”, which uses a coded value domain, the codes must be replaced with their descriptions so the chart is easier to understand. To do this, we pass the list of unique values to the “replace” function, which returns a list containing the descriptions. This logic can be used with any field that has a coded value domain, such as the error lifecycle phase.

```python
# Descriptions for the codes of the coded value domain used by the error status field
STATUS_DESCRIPTIONS = {
    1: "Reviewed",
    2: "Resolved",
    3: "Mark As exception",
    4: "Acceptable",
    6: "Unacceptable",
    9: "Exception",
}

def replace(list_unique_values):
    """Replace status codes with their descriptions in place; unknown codes are left unchanged."""
    for i, value in enumerate(list_unique_values):
        list_unique_values[i] = STATUS_DESCRIPTIONS.get(value, value)
    return list_unique_values
```
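The “replace” function hard-codes the codes of a single domain. Because the same logic applies to any field with a coded value domain, a generic variant can take the code-to-description mapping as a parameter instead. This is a minimal sketch; `describe_codes` is a hypothetical name, and unlike “replace” it returns a new list rather than modifying its input.

```python
def describe_codes(values, coded_values):
    """Return a new list in which each domain code is replaced by its description.

    coded_values maps code -> description; values with no matching code are kept as-is.
    """
    return [coded_values.get(value, value) for value in values]
```

With ArcPy, one possible source for the mapping is the `codedValues` property of a domain returned by `arcpy.da.ListDomains(gdb)`. The name of the domain assigned to your error field (the `domain` property of the field objects returned by `arcpy.ListFields`) is something you would need to look up, so treat that wiring as an assumption.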

Once we have the unique field values and their counts, we pass them to Matplotlib to draw the chart.
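The charting step itself is not shown in the original script, so here is a minimal sketch assuming a simple bar chart is wanted. `draw_error_chart` is a hypothetical helper, and the chart type, color, and axis labels are illustrative choices rather than the ones used by the author. It selects Matplotlib’s non-interactive Agg backend so it also runs without a display (for example, in a scheduled script) and can optionally save the figure to an image file.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering; no display or GUI required
import matplotlib.pyplot as plt

def draw_error_chart(labels, counts, title, output_path=None):
    """Draw a bar chart of error counts per category and optionally save it."""
    fig, ax = plt.subplots(figsize=(8, 4))
    # Labels become categorical x positions 0, 1, 2, ...
    ax.bar([str(label) for label in labels], counts, color="tab:red")
    ax.set_title(title)
    ax.set_xlabel("Category")
    ax.set_ylabel("Number of errors")
    # Write each count just above its bar
    for x, count in enumerate(counts):
        ax.text(x, count, str(count), ha="center", va="bottom")
    fig.tight_layout()
    if output_path:
        fig.savefig(output_path)
    return fig, ax
```

In the workflow above, `labels` would be the unique values (passed through “replace” for coded value domain fields) and `counts` the matching counts from “get_unique_count”.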

Accuracy report of your data

The goal of this report is to increase awareness by providing a summary of your data’s accuracy that can be used by multiple stakeholders in your organization. In Attribute rule workflows, each feature’s status is maintained automatically and indicates whether the feature requires calculation or validation and whether it is in error.

In this example, error information is stored in the features themselves, and the script checks every feature class for the ValidationStatus field. When the field is found, the script calculates the feature class’s accuracy from the value of that field in each feature. Features that contain errors have a ValidationStatus of 1, 3, 5, or 7; features that require validation but do not contain errors have a ValidationStatus of 2 or 6. The percentage accuracy is ((1 - (total_errors / total_feature_count)) * 100), and the margin of error is the percentage of features that still require validation, since their quality has not yet been assessed. For example, in a feature class with 100 features where 70 contain errors and 30 require validation but contain no errors, the percentage accuracy is (1 - 70 / 100) * 100 = 30%, with a margin of error of 30%.
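The calculation can be checked without a geodatabase. This minimal sketch applies the same formulas to a plain list of ValidationStatus values (in the script below, these come from a `SearchCursor`). `accuracy_and_margin` is a hypothetical helper, and it raises an error for an empty list instead of dividing by zero.

```python
ERROR_CODES = {1, 3, 5, 7}          # ValidationStatus: feature contains an error
VALIDATION_REQUIRED_CODES = {2, 6}  # ValidationStatus: needs validation, no error

def accuracy_and_margin(statuses):
    """Return (percent accuracy, margin of error) for a list of ValidationStatus values."""
    total = len(statuses)
    if total == 0:
        raise ValueError("cannot compute accuracy for an empty feature class")
    errors = sum(1 for s in statuses if s in ERROR_CODES)
    pending = sum(1 for s in statuses if s in VALIDATION_REQUIRED_CODES)
    accuracy = (1 - errors / total) * 100
    margin = pending / total * 100
    return round(accuracy, 1), round(margin, 1)
```

For 100 features where 70 have status 1 and 30 have status 2, `accuracy_and_margin([1] * 70 + [2] * 30)` returns `(30.0, 30.0)`.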

Note: This script uses the Tabulate Python library to format the accuracy report. Tabulate is not installed by default with ArcGIS Pro.

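```python
import os
import arcpy

# ValidationStatus values for features that contain errors
ERROR_CODES = (1, 3, 5, 7)
# ValidationStatus values for features that require validation but have no errors
VALIDATION_REQUIRED_CODES = (2, 6)

def calculate_percentage_accuracy(gdb):
    fcname = []
    accuracy = []
    featurecount = []
    errorcount = []
    marginoferror = []

    arcpy.env.workspace = gdb
    # '' stands for the geodatabase root, so stand-alone feature classes are
    # included even when the geodatabase has no feature datasets
    datasets = [''] + (arcpy.ListDatasets(feature_type='feature') or [])

    for ds in datasets:
        for fc in arcpy.ListFeatureClasses(feature_dataset=ds) or []:
            fc_path = os.path.join(gdb, ds, fc)
            status_fields = [f.name for f in arcpy.ListFields(fc_path)
                             if f.name.upper() == "VALIDATIONSTATUS"]
            if not status_fields:
                continue  # no ValidationStatus field, nothing to report
            totalcount = int(arcpy.management.GetCount(fc_path).getOutput(0))
            if totalcount == 0:
                continue  # skip empty feature classes to avoid dividing by zero

            errors = 0   # ValidationStatus 1, 3, 5, or 7
            pending = 0  # ValidationStatus 2 or 6
            with arcpy.da.SearchCursor(fc_path, [status_fields[0]]) as cursor:
                for (status,) in cursor:
                    if status in ERROR_CODES:
                        errors += 1
                    elif status in VALIDATION_REQUIRED_CODES:
                        pending += 1

            fcname.append(fc)
            accuracy.append(round((1 - errors / totalcount) * 100, 1))
            featurecount.append(totalcount)
            errorcount.append(errors)
            marginoferror.append(round(pending / totalcount * 100, 1))

    return fcname, accuracy, featurecount, errorcount, marginoferror
```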
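The function returns parallel lists, and the Tabulate formatting step is not shown above. With Tabulate installed, `tabulate(rows, headers=headers)` produces the table. If you would rather not install it, this minimal sketch swaps in plain `str.ljust` padding from the standard library; `format_accuracy_report` and its column headings are hypothetical.

```python
def format_accuracy_report(fcname, accuracy, featurecount, errorcount, marginoferror):
    """Format parallel lists of per-feature-class results as a plain-text table."""
    headers = ["Feature class", "Accuracy (%)", "Features", "Errors", "Margin of error (%)"]
    rows = [[str(v) for v in row]
            for row in zip(fcname, accuracy, featurecount, errorcount, marginoferror)]
    # Each column is as wide as its longest header or cell
    widths = [max(len(cell) for cell in column) for column in zip(headers, *rows)]
    lines = ["  ".join(h.ljust(w) for h, w in zip(headers, widths)),
             "  ".join("-" * w for w in widths)]
    for row in rows:
        lines.append("  ".join(cell.ljust(w) for cell, w in zip(row, widths)))
    return "\n".join(lines)
```

For example, `print(format_accuracy_report(*calculate_percentage_accuracy(gdb)))` prints one row per feature class that has a ValidationStatus field.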

To learn more about Python in ArcGIS Pro, the Python libraries used in these scripts, and Attribute rules, follow the links below:

Python in ArcGIS Pro

https://pro.arcgis.com/en/pro-app/latest/arcpy/get-started/installing-python-for-arcgis-pro.htm

What is ArcPy?

https://pro.arcgis.com/en/pro-app/latest/arcpy/get-started/what-is-arcpy-.htm

Introduction to attribute rules

Evaluation of features using Reviewer rules

Manage attribute rule errors

Matplotlib python library

Tabulate python library
