Scheduling Web ETL with ArcGIS Data Interoperability for Enterprise

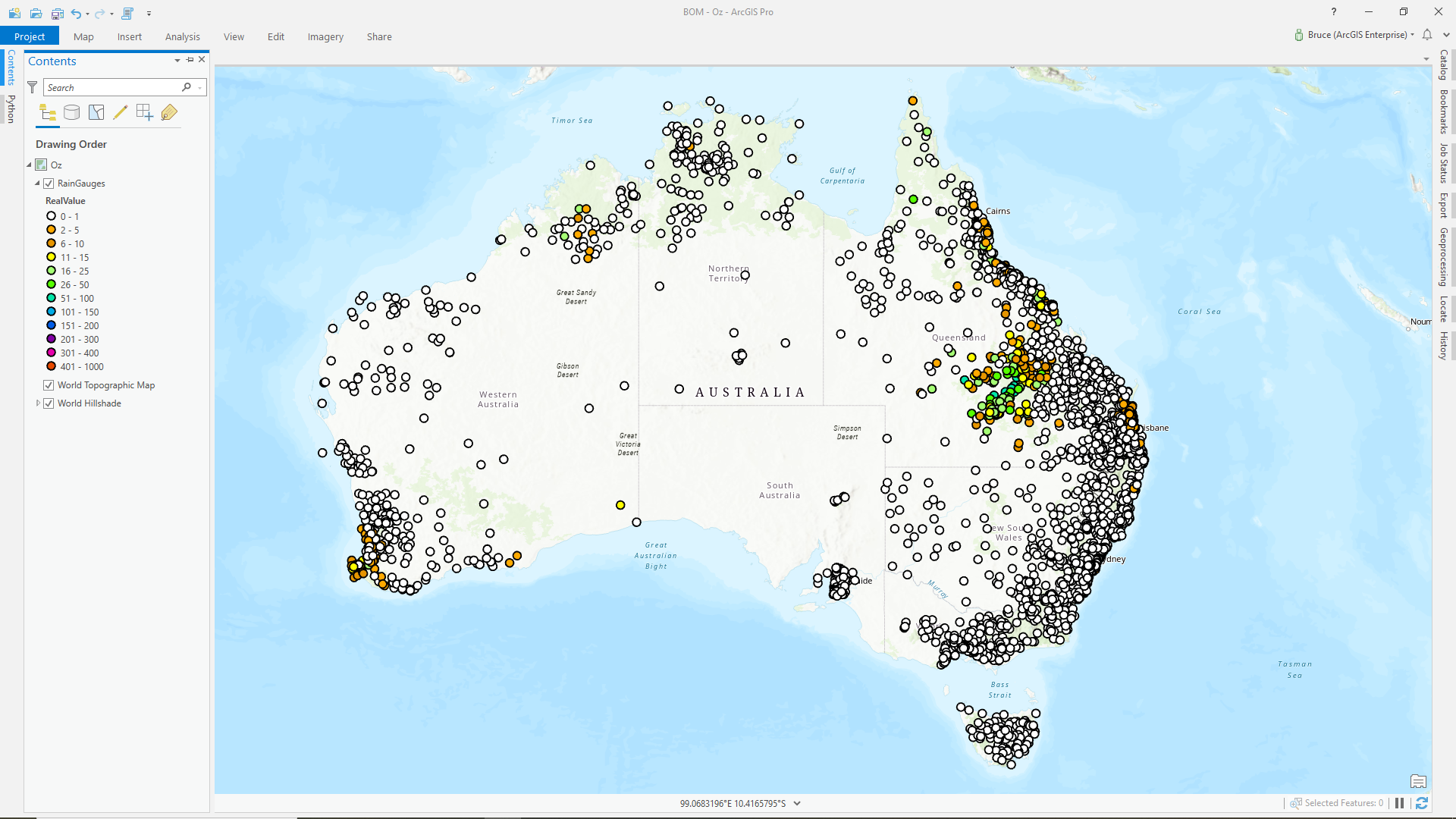

Here is where it rained today in Australia: a bit around Perth, a bunch in Queensland and, for the sharp-eyed, a little at Cape York to keep the prawns and crocs happy. The legend shows precipitation in mm.

The Australian Bureau of Meteorology publishes downloadable weather data; my scenario is I'm interested in republishing rain gauge observations to a hosted feature service, which is in the map above. After a little digging around I find the data is available via FTP with a schema described in this user guide. The data is refreshed daily. While I'm not redistributing the data in this blog I'll mention it is licensed under Creative Commons terms so you can implement my sample if you wish.

While the refresh rate is daily each file can contain observations spanning more than 24 hours and from multiple sensors at a site. Anyway, what I wanted was the daily observations pushed into a feature service in my portal; I could just as easily send the data to ArcGIS Online.

These periodic synchronizations from the web are everywhere in GIS. Data custodians make it easy to obtain data manually; I'm going to show you how simple it is to automate synchronizations with Data Interoperability. I usually describe Data Interoperability as Esri's 'no-code' app integration technology. Full disclosure: in this sample I did resort to some Python in the FME Workbenches I created, so I have to back off the no-code claim, but I can say it's low-code. You can see for yourself in the Workbenches.

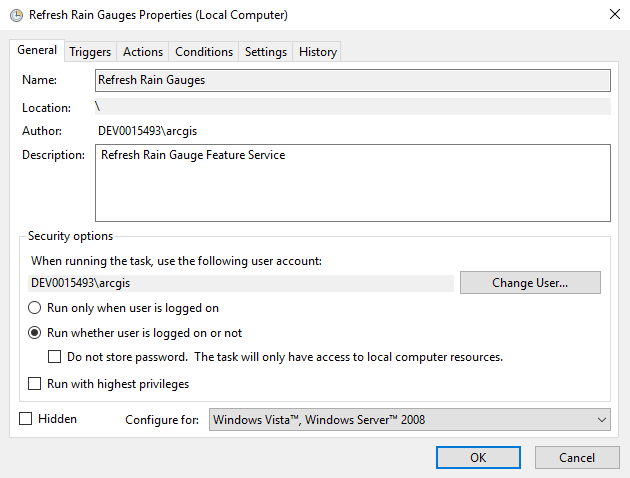

I started out thinking I would make the scheduled process a web tool and schedule it with Notebook Server. That might be the most fun to build, but I realized it just isn't called for in my use case. I fell back to a pattern I previously blogged about, namely using Windows Task Scheduler, but this time on a server. Why not use the desktop software approach? Well, to take advantage of a machine that likely has very high uptime and that I know can run scheduled jobs outside my normal working hours.

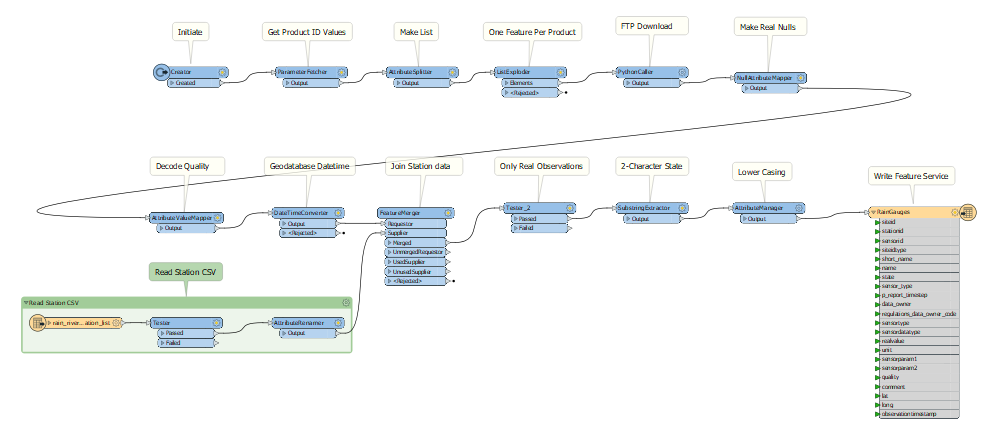

Here is the Workbench that does the job of downloading the BoM product files and sending features to my portal's hosted feature service:

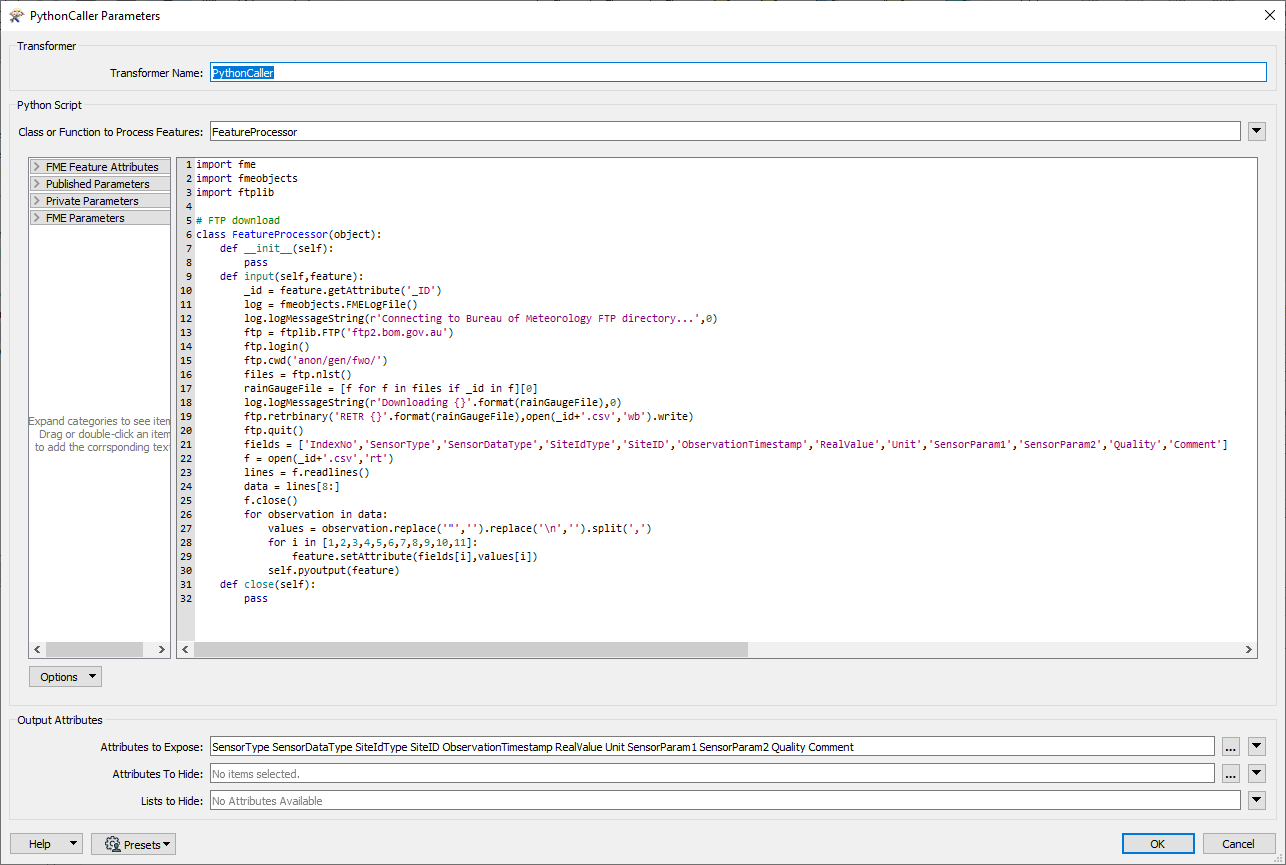

And I can't resist it: here is the Python, not too scary. It would be unnecessary if the filenames at the FTP site were stable, in which case I could have used an FTPCaller transformer, but they have datestamps as part of their names, so it was just easier to handle that with some Python. While I was downloading the data I also cleaned it up a little (removing enclosing double quotes and newline characters), then sent all observations out into the stream.
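For readers without the Workbench open, the general shape of that step can be sketched in plain Python. This is not the code from the Workbench, only a minimal illustration under stated assumptions: the host, directory, file name template and `YYYYMMDD` datestamp format below are hypothetical placeholders, and the real values come from the BoM user guide.

```python
import csv
from datetime import date
from ftplib import FTP

# Hypothetical placeholders, NOT the real BoM values.
FTP_HOST = "ftp.example.org"
FTP_DIR = "/observations"
NAME_TEMPLATE = "rain_{stamp}.csv"


def build_filename(day):
    """Return the date-stamped file name for one day (assumed YYYYMMDD stamp)."""
    return NAME_TEMPLATE.format(stamp=day.strftime("%Y%m%d"))


def clean_record(raw):
    """Drop newline characters and enclosing double quotes from one CSV line."""
    line = raw.replace("\r", "").replace("\n", "")
    # csv.reader removes the enclosing quotes and keeps quoted commas intact.
    return next(csv.reader([line]))


def download_records(day):
    """Fetch one day's file over anonymous FTP and return cleaned records."""
    lines = []
    with FTP(FTP_HOST) as ftp:
        ftp.login()  # anonymous login
        ftp.cwd(FTP_DIR)
        ftp.retrlines("RETR " + build_filename(day), lines.append)
    return [clean_record(line) for line in lines if line.strip()]
```

Calling `download_records(date.today())` would then return a list of field lists, one per observation, ready to be turned into features.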

As the data sources don't change I made all parameters private, which simplifies the command to schedule. All that I need to do is get the Workbench onto the server and make sure it runs. In the post download you'll find three FMW files:

MakeRainGauges.fmw

RefreshRainGauges.fmw

RefreshRainGauges - Server Copy.fmw

MakeRainGauges creates a geodatabase feature class I used in Pro to instantiate my hosted feature service. RefreshRainGauges is built from MakeRainGauges and differs only in that it writes to the feature service, with initial truncation. That's the Workbench I want to schedule. RefreshRainGauges - Server Copy differs from RefreshRainGauges only in its Python settings, to use Python 3.6+. I didn't use that name on the server; the file is renamed only so it could be included in the post download.

On my portal server there was a little setup (I have a single machine with everything on it; don't forget to install and license Data Interoperability!). RefreshRainGauges uses a web connection to my portal. In this blog I describe how to create a portal web connection. The connection has to be copied to the server for the arcgis user, which will run the scheduled process. The simplest way is method #2 in this article.

Logged onto the server as arcgis, I first created a desktop shortcut to "C:\Program Files\ESRI\Data Interoperability\Data Interoperability AO11\fmeworkbench.exe", started Workbench, then imported the web connection XML file and tested reading the feature service. I also copied RefreshRainGauges to a folder and edited it to adjust the Python environment to suit the server (the sample was built with Pro 2.7 Beta but the server is running Enterprise 10.8.1). Running the workspace interactively, the top of the log reveals the command to be scheduled:

Command-line to run this workspace:

"C:\Program Files\ESRI\Data Interoperability\Data Interoperability AO11\fme.exe" C:\Users\arcgis\Desktop\RefreshRainGauges.fmw
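If you would rather schedule a small script than the raw command, for example to record whether the run succeeded, a thin Python wrapper is one option. This is a sketch, not part of the sample: the two paths are copied from the log line above, the function names are my own, and it assumes the usual command-line convention that a non-zero exit code signals failure.

```python
import subprocess

# Paths copied from the log line above; adjust to your own install and folder.
FME_EXE = r"C:\Program Files\ESRI\Data Interoperability\Data Interoperability AO11\fme.exe"
WORKSPACE = r"C:\Users\arcgis\Desktop\RefreshRainGauges.fmw"


def build_command(fme_exe=FME_EXE, workspace=WORKSPACE):
    """Return the command as an argument list, so spaces in paths need no quoting."""
    return [fme_exe, workspace]


def run_workspace(command):
    """Run the command, echo its output, and return the process exit code."""
    completed = subprocess.run(command, capture_output=True, text=True)
    if completed.stdout:
        print(completed.stdout)
    if completed.returncode != 0:
        # Assumption: non-zero means the run failed.
        print("Run failed with exit code", completed.returncode)
    return completed.returncode
```

A scheduled script would call `run_workspace(build_command())` and could pass the result to `sys.exit` so that Task Scheduler records the outcome.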

The rest is simple: just create a basic task. The hardest part was figuring out what time to execute the command (I see data changing as late as 1 AM UTC, so I went with 6 PM local time on my server, which is in US West). Make sure the task will run if arcgis is not logged on, and note that the arcgis user will need batch job rights (or be an administrator, which I can do on my VM but you likely will not be allowed to do).
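The time arithmetic is easy to get wrong around daylight saving, so here is a small check of the conversion. It is a sketch that assumes "US West" means the `America/Los_Angeles` time zone and needs Python 3.9+ for `zoneinfo` (on Windows the `tzdata` package may also be required).

```python
from datetime import date, datetime, time, timezone
from zoneinfo import ZoneInfo

# Assumption: the server's "US West" zone is America/Los_Angeles.
SERVER_TZ = ZoneInfo("America/Los_Angeles")


def utc_to_server_local(day, utc_time):
    """Convert a UTC date and time to the server's local date and time."""
    moment = datetime.combine(day, utc_time, tzinfo=timezone.utc)
    return moment.astimezone(SERVER_TZ)


# 1 AM UTC in northern summer (daylight time) and winter (standard time).
summer = utc_to_server_local(date(2020, 7, 1), time(1, 0))
winter = utc_to_server_local(date(2020, 12, 1), time(1, 0))
print(summer.strftime("%Y-%m-%d %H:%M %Z"))  # 2020-06-30 18:00 PDT
print(winter.strftime("%Y-%m-%d %H:%M %Z"))  # 2020-11-30 17:00 PST
```

So 1 AM UTC falls at 5 PM or 6 PM local time on the previous calendar day, depending on the season, and a 6 PM local trigger is never earlier than the latest change observed.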

That's it, automated maintenance of data I can share to anyone! I finish with a screen shot of the processing log from last night's synchronization:
